Example Scenario

Let’s start with an example of a hospital that is using MLflow to train a model to predict the risk of sepsis in patients. Lab results, vital signs, and demographic data from patient medical records are employed to train the model. The hospital logs the following artifacts during the training process:

- The accuracy and loss metrics for the model.

- The parameters used to train the model.

- The model file itself.

- The hospital stores the artifacts in a local directory or Server called MLRuns.

After the model is trained, the hospital management wants to download the model file to a local/remote server so that it can be used to predict the risk of sepsis in real time.

An Overview of the MLflow Logging and Artifact Management Process

import mlflow

import pandas as pd_obj

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression as LR

from sklearn.metrics import accuracy_score as Acs, log_loss as LL

Load Patients Data

The code provided loads the generated synthetic patient data from CSV files into two Pandas DataFrames. The first DataFrame, patients_train_data, contains the training data. The second, patients_test_data, contains the test data.

The Pandas library is imported through pd_obj, and DataFrames are filled with CSV file contents using the read_csv() function. The function takes the file location and column names; all columns are used if the list of column names is empty.

The read_csv() function is used to load data of CSV files:

- synthetic_patient_data_train.csv: holds the training data.

- synthetic_patient_data_test.csv: contains the test data.

The read_csv() function produces two DataFrames:

- patients_train_data: for training data.

- patients_test_data: for test data.

Code:

patients_test_data = pd_obj.read_csv('synthetic_patient_data_test.csv')

Separate Features and Labels

Patients_Feature_Train and Patients_Train_Label are two empty DataFrames first created by the code. The SepsisLabel column is then deleted from the patients_train_data DataFrame using the drop() function.

The drop() function generates an updated DataFrame with a SepsisLabel label column containing anticipated values and features columns for forecasting labels.

The drop() function deletes the SepsisLabel column from the Patients_Feature_Test and Patients_Test_Label DataFrames, creating an updated DataFrame with missing columns. The training data set features and labels are stored in Patients_Feature_Train and Patients_Train_Label DataFrames.

Training data is ingested to improve the machine learning model. To evaluate how well the model works, test data is used.

Code:

Patients_Train_Label = patients_train_data['SepsisLabel']

Patients_Feature_Test = patients_test_data.drop(columns=['SepsisLabel'])

Patients_Test_Label = patients_test_data['SepsisLabel']

Set Tracking URI and Create Experiment

The code first sets the tracking URI, which is the address of the MLflow tracking server.

The experiment is named Sepsis Risk Prediction Experiment and it will be used to monitor the profess of the machine learning project.

The tracking URI for the current session is established using the mlflow.set_tracking_uri() method. A new experiment with the supplied name is created using the mlflow.set_experiment() function.

The artifacts and metrics for the machine learning project are stored in the tracking URI.

Code Snippet:

exp_name = "Sepsis Risk Prediction Experiment"

mlflow.set_tracking_uri(experiment_tracking_URI)

mlflow.set_experiment(exp_name)

Start an MLflow Run and Log Parameters.

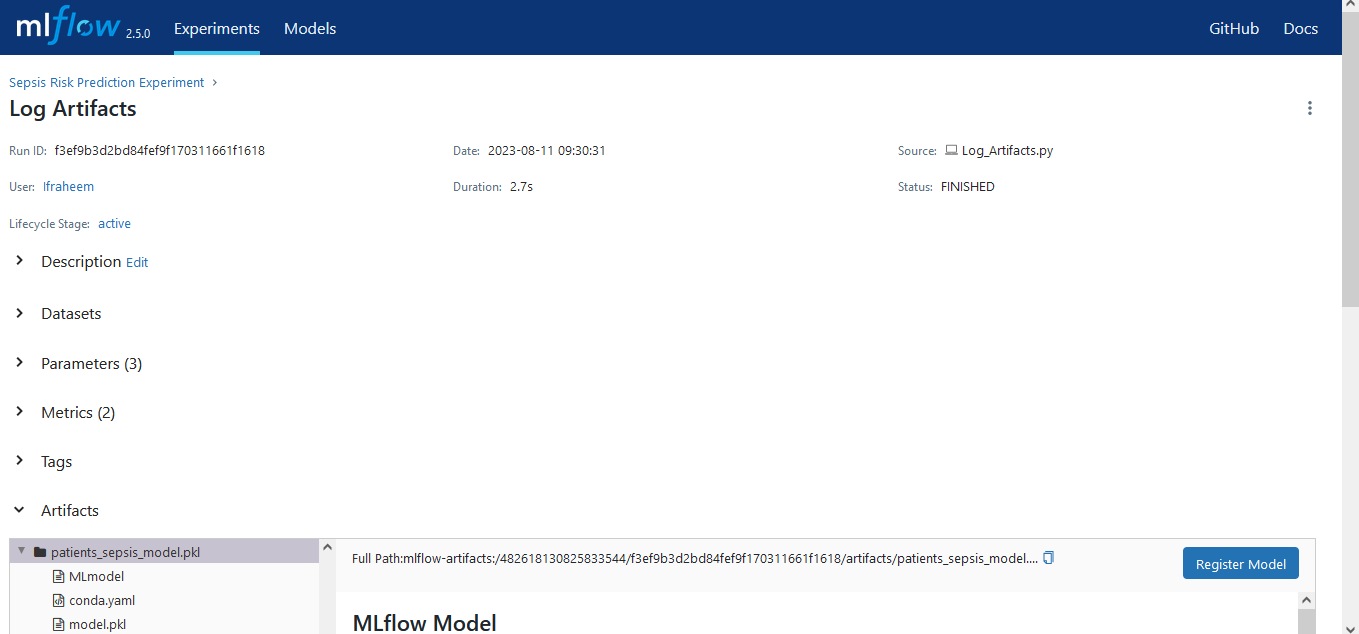

The code initiates an MLflow run called Log Artifacts to track progress and log parameters for training the model, stored in the log_parameters dictionary. The mlflow.log_params() function logs the parameters to the current run.

The parameters that are logged in this example are:

- Model: The type of model that is being trained.

- Version: The version of the model.

- Penalty: The type of penalty that is being used for the model.

Code Snippet:

log_parameters = {

"Model": "Logistic Regression",

"Version": 1.0,

"Penalty": "l2"

}

mlflow.log_params(log_parameters)

Train Model and Evaluate Performance

The LR() function from the scikit-learn library is used in the code first to train a simple logistic regression model. The log_parameters dictionary contains the model’s parameters.

The training data set is then used to fit the model. The training data set and the labels are sent as inputs to the fit() algorithm.

On the test set, the code then makes predictions. The test data set is passed to the predict() function, which produces the predictions for the labels.

The code computes the accuracy and log loss metrics for the model. The fraction of correctly predicted events is known as accuracy. The model’s ability to predict the labels is gauged by the log loss. The MLflow tracking server stores the accuracy and log loss metrics. This enables us to monitor the machine learning model’s effectiveness and evaluate it against other models.

Code:

patients_model.fit(Patients_Feature_Train, Patients_Train_Label)

Patients_Test_pred = patients_model.predict(Patients_Feature_Test)

model_accuracy = Acs(Patients_Test_Label, Patients_Test_pred)

model_logloss = LL(Patients_Test_Label, Patients_Test_pred)

Log Metrics and Artifacts

The accuracy and log loss data are first logged to the MLflow tracking server0.

Data is consumed to train the machine learning model. The performance of the model is evaluated using the test data. The method then uploads the artifact of the trained model to the MLflow tracking server. The model object and the file name are the only arguments given to the mlflow.sklearn.log_model() function. The model object is saved to the designated file with the given name, which is then recorded by the MLflow tracking server.

The code logs the test data artifact to the MLflow tracking server. The test results are saved to a CSV file using the function patients_test_data.to_csv(). The MLflow tracking server receives the CSV file via the mlflow.log_artifact() function.

The MLflow tracking server houses all metrics, artifacts for the training model, and test data.

Code:

mlflow.log_metric("Logloss", model_logloss)

patient_model_file = "patients_sepsis_model.pkl"

mlflow.sklearn.log_model(patients_model, patient_model_file)

patients_test_data.to_csv("patients_test_data.csv", index=False)

mlflow.log_artifact("patients_test_data.csv")

Code Execution

Run the code:

Conclusion

Tracking the development of the machine learning (ML) project needs logging artifacts. It enables us to monitor the model’s performance over time and spot any potential errors. It is also simpler to replicate the experiment’s results when artifacts are logged. It’s crucial to manage artifacts if we want to share the model with other team members. It makes it straightforward for us to share the model and guarantees that everyone is working with the same version of it.