This blog demonstrates how to implement scaling and load balancing in Docker Compose.

How to Scale Up Services in Docker Compose?

Scaling in Docker Compose means running several replicas (containers) of the same service. Compose runs all of these replicas on the same Docker host. To scale a service in Docker Compose, follow the instructions below.

Step 1: Generate Dockerfile

Create a Dockerfile that containerizes the Go program “main1.go”. Paste the following code into the file:

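```dockerfile
# Go base image (the original omits the FROM line; this tag is an assumption)
FROM golang:1.22

WORKDIR /go/src/app

COPY main1.go .

# Build the single source file directly so that no go.mod file is required
RUN go build -o webserver main1.go

# EXPOSE takes only the container port; host port mappings belong in Compose
EXPOSE 8080

ENTRYPOINT ["./webserver"]
```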

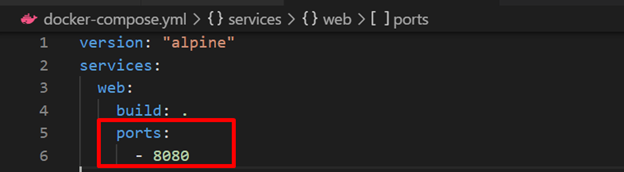
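The post does not show the contents of “main1.go”. As a minimal sketch of what it might contain, the following hypothetical Go web server listens on port 8080 (the port used throughout this blog) and replies with the container's hostname, which Docker sets to the short container ID by default. Returning the hostname is our own assumption; it simply makes the individual replicas easy to tell apart later.

```go
// main1.go: a hypothetical stand-in for the program containerized above.
package main

import (
	"fmt"
	"log"
	"net/http"
	"os"
)

// greeting builds the response body. Including the hostname (by default the
// short container ID) lets you see which replica answered a request.
func greeting(hostname string) string {
	return fmt.Sprintf("Hello from container %s\n", hostname)
}

// handler answers every path with the greeting for this container.
func handler(w http.ResponseWriter, r *http.Request) {
	host, err := os.Hostname()
	if err != nil {
		host = "unknown"
	}
	fmt.Fprint(w, greeting(host))
}

func main() {
	http.HandleFunc("/", handler)
	// Port 8080 is the container port published by Compose and proxied by nginx.
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```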

Step 2: Generate Compose File

Next, create a file named “docker-compose.yml” and paste the following configuration:

```yaml
services:
  web:
    build: .
    ports:
      - 8080
```

Here:

- “services” defines the services that make up the Compose application. Here, we configure a single service named “web”.

- “build” sets the build context. The value “.” tells Compose to build the “web” image from the Dockerfile above, located in the current directory.

- “ports” publishes container ports on the host. Here, we use “8080” instead of “8080:8080”. With “8080:8080”, every replica would try to bind host port 8080; only one container can hold that port, so the other replicas fail to start with a port-allocation error. With only the container port “8080”, Docker publishes each replica on a free, randomly assigned host port.

Alternatively, you can specify a host port range such as “80-83:8080”. Docker then assigns each replica a free host port from that range, so the range must contain at least as many ports as there are replicas.

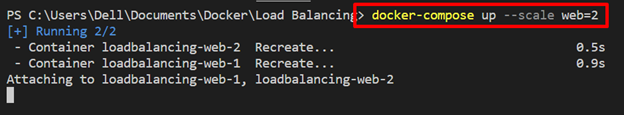
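To see why a fixed host port breaks scaling, the following Go sketch reproduces the conflict on a local machine rather than in Docker. It binds a port, tries to bind the same port a second time (which fails, much like a second replica publishing “8080:8080”), and then opens two listeners on port 0, for which the operating system picks a different free port each time, similar to publishing only the container port. This is an analogy for illustration, not a description of how Docker allocates ports internally.

```go
package main

import (
	"fmt"
	"net"
)

// listen opens a TCP listener on the loopback interface. Port 0 asks the
// operating system for any free (ephemeral) port.
func listen(port int) (net.Listener, error) {
	return net.Listen("tcp", fmt.Sprintf("127.0.0.1:%d", port))
}

// portOf reports the port number a listener is bound to.
func portOf(l net.Listener) int {
	return l.Addr().(*net.TCPAddr).Port
}

func main() {
	// Like "8080:8080": the first "replica" takes a port (chosen freely here
	// only to avoid clashing with anything already using 8080)...
	first, err := listen(0)
	if err != nil {
		panic(err)
	}
	defer first.Close()
	fixed := portOf(first)

	// ...and a second "replica" asking for that same port is rejected.
	if second, err := listen(fixed); err != nil {
		fmt.Printf("replica 2 on fixed port %d failed: %v\n", fixed, err)
	} else {
		second.Close()
	}

	// Like "- 8080": each "replica" gets its own free port from the OS.
	var replicas []net.Listener
	for i := 1; i <= 2; i++ {
		l, err := listen(0)
		if err != nil {
			panic(err)
		}
		replicas = append(replicas, l)
		fmt.Printf("replica %d on ephemeral port %d\n", i, portOf(l))
	}
	for _, l := range replicas {
		l.Close()
	}
}
```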

Step 3: Fire up the Containers

Next, start the containers with the “docker-compose up” command. To replicate the “web” service, add the “--scale” option with a “<service-name>=<number of replicas>” value. For example, “docker-compose up --scale web=2” starts two replicas of the “web” service, as shown below:

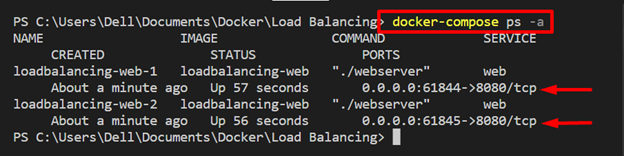

Step 4: List Compose Containers

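List the Compose containers with “docker-compose ps” to verify that the scaled replicas are running:

The output shows two replicas of the “web” service, published on localhost ports “61844” and “61845” respectively. Because these host ports are assigned randomly, the numbers on your machine will differ: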

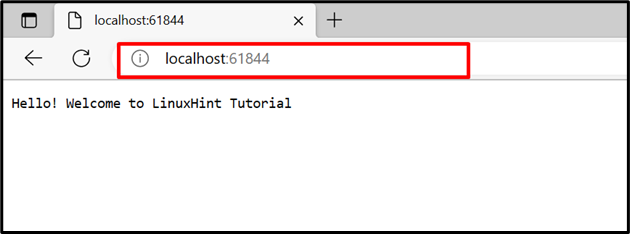

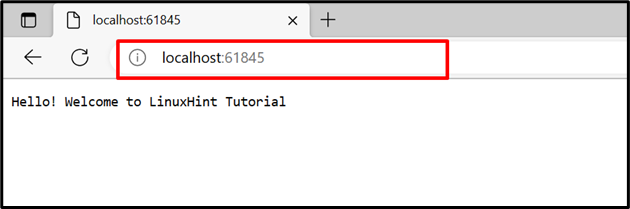

To confirm, open each assigned port on localhost (for example, “http://localhost:61844”) in a browser and check that the service responds.

As you can see, the “web” service is running successfully on both assigned ports.

How to Implement Load Balancing in Docker Compose?

A load balancer distributes incoming client traffic across multiple containers so that no single container has to handle every request. This improves the reliability and availability of applications and services. To choose a container for each request, the load balancer uses a routing strategy such as round robin, which sends requests to the containers in turn.

To load balance the Compose services, follow the steps below.

Step 1: Create “nginx.conf” File

Create an “nginx.conf” file and paste the code below into it. The configuration works as follows:

- The “upstream” block named “all” defines a group of backend servers. You can list as many servers as you need; here, it contains the “web” service listening on port 8080. Docker's internal DNS resolves the service name “web” to the IP address of every running replica, and nginx adds each of those addresses to the group when it starts. By default, nginx distributes requests across the group in round-robin order.

- The “server” block makes the nginx load balancer listen on port 8080, and “proxy_pass http://all/” forwards every request to the “all” upstream group:

```nginx
events {
    worker_connections 1000;
}

http {
    upstream all {
        server web:8080;
    }

    server {
        listen 8080;

        location / {
            proxy_pass http://all/;
        }
    }
}
```

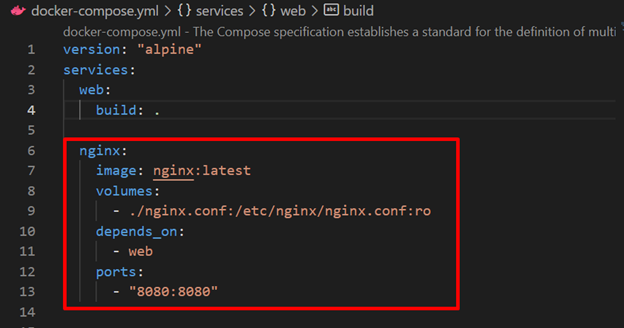

Step 2: Configure the Nginx Load Balancer Service in the “docker-compose.yml” File

Next, add the “nginx” load balancer service to the “docker-compose.yml” file using the following keys:

- “image” specifies the image for the “nginx” service, here the official “nginx:latest” image.

- “volumes” bind-mounts the local “nginx.conf” file read-only into the container at “/etc/nginx/nginx.conf”.

- “depends_on” makes Compose start the “web” service before the “nginx” service.

- “ports” publishes the load balancer's listening port 8080 on host port 8080. The “web” service no longer publishes any ports of its own, because all traffic now reaches it through nginx.

```yaml
services:
  web:
    build: .

  nginx:
    image: nginx:latest
    volumes:
      - ./nginx.conf:/etc/nginx/nginx.conf:ro
    depends_on:
      - web
    ports:
      - "8080:8080"
```

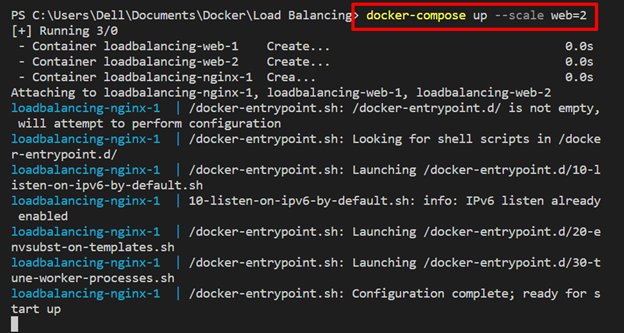

Step 3: Run Compose Containers

Now, start the Compose services with the “--scale” option to run multiple replicas of the “web” service, for example “docker-compose up --scale web=2”.

The “nginx” load balancer now distributes incoming traffic across these “web” replicas:

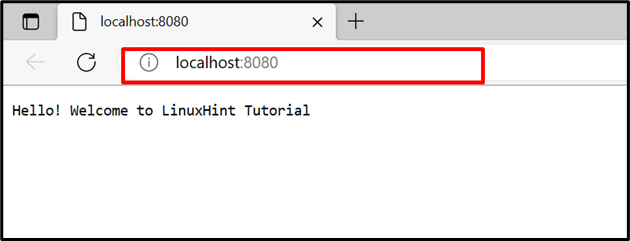

Open the listening port of the “nginx” service (“http://localhost:8080”) and verify that the load balancer serves both “web” containers through this single port. Refresh the page to switch to the second container, and refresh it again to switch back to the first one.

That is all about scaling and load balancing services with Docker Compose.

Conclusion

Load balancing and scaling are techniques for increasing the availability and reliability of an application. Scaling with Docker Compose creates replicas of a specified service, and a load balancer distributes incoming traffic across those containers. In this blog, we used “nginx” as the load balancer. This blog has demonstrated how to scale and load balance services in Docker Compose.