This article provides a demonstration of implementing AutoProcessor and AutoModel.

How to Implement AutoProcessor and AutoModel in Transformers?

AutoProcessor and AutoModel both belong to the Auto Classes of the Transformers library, alongside other utilities such as AutoConfig and AutoTokenizer.

Hugging Face is one of the most important platforms contributing to NLP progress and development. Given a checkpoint name or path, the Auto Classes in its Transformers library infer and load the correct architecture automatically. This article discusses two of the Auto Classes: AutoModel and AutoProcessor.

How to Implement AutoProcessor in Transformers?

Hugging Face hosts a large collection of pretrained models on its Model Hub. The public models on this hub are ready to use. Some of them combine the functionality and information of more than one kind of model for better accuracy and results.

The term “multimodal” refers to models trained on more than one modality, such as text and images, which can give better outputs than training on a single modality. To load the preprocessing for these multimodal models, we can use AutoProcessor, which selects the right processor for a given checkpoint.

Here is a practical demonstration of the functions of Auto Class:

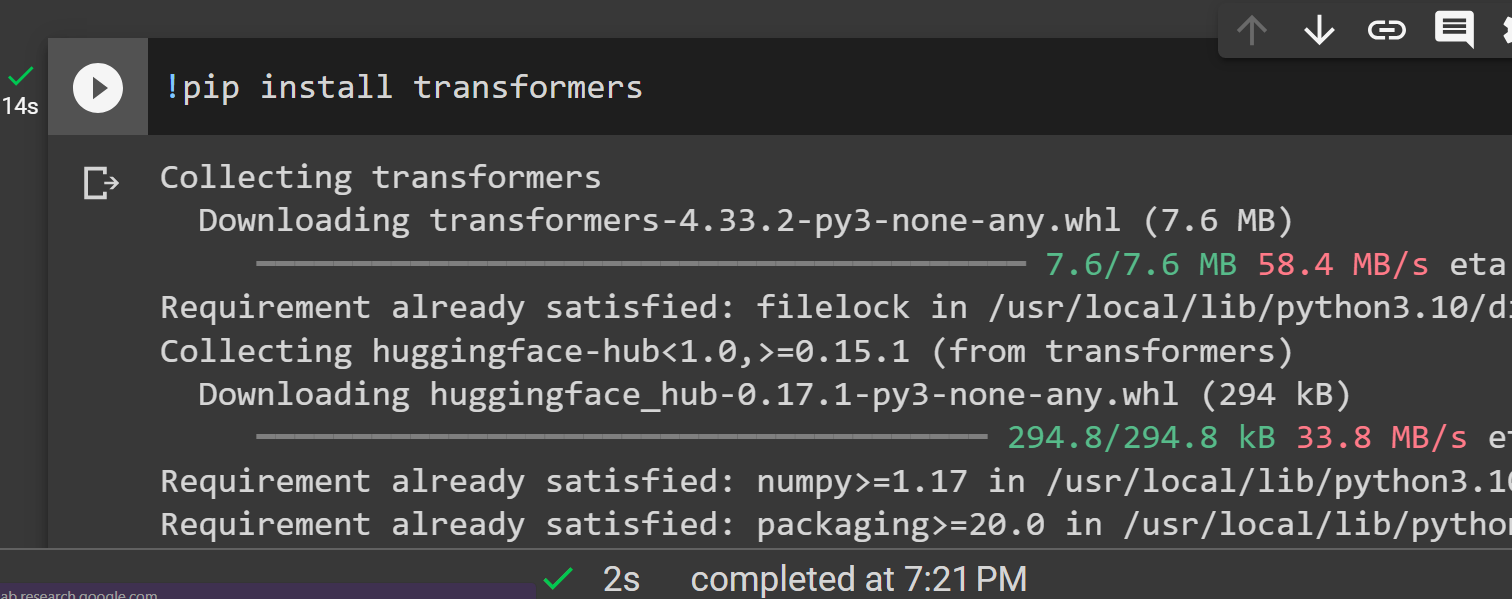

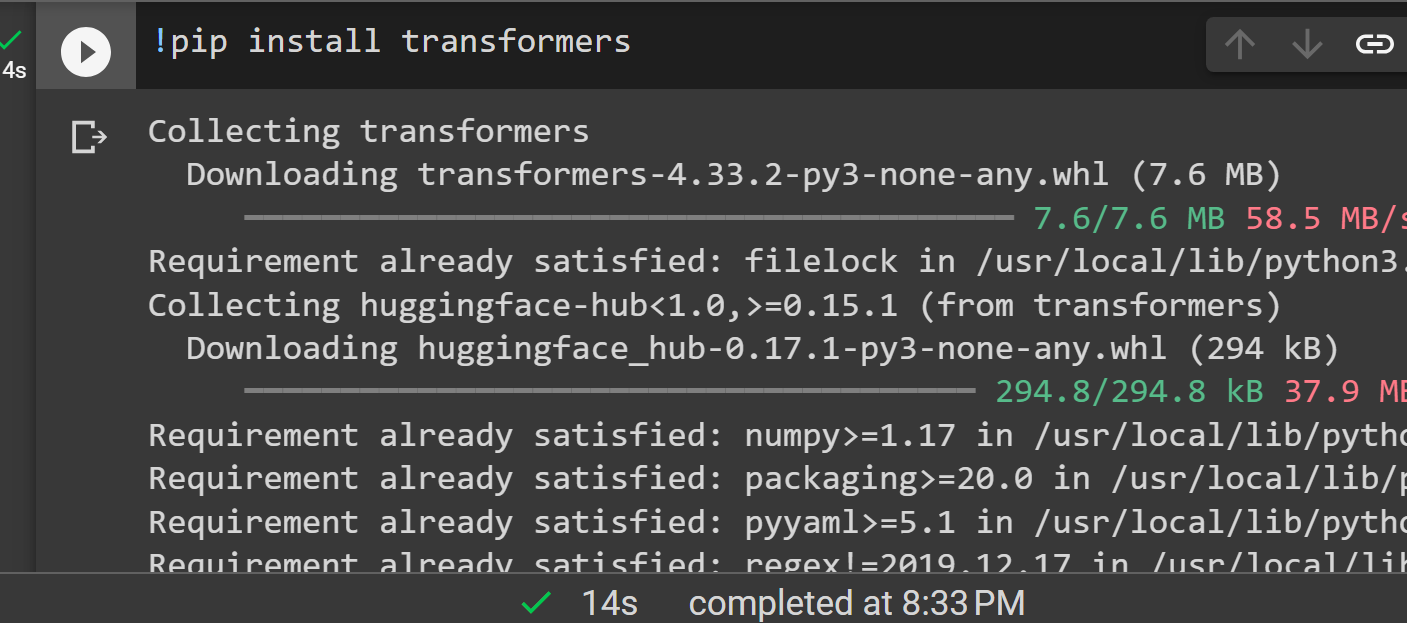

Step 1: Install Transformers

To install Transformers, provide the following command:

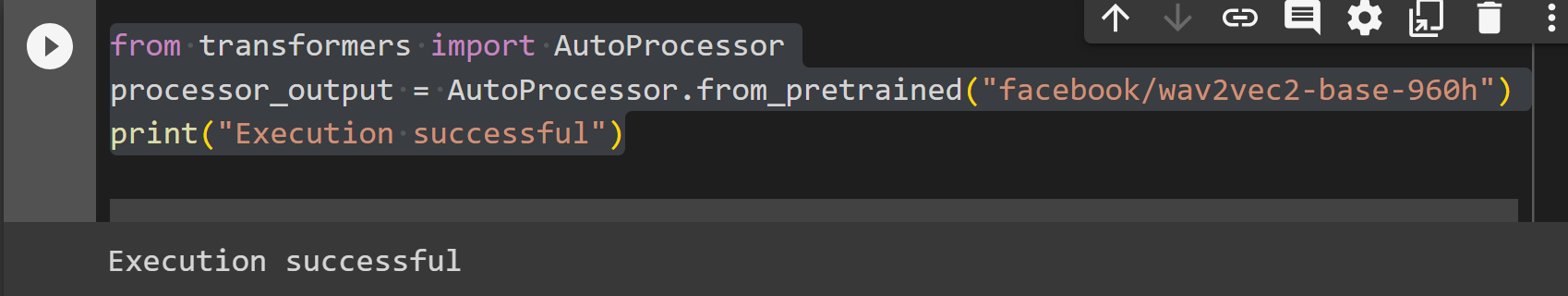
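The install command above appears only as a screenshot; a typical installation uses `pip install transformers`. As a minimal sketch, the following checks from Python whether the package can be imported. The helper name `is_installed` is hypothetical and not part of the library.

```python
import importlib.util


def is_installed(package_name: str) -> bool:
    """Return True if the named top-level package can be found for import."""
    return importlib.util.find_spec(package_name) is not None


# Report whether the library is ready to use in this environment.
if is_installed("transformers"):
    print("transformers is available")
else:
    print("transformers is missing; install it with: pip install transformers")
```

In a notebook such as Google Colab, the same pip command is usually run in a cell prefixed with `!`.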

Step 2: Import AutoProcessor

The next step is to import the AutoProcessor class from the “transformers” library and load the processor for a checkpoint. For this purpose, provide the following code:

from transformers import AutoProcessor

processor_output = AutoProcessor.from_pretrained("facebook/wav2vec2-base-960h")

print("Execution successful")
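Loading the processor is only the first half of the job; it is normally used to turn raw data into model inputs. The sketch below shows one possible way to do that for the audio checkpoint used above. The helper names `make_silence` and `preprocess_audio` are hypothetical, the waveform is a placeholder of zeros rather than real speech, and the 16 kHz sampling rate is an assumption based on the checkpoint's usual configuration.

```python
from typing import List


def make_silence(seconds: float, sampling_rate: int = 16_000) -> List[float]:
    """Build a placeholder waveform of zeros (a stand-in for real audio)."""
    return [0.0] * int(seconds * sampling_rate)


def preprocess_audio(
    waveform: List[float],
    checkpoint: str = "facebook/wav2vec2-base-960h",
    sampling_rate: int = 16_000,
):
    """Load the processor for `checkpoint` and convert a waveform to model inputs."""
    # Imported lazily so make_silence works even without the library installed.
    from transformers import AutoProcessor

    # Downloads the processor files on first use, then reads them from the cache.
    processor = AutoProcessor.from_pretrained(checkpoint)
    # The result is dict-like; for this checkpoint the prepared audio
    # is stored under the "input_values" key.
    return processor(waveform, sampling_rate=sampling_rate, return_tensors="np")
```

Calling `preprocess_audio(make_silence(1.0))` requires network access the first time, since the processor configuration is fetched from the Hub.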

How to Implement AutoModel in Transformers?

Another member of the Auto Classes is AutoModel. AutoModel and its task-specific variants allow users to load a pretrained model for a specific task. These classes are available for both PyTorch and TensorFlow; the TensorFlow versions carry a “TF” prefix.

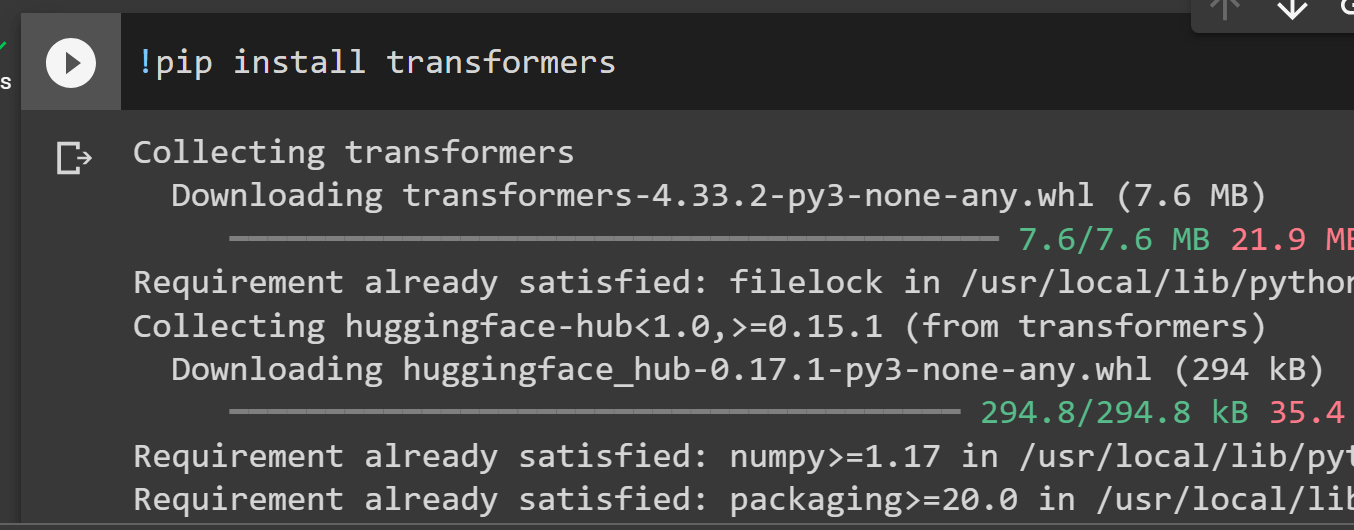

Method 1: Using AutoModel in PyTorch

Here is a step-by-step tutorial for utilizing this function:

The first step is to install the “transformers” library. To install it, provide the following command:

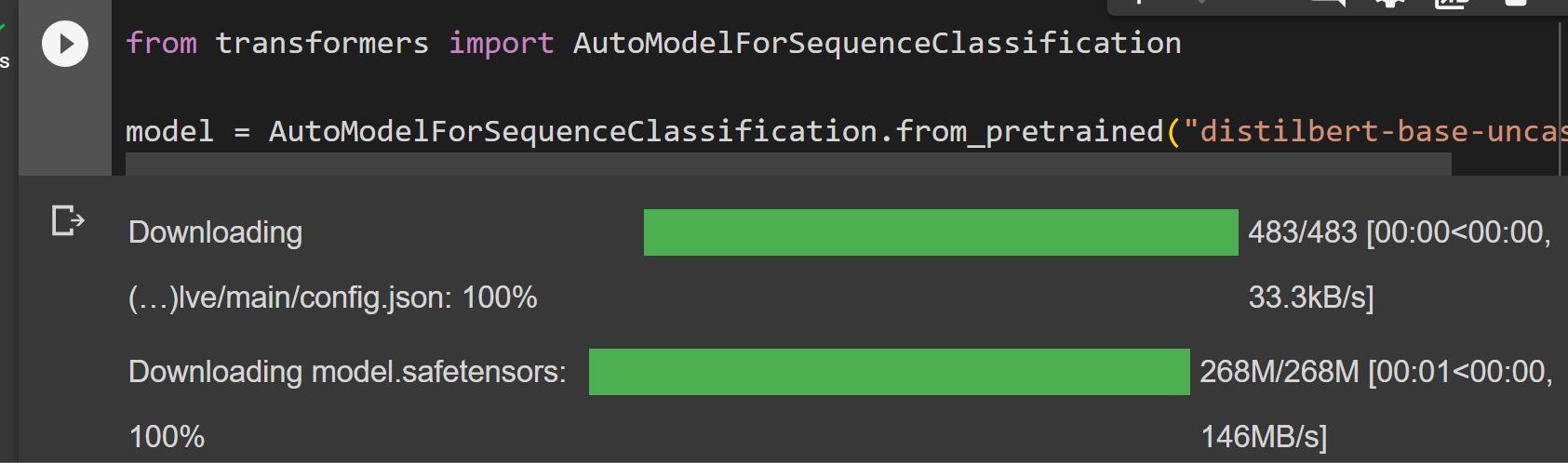

In this code, we are loading a model for sequence classification. Therefore, we import the “AutoModelForSequenceClassification” class:

from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained("distilbert-base-uncased")
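To show what happens after the model is loaded, here is a minimal sketch of running one sentence through it in PyTorch. It assumes `torch` is installed; the helper names `top_label` and `classify` are hypothetical. Note that "distilbert-base-uncased" is a base checkpoint, so its classification head is newly initialized and the predicted label is not meaningful until the model is fine-tuned.

```python
from typing import Dict, Sequence


def top_label(logits: Sequence[float], id2label: Dict[int, str]) -> str:
    """Return the label whose logit is largest."""
    best_index = max(range(len(logits)), key=lambda i: logits[i])
    return id2label[best_index]


def classify(text: str, checkpoint: str = "distilbert-base-uncased") -> str:
    """Tokenize `text`, run the classifier, and return the top label name."""
    # Imported lazily so top_label works without torch or transformers installed.
    import torch
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForSequenceClassification.from_pretrained(checkpoint)

    inputs = tokenizer(text, return_tensors="pt")  # PyTorch tensors
    with torch.no_grad():  # inference only, no gradients needed
        logits = model(**inputs).logits  # shape: (1, num_labels)

    return top_label(logits[0].tolist(), model.config.id2label)
```

Calling `classify("This guide was helpful.")` downloads the checkpoint on first use. For real predictions, a checkpoint fine-tuned for classification would be passed as `checkpoint` instead.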

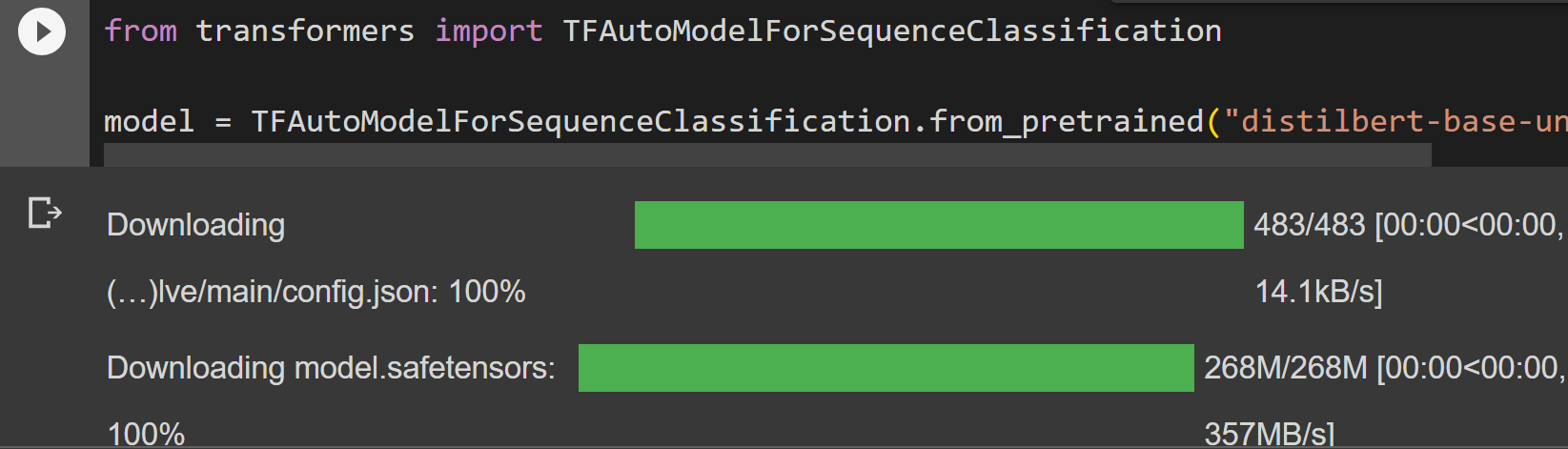

Method 2: Using AutoModel in TensorFlow

Here is a step-by-step tutorial for utilizing this function in TensorFlow:

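First, install the “transformers” library in the same way as in Method 1. TensorFlow itself must also be installed for the TensorFlow classes to load.

For demonstration purposes, we are using a sequence classification model. In TensorFlow, we import the “TFAutoModelForSequenceClassification” class from transformers:

from transformers import TFAutoModelForSequenceClassification

model = TFAutoModelForSequenceClassification.from_pretrained("distilbert-base-uncased")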
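The TensorFlow model can be used in much the same way as the PyTorch one. The sketch below converts the model's logits into class probabilities. It assumes TensorFlow is installed and that the installed Transformers release still ships the TensorFlow classes; the helper names `softmax` and `class_probabilities_tf` are hypothetical. As with the PyTorch example, the classification head of this base checkpoint is untrained, so the probabilities only illustrate the mechanics.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw logits into probabilities that sum to 1."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def class_probabilities_tf(
    text: str, checkpoint: str = "distilbert-base-uncased"
) -> List[float]:
    """Tokenize `text`, run the TensorFlow classifier, and return probabilities."""
    # Imported lazily so softmax works without TensorFlow or transformers installed.
    from transformers import AutoTokenizer, TFAutoModelForSequenceClassification

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = TFAutoModelForSequenceClassification.from_pretrained(checkpoint)

    inputs = tokenizer(text, return_tensors="tf")  # TensorFlow tensors
    logits = model(**inputs).logits  # shape: (1, num_labels)

    return softmax(logits[0].numpy().tolist())
```

The only changes from the PyTorch version are the “TF” prefix on the model class and `return_tensors="tf"` in the tokenizer call.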

Conclusion

To implement AutoProcessor and AutoModel in Transformers, install the “transformers” library and then import the “AutoProcessor” and “AutoModel” classes from it. The Auto Classes are generic classes that select the correct architecture for a given checkpoint and include many other useful utilities. This article is a step-by-step guide to implementing AutoProcessor and AutoModel in Transformers.