Quick Outline

This post will demonstrate the following:

How to Implement the Self-Ask With Search Chain

- Installing Frameworks

- Building Environment

- Importing Libraries

- Building Language Models

- Using LangChain Expression Language

- Configuring Agent Executor

- Running the Agent

- Using Self-Ask Agent

How to Implement the Self-Ask With Search Chain?

Self-ask is a prompting technique in which the model breaks a complex question into simpler follow-up questions, answers each one, and then combines those intermediate answers into a final response. In the self-ask with search chain, each follow-up question is answered by a search tool rather than by the model alone, so the final answer is grounded in retrieved results. No additional training is involved; the behavior comes from the prompt and the agent loop.

To implement self-ask with search in LangChain, go through the following guide:

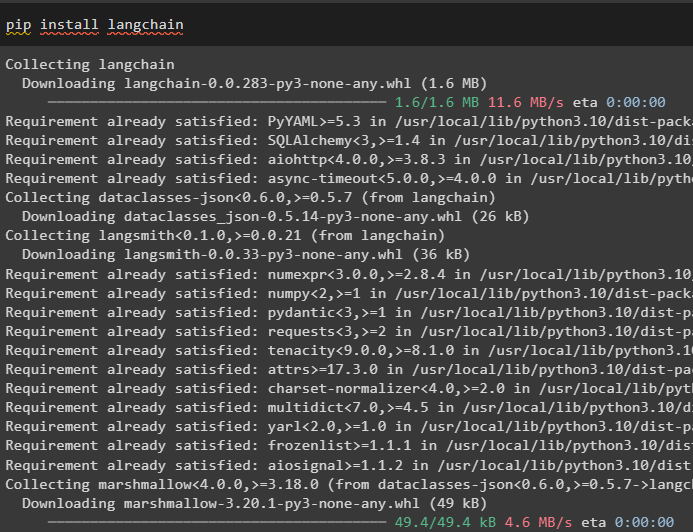

Step 1: Installing Frameworks

First, install LangChain and its dependencies with pip (pip install langchain):

After installing LangChain, install the google-search-results package (pip install google-search-results), which provides the SerpAPI client used to fetch Google search results:
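Once the install commands for this step have run, a quick check can confirm that the modules are importable. The sketch below is a minimal example; the helper name missing_modules is hypothetical, and the import names are assumptions (google-search-results is imported as serpapi, and the OpenAI wrapper additionally relies on the openai package, which this step does not list).

```python
import importlib.util


def missing_modules(module_names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]


# Assumed import names: google-search-results is imported as "serpapi";
# "openai" is needed by the OpenAI wrapper but is not installed in this step.
required = ["langchain", "openai", "serpapi"]
missing = missing_modules(required)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are importable.")
```

If anything is reported as missing, installing it with pip before continuing avoids import errors in the later steps.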

Step 2: Building Environment

Once the modules and frameworks are installed, set up the environment for OpenAI and SerpAPI using the API keys from their respective accounts. Import the os and getpass libraries so the keys can be entered at a hidden prompt and stored as environment variables:

import os

import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")

os.environ["SERPAPI_API_KEY"] = getpass.getpass("Serpapi API Key:")
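When the notebook is re-run, prompting for keys that are already set gets tedious. One possible variation, sketched below, only asks when the variable is empty. The helper name ensure_env_key and the DEMO_API_KEY variable are hypothetical and exist only for illustration; the prompt function is passed in so the example runs without user input.

```python
import os


def ensure_env_key(name, prompt_fn):
    """Set os.environ[name] from prompt_fn only when it is not already set."""
    if not os.environ.get(name):
        os.environ[name] = prompt_fn(f"{name}: ")
    return os.environ[name]


# Demo with a stand-in prompt function (hypothetical variable name and value).
ensure_env_key("DEMO_API_KEY", lambda prompt: "demo-value")
print("DEMO_API_KEY is set:", bool(os.environ["DEMO_API_KEY"]))
```

In the tutorial's setting, the call would look like ensure_env_key("OPENAI_API_KEY", getpass.getpass), and likewise for SERPAPI_API_KEY.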

Step 3: Importing Libraries

After setting up the environment, import the required classes and helpers from LangChain: the OpenAI model wrapper, the SerpAPI utility, the agent helpers, and the prompt hub:

from langchain.llms import OpenAI

from langchain.utilities import SerpAPIWrapper

from langchain.agents.output_parsers import SelfAskOutputParser

from langchain.agents.format_scratchpad import format_log_to_str

from langchain import hub

from langchain.agents import initialize_agent, Tool

from langchain.agents import AgentType

Step 4: Building Language Models

The OpenAI() class configures the language model, and SerpAPIWrapper() configures the search utility. The search is then wrapped in a Tool so the agent can call it. The self-ask agent expects a single tool named "Intermediate Answer", so keep that name as-is:

llm = OpenAI(temperature=0)  # assumed setting: temperature=0 for more deterministic output

search = SerpAPIWrapper()

tools = [

    Tool(

        name="Intermediate Answer",

        func=search.run,

        description="useful for when you need to ask with search",

    )

]
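The tool's func is simply a callable that takes a query string and returns a string. To experiment with the rest of the pipeline without spending SerpAPI calls, one option is a stand-in with the same shape. The sketch below is an assumption for offline testing only: fake_search and CANNED_ANSWERS are hypothetical names, and the canned replies are hand-written rather than real search results.

```python
# Hypothetical canned answers, used only for offline experimentation.
CANNED_ANSWERS = {
    "who is dominic thiem": "Dominic Thiem is an Austrian tennis player.",
    "what is the hometown of dominic thiem": "Wiener Neustadt, Austria",
}


def fake_search(query: str) -> str:
    """Mimic the string-in, string-out shape of a search tool function."""
    key = query.strip().rstrip("?").lower()
    return CANNED_ANSWERS.get(key, "No good search result found")


print(fake_search("What is the hometown of Dominic Thiem?"))
```

Passing func=fake_search instead of func=search.run in the Tool definition would swap the stand-in in, while the tool name stays "Intermediate Answer".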

Step 5: Using LangChain Expression Language

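Start configuring the agent with the LangChain Expression Language (LCEL) by loading the self-ask prompt into the prompt variable, for example with hub.pull("hwchase17/self-ask-with-search"):

Define another variable, llm_with_stop, that binds a stop sequence to the model so that generation halts before the model writes its own intermediate answer, for example llm.bind(stop=["\nIntermediate answer:"]):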
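The reason for the stop sequence is that, left alone, the model tends to continue past its follow-up question and invent the intermediate answer itself instead of waiting for the search tool. The sketch below imitates that cut-off in plain Python to show the effect; truncate_at_stop is a hypothetical helper, and the real truncation is performed by the model API rather than by code like this.

```python
def truncate_at_stop(text, stop_sequences):
    """Cut text at the earliest occurrence of any stop sequence."""
    cut = len(text)
    for stop in stop_sequences:
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


# A made-up raw completion in which the model starts answering its own question.
raw = (
    "Yes.\nFollow up: Who is Dominic Thiem?"
    "\nIntermediate answer: (text the model would have invented)"
)
print(truncate_at_stop(raw, ["\nIntermediate answer:"]))
```

Everything from the stop sequence onward is dropped, so the reply ends at the follow-up question and the search tool supplies the intermediate answer.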

Now, assemble the agent as an LCEL pipeline. Python lambda functions (small anonymous functions) pull the user input and the formatted intermediate steps out of the incoming dictionary, and the result is piped through the prompt, the model with the stop sequence, and SelfAskOutputParser():

agent = {

    "input": lambda x: x["input"],

    "agent_scratchpad": lambda x: format_log_to_str(

        x['intermediate_steps'],

        observation_prefix="\nIntermediate answer: ",

        llm_prefix="",

    ),

} | prompt | llm_with_stop | SelfAskOutputParser()
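To make the agent_scratchpad entry less abstract, the sketch below shows roughly what the formatted scratchpad text looks like after one search step. It is a simplified stand-in that reflects my understanding of the behavior, not the library's source: format_steps and the Action tuple are hypothetical, and the observation text is made up.

```python
from collections import namedtuple

# Hypothetical stand-in for the agent action object; only the .log field matters here.
Action = namedtuple("Action", ["log"])


def format_steps(intermediate_steps, observation_prefix, llm_prefix):
    """Join each model log with the tool observation that followed it."""
    scratchpad = ""
    for action, observation in intermediate_steps:
        scratchpad += action.log
        scratchpad += f"\n{observation_prefix}{observation}\n{llm_prefix}"
    return scratchpad


steps = [
    (Action(log="Yes.\nFollow up: Who is Dominic Thiem?"), "An Austrian tennis player."),
]
print(format_steps(steps, observation_prefix="\nIntermediate answer: ", llm_prefix=""))
```

Each step contributes the model's follow-up question and then an "Intermediate answer:" line with the search result, which is the transcript the prompt feeds back to the model on the next turn.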

Step 6: Configuring Agent Executor

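Before testing the agent, import the AgentExecutor class from langchain.agents (from langchain.agents import AgentExecutor); it runs the loop that calls the agent and its tools:

Define the agent_executor variable by calling AgentExecutor() with the agent and tools configured earlier as its arguments, for example AgentExecutor(agent=agent, tools=tools, verbose=True):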

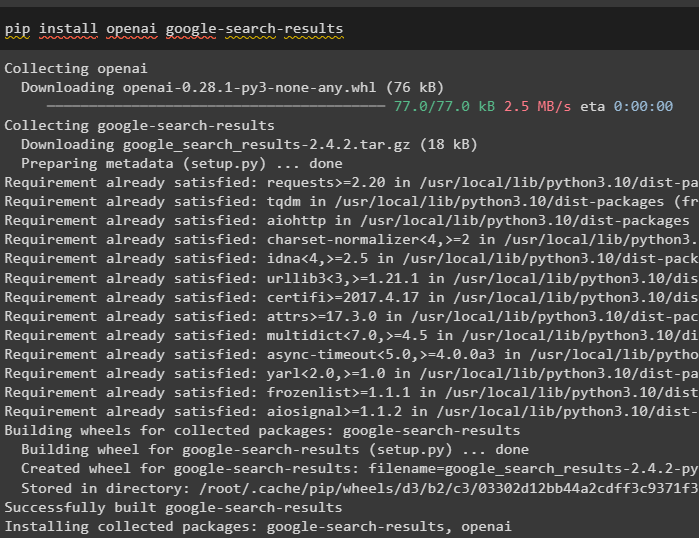
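Conceptually, the executor repeats a simple cycle: ask the model, parse its reply, run the search tool for a follow-up question, and stop once a final answer appears. The sketch below imitates that cycle with scripted stand-ins so it runs without API keys. All names in it (parse_step, run_self_ask, scripted_model, scripted_search) are hypothetical, and it is a simplified illustration rather than how AgentExecutor is actually implemented.

```python
FOLLOW_UP = "Follow up:"
FINAL = "So the final answer is:"


def parse_step(text):
    """Return ("final", answer) or ("follow_up", question) from one model reply."""
    if FINAL in text:
        return "final", text.split(FINAL)[-1].strip()
    last_line = text.strip().split("\n")[-1]
    if FOLLOW_UP in last_line:
        return "follow_up", last_line.split(FOLLOW_UP)[-1].strip()
    raise ValueError(f"Could not parse model output: {text!r}")


def run_self_ask(question, model, search, max_steps=5):
    """Alternate between the model and the search tool until a final answer appears."""
    scratchpad = ""
    for _ in range(max_steps):
        reply = model(question, scratchpad)
        kind, value = parse_step(reply)
        if kind == "final":
            return value
        observation = search(value)
        scratchpad += f"{reply}\nIntermediate answer: {observation}\n"
    raise RuntimeError("No final answer within max_steps")


def scripted_model(question, scratchpad):
    # Hypothetical model: asks one follow-up, then answers from the scratchpad.
    if "Intermediate answer:" not in scratchpad:
        return "Yes.\nFollow up: What is the hometown of Dominic Thiem?"
    return "So the final answer is: Wiener Neustadt, Austria"


def scripted_search(query):
    # Hypothetical search tool returning a fixed result.
    return "Wiener Neustadt, Austria"


print(run_self_ask("What is the hometown of Dominic Thiem?", scripted_model, scripted_search))
```

The max_steps cap is a design choice in this sketch to keep a confused model from looping forever.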

Step 7: Running the Agent

Once the agent executor is configured, test it by passing a question under the "input" key, for example agent_executor.invoke({"input": "<your question>"}):

Running the above code returns the name of the US Open champion in the output, i.e. Dominic Thiem:

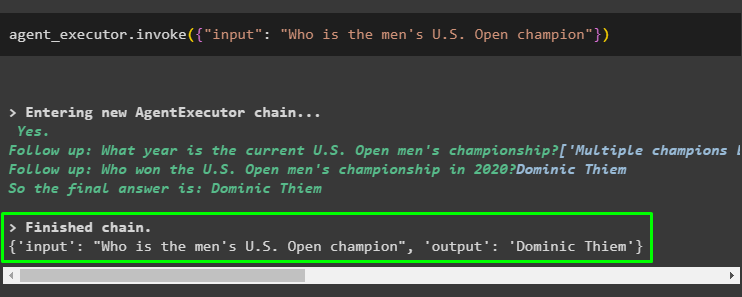

Step 8: Using Self-Ask Agent

After getting the response from the LCEL agent, build the same kind of agent with initialize_agent() and the SELF_ASK_WITH_SEARCH agent type, then pass the query to its run() method:

self_ask_with_search = initialize_agent(

    tools, llm, agent=AgentType.SELF_ASK_WITH_SEARCH, verbose=True

)

self_ask_with_search.run(

    "What is the hometown of Dominic Thiem the US Open World Champion"

)

As the verbose output shows, the self-ask agent breaks the original question into follow-up questions and answers each one with the search tool. Once it has gathered the intermediate answers, it composes the final answer. The questions self-asked by the agent are:

- Who is Dominic Thiem?

- What is the hometown of Dominic Thiem?

After getting the answers to these questions, the agent generates the answer to the original question, which is “Wiener Neustadt, Austria”.

That’s all about implementing self-ask with search using the LangChain framework.

Conclusion

To implement self-ask with search in LangChain, install the required modules, including google-search-results for fetching search results. After that, set up the environment using the API keys from the OpenAI and SerpAPI accounts. Then configure the language model and the "Intermediate Answer" search tool, build the agent with LCEL, and run it through AgentExecutor(), or use initialize_agent() with the SELF_ASK_WITH_SEARCH agent type to get the same behavior.