This blog will illustrate how to expose and deploy multiple container applications on the same port using load balancing.

How to Expose Multiple Container Applications on the Same Port With Load Balancing?

Load balancing, usually implemented with a reverse proxy, is a technique to distribute incoming traffic across different containers on a server. The load balancer can use different routing algorithms, such as round robin, which sends the first request to the first container, the next request to the second container, then switches back to the first container, and so on. This can increase the application’s availability, capacity, and reliability.
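To make the round robin idea concrete, here is a minimal sketch in Go. It is only an illustration of the selection order, not how Nginx implements it; the “roundRobin” type and “pick” method are hypothetical names, and the backend addresses simply reuse the service names that appear later in this post.

```go
package main

import "fmt"

// roundRobin cycles through a fixed list of backend addresses.
// Hypothetical type for illustration; it is not safe for concurrent use.
type roundRobin struct {
	backends []string
	next     int
}

// pick returns the next backend in order and advances the cursor,
// wrapping around to the first backend after the last one.
func (r *roundRobin) pick() string {
	b := r.backends[r.next]
	r.next = (r.next + 1) % len(r.backends)
	return b
}

func main() {
	// Backend addresses reuse the service names from this post.
	lb := &roundRobin{backends: []string{"web:8080", "web1:8080"}}
	for i := 1; i <= 4; i++ {
		fmt.Printf("request %d -> %s\n", i, lb.pick())
	}
}
```

With two backends, requests 1 and 3 go to “web:8080” and requests 2 and 4 go to “web1:8080”.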

For the illustration, follow the steps below.

Step 1: Create Dockerfile

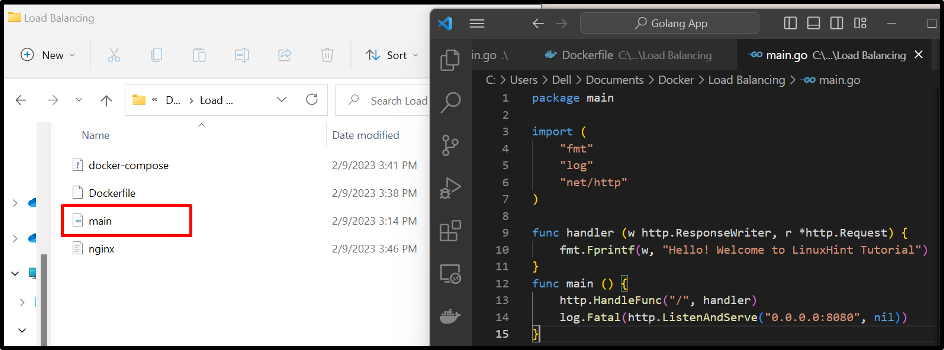

First, create a Dockerfile to containerize the application. For instance, we have defined the instructions to containerize the “main.go” app:

    # Assumed base image; the Go version is not specified in this post
    FROM golang:1.8
    WORKDIR /go/src/app
    COPY main.go .
    RUN go build -o webserver .
    ENTRYPOINT ["./webserver"]

Here, we have two different “main.go” programs in two different directories. In our scenario, the compose file builds the service for the first program directly from this Dockerfile.

The second program also has the same Dockerfile in its directory. Using this file, we have built a new Docker image, “go1-img”, for example by running “docker build -t go1-img .” in the second program's directory. This image will be used to configure the second service in the compose file. To learn more about creating or building an image, you can go through our associated article.
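The “main.go” source itself is not listed in this post. As a rough sketch only, such a program could look like the following. The handler, the message text, and the use of the container host name are all assumptions made for illustration; the only detail taken from this post is that the server listens on port 8080.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"os"
)

// greeting builds the response body. Including the host name makes it
// easy to see which container answered a request. (Hypothetical helper.)
func greeting(host string) string {
	return fmt.Sprintf("Hello from container %s\n", host)
}

// handler replies to every request with the greeting for this host.
func handler(w http.ResponseWriter, r *http.Request) {
	host, err := os.Hostname()
	if err != nil {
		host = "unknown"
	}
	fmt.Fprint(w, greeting(host))
}

func main() {
	http.HandleFunc("/", handler)
	// Port 8080 matches the upstream entries used in nginx.conf in this post.
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

Inside a container, the host name defaults to the container ID, so each replica would return a slightly different message.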

Step 2: Create Compose File

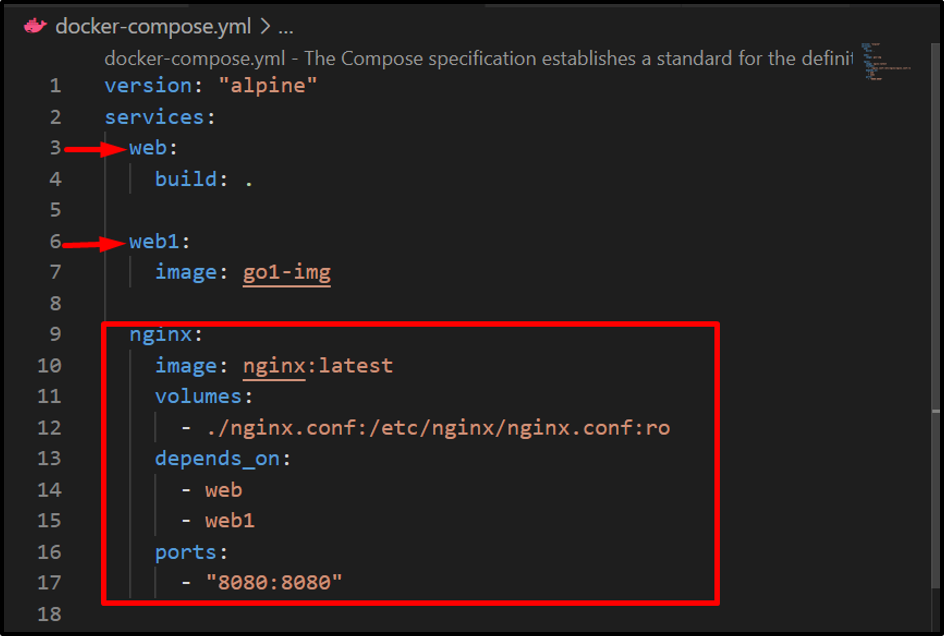

Next, create a compose file named “docker-compose.yml” that contains the following instructions:

- “services” configures three different services: “web”, “web1”, and “nginx”. The “web” service will execute the first program, the “web1” service will execute the second program, and “nginx” will run as the load balancer that distributes traffic to the different containers.

- “web” will use the Dockerfile to containerize the service. However, the “web1” service will use the image “go1-img” to containerize the second program.

- “volumes” key mounts the “nginx.conf” file into the nginx container as read-only so that Nginx can read the upstream configuration.

- “depends_on” key specifies that the “nginx” service depends on the “web” and “web1” services, so those services are started first.

- “ports” key publishes port 8080 of the nginx load balancer on the host. All upstream services are reached through this single port:

    services:
      web:
        build: .
      web1:
        image: go1-img
      nginx:
        image: nginx:latest
        volumes:
          - ./nginx.conf:/etc/nginx/nginx.conf:ro
        depends_on:
          - web
          - web1
        ports:
          - 8080:8080

Step 3: Create “nginx.conf” File

After that, create the “nginx.conf” file. In it, define the upstream group “all” that lists the services, set the listening port of the load balancer, and use “proxy_pass http://all/” to forward incoming requests to the upstream services:

    events {
        worker_connections 1000;
    }

    http {
        upstream all {
            server web:8080;
            server web1:8080;
        }

        server {
            listen 8080;

            location / {
                proxy_pass http://all/;
            }
        }
    }

Step 4: Fire Up the Containers

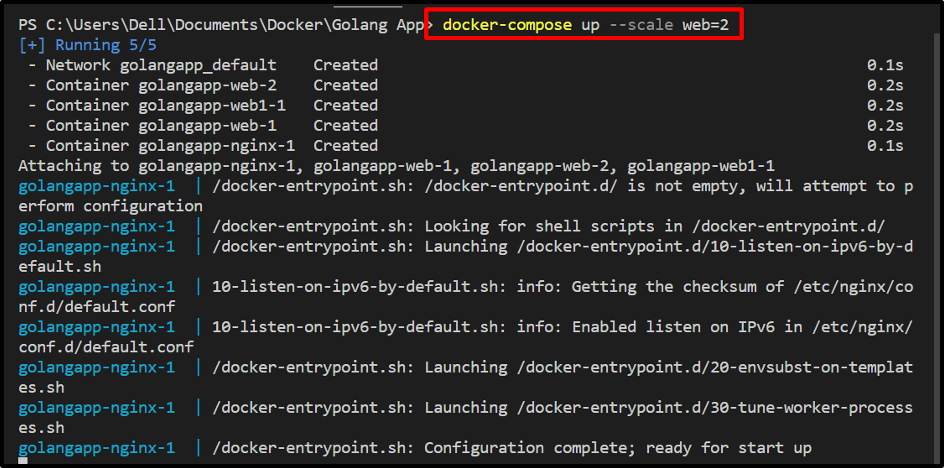

Execute the “docker-compose up” command to fire up the services in separate containers. Here, the “--scale” option is used to run two replicas of the first service, “web”:

    docker-compose up --scale web=2

For the verification, navigate to the published port of the “nginx” service container, for example “http://localhost:8080” on the machine running Docker, and check whether it returns responses from the specified services.

If repeated requests are answered by the different services, multiple containers or services are successfully running behind the same port.
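The same check can also be scripted. The following is a small sketch of a Go client that sends a few requests to the load balancer and counts the distinct responses. The address “http://localhost:8080/”, the request count, and the “tally” helper are assumptions for illustration; the result is only informative if each service returns a distinguishable response.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"strings"
)

// tally counts how many times each distinct response body was seen.
// Hypothetical helper; surrounding whitespace is ignored.
func tally(bodies []string) map[string]int {
	counts := make(map[string]int)
	for _, b := range bodies {
		counts[strings.TrimSpace(b)]++
	}
	return counts
}

func main() {
	// Assumed address: the nginx port published on the local machine.
	const url = "http://localhost:8080/"

	var bodies []string
	for i := 0; i < 6; i++ {
		resp, err := http.Get(url)
		if err != nil {
			fmt.Println("request failed:", err)
			return
		}
		body, err := io.ReadAll(resp.Body)
		resp.Body.Close()
		if err != nil {
			fmt.Println("read failed:", err)
			return
		}
		bodies = append(bodies, string(body))
	}

	// Print each distinct response and how often it was returned.
	for body, n := range tally(bodies) {
		fmt.Printf("%d x %q\n", n, body)
	}
}
```

If more than one distinct response is printed, the requests were served by more than one container.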

Conclusion

To expose multiple container applications on the same port using a load balancer/reverse proxy, first, containerize the applications. Then, add the application services and an “nginx” load balancing service to the compose file, and create an “nginx.conf” file that defines the upstream services, the listening port, and the proxy that forwards requests to the upstream group. Finally, start the services with “docker-compose up”. This blog has demonstrated how to expose and run multiple containers or services on the same port.