The recent shift to hybrid and flexible work has driven wider adoption of tools that let data science teams, specialists, and enthusiasts collaborate remotely.

The open-source community also plays a growing role in the advancement of AI. No single company, not even the “tech giants”, will be able to “solve AI” alone; sharing information and resources is the way forward.

Hugging Face meets this need by providing a community “Hub”, a central location where everyone can share and explore models and datasets. With the aim of democratizing AI for all, Hugging Face hopes to host the largest collection of models and datasets.

The Datasets library makes it easy to share and access datasets for audio, computer vision, and natural language processing (NLP) applications.

With just one line of code, you can load a dataset, and then use the library’s efficient data processing methods to quickly prepare it for training deep learning models. Backed by the Apache Arrow format, the library processes large datasets with zero-copy reads, which avoids memory constraints and keeps processing fast. In addition, the integration with the Hugging Face Hub (https://huggingface.co/datasets) simplifies loading datasets and sharing them with the wider machine learning community.

Find your dataset now in the Hugging Face Hub (https://huggingface.co/datasets) and use the live viewer to see it up close.

Hugging Face Dataset Download

Finding high-quality datasets that are accessible and reproducible can be a challenge. One of the main goals of Hugging Face Datasets is to provide an easy way to load datasets of any format and type. The easiest way to get started is to search for an existing dataset on the Hugging Face Hub, a community-driven collection of datasets for NLP, computer vision, and audio applications, and then use Hugging Face Datasets to download and build it.

We use the rotten_tomatoes (https://huggingface.co/datasets/rotten_tomatoes) and MinDS-14 (https://huggingface.co/datasets/PolyAI/minds14) datasets, but feel free to load any dataset. Head over to the hub right now to choose a dataset for your project!

Before you go to the trouble of downloading a dataset, it can be useful to quickly learn some background information. The DatasetInfo (https://huggingface.co/docs/datasets/v2.13.1/en/package_reference/main_classes#datasets.DatasetInfo) object contains information about a dataset, including details such as the dataset description, features, and size.

Install Hugging Face

First, we create a virtual environment. To create one in Linux using Python’s built-in venv module, follow these steps:

1. Launch the terminal on your Linux system by either accessing it through the applications menu or using the Ctrl + Alt + T keyboard shortcut.

2. If the venv module is not available on your Linux distribution, install it with your package manager. On Debian and Ubuntu, for example, it is provided by the python3-venv package:

sudo apt install python3-venv

3. Once venv is available, navigate to the directory where you wish to create the virtual environment. Then, create a virtual environment named “myenv” (you can use any name you like) by running the following command:

python3 -m venv myenv

4. Activate the virtual environment by executing the following command:

source myenv/bin/activate

5. Upon running this command, you will notice that “(myenv)” is added to your terminal prompt which indicates that the virtual environment is now active.

6. With the virtual environment activated, you can use pip to install the Python packages and work on your project within this isolated environment. Any package you install or libraries that you utilize will be specific to this virtual environment and will not interfere with your system-wide Python installation.

7. To deactivate the virtual environment once you finish working within it, run the following command:

deactivate

The (myenv) indicator will disappear from your terminal prompt, which indicates that you have returned to your system’s default Python environment.
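The same kind of environment can also be created from Python itself, because venv is part of the standard library. The following is a minimal sketch rather than a replacement for the terminal steps above; the helper name create_env is hypothetical, and with_pip=False is chosen only to keep the example fast.

```python
import tempfile
import venv
from pathlib import Path


def create_env(env_dir: Path) -> Path:
    """Create a virtual environment at env_dir and return the path of its config file."""
    # with_pip=False skips bootstrapping pip, which keeps this sketch quick;
    # pass with_pip=True if the environment should include pip.
    venv.create(env_dir, with_pip=False)
    return env_dir / "pyvenv.cfg"


if __name__ == "__main__":
    # Build a throwaway environment in a temporary directory.
    with tempfile.TemporaryDirectory() as tmp:
        cfg = create_env(Path(tmp) / "myenv")
        print("environment created:", cfg.is_file())
```

Every environment created this way contains a pyvenv.cfg file at its root, which is a convenient way to confirm that a directory really is a virtual environment.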

Steps to Install Hugging Face

1. Activate the virtual environment by running the following command:

source myenv/bin/activate

2. Install the Hugging Face library. With the virtual environment activated, install the Transformers library using pip, the package installer for Python. Run the following command:

pip install transformers

This command installs the Transformers library which is the main library that is provided by Hugging Face for natural language processing tasks.

3. Verify the installation. After the installation completes, you can verify that the Hugging Face library is successfully installed by running the following Python code:

import transformers

print(transformers.__version__)

If the installation is successful, it should print the version number of the installed library. Run the following command to see if Transformers is installed correctly:

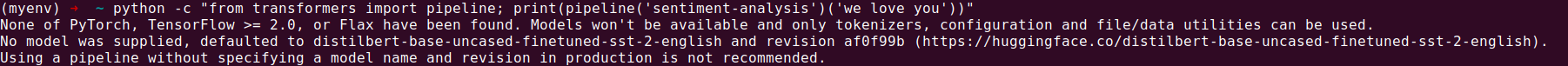

If you get an error reporting that no supported deep learning framework was found, you must install TensorFlow, PyTorch, or Flax.
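To see which of these frameworks is importable in the current environment before running any model code, a small check with the standard library can help. This is only a sketch: the function name available_backends is hypothetical, and the module names torch, tensorflow, and flax are the usual import names of the three frameworks.

```python
from importlib.util import find_spec

# Usual import names of PyTorch, TensorFlow, and Flax.
BACKENDS = ("torch", "tensorflow", "flax")


def available_backends(candidates=BACKENDS):
    """Return the candidate modules that can be found in this environment."""
    # find_spec returns None when a top-level module cannot be located.
    return [name for name in candidates if find_spec(name) is not None]


if __name__ == "__main__":
    found = available_backends()
    print(found if found else "No backend found; install PyTorch, TensorFlow, or Flax.")
```

The check only looks the modules up and does not import them, so it stays fast even when a large framework is installed.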

To install the Hugging Face Datasets library, you can follow these steps:

Install the Hugging Face Datasets Library

With the virtual environment activated (if you created one), install the Hugging Face Datasets library using pip, the package installer for Python. Run the following command:

pip install datasets

This command installs the datasets library which is the main library that is provided by Hugging Face to access and work with various datasets.

Verify the Installation

After the installation completes, you can verify that the Hugging Face Datasets library is successfully installed by running the following Python code:

import datasets

print(datasets.__version__)

If the installation is successful, it should print the version number of the installed library.

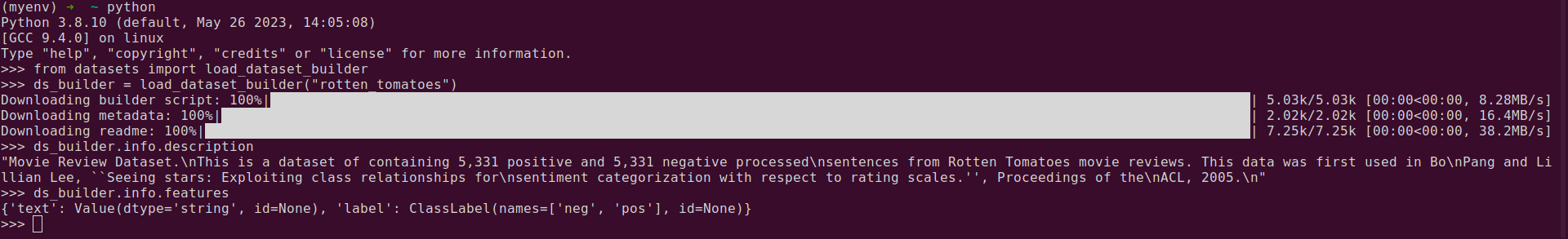
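If you prefer to check the installed versions without importing the libraries, the standard library can read them from the package metadata. The sketch below assumes the pip package names datasets and transformers used in this post; the helper name installed_version is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package: str):
    """Return the installed version of a pip package, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    for name in ("datasets", "transformers"):
        print(name, installed_version(name) or "not installed")
```

Returning None instead of raising makes the helper easy to use in setup scripts that decide whether to run pip install.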

To load a dataset builder and see the properties of a dataset without downloading it, use the load_dataset_builder() (https://huggingface.co/docs/datasets/v2.13.1/en/package_reference/loading_methods#datasets.load_dataset_builder) function:

from datasets import load_dataset_builder

ds_builder = load_dataset_builder("rotten_tomatoes")

# Inspect dataset description

ds_builder.info.description

# Inspect dataset features

ds_builder.info.features

{'label': ClassLabel(num_classes=2, names=['neg', 'pos'], id=None),

'text': Value(dtype='string', id=None)}

See the example for the previous code:

If the dataset is what you are looking for, load it with the load_dataset() (https://huggingface.co/docs/datasets/v2.13.1/en/package_reference/loading_methods#datasets.load_dataset) function:

from datasets import load_dataset

dataset = load_dataset("rotten_tomatoes", split="train")

Split the Dataset

A split is a specific subset of a dataset, such as train or test. The get_dataset_split_names() (https://huggingface.co/docs/datasets/v2.13.1/en/package_reference/loading_methods#datasets.get_dataset_split_names) function returns a list of split names for a dataset:

from datasets import get_dataset_split_names

get_dataset_split_names("rotten_tomatoes")

After that, you can use the “split” argument to load a specific split. Loading a single split returns a Dataset object:

dataset = load_dataset("rotten_tomatoes", split="train")

dataset

Dataset({

features: ['text', 'label'],

num_rows: 8530

})

If you don’t specify a split, Datasets returns a DatasetDict object instead:

dataset = load_dataset("rotten_tomatoes")

DatasetDict({

train: Dataset({

features: ['text', 'label'],

num_rows: 8530

})

validation: Dataset({

features: ['text', 'label'],

num_rows: 1066

})

test: Dataset({

features: ['text', 'label'],

num_rows: 1066

})

})
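Because a DatasetDict behaves like a dictionary of splits and each split supports len(), it is easy to summarize how the rows are distributed. The sketch below uses plain range objects as stand-ins with the row counts shown above, so it runs without downloading anything; the helper name split_shares is hypothetical.

```python
def split_shares(splits):
    """Map each split name to (row count, percentage of all rows)."""
    sizes = {name: len(rows) for name, rows in splits.items()}
    total = sum(sizes.values())
    return {name: (n, round(100 * n / total, 1)) for name, n in sizes.items()}


if __name__ == "__main__":
    # Stand-ins with the row counts shown above; the DatasetDict returned by
    # load_dataset("rotten_tomatoes") could be passed in the same way.
    demo = {"train": range(8530), "validation": range(1066), "test": range(1066)}
    print(split_shares(demo))
    # {'train': (8530, 80.0), 'validation': (1066, 10.0), 'test': (1066, 10.0)}
```

For rotten_tomatoes, this shows an 80/10/10 division between the train, validation, and test splits.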

Some datasets contain several sub-datasets. The MInDS-14 dataset (https://huggingface.co/datasets/PolyAI/minds14), for instance, has several sub-datasets, each containing audio data in a different language. These sub-datasets are called configurations, and you must select one explicitly when loading the dataset. If you don’t supply a configuration name, Datasets raises a ValueError and prompts you to choose one.

To get a list of all the configurations available for your dataset, use the get_dataset_config_names() (https://huggingface.co/docs/datasets/v2.13.1/en/package_reference/loading_methods#datasets.get_dataset_config_names) function:

from datasets import get_dataset_config_names

configs = get_dataset_config_names("PolyAI/minds14")

print(configs)

['cs-CZ', 'de-DE', 'en-AU', 'en-GB', 'en-US', 'es-ES', 'fr-FR',

'it-IT', 'ko-KR', 'nl-NL', 'pl-PL', 'pt-PT', 'ru-RU', 'zh-CN', 'all']

Then, load the desired configuration:

mindsFR = load_dataset("PolyAI/minds14", "fr-FR", split="train")
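One way to avoid the ValueError is to validate the configuration name before loading. The following sketch wraps the two calls shown above; the helper names pick_config and load_config are hypothetical, and the datasets import is placed inside load_config so that the validation logic can be tried on its own.

```python
def pick_config(available, wanted):
    """Return wanted if it is a known configuration, otherwise raise ValueError."""
    if wanted not in available:
        options = ", ".join(available)
        raise ValueError(f"Unknown configuration {wanted!r}; choose one of: {options}")
    return wanted


def load_config(repo_id, wanted, split="train"):
    """Check the configuration name against the Hub, then load the given split."""
    # Imported here so that pick_config works without the datasets package.
    from datasets import get_dataset_config_names, load_dataset

    name = pick_config(get_dataset_config_names(repo_id), wanted)
    return load_dataset(repo_id, name, split=split)


if __name__ == "__main__":
    # Offline check using a few of the configuration names listed above.
    print(pick_config(["en-US", "fr-FR", "all"], "fr-FR"))  # fr-FR
```

Calling load_config("PolyAI/minds14", "fr-FR") would then perform the same load as the line above, with an earlier and more explicit error for a mistyped name.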

Example Demo:

Let’s demonstrate how to create an NLP program with a pre-trained Hugging Face model. The program generates text from a user prompt using a pre-trained model. The steps that we’ll discuss are as follows:

1. Begin by installing the required libraries on your system.

2. Next, import the necessary modules into your code.

3. Load the pre-trained model into the memory for further processing.

4. Utilize the user prompts to generate the text based on the loaded model.

To gain a comprehensive understanding, let’s explore each step in detail, complete with explanations and a demonstration.

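Step 1: Install the Required Libraries

Ensure that the Transformers library is installed on your computer. The code in the following steps also requires PyTorch, because it requests PyTorch tensors. You can install Transformers using pip:

pip install transformers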

Step 2: Add the Necessary Modules

The required classes are imported from the Transformers library:

from transformers import GPT2LMHeadModel, GPT2Tokenizer

Step 3: Load the Previously Trained Model

In this stage, let’s load a pre-trained GPT-2 model and its associated tokenizer. GPT-2 (Generative Pre-trained Transformer 2) is a language model designed for text generation.

model = GPT2LMHeadModel.from_pretrained('gpt2')

tokenizer = GPT2Tokenizer.from_pretrained('gpt2')

We instantiate a GPT2LMHeadModel object and load the pre-trained weights using the from_pretrained method. We also create a GPT2Tokenizer object to tokenize the input prompts and decode the generated text.

Step 4: Generate the Text Based on User Prompts

Now, we write a function that takes the user prompts and generates the text based on those prompts.

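def generate_text(prompt, num_words):
    # Tokenize the prompt into a tensor of token IDs
    input_ids = tokenizer.encode(prompt, return_tensors='pt')
    # Generate up to num_words new tokens after the prompt
    output = model.generate(input_ids, max_length=len(input_ids[0]) + num_words, num_return_sequences=1)
    # Drop the prompt tokens and decode only the newly generated ones
    generated_text = tokenizer.decode(output[:, len(input_ids[0]):][0], skip_special_tokens=True)
    return generated_text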

The generate_text function takes a prompt string as input and generates up to num_words additional tokens of text based on the prompt. Note that the limit is counted in tokens, which do not always correspond one-to-one to words.

First, we tokenize the prompt using the tokenizer’s encode method. We pass return_tensors='pt' to get PyTorch tensors as output.

Then, we pass the encoded prompt to the generate method of the model object. We specify max_length to control the total length of the output, prompt included. The num_return_sequences parameter determines how many different sequences to generate.

The generated output is a sequence of token IDs that begins with the prompt. We extract the newly generated part by slicing off the input prompt tokens, and then decode the remaining token IDs into human-readable text using the tokenizer’s decode method. Setting skip_special_tokens=True drops special tokens, such as GPT-2’s <|endoftext|> marker, from the decoded text.
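The slicing step can be illustrated with plain Python lists, without loading a model. This is a simplified sketch: the token IDs are made up for illustration, and the helper name strip_prompt is hypothetical.

```python
def strip_prompt(output_ids, prompt_len):
    """Return only the token IDs that follow the first prompt_len entries."""
    return output_ids[prompt_len:]


# Hypothetical token IDs, chosen only for illustration.
prompt_ids = [10, 11, 12, 13]
new_ids = [7, 8, 9]

# A decoder-only model such as GPT-2 returns the prompt followed by new tokens.
output_ids = prompt_ids + new_ids

# max_length counts the prompt as well, hence the addition in generate_text.
max_length = len(prompt_ids) + len(new_ids)

print(max_length)                                 # 7
print(strip_prompt(output_ids, len(prompt_ids)))  # [7, 8, 9]
```

In generate_text, the expression output[:, len(input_ids[0]):] applies the same slice to every sequence in the batch dimension of the output tensor.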

Let’s see a demo of the program:

prompt = "Once upon a time"

generated_text = generate_text(prompt, num_words=20)

print(generated_text)

Output:

In this demonstration, we give the model the “Once upon a time” prompt and ask it to produce up to 20 more tokens of text. The generated text continues the story from the prompt.

You can modify and extend this program to suit your own needs. The Hugging Face documentation covers the available models, tokenizers, and features in more detail.
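One common modification is to switch from the default decoding to sampling, which tends to produce more varied text. The sketch below only builds the keyword arguments for model.generate(); do_sample, temperature, and top_k are existing generation parameters in Transformers, while the helper name sampling_kwargs and the default values 0.8 and 50 are illustrative assumptions.

```python
def sampling_kwargs(prompt_len, num_new_tokens, temperature=0.8, top_k=50):
    """Build keyword arguments for model.generate() that enable sampling."""
    return {
        # Total length limit: prompt tokens plus the requested new tokens.
        "max_length": prompt_len + num_new_tokens,
        # Sample from the predicted distribution instead of always taking the top token.
        "do_sample": True,
        # Values below 1.0 make the distribution sharper, values above 1.0 flatter.
        "temperature": temperature,
        # Restrict sampling to the top_k most likely tokens at each step.
        "top_k": top_k,
        "num_return_sequences": 1,
    }


if __name__ == "__main__":
    print(sampling_kwargs(prompt_len=4, num_new_tokens=20))
```

Inside generate_text, the call would then become model.generate(input_ids, **sampling_kwargs(len(input_ids[0]), num_words)). Because sampling is random, repeated runs with the same prompt will generally produce different text.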

We are done now! Using a pre-trained Hugging Face model, you created an NLP program that generates text in response to user input. After reading the explanations given here, you should have a solid understanding of each step and of how to use pre-trained models for various NLP tasks.

Conclusion

This blog post introduces the Hugging Face Datasets library and the role of the Hugging Face Hub in sharing and exploring models and datasets within the AI community. It emphasizes the importance of collaboration and knowledge sharing in AI and the growing role of the open-source community in advancing the field. The Hub serves as a central location for sharing and accessing datasets, with the goal of democratizing AI and hosting the largest collection of models and datasets. The Datasets library simplifies sharing and accessing datasets across various domains. With its efficient data processing methods and the Apache Arrow format, users can quickly prepare large datasets for training deep learning models. To explore datasets, the Hub provides a live viewer that lets users examine them in detail. The post also walks through installing the libraries, loading splits and configurations, and generating text with a pre-trained GPT-2 model. Overall, Hugging Face provides a valuable resource for data scientists, specialists, and enthusiasts to collaborate and advance the field of AI together.