This article provides a step-by-step guide on using tokenizers in Hugging Face Transformers.

What is a Tokenizer?

A tokenizer is a core component of natural language processing (NLP): its main job is to convert raw text into numbers that a model can process. There are various tokenization techniques, and each one serves a specific purpose.
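To make the idea concrete, here is a minimal sketch of text-to-number conversion. It assumes simple whitespace splitting and a vocabulary built on the fly from a tiny corpus. Both are simplifications: pretrained tokenizers ship with a fixed vocabulary learned in advance. The helpers `build_vocab` and `encode` are hypothetical names used only for illustration.

```python
# Toy illustration of the core idea: text -> tokens -> integer IDs.
# Assumptions: whitespace splitting and a vocabulary built from a tiny corpus.

def build_vocab(corpus):
    """Assign a unique integer ID to every distinct lowercase word in the corpus."""
    vocab = {"[UNK]": 0}  # ID 0 is reserved for words not in the vocabulary
    for sentence in corpus:
        for word in sentence.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab

def encode(text, vocab):
    """Map each word to its ID, falling back to the [UNK] ID for unseen words."""
    return [vocab.get(word, vocab["[UNK]"]) for word in text.lower().split()]

vocab = build_vocab(["I love good food", "good food is good"])
print(encode("I love good food", vocab))  # [1, 2, 3, 4]
print(encode("I love pizza", vocab))      # [1, 2, 0]
```

Unknown words such as "pizza" collapse to a single `[UNK]` ID, which is one of the limitations that motivates the tokenization strategies discussed below.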

How to Use Tokenizers in Hugging Face Transformers?

The transformers library must be installed before you can import functions from it. After that, load a pretrained tokenizer with AutoTokenizer, and then pass the input text to it to perform tokenization.

Hugging Face describes three major categories of tokenization:

- Word-based Tokenizer

- Character-based Tokenizer

- Subword-based Tokenizer
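The three categories can be sketched in plain Python as follows. The subword function uses a greedy longest-match-first strategy in the style of WordPiece, and the tiny subword vocabulary is hand-picked for illustration; real subword tokenizers learn their vocabularies from data. All function names here are hypothetical.

```python
# Toy versions of the three tokenization strategies.
# The subword vocabulary is a hypothetical, hand-picked set.

def word_tokenize(text):
    """Word-based: split on whitespace."""
    return text.split()

def char_tokenize(text):
    """Character-based: every non-space character becomes a token."""
    return [ch for ch in text if not ch.isspace()]

def subword_tokenize(word, vocab):
    """Subword-based: greedy longest-match-first, in the style of WordPiece.

    Pieces after the first are prefixed with '##' to mark that they continue
    a word. Returns ['[UNK]'] if the word cannot be covered by the vocabulary.
    """
    tokens, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return ["[UNK]"]
        tokens.append(piece)
        start = end
    return tokens

subword_vocab = {"token", "##ization", "##izer", "love", "good", "food"}

print(word_tokenize("I love good food"))                # ['I', 'love', 'good', 'food']
print(char_tokenize("good food"))                       # ['g', 'o', 'o', 'd', 'f', 'o', 'o', 'd']
print(subword_tokenize("tokenization", subword_vocab))  # ['token', '##ization']
```

Word-based tokenizers need very large vocabularies, character-based ones produce long sequences of low-information tokens, and subword tokenizers balance the two by splitting rare words into known pieces.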

Here is a step-by-step guide to using tokenizers in Transformers:

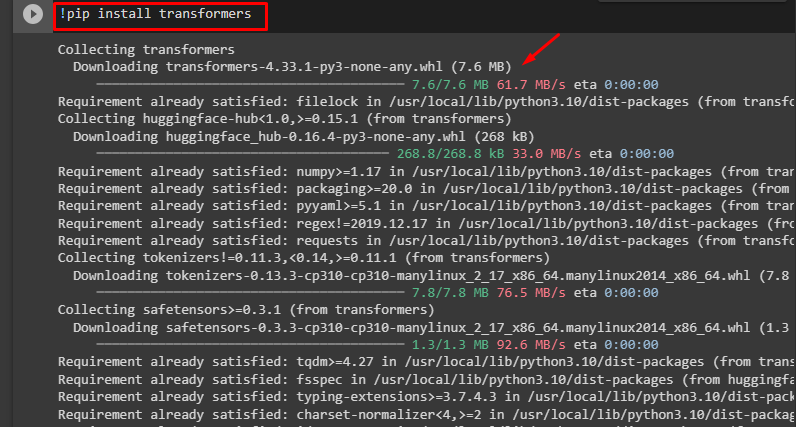

Step 1: Install Transformers

To install Transformers, run the following pip command:

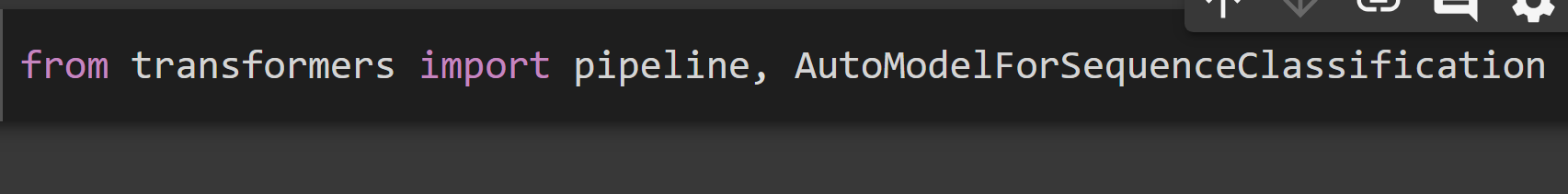

Step 2: Import Classes

From transformers, import the pipeline function and the AutoModelForSequenceClassification class to perform classification. AutoTokenizer, which is used in Step 4, is also imported from transformers:

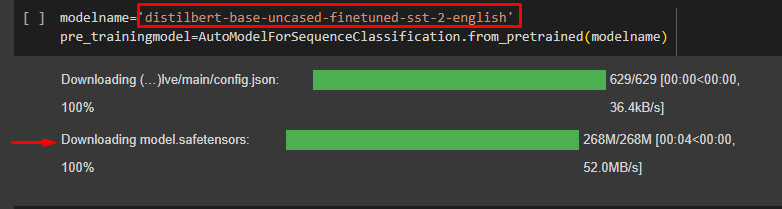
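For reference, the snippet below gathers the imports the walkthrough relies on, including AutoTokenizer. The try/except guard and the `HAVE_TRANSFORMERS` flag are assumptions added so the snippet also runs where transformers is not installed; in a notebook you would normally import directly.

```python
# Imports used across the walkthrough: pipeline (Step 2),
# AutoModelForSequenceClassification (Step 3), and AutoTokenizer (Step 4).
# The guard below is only for portability of this sketch.
try:
    from transformers import AutoModelForSequenceClassification, AutoTokenizer, pipeline
    HAVE_TRANSFORMERS = True
except ImportError:
    HAVE_TRANSFORMERS = False

print("transformers available:", HAVE_TRANSFORMERS)
```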

Step 3: Load the Model

“AutoModelForSequenceClassification” is one of the Auto Classes: it loads a model with a sequence classification head and does not perform tokenization itself. Its from_pretrained() method returns an instance of the correct model class based on the model type of the given checkpoint.

Here we have provided the name of the model in the “modelname” variable:

pre_trainingmodel=AutoModelForSequenceClassification.from_pretrained(modelname)

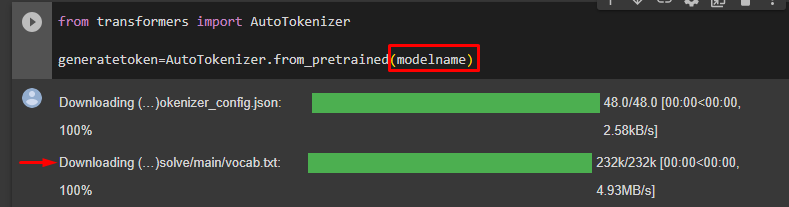
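The dispatch idea behind Auto Classes can be sketched with a small registry: read the model type from the checkpoint's configuration, then instantiate the matching class. Everything here (`ToyAutoModel`, `MODEL_REGISTRY`, the toy classifier classes, and the `FAKE_HUB` configs) is hypothetical and stands in for the configuration that from_pretrained() would normally download.

```python
# Toy sketch of Auto-class dispatch: config["model_type"] selects the class.
# All names and configs below are hypothetical stand-ins.

class ToyBertClassifier:
    def __init__(self, config):
        self.config = config

class ToyDistilBertClassifier:
    def __init__(self, config):
        self.config = config

# Maps a config's "model_type" to the class that implements it.
MODEL_REGISTRY = {
    "bert": ToyBertClassifier,
    "distilbert": ToyDistilBertClassifier,
}

# Stand-in for configuration files that would normally come with a checkpoint.
FAKE_HUB = {
    "toy-bert-sentiment": {"model_type": "bert", "num_labels": 2},
    "toy-distilbert-sentiment": {"model_type": "distilbert", "num_labels": 2},
}

class ToyAutoModel:
    @classmethod
    def from_pretrained(cls, name):
        """Look up the checkpoint's config and build the matching model class."""
        config = FAKE_HUB[name]
        model_cls = MODEL_REGISTRY[config["model_type"]]
        return model_cls(config)

model = ToyAutoModel.from_pretrained("toy-distilbert-sentiment")
print(type(model).__name__)  # ToyDistilBertClassifier
```

The caller only supplies a checkpoint name; choosing the concrete class is left to the configuration, which is why the same line of code works for different model families.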

Step 4: Load the Tokenizer with AutoTokenizer

Load the tokenizer that matches the model by passing “modelname” as the argument to AutoTokenizer.from_pretrained():

generatetoken=AutoTokenizer.from_pretrained(modelname)

Step 5: Generate Token

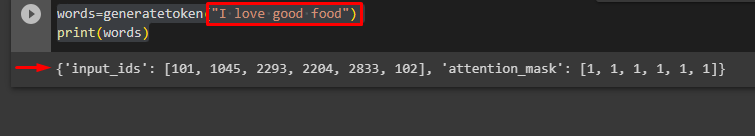

Now, generate tokens for the sentence “I love good food” by calling the tokenizer stored in the “generatetoken” variable:

words = generatetoken("I love good food")
print(words)
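To make the shape of the printed result concrete without downloading a checkpoint, here is a toy, self-contained mimic of calling a tokenizer. The vocabulary, the IDs, and the BERT-style [CLS]/[SEP] special tokens are hypothetical; a real tokenizer's output depends on the checkpoint in “modelname”. Real tokenizers offer convert_ids_to_tokens() for this kind of inspection; `toy_convert_ids_to_tokens` is a hypothetical stand-in for it.

```python
# Toy mimic of a tokenizer call returning token IDs and an attention mask.
# Vocabulary, IDs, and special tokens are hypothetical.

TOY_VOCAB = {"[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3,
             "i": 4, "love": 5, "good": 6, "food": 7}
ID_TO_TOKEN = {i: t for t, i in TOY_VOCAB.items()}

def toy_tokenizer(text):
    """Lowercase, split on whitespace, wrap in special tokens, and map to IDs."""
    tokens = ["[CLS]"] + text.lower().split() + ["[SEP]"]
    input_ids = [TOY_VOCAB.get(t, TOY_VOCAB["[UNK]"]) for t in tokens]
    # 1 marks a real token; padding positions (not used here) would be 0.
    attention_mask = [1] * len(input_ids)
    return {"input_ids": input_ids, "attention_mask": attention_mask}

def toy_convert_ids_to_tokens(ids):
    """Map IDs back to token strings to inspect what the tokenizer produced."""
    return [ID_TO_TOKEN[i] for i in ids]

words = toy_tokenizer("I love good food")
print(words)  # {'input_ids': [2, 4, 5, 6, 7, 3], 'attention_mask': [1, 1, 1, 1, 1, 1]}
print(toy_convert_ids_to_tokens(words["input_ids"]))
# ['[CLS]', 'i', 'love', 'good', 'food', '[SEP]']
```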

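The output is a dictionary-like object containing the token IDs (input_ids) and an attention mask (attention_mask); some models also return token_type_ids.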

The complete code for this example is available in the accompanying Google Colab notebook.

Conclusion

To use tokenizers in Hugging Face, install the library using the pip command, load a pretrained tokenizer with AutoTokenizer, and then pass the input text to it to perform tokenization. Tokenization converts a sentence into an ordered sequence of token IDs, so the model receives the text in a form it can process while the word order is preserved. This article is a detailed guide on how to use tokenizers in Hugging Face Transformers.