Example 1: Eliminating Duplicates Using Python Sets

Converting a list to a set is one of the most efficient ways to remove duplicates in Python. A set is an unordered collection of unique elements, so building a set from a list automatically discards any repeated entries. Because a set can hold each value only once, it is a natural tool for extracting the distinct elements of a list. Two caveats apply: the elements must be hashable, and the set does not remember the order of the original list.

Let’s imagine a simple scenario where we have a list of user IDs. Due to data entry errors or system malfunctions, the list contains duplicate IDs. To ensure accurate user analytics, we need to remove these duplicates, and we’ll use a set to do it.

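IDs_with_duplicates = [101, 102, 103, 101, 104, 102]  # sample IDs; 101 and 102 repeat

unique_IDs = set(IDs_with_duplicates)  # the set keeps a single copy of each ID

IDs_without_duplicates = list(unique_IDs)  # convert back to a list (order not guaranteed)

print("Original User IDs:", IDs_with_duplicates)

print("User IDs without Duplicates:", IDs_without_duplicates)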

In this code snippet, “IDs_with_duplicates” holds the user IDs, some of which are repeated. The set() function builds a set called “unique_IDs” from the original list, which eliminates the duplicate entries. We then convert this set back into a list named “IDs_without_duplicates” so the result can be used wherever a list is expected. This conversion does not restore the original order: because sets are unordered, the IDs may come out in a different sequence. The “print” statements display both the original list with duplicates and the refined list without duplicates.

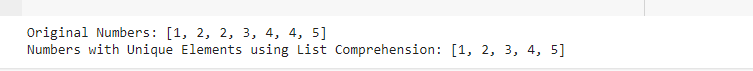
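Two practical caveats of the set approach are that a set does not keep the list’s order and that its elements must be hashable. The minimal sketch below, using illustrative sample IDs rather than the data in the screenshot, sorts the set when a deterministic order is needed and shows that inner lists must first be converted (here to tuples) before they can be placed in a set.

```python
# Illustrative sample data, not taken from the original example.
ids = [104, 101, 103, 101, 102, 104]

# set() drops duplicates, but a set's iteration order is not tied to the
# original list, so sort the result when a predictable order is required.
unique_sorted = sorted(set(ids))
print(unique_sorted)  # [101, 102, 103, 104]

# Set elements must be hashable: lists are not, so set(rows) raises TypeError.
rows = [[1, 2], [1, 2], [3]]
raised = False
try:
    set(rows)
except TypeError:
    raised = True
print("set(rows) raised TypeError:", raised)

# Converting each inner list to a (hashable) tuple makes deduplication possible.
unique_rows = set(map(tuple, rows))
print(sorted(unique_rows))  # [(1, 2), (3,)]
```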

Example 2: List Comprehension for Uniqueness

List comprehension offers a concise syntax for building a new list from an existing iterable (such as a list), optionally filtered by a condition. To remove duplicates, we walk through the original list and add each entry to the new list only if it has not appeared earlier.

numbers_with_duplicates = [3, 1, 3, 2, 1, 4]  # sample numbers with repeats

unique_values = [x for i, x in enumerate(numbers_with_duplicates) if x not in numbers_with_duplicates[:i]]  # keep x only if it did not occur earlier

print("Original Numbers:", numbers_with_duplicates)

print("Numbers with Unique Elements using List Comprehension:", unique_values)

In this example, we start with a list of numbers that contains duplicates. We build a new list (“unique_values”) with a list comprehension that iterates over the original list through enumerate(), which supplies each element together with its index. The condition keeps an element only if it does not appear in the slice of the list that precedes the current position, so only the first occurrence of each number is retained. The code then displays both the original list and the deduplicated one, showing that list comprehension can enforce uniqueness while preserving the original order. Keep in mind that each membership test copies and scans the preceding slice, so this approach takes quadratic time and is best suited to short lists.
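Because the comprehension rescans the preceding slice for every element, a common linear-time alternative is a plain loop that records already-seen values in a set. The sketch below swaps in that technique; the helper name “unique_in_order” is hypothetical, and the approach assumes hashable elements. It produces the same first-occurrence result as the comprehension.

```python
from typing import Hashable, Iterable, List, Set, TypeVar

T = TypeVar("T", bound=Hashable)


def unique_in_order(items: Iterable[T]) -> List[T]:
    """Return the items with duplicates removed, keeping first occurrences."""
    seen: Set[T] = set()   # values already emitted; average O(1) membership test
    result: List[T] = []
    for item in items:
        if item not in seen:
            seen.add(item)
            result.append(item)
    return result


numbers = [3, 1, 3, 2, 1, 4]
print(unique_in_order(numbers))  # [3, 1, 2, 4]
```

The trade-off is a little more code and extra memory for the “seen” set in exchange for time that grows linearly with the list length.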

Example 3: Python dict.fromkeys() Method for Unique Keys

The dict.fromkeys() method takes an iterable (such as a list) as its argument and creates a new dictionary in which each distinct element of the iterable becomes a key. Since dictionary keys must be unique, duplicate elements in the original list are automatically collapsed into a single key. Because dictionaries preserve insertion order (guaranteed since Python 3.7), the keys appear in the order in which they first occur in the original list.

Here is the example code:

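Actual_list = ["apple", "banana", "apple", "cherry", "banana"]  # sample list with repeats

unique_list = list(dict.fromkeys(Actual_list))  # keys are unique and keep first-seen order

print("Actual List:", Actual_list)

print("List without Duplicates using dict.fromkeys():", unique_list)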

In this example, “dict.fromkeys(Actual_list)” generates a dictionary in which each distinct element of “Actual_list” becomes a key, with every value set to None. Passing this dictionary to list() returns its keys, giving a list of unique elements in the order of their first occurrence in the original list.

This method is a concise and efficient way to remove duplicates while maintaining the original order of elements; as with sets, the elements must be hashable.

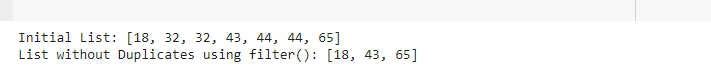
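To see what happens under the hood, this small sketch (with illustrative string values, not the data in the screenshot) prints the intermediate dictionary that dict.fromkeys() builds before list() extracts its keys.

```python
# Illustrative sample values.
values = ["b", "a", "b", "c", "a"]

# Each distinct value becomes a key; with no second argument, every value is None.
keys_only = dict.fromkeys(values)
print(keys_only)  # {'b': None, 'a': None, 'c': None}

# Iterating a dict yields its keys in insertion order (first appearance).
print(list(keys_only))  # ['b', 'a', 'c']
```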

Example 4: Filtering Duplicates with the filter() Function

The filter() function in Python can sift through the original list and construct a new one containing only the elements that pass a given test. Combined with a “lambda” function that checks how many times each element occurs in the original list, filter() keeps only the elements whose count is 1. This differs from the previous examples: a value that appears more than once is dropped entirely rather than kept once, so the result contains only the elements that were never duplicated.

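initial_list = [5, 7, 5, 9, 7, 3]  # sample list; 5 and 7 repeat

filtered_list = list(filter(lambda x: initial_list.count(x) == 1, initial_list))  # keeps values that occur exactly once

print("Initial List:", initial_list)

print("List without Duplicates using filter():", filtered_list)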

Here, “lambda x: initial_list.count(x) == 1” serves as the filtering condition for filter(). It counts each element’s occurrences in the original list and accepts only those that occur exactly once, so the resulting “filtered_list” contains none of the duplicated values at all. Because count() rescans the whole list for every element, this approach also runs in quadratic time. It demonstrates how filter() can apply a custom condition, providing an alternative way to obtain the elements that are unique in the strict sense of occurring only once.

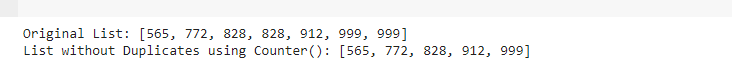
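If the goal is instead to keep one copy of every value, filter() can still be used with a stateful predicate that remembers what it has already seen. The sketch below contrasts the two behaviours on illustrative data; the factory name “make_first_seen_predicate” is hypothetical, and the technique assumes hashable elements.

```python
from typing import Callable, Hashable

initial_list = [5, 7, 5, 9, 7, 3]  # illustrative sample data

# The count() == 1 filter keeps only values that occur exactly once,
# so 5 and 7 are dropped entirely.
singles = list(filter(lambda x: initial_list.count(x) == 1, initial_list))
print(singles)  # [9, 3]


def make_first_seen_predicate() -> Callable[[Hashable], bool]:
    """Return a predicate that is True only the first time it sees a value."""
    seen = set()

    def is_first(x: Hashable) -> bool:
        if x in seen:
            return False
        seen.add(x)
        return True

    return is_first


# A fresh predicate (and therefore a fresh "seen" set) for each filter() call.
deduped = list(filter(make_first_seen_predicate(), initial_list))
print(deduped)  # [5, 7, 9, 3]
```

Creating a new predicate per call matters because the “seen” set persists inside the closure; reusing one predicate across lists would carry state over between them.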

Example 5: Python’s Counter() Class

The Counter class, found in Python’s “collections” module, counts how many times each element occurs in a list and stores the result in a “Counter” object. A “Counter” is a dictionary subclass that maps each hashable item to its count, so every distinct element appears as a key exactly once. Converting the Counter to a list therefore yields the distinct elements in order of first appearance; if you instead want only the elements whose count is 1, you can filter on the counts.

from collections import Counter

new_list = [565, 772, 828, 828, 912, 999, 999]

unique_list = list(Counter(new_list))  # a Counter's keys are the distinct elements

print("Original List:", new_list)

print("List without Duplicates using Counter():", unique_list)

We import the “Counter” class from the “collections” module. Then, “new_list” holds some duplicate elements. “Counter(new_list)” creates a “Counter” object that maps each distinct element to the number of times it occurs. Converting this object into a list returns its keys, so “unique_list” contains each distinct element from the original list exactly once. The “print” statements display both the original list with duplicates and the refined list without duplicates.
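To make the difference between “distinct elements” and “elements that occur once” concrete, the sketch below reuses “new_list” from the example and prints the counts, the distinct keys, and, as a separate operation, the elements whose count is exactly 1.

```python
from collections import Counter

new_list = [565, 772, 828, 828, 912, 999, 999]
counts = Counter(new_list)

# A Counter maps each distinct element to its number of occurrences.
print(dict(counts))  # {565: 1, 772: 1, 828: 2, 912: 1, 999: 2}

# Its keys are the distinct elements, in order of first appearance.
distinct = list(counts)
print(distinct)  # [565, 772, 828, 912, 999]

# Keeping only the elements whose count is 1 gives a different result.
exactly_once = [value for value, n in counts.items() if n == 1]
print(exactly_once)  # [565, 772, 912]
```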

Conclusion

Python provides diverse methods to remove duplicates from lists, each catering to different needs and preferences. Whether simplicity, order preservation, or efficiency is paramount, developers can choose from the techniques presented in this article. Converting to a set is the simplest and fastest option when order does not matter, since sets do not preserve the original sequence. List comprehension, dict.fromkeys(), and Counter() all keep each element’s first occurrence in its original position, with dict.fromkeys() being the most concise of these. The filter() approach shown here is different in kind: it keeps only the elements that occur exactly once. This variety lets programmers tailor their solution to the specific needs of their projects.