The Hugging Face Transformers library offers solutions for a wide range of machine learning tasks, including natural language processing (such as text classification), computer vision (such as image classification), and audio processing. One of its core utilities is the pipeline() function, which handles several steps in a single call: specifying a model, downloading it, and running inference with it.

This article provides a demonstration of how to use pipelines on large models with the Accelerate library in Transformers.

How to Use Pipeline on Large Models with Accelerate in Transformers?

Working with large models is both resource-intensive and time-consuming. Many models are trained on massive volumes of data and contain billions of parameters. Downloading them takes time and can fail midway, and once downloaded they may not fit into the memory of a single GPU.

Hugging Face provides the Accelerate library, which makes it easier to load and run these huge models by distributing their weights across the available hardware. Here is a step-by-step guide to using pipeline() on large models with the Accelerate library in Transformers:

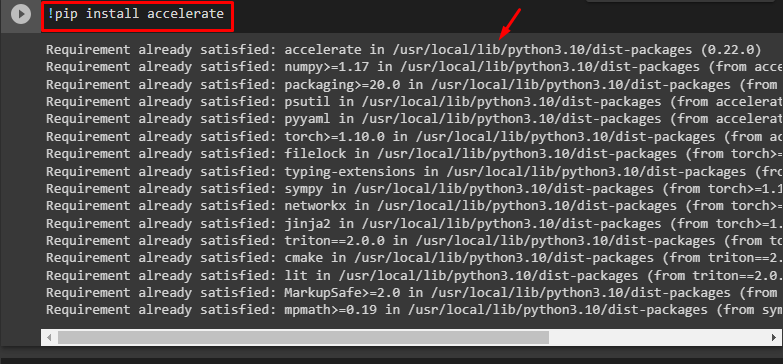

Step 1: Install Accelerate Library

To get started with the Accelerate library, we first need to install it. For this purpose, run the “!pip install accelerate” command in a notebook cell:

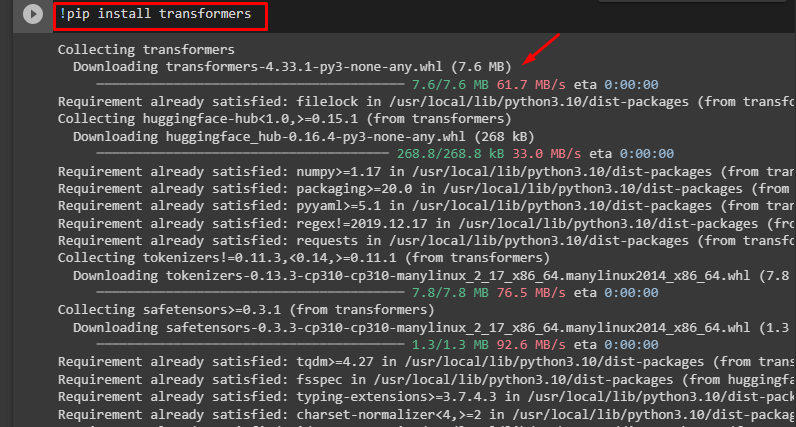

Step 2: Install Transformers

Next, we will install the Transformers library by running the “!pip install transformers” command:

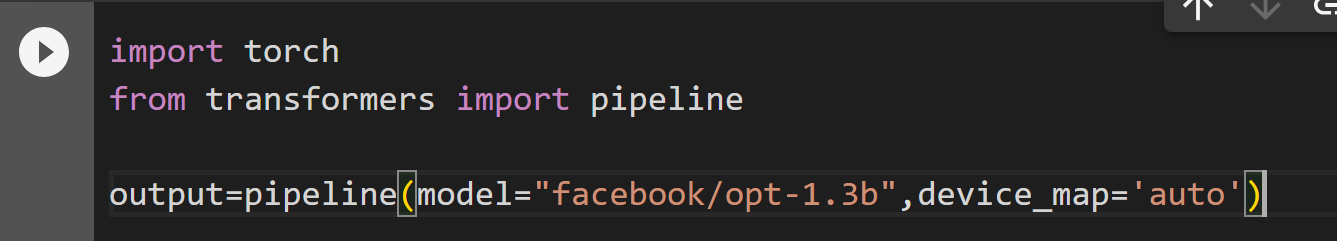
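Before moving on, it can help to confirm that both installs succeeded. The following is a minimal sketch that uses only the Python standard library; the helper name installed_version is hypothetical and not part of either library:

```python
from importlib import metadata


def installed_version(package_name):
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


# Report the status of the two packages installed in Steps 1 and 2.
for name in ("accelerate", "transformers"):
    version = installed_version(name)
    print(f"{name}: {version if version else 'not installed'}")
```

If either package is reported as not installed, rerun the corresponding install command before continuing.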

Step 3: Import the Library and Load the Model

Now we will import the pipeline() function and load a model. Here we have used the “facebook/opt-1.3b” model, which falls under the category of “large models”. The device_map="auto" argument is useful when a model is too large to fit on a single device. With it, Accelerate automatically determines how to place the model weights across the available hardware, filling the GPUs first, then the CPU memory, and finally the disk:

from transformers import pipeline

pipe = pipeline(model="facebook/opt-1.3b", device_map="auto")

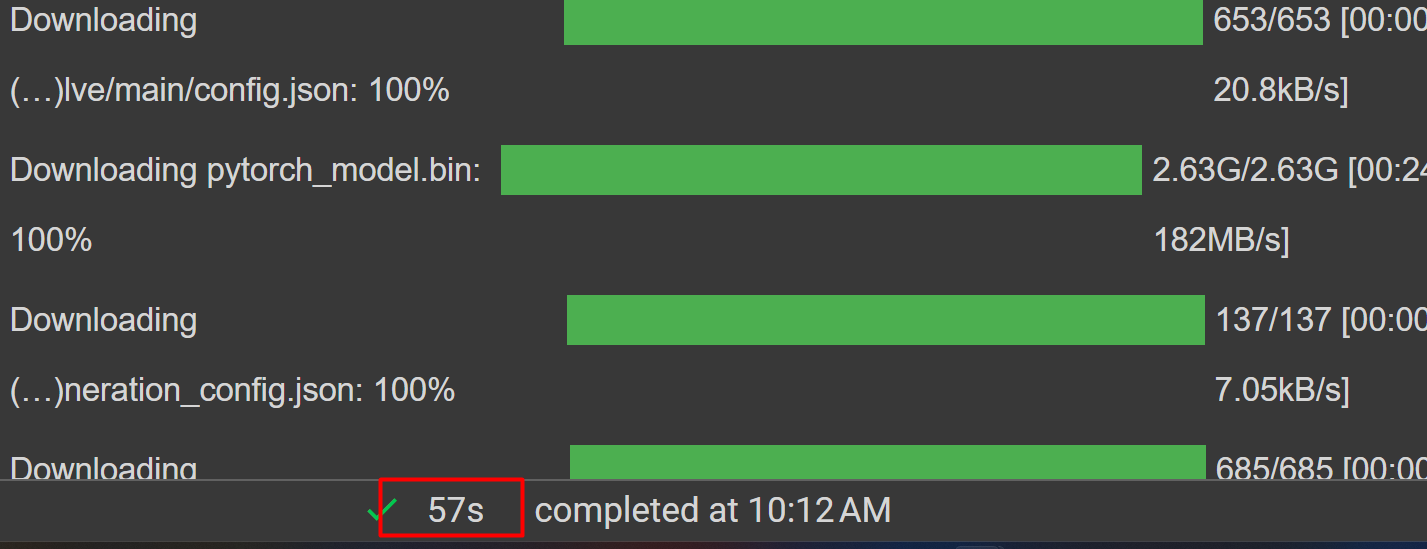
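To build some intuition for what an automatic device map does, here is a simplified sketch in plain Python. It is not Accelerate's actual algorithm: the function sketch_device_map, the layer names, the device labels, and all sizes are hypothetical and chosen only for illustration. It walks through the layers in order and places each one on the first device that still has room, moving on to the next device once the current one is full:

```python
def sketch_device_map(layer_sizes, device_budgets):
    """Greedily assign layers to devices in order, moving on when a device is full.

    layer_sizes: dict of layer name -> size in GB (insertion order = model order)
    device_budgets: dict of device label -> free memory in GB, in priority order
    """
    devices = list(device_budgets.items())
    placement = {}
    index = 0   # position of the device currently being filled
    used = 0.0  # memory already assigned on that device
    for layer, size in layer_sizes.items():
        # Advance to the next device until the layer fits.
        while index < len(devices) and used + size > devices[index][1]:
            index += 1
            used = 0.0
        if index == len(devices):
            raise MemoryError(f"no device has room for {layer}")
        placement[layer] = devices[index][0]
        used += size
    return placement


# Hypothetical numbers: four 1 GB blocks, a GPU with 2.5 GB free, and 8 GB of CPU RAM.
layers = {"block_0": 1.0, "block_1": 1.0, "block_2": 1.0, "block_3": 1.0}
budgets = {"gpu_0": 2.5, "cpu": 8.0}
print(sketch_device_map(layers, budgets))
```

With these numbers, the first two blocks land on the GPU and the remaining two spill over to the CPU. For a model that was actually loaded with device_map="auto", the real placement can usually be inspected through the model's hf_device_map attribute.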

In this run, the entire model was downloaded in “57 seconds”.
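The time taken will vary with the network connection and hardware. To measure it directly, a small timing helper can wrap the call. This is a minimal sketch: the helper name timed, the function demo, the prompt, and the max_new_tokens value are illustrative assumptions, and demo is defined but not called automatically because it downloads the model on first use:

```python
import time


def timed(fn, *args, **kwargs):
    """Call fn(*args, **kwargs) and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


def demo():
    """Time the Step 3 load, then one text-generation call."""
    # Imported lazily so that the timing helper itself works without Transformers.
    from transformers import pipeline

    pipe, load_seconds = timed(pipeline, model="facebook/opt-1.3b", device_map="auto")
    print(f"Loaded in {load_seconds:.1f} s")

    # The prompt and token limit are arbitrary example values.
    outputs, gen_seconds = timed(pipe, "Hello, my name is", max_new_tokens=20)
    print(outputs[0]["generated_text"])
    print(f"Generated in {gen_seconds:.1f} s")
```

Calling demo() in a notebook cell runs the measurement. Later runs are typically faster than the first, because the downloaded weights are cached locally.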

That is all for this guide. A link to the Google Colab notebook for this code is also provided.

Conclusion

To use the Accelerate library, install it using the “!pip install accelerate” command, and pass the name of the large model to the pipeline() function together with device_map="auto". Accelerate then decides how to place the model weights across the available devices, which makes it practical to load huge Machine Learning models. This article is a step-by-step demonstration of how to use pipelines on large models with the Accelerate library in Transformers.