A pipeline in HuggingFace is a high-level interface that simplifies running inference on text inputs. It handles model loading, tokenization, inference, and post-processing, so developers can use pre-trained models for specific tasks without dealing with the intricacies of the underlying model architecture.

This article puts three pipeline tasks into practice: text generation, text classification, and summarization.
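To make the four stages concrete, here is a toy sketch in plain Python. It is not how the Transformers library implements pipelines: the word weights, the function names, and the scoring rule are all invented for illustration. It only shows how a single call can hide loading, tokenization, inference, and post-processing.

```python
import math

# Stage 1 (model loading): a made-up table of word weights stands in for a real model.
TOY_WEIGHTS = {"love": 2.0, "like": 1.0, "hate": -2.0, "not": -1.5}


def toy_tokenize(text):
    """Stage 2: split raw text into lowercase tokens without punctuation."""
    return [word.strip(".,!?") for word in text.lower().split()]


def toy_model(tokens):
    """Stage 3: reduce the tokens to one raw score (a 'logit')."""
    return sum(TOY_WEIGHTS.get(token, 0.0) for token in tokens)


def toy_postprocess(logit):
    """Stage 4: map the raw score to a label and a probability-like score."""
    probability = 1.0 / (1.0 + math.exp(-logit))  # sigmoid
    if probability >= 0.5:
        return {"label": "POSITIVE", "score": probability}
    return {"label": "NEGATIVE", "score": 1.0 - probability}


def toy_pipeline(text):
    """Chain the stages behind one call, as a pipeline object does."""
    return [toy_postprocess(toy_model(toy_tokenize(text)))]


print(toy_pipeline("I love eating mangoes."))
```

A real pipeline replaces each toy stage with a trained tokenizer and model, but the call pattern, text in and a list of dictionaries out, is the same idea.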

Installing the Required Library

To use pipelines, we first install the “Transformers” package in our project. The package is published on PyPI, so it can be installed with the pip command. A pipeline also needs a deep learning backend, such as PyTorch or TensorFlow, to be installed.

The command to install Transformers is as follows:

pip install transformers

Running this command installs the “Transformers” library, which lets us use pipeline inference in our Python program.
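If you want to confirm the installation from Python before going further, a minimal check could look like the sketch below. The helper name installed_version is our own; it relies only on the standard library's importlib.metadata.

```python
from importlib import metadata


def installed_version(package_name):
    """Return the installed version of a package, or None if it is missing."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


version = installed_version("transformers")
if version is None:
    print("transformers is not installed; install it with pip first")
else:
    print(f"transformers {version} is installed")
```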

Now, let’s create some examples that use the pipeline inference in Python.

Example 1: Using the HuggingFace Pipeline Inference for Text Generation in Python

In this example, we generate text using pipeline inference in Python. Each task has its own pipeline class, but the general pipeline() abstraction wraps all of the task-specific pipelines, so we only need to name the task. When no model is given, the pipeline uses the default model for the named task.

Here, the pipeline takes an input prompt and predicts the text that follows it. Let’s create a program to implement this method:

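from transformers import pipeline

# No model is named, so the library falls back to its default for this task.
prediction = pipeline(task="text-generation")

prediction("Living a contented life is")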

First, we import the required function, pipeline, from the “Transformers” library.

After that, we create a pipeline and specify the task identifier for inference. The pipeline is constructed by calling the pipeline() function. We specify the task identifier as “text-generation”, which means that the task of this pipeline is to generate text that continues the input prompt. Finally, we call the pipeline with the input text. The text that we specify here is “Living a contented life is”.

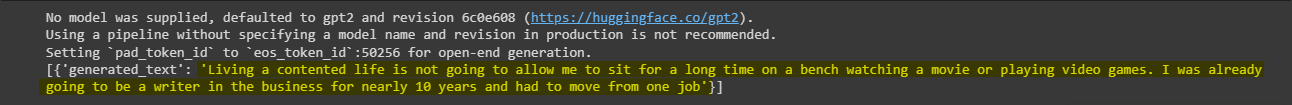

Now, the pipeline takes this prompt and generates some text from it. Let’s execute the code to see the generated output text:

Since we haven’t specified any model for inference, the output shows that the default model is used. Then, we get the generated text following the prompt.

We executed this program with a single input text. Text generation also works with more than one input prompt.

prediction(
    ["Living a contented life is",
     "Success is not permanent. Failure is not fatal."]
)

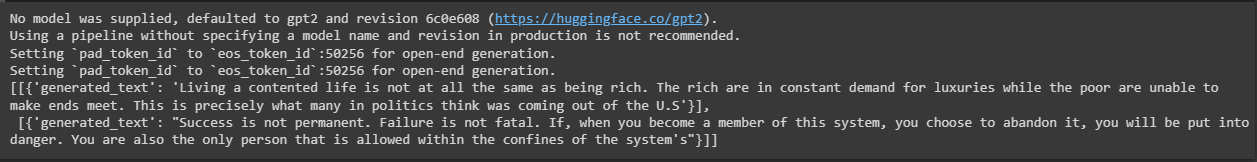

To do this, you pass the input prompts to the pipeline as a list. So, we add a second text string, “Success is not permanent. Failure is not fatal.”, after the previous one, with the two strings separated by a comma.

Running this program produces one generated continuation for each prompt.
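When working with these results in code, it helps to know their shape. As far as we understand the library, a single prompt returns a list of dictionaries with a generated_text key, while a list of prompts returns one such list per prompt. The sketch below flattens both shapes into plain strings. The helper name and the sample data are placeholders written for illustration, not real model output.

```python
def extract_generated_texts(result):
    """Flatten text-generation output into a plain list of strings."""
    texts = []
    for item in result:
        # One prompt gives a list of dicts; several prompts give a list of such lists.
        candidates = item if isinstance(item, list) else [item]
        for candidate in candidates:
            texts.append(candidate["generated_text"])
    return texts


# Placeholder data in the assumed shapes; "<continuation>" marks where model text would be.
single_prompt_result = [
    {"generated_text": "Living a contented life is <continuation>"},
]
batch_result = [
    [{"generated_text": "Living a contented life is <continuation>"}],
    [{"generated_text": "Success is not permanent. Failure is not fatal. <continuation>"}],
]

print(extract_generated_texts(single_prompt_result))
print(extract_generated_texts(batch_result))
```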

Example 2: Using the HuggingFace Pipeline Inference for Text Classification in Python

In this example, the task is text classification with pipeline inference in Python. Text classification is a machine learning approach that predicts the category of the input data from a set of predefined classes. We provide the input text to the pipeline, which analyzes the text and assigns the relevant label to it. Here, we use it for sentiment analysis, where the labels describe sentiment such as positive or negative.

The program to implement this technique is as follows:

from transformers import pipeline

classifier = pipeline(task="text-classification")

classifier("I love eating mangoes.")

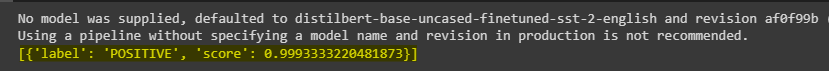

We first import the pipeline function from the “Transformers” library. After that, the pipeline() function is called and the task identifier is provided to it. Since we are classifying text, the task that is used here is “text-classification”. The text that we want to analyze on the basis of sentiment is “I love eating mangoes.” We simply pass this input to the pipeline, which analyzes it and returns a label.

The following snapshot shows us the generated response. The text is labeled as “POSITIVE” with a score of 0.999.

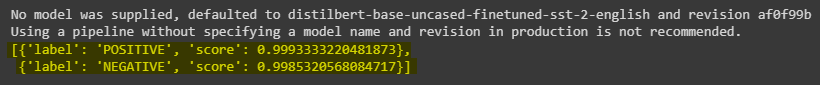

Now, we try it with two inputs. We use the previous one in addition to another text string which is “I do not like papaya.”

classifier(
    ["I love eating mangoes.",
     "I do not like papaya."]
)

Now, the executed program gives us two outputs. The first one is labeled as POSITIVE while the other is labeled as NEGATIVE.

When we execute it with the default model, the classifier only gives us two outcomes: positive or negative sentiment. If we specify a different model for text classification, the set of possible labels changes with it, and some models also include a NEUTRAL label.
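For a list of inputs, the text-classification pipeline returns one dictionary per text, each with a label and a score. A small helper can pair each input with its prediction and optionally drop low-confidence results. This is only a sketch: the helper name and the min_score parameter are our own, and the results list below is placeholder data in that shape, with the second score invented for illustration.

```python
def pair_with_labels(texts, results, min_score=0.0):
    """Pair each input text with its predicted label and score."""
    if len(texts) != len(results):
        raise ValueError("expected one result per input text")
    return [
        (text, result["label"], result["score"])
        for text, result in zip(texts, results)
        if result["score"] >= min_score
    ]


texts = ["I love eating mangoes.", "I do not like papaya."]

# Placeholder results in the assumed output shape. The labels follow the article;
# the second score is invented for illustration.
results = [
    {"label": "POSITIVE", "score": 0.999},
    {"label": "NEGATIVE", "score": 0.99},
]

for text, label, score in pair_with_labels(texts, results):
    print(f"{label:<8} {score:.3f}  {text}")
```

In a real program, results would be the value returned by calling the classifier on texts.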

classifier = pipeline(model="roberta-large-mnli")

classifier(
    ["I love eating mangoes.",
     "I do not like papaya.",
     "We eat fruits."]
)

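Here, we use a model from the Hub, roberta-large-mnli. We don’t need to specify the task identifier when specifying a model, because the pipeline infers the task from the model. We add another text string and then execute the program with three input text strings to be classified. Note that roberta-large-mnli is a natural language inference model: its labels (CONTRADICTION, NEUTRAL, and ENTAILMENT) describe the relationship between a premise and a hypothesis rather than sentiment, so NEUTRAL here should not be read as neutral sentiment.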

The generated response is displayed in the following where you can see that the first two strings are labeled NEUTRAL and the last string is classified as ENTAILMENT:

Example 3: Using the HuggingFace Pipeline Inference for Summarization in Python

In the last example, we generate a summary of the input text. We specify a model which analyzes the provided text and creates a summary of it within the specified minimum and maximum length.

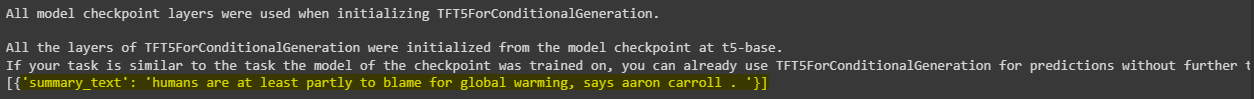

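from transformers import pipeline

# framework="tf" selects the TensorFlow backend, so TensorFlow must be installed.
summary = pipeline("summarization", model="t5-base", tokenizer="t5-base", framework="tf")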

summary("Earth is warming up, and humans are at least partially to blame. The causes, effects, and complexities of global warming are important to understand so that we can fight for the health of our planet.", min_length=5, max_length=22)

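The pipeline function is imported. Then, we create a pipeline with the “summarization” task and t5-base as both the model and the tokenizer. The framework is set to “tf”, which selects TensorFlow. Lastly, the text that needs to be summarized is passed to the pipeline along with min_length and max_length, which bound the length of the summary in tokens.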
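The summarization pipeline returns a list with one dictionary per input, and the summary is stored under the summary_text key. The sketch below wraps that call with a basic check on the length bounds. The summarize helper and the fake_summarizer stand-in are our own inventions: the stand-in only mimics the output shape so that the example runs without downloading a model.

```python
def summarize(summarizer, text, min_length, max_length):
    """Call a summarization pipeline and return the summary string."""
    if not 0 <= min_length < max_length:
        raise ValueError("min_length must be non-negative and below max_length")
    output = summarizer(text, min_length=min_length, max_length=max_length)
    return output[0]["summary_text"]


def fake_summarizer(text, min_length, max_length):
    """Hypothetical stand-in: keeps the first max_length words, not a real model."""
    words = text.split()[:max_length]
    return [{"summary_text": " ".join(words)}]


text = "Earth is warming up, and humans are at least partially to blame."
print(summarize(fake_summarizer, text, min_length=5, max_length=8))
```

With the real pipeline, you would pass the pipeline object created above in place of fake_summarizer.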

The generated summary of the provided input can be seen in the following snapshot:

Conclusion

This article discussed HuggingFace pipeline inference. Pipelines support many tasks; three of them are covered here. In the first example, we used a pipeline for text generation. Then, we used a text classification pipeline to label text. Lastly, we learned to generate a summary of input text using pipeline inference in Python.