Saving to disk involves writing the dataset’s files, metadata, and other necessary information to a specified directory on the computer’s storage. This allows us to persist the dataset’s state so that we can access it later without having to load it from the original source or recreate it from scratch.

Loading from disk, on the other hand, refers to reading the saved dataset files and metadata from the disk into the memory. By doing so, we can quickly access the dataset and its examples without the need for network requests or lengthy processing steps.

A library called “Datasets” facilitates the sharing and access to datasets for applications such as audio, computer vision, and natural language processing (NLP).

With just one line of code, you can load a dataset and use the effective data processing techniques to quickly prepare it to train the Deep Learning models. Processing the large datasets with zero-copy reads without memory constraints is supported by the Apache Arrow format to achieve the fastest performance. In addition, the interaction with the Hugging Face Hub that simplifies the import and distribution of datasets to the larger machine learning community.

Find your dataset now in the Hugging Face Hub and use the live viewer to see it up close.

Hugging Face Dataset Download

Finding high-quality datasets that are accessible and repeatable can be a challenge. One of the main goals of Hugging Face datasets is to provide an easy way to import the datasets of any format and type. Searching for an existing dataset in the Hugging Face Hub, a community-driven collection of datasets for NLP, computer vision, and audio applications, and using the Hugging Face datasets to download and create the dataset are the easiest ways to get started.

Before you go to the trouble of downloading a dataset, it can be useful to quickly learn some background information. The DatasetInfo contains information about a dataset including the details such as the dataset description, characteristics, and size.

This example program presents a detailed explanation of a Python program that demonstrates how to use the Hugging Face Datasets library to download a dataset, save it to disk, and load it back into the memory. The Hugging Face Datasets library provides a convenient way to work with various datasets for natural language processing tasks.

Requirements:

To run the code that is provided in this example, you need the following:

- Python: Make sure that you have Python installed on your system.

- Hugging Face Datasets: Install the Hugging Face Datasets library using the “pip install datasets”.

- Hugging Face Hub: Install the Hugging Face Hub library using the “pip install huggingface_hub”.

Example: We save the following program with the “test.py” name.

# Step 1: Check available datasets

print("Available datasets:")

print(list_datasets())

# Step 2: Download a dataset

dataset_name = "imdb"

print(f"Downloading the '{dataset_name}' dataset...")

dataset = load_dataset(dataset_name)

print("Download complete.")

# Step 3: Save the dataset to disk

save_dir = "saved_dataset"

print(f"Saving the '{dataset_name}' dataset to disk at '{save_dir}'...")

dataset.save_to_disk(save_dir)

print("Saving complete.")

# Step 4: Load the saved dataset from disk

from datasets import load_from_disk

print(f"Loading the '{dataset_name}' dataset from disk...")

loaded_dataset = load_from_disk(save_dir)

print("Loading complete.")

# Step 5: Access the loaded dataset

split_name = "train"

print(f"Accessing the '{split_name}' split of the

'{dataset_name}' dataset...")

print(loaded_dataset[split_name][0])

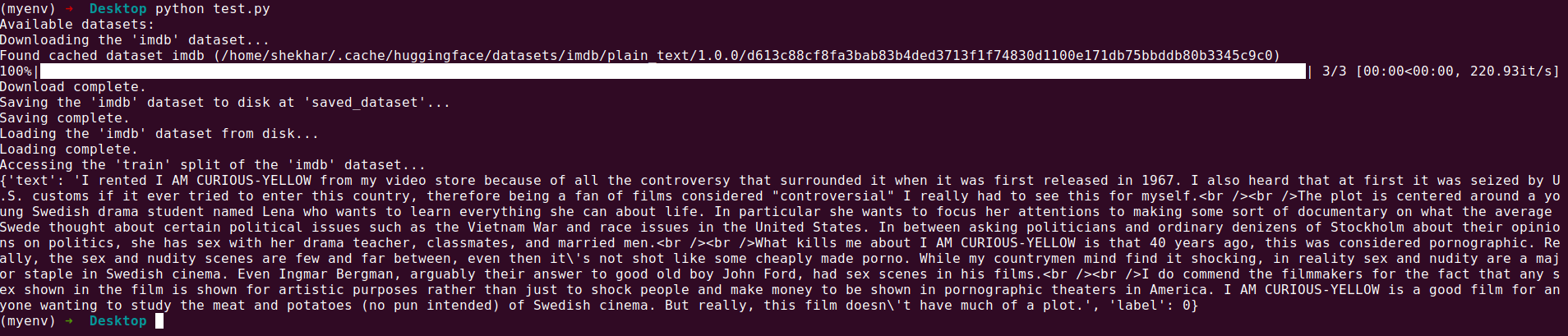

Output:

Code Explanation:

The program follows these main steps:

Check the Available Datasets: We start by listing the available datasets using the list_datasets() function from the huggingface_hub library. This step is optional but provides the users with information about the datasets that they can work with.

Download the Dataset: We choose a dataset to download which, in this case, is the IMDb dataset using the load_dataset() function from the datasets library. This function loads the dataset into the memory as a “DatasetDict” object.

Save the Dataset to Disk: After downloading the dataset, we save it to a specified directory on the disk using the save_to_disk() method. The default save format for the dataset is Apache Arrow (.arrow).

Load the Dataset from Disk: To demonstrate the process of loading a dataset from the disk, we use the load_from_disk() function from the datasets library. This function loads the saved dataset back into the memory as a “DatasetDict” object.

Access the Loaded Dataset: Finally, we access the loaded dataset by specifying the desired split (e.g., “train”) and print the first example from that split.

Conclusion

The provided Python program showcases how to use the Hugging Face Datasets library to download a dataset, save it to disk, and load it back into the memory. This process can be useful for working with large datasets or sharing and collaborating with others.

By following this example, you can easily integrate the Hugging Face Datasets library into your NLP projects, explore various datasets, and leverage the power of Hugging Face’s extensive collection of pre-processed datasets.

Feel free to modify and expand the program to suit your specific needs or integrate it into your own projects to work with different datasets and experiment with various natural language processing tasks. Happy coding!