Syntax

Hugging Face Transformers provides a large collection of pre-trained models. These models can be applied to a wide range of tasks, including the following:

- The models can process text in many languages and perform tasks such as text classification, question answering, translation, and text generation.

- We can also use them for vision tasks such as image classification and object detection, and for speech tasks such as speaker classification or speech recognition.

The Transformers library works with frameworks such as TensorFlow, PyTorch, ONNX, etc. To install the package, we run the “pip install transformers” command.

Next, we work through examples that use models from Hugging Face Transformers for different language processing tasks.

Example 1: Text Generation Using the Hugging Face Transformers

This example shows how to use the transformers for text generation. We load a pre-trained text generation model through the library's “pipeline” API. A pipeline wraps a model and handles the pre-processing and post-processing that are needed to turn the raw input into model input and the model output into a readable result.

We start by installing the “transformers” package from the terminal with “pip install transformers”. Once the package is installed, we import the “pipeline” function from transformers. The pipeline prepares the data before it is fed to the model and converts the model's output into a readable result.

We also import “pprint” from the pprint module. This module is part of the Python standard library, so nothing extra needs to be installed. It prints the output of the text generation model in a more readable, structured form. If we use the “print()” function instead, the output is displayed on a single line, which is harder to read. A text generation model extends the text that we provide as input with additional text.
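As a rough illustration of the difference, the following sketch formats a hand-written list that is shaped like a text generation result (a list of dictionaries with a "generated_text" key). The sample strings are made up for this illustration and are not real model output.

```python
from pprint import pformat

# Hypothetical sample shaped like a text generation result;
# the strings are placeholders, not real model output.
sample_output = [
    {"generated_text": "This is a language model that was written by hand for this demo."},
    {"generated_text": "This is a language model with a second made-up continuation here."},
    {"generated_text": "This is a language model followed by a third placeholder sentence."},
]

# str() is what print() uses: the whole list ends up on one line.
single_line = str(sample_output)

# pformat() returns the string that pprint() would print:
# the list is wrapped so that each dictionary starts on its own line.
pretty = pformat(sample_output)

print(single_line)
print(pretty)
```

Using pformat() instead of pprint() here only makes the formatted string available for inspection; pprint() prints the same text directly.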

To load the pre-trained model, we use the pipeline() function and pass two arguments. The first one specifies the name of the selected task and the second one is the name of the model. In this scenario, the selected task is text generation and the pre-trained model is “gpt2”.

After creating the pipeline, we decide the input for which we want the model to generate additional text. Then, we pass this input to the pipeline object, which is named “task” in the code. The call takes the input text, the maximum length of the output in tokens (max_length), and the number of sequences to generate (num_return_sequences).

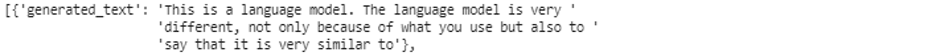

We give the input as “This is a language model”. We set the maximum length of the output to 30 tokens and the number of generated sequences to 3. Then, we call the pprint() function to display the results that are generated by our model.

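```python
from pprint import pprint

from transformers import pipeline

SELECTED_TASK = "text-generation"
MODEL = "gpt2"

# Build a text generation pipeline backed by the pre-trained GPT-2 model
task = pipeline(SELECTED_TASK, model=MODEL)

INPUT = "This is a language model"

# Generate 3 sequences, each at most 30 tokens long (prompt included)
output = task(INPUT, max_length=30, num_return_sequences=3)

pprint(output)
```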

From the code snippet and its output, we can see that the model generates additional text that continues the input that we fed to it.
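The returned value is a list with one dictionary per generated sequence, and each dictionary holds the text under the "generated_text" key. By default, that text starts with the prompt itself. A minimal sketch of pulling out only the newly generated part, using a hand-written sample in place of real model output (the helper name `continuations` is hypothetical):

```python
def continuations(prompt, outputs):
    """Return the text that each sequence adds after the prompt."""
    added = []
    for item in outputs:
        text = item["generated_text"]
        # Drop the prompt when the generated text begins with it.
        if text.startswith(prompt):
            text = text[len(prompt):]
        added.append(text.strip())
    return added


prompt = "This is a language model"

# Hypothetical sample; the strings are placeholders, not real model output.
sample_output = [
    {"generated_text": "This is a language model that is used as a placeholder."},
    {"generated_text": "This is a language model, and so is this made-up line."},
]

for text in continuations(prompt, sample_output):
    print(text)
```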

Example 2: Text Classification Using Pipelines from the Transformers

The previous example showed how to generate additional text for a given input using a pipeline from the transformers package. This example shows how to perform text classification with a pipeline. Text classification is the process of assigning the input that is fed to the model to one of a fixed set of classes, e.g. positive or negative.
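A classification model produces one raw score (logit) per class, and the reported label is the class with the highest probability after those scores are normalized. A minimal sketch of that last step, assuming a two-class model and softmax normalization; the logit values and label names are invented for illustration:

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    # Subtract the maximum for numerical stability.
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def to_prediction(logits, labels):
    """Pick the most probable label and report its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"label": labels[best], "score": probs[best]}


# Hypothetical logits for one sentence; not real model output.
labels = ["NEGATIVE", "POSITIVE"]
prediction = to_prediction([-1.2, 2.3], labels)
print(prediction)
```

The resulting dictionary has the same "label" and "score" shape as the entries that a text classification pipeline returns.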

We first import “pipeline” from transformers. Then, we call the “pipeline()” function and pass the task name, which in our case is “text-classification”, as its first argument. The code also passes a specific model, “textattack/distilbert-base-uncased-CoLA”, through the model parameter. If this parameter is omitted, the default model for the task is used. We name the resulting pipeline “classifier”. At this point, the model is downloaded to our host machine and we can use it for our task.

Next, we import Pandas as “pd” because we want to display the model's output in the form of a DataFrame. We then specify the text that we want the model to classify. We set the text to “i am a good guy”, pass it to the classifier that we just created, and save the results in the “result” variable.

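To display the output, we call “pd.DataFrame()” and pass the output from the classifier to it. The DataFrame shows the predicted label and its score. Our classifier assigns the text to the positive class. Note that the model that is used in the code is fine-tuned on CoLA, a grammatical acceptability dataset, so its classes reflect whether the sentence is judged grammatically acceptable rather than whether its sentiment is positive or negative. To classify the sentiment of a sentence, use a model that is trained for sentiment analysis.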

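```python
from pprint import pprint

import pandas as pd
from transformers import pipeline

# Text classification pipeline with an explicitly chosen model
classifier = pipeline(
    "text-classification",
    model="textattack/distilbert-base-uncased-CoLA",
)

text = "i am a good guy"
result = classifier(text)
pprint(result)

# Show the predicted label and score as a table
df = pd.DataFrame(result)
print(df)
```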
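The classifier returns a list of dictionaries with "label" and "score" keys, which is why it converts directly into a table. A small sketch of that conversion with a hand-written result in place of real model output (the label and score values are placeholders):

```python
import pandas as pd

# Hypothetical classifier result; the values are placeholders.
sample_result = [{"label": "LABEL_1", "score": 0.98}]

# Each dictionary becomes one row and each key becomes a column.
df = pd.DataFrame(sample_result)
print(df)
```

Passing a list of several texts to the classifier would yield one dictionary per text, and therefore one row per text in the DataFrame.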

Conclusion

This guide covered the Hugging Face Transformers library. We discussed its “pipeline” API and, with its help, used pre-trained transformer models for text generation and text classification tasks.