In this tutorial, you will learn to write code that detects faces in images and videos, and that detects motion in a video stream.

To avoid errors caused by missing files, we will download the OpenCV repository from GitHub at https://github.com/opencv/opencv. We will use some of the files in it to complete the code.

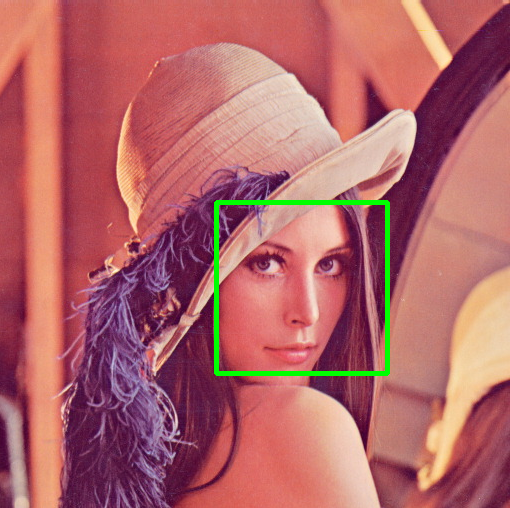

Face Detection using Images

Within the downloaded OpenCV repository, the sub-directory opencv-master\samples\data contains sample pictures and videos to work with. We will be using photos and videos from this directory. In particular, I will be using the lena.jpg file, which I will copy into my PyCharm working directory (in my case, it’s C:\Users\never\PycharmProjects\pythonProject). Now, let’s start face detection on this image.

First, let’s load up the modules we need:

import cv2

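The pre-trained classifier we will be using is located at opencv-master\data\haarcascades\haarcascade_frontalface_default.xml in the repository downloaded from GitHub. We load it by passing the path of the haarcascade file to cv2.CascadeClassifier(), and we will call the resulting object face_cascade.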

Next, we load the photo on which to carry out the face detection using the cv2.imread() method.

Our next goal is to turn the photo into grayscale. This is done using the cv2.cvtColor() method, which takes two arguments. The first argument is the image to be converted (the array returned by cv2.imread(), not the file name), and the second argument is the conversion code. In this case, we will use cv2.COLOR_BGR2GRAY, because cv2.imread() loads images in BGR channel order.

We then use the detectMultiScale() function to detect objects or, in this case, faces. We call it as face_cascade.detectMultiScale(); it detects faces because face_cascade was loaded from the frontal-face haarcascade file. The function takes a few arguments: the image, a scaling factor, the minimum number of neighbors, flags, minimum size, and maximum size. It returns one (x, y, w, h) box for each face it finds.
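A hedged sketch of the call, wrapped in a hypothetical helper named detect_faces. The values 1.1 and 4 for the scaling factor and the minimum number of neighbors are assumptions chosen for illustration, not values taken from this tutorial; tune them for your own images.

```python
def detect_faces(face_cascade, gray, scale_factor=1.1, min_neighbors=4):
    """Return detected faces as a list of (x, y, w, h) tuples of plain ints.

    face_cascade is expected to be a cv2.CascadeClassifier and gray a
    grayscale image; the default parameter values are illustrative only.
    """
    boxes = face_cascade.detectMultiScale(
        gray, scaleFactor=scale_factor, minNeighbors=min_neighbors
    )
    # detectMultiScale yields an empty result when nothing is found,
    # so iterating over it is safe in both cases.
    return [tuple(int(v) for v in box) for box in boxes]
```

A lower scaling factor scans more image sizes and is slower, while a higher minimum number of neighbors tends to reduce false positives at the cost of missing some faces.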

To place a rectangular box around each face, we use the cv2.rectangle() method, which takes a few arguments. The first argument is the image to draw on, the second is the start point of the rectangle, the third is the end point of the rectangle, the fourth is the color of the rectangle, and the fifth is the thickness of the line. Here, x and y are the top-left corner of a detected face, w is its width, and h is its height, so (x+w, y+h) is the bottom-right corner.

cv2.rectangle(image, (x,y), (x+w,y+h), (0,255,0), 3)

Lastly, we show the image using the cv2.imshow() method. We then call cv2.waitKey(0), which waits indefinitely for a key press, and cv2.destroyAllWindows(), which closes the window.

cv2.waitKey(0)

cv2.destroyAllWindows()

Face Detection using Videos/Webcam

In this case, we’re going to detect faces in real-time using a webcam or a video. Once again, we start by importing the required modules.

import cv2

Next, we need to specify the location of the haarcascade file. We do this exactly as we did for the image, by passing its path to cv2.CascadeClassifier().

Now, we need to specify the video we want to work with using the cv2.VideoCapture() method. In my case, I have chosen a video I already had and passed in its file name. If you want to use a webcam, you’d pass 0 instead of the name of the video file.

We then begin a while loop. Inside while True, the program keeps detecting faces until we stop it. First, we read a frame from the video using the read() function, which returns a success flag and the frame itself.

ret, image = video.read()

Just like in the previous section, we need to turn the images or frames to grayscale for ease of detection. We use the cv2.cvtColor() method to change the frames to gray.

To detect the faces, we use the detectMultiScale() function. Once again, it takes the same parameters as in the previous section.

In order to place rectangles around the faces, we use the cv2.rectangle() method. This is similar to the previous section.

cv2.rectangle(image, (x, y), (x+w, y+h), (255, 0, 0), 2)

We then show the frames using the cv2.imshow() method. This method takes two arguments: the first is the name of the window, and the second is the frame to display.

We then add a check: if the user presses the ESC key (key code 27), the code breaks out of the loop.

break

Finally, we release the video using the release() function.

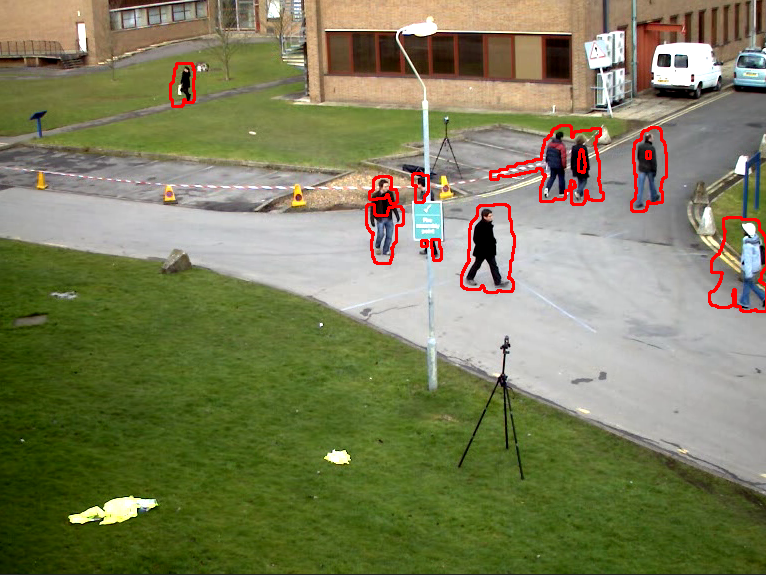

Motion Detection

Motion detection is great! With Python and a good webcam, we can create our own security camera! So, let’s begin.

import cv2

I will be using a video, vtest.avi, from the samples (opencv-master\samples\data) of the GitHub repository.

To detect motion, we rely on the difference in pixel values between two images: a reference image and a second image or frame. So, we read two consecutive frames, frame1 and frame2.

ret, frame1 = video.read()

ret, frame2 = video.read()

We then begin a loop that runs for as long as the video is open, which we check with the isOpened() function.

We first calculate the absolute difference between frame1 and frame2 using the cv2.absdiff() method. It takes two arguments, the first and second frames.

As things are easier in black and white, we will turn the difference into grayscale using the cv2.cvtColor() method. The cv2.cvtColor() method takes two arguments, the first is the frame or image, and the second is the transformation. In this case, we will use cv2.COLOR_BGR2GRAY.

Once the image is in grayscale, we next need to blur the image to remove noise using the cv2.GaussianBlur() method. In Python, cv2.GaussianBlur() takes the source image to blur, the Gaussian kernel size, and the kernel standard deviation along the x-axis, followed by the optional output image, the kernel standard deviation along the y-axis, and the border type. It returns the blurred image.

Next, we apply a threshold using the cv2.threshold() method. This technique will isolate the movement by segmenting the background and the foreground (or movement). The cv2.threshold() method takes four arguments: the image, the threshold value, the max value to use with THRESH_BINARY and THRESH_BINARY_INV, and the thresholding type.

Next, we dilate using the cv2.dilate() method, which takes up to six arguments: the image, the kernel, the anchor, the iterations, the border type, and the border value.

The cv2.findContours() method does exactly what its name says: it finds contours. It takes three arguments: the source image, the retrieval mode, and the contour approximation method.

The cv2.drawContours() method is used to draw the contours. It takes a few arguments: the image, the contours, the contourIdx (this value is negative if all contours are drawn), the color, thickness, line type, hierarchy, max level, and offset.

At last, we show the image using the cv2.imshow() method.

Now, we assign the current frame2 to frame1 and read a new frame from the video, which we store in the frame2 variable.

frame1 = frame2

ret, frame2 = video.read()

If the “q” key is pressed, break out of the loop:

if cv2.waitKey(40) == ord('q'):
    break

video.release()

The code as a whole for motion detection would look something like this:

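import cv2

# Open the sample video; pass 0 instead to use a webcam
video = cv2.VideoCapture("vtest.avi")

# Read the first two frames to compare
ret, frame1 = video.read()
ret, frame2 = video.read()

# Loop until the video is closed or runs out of frames
while video.isOpened() and ret:
    # Pixels that changed between the two frames
    difference = cv2.absdiff(frame1, frame2)
    gray = cv2.cvtColor(difference, cv2.COLOR_BGR2GRAY)
    blur = cv2.GaussianBlur(gray, (5, 5), 0)
    _, threshold = cv2.threshold(blur, 20, 255, cv2.THRESH_BINARY)
    # Grow the white regions so nearby changes merge together
    dilate = cv2.dilate(threshold, None, iterations=3)
    contour, _ = cv2.findContours(dilate, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
    # Outline the moving regions in red on the older frame
    cv2.drawContours(frame1, contour, -1, (0, 0, 255), 2)
    cv2.imshow("image", frame1)

    # Shift the frames along and read the next one
    frame1 = frame2
    ret, frame2 = video.read()

    # Press "q" to quit
    if cv2.waitKey(40) == ord('q'):
        break

video.release()
cv2.destroyAllWindows()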

It’s just that simple! A few lines of code, and we can make our own face detection and motion detection programs. A few additional lines, and we can even get them to talk (say, using pyttsx3) and create our own security cameras!

Happy Coding!