Natural Language Processing (NLP) models cannot operate directly on raw text. The text first has to be converted into a form the model understands. For example, when you perform tokenization, the model you are using comes with its own tokenizer, which splits the input using the specific technique that model was trained with. Such models are trained on huge datasets so that they provide correct outputs, accurate predictions, and useful analysis.

This article provides a step-by-step guide to programmatically downloading Hugging Face models using the Model Hub.

How to Programmatically Download Hugging Face Models Using the Model Hub?

In Hugging Face, the Model Hub hosts models for many different tasks, such as image classification, audio and speech processing, computer vision, multimodal tasks, and text classification. Public models are free to download and use, and community members can contribute to them too.

Members of the Hugging Face community can share their models on the Hub for use, discovery, and simple storage. At the time of writing, the Model Hub hosts around 120K models. The following steps show how to use the Model Hub to download Hugging Face models programmatically:

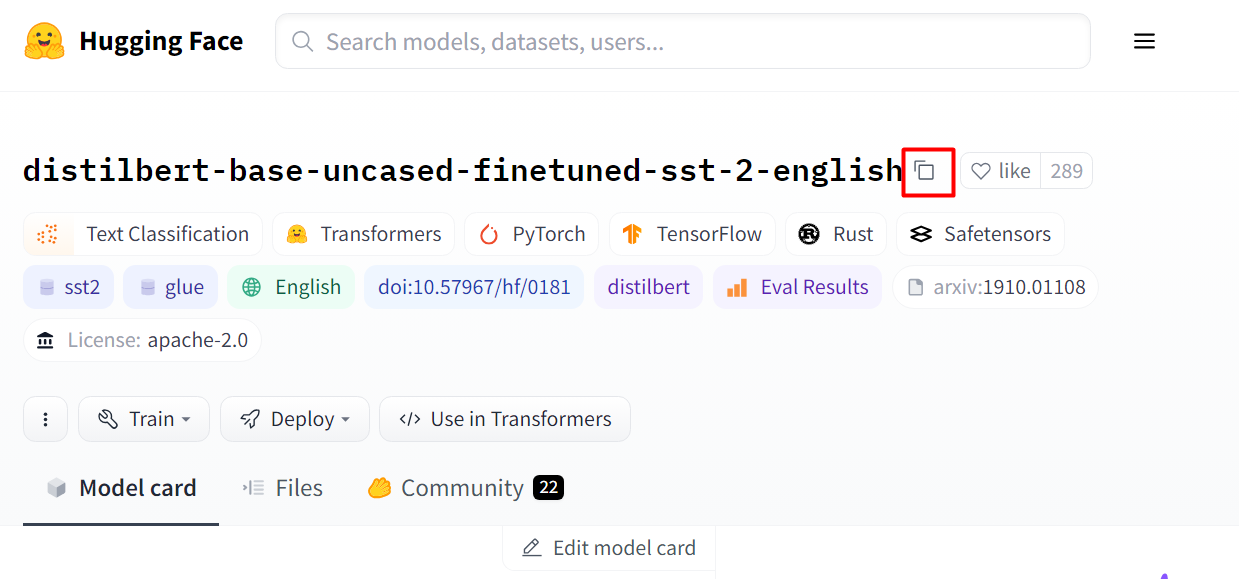

Step 1: Select Model from Hugging Face

Hugging Face provides a public repository, the Model Hub, from which a user can download any model that fits their requirements. Visit the Model Hub and search for the model. Here, we have selected the DistilBERT model “distilbert-base-uncased-finetuned-sst-2-english”, which is a text classification model:

Step 2: Copy the Model Link

After selecting the model, click on the “Copy” button as highlighted below to copy the link of the model, that is, the model name that identifies it on the Hub:

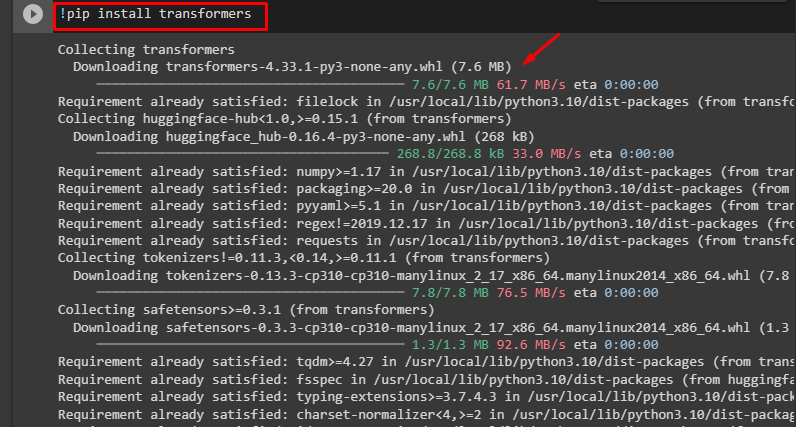
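Depending on where you copy from, you may end up with either the bare model name or the full page URL from the browser's address bar. The sketch below is not part of the original notebook; it shows one possible way to normalize both forms into the name that is later passed to pipeline(). The helper name to_repo_id and the list of URL suffixes it strips are assumptions made for illustration only.

```python
from urllib.parse import urlparse

# Host of the Model Hub website.
HUB_HOST = "huggingface.co"

# Path segments that follow the model name on sub-pages (assumed list, not exhaustive).
SUBPAGE_MARKERS = ("tree", "blob", "resolve", "discussions")


def to_repo_id(copied: str) -> str:
    """Turn a copied model name or Model Hub URL into a bare model identifier.

    Hypothetical helper for illustration; it is not part of any Hugging Face library.
    """
    text = copied.strip()
    if "://" not in text:
        # Already a bare identifier such as "name" or "org/name".
        return text.strip("/")

    parsed = urlparse(text)
    if parsed.netloc != HUB_HOST:
        raise ValueError(f"not a Model Hub URL: {copied!r}")

    parts = [p for p in parsed.path.split("/") if p]
    if parts and parts[0] in ("datasets", "spaces"):
        raise ValueError(f"URL points to a dataset or Space, not a model: {copied!r}")

    # Drop trailing sub-page segments such as "/tree/main".
    for marker in SUBPAGE_MARKERS:
        if marker in parts[1:]:
            parts = parts[: parts.index(marker, 1)]

    if not 1 <= len(parts) <= 2:
        raise ValueError(f"cannot find a model name in: {copied!r}")
    return "/".join(parts)


print(to_repo_id("https://huggingface.co/distilbert-base-uncased-finetuned-sst-2-english"))
```

If the “Copy” button already gave you the bare name, the function returns it unchanged, so it is safe to call in either case.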

Step 3: Install Transformers

We need the “transformers” library to import the pipeline() function. To install the “transformers” library, execute the following command:

pip install transformers

To learn more about the pipeline() function, refer to this article: “How to Utilize the pipeline() Function in Transformers?”.

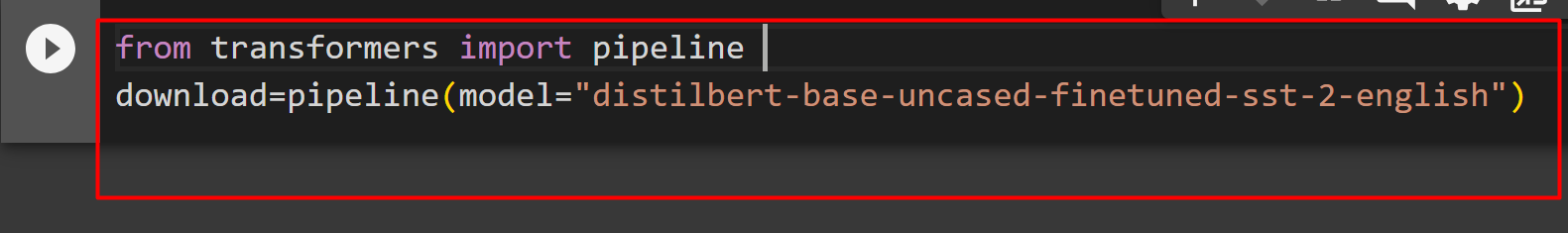
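Before moving on, it can help to confirm that the installation actually succeeded in the environment you are running in. The following is a minimal sketch that uses only Python's standard library; the helper name installed_version is an assumption for illustration and does not come from the original notebook.

```python
from importlib import metadata


def installed_version(package: str):
    """Return the installed version of a package, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


version = installed_version("transformers")
if version is None:
    print("transformers is not installed; run: pip install transformers")
else:
    print(f"transformers {version} is installed")
```

The check does not import the library itself, so it runs quickly even when the package is large.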

Step 4: Download Model

To download a model, we will import the pipeline() function from the “transformers” library. After that, we will pass the model name that we copied earlier to the pipeline() function so that it downloads that specific model:

from transformers import pipeline
download = pipeline(model="distilbert-base-uncased-finetuned-sst-2-english")

The first call takes some time because the model files have to be downloaded:

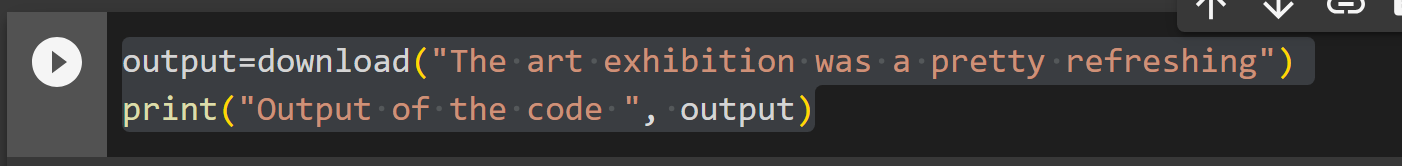
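The downloaded files are kept in a local cache, so later calls with the same model name do not download them again. The sketch below shows one way to check where a model would be cached. It assumes the default cache layout used by the huggingface_hub package (a “hub” folder under ~/.cache/huggingface, with one “models--...” folder per model) and only honors the HF_HUB_CACHE and HF_HOME environment variables; other overrides are ignored. The function names are hypothetical.

```python
import os
from pathlib import Path
from typing import Optional


def default_hub_cache() -> Path:
    """Return the assumed default cache folder for Hub downloads."""
    # HF_HUB_CACHE points directly at the cache; HF_HOME points at its parent.
    if os.environ.get("HF_HUB_CACHE"):
        return Path(os.environ["HF_HUB_CACHE"]).expanduser()
    hf_home = os.environ.get("HF_HOME") or os.path.join("~", ".cache", "huggingface")
    return Path(hf_home).expanduser() / "hub"


def cached_model_dir(model_name: str, cache_dir: Optional[Path] = None) -> Path:
    """Return the folder in which a model is expected to be cached."""
    base = cache_dir if cache_dir is not None else default_hub_cache()
    # Each model gets a folder named "models--<org>--<name>"; "/" becomes "--".
    return base / ("models--" + model_name.replace("/", "--"))


def is_cached(model_name: str, cache_dir: Optional[Path] = None) -> bool:
    """Report whether a cache folder exists for the model.

    An existing folder does not prove the download completed; this is a quick check only.
    """
    return cached_model_dir(model_name, cache_dir).is_dir()


model_name = "distilbert-base-uncased-finetuned-sst-2-english"
print(cached_model_dir(model_name))
print("cached:", is_cached(model_name))
```

If you only want the files and do not need a ready-to-use pipeline, the snapshot_download() function from the huggingface_hub package downloads a model repository into the same kind of cache.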

Step 5: Verification

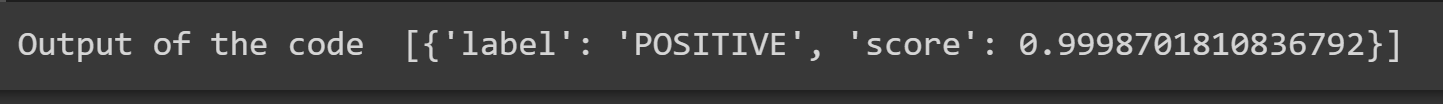

After the model is downloaded, we can test its functionality by using the following code:

output = download("I really enjoyed this movie!")  # example input; any English sentence works
print("Output of the code ", output)

The output of the above code is as follows:

This indicates that the model has been downloaded successfully and can be used for predictions.
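The same check can be automated instead of reading the printed output by eye. The sketch below assumes that the pipeline returns what text classification pipelines in “transformers” normally produce: a list of dictionaries, each with a “label” string and a “score” between 0 and 1. The function name is hypothetical, and the sample value uses made-up numbers rather than a real model result.

```python
def looks_like_classification_output(output) -> bool:
    """Check that a value has the assumed shape of a text classification result:
    a non-empty list of dicts with a string "label" and a float "score" in [0, 1]."""
    if not isinstance(output, list) or not output:
        return False
    for item in output:
        if not isinstance(item, dict):
            return False
        label, score = item.get("label"), item.get("score")
        if not isinstance(label, str):
            return False
        if not isinstance(score, float) or not 0.0 <= score <= 1.0:
            return False
    return True


# Stand-in result with made-up numbers, so the check can be tried without downloading anything.
sample = [{"label": "POSITIVE", "score": 0.99}]
print(looks_like_classification_output(sample))
```

In the notebook, you would pass the real result of the pipeline call to this function instead of the stand-in value.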

For the Google Colab notebook containing the above code, refer to this link.

Conclusion

To download models in Hugging Face, copy the name of the model from the Model Hub by clicking on the “Copy” button and then provide that name to the pipeline() function. Any model that the “transformers” library supports can be downloaded through the pipeline() function by specifying the name of the model. This article has provided a step-by-step guide on how to programmatically download Hugging Face models using the Model Hub.