The “datasets” library from Hugging Face provides a convenient way to work with and manipulate the datasets for natural language processing tasks. One useful function that is offered by the library is the concatenate_datasets() which allows you to concatenate multiple datasets into a single dataset. The following is a brief overview of the concatenate_datasets() function and how to use it.

concatenate_datasets()

Description:

Hugging Face’s “datasets” library provides the concatenate_datasets() function. It is used to concatenate multiple datasets, combining them into a single dataset along a specified axis. This function is particularly useful when you have multiple datasets that share the same structure and you want to merge them into a unified dataset for further processing and analysis.

Syntax:

concatenated_dataset = concatenate_datasets(datasets, axis=0, info=None)

Parameters:

datasets (list of Dataset): A list of datasets that you want to concatenate. These datasets should have compatible features which means that they have the same schema, column names, and data types.

axis (int, optional, default=0): The axis along which the concatenation should be performed. For most NLP datasets, the default value of 0 is used which means that the datasets are concatenated vertically. If you set the axis=1, the datasets are concatenated horizontally, assuming that they have different columns as features.

info (datasets.DatasetInfo, optional): The information about the concatenated dataset. If not provided, the info is inferred from the first dataset in the list.

Returns:

concatenated_dataset (Dataset): The resulting dataset after concatenating all the input datasets.

Example:

# You can install it using pip:

# !pip install datasets

# Step 2: Import required libraries

from datasets import load_dataset, concatenate_datasets

# Step 3: Load the IMDb movie review datasets

# We'll use two IMDb datasets, one for positive reviews

#and another for negative reviews.

# Load 2500 positive reviews

dataset_pos = load_dataset("imdb", split="train[:2500]")

# Load 2500 negative reviews

dataset_neg = load_dataset("imdb", split="train[-2500:]")

# Step 4: Concatenate the datasets

# We concatenate both datasets along axis=0, as they have

the same schema (same features).

concatenated_dataset = concatenate_datasets([dataset_pos, dataset_neg])

# Step 5: Analyze the concatenated dataset

# For simplicity, let's count the number of positive and negative

# reviews in the concatenated dataset.

num_positive_reviews = sum(1 for label in

concatenated_dataset["label"] if label == 1)

num_negative_reviews = sum(1 for label in

concatenated_dataset["label"] if label == 0)

# Step 6: Display the results

print("Number of positive reviews:", num_positive_reviews)

print("Number of negative reviews:", num_negative_reviews)

# Step 7: Print a few example reviews from the concatenated dataset

print("\nSome example reviews:")

for i in range(5):

print(f"Review {i + 1}: {concatenated_dataset['text'][i]}")

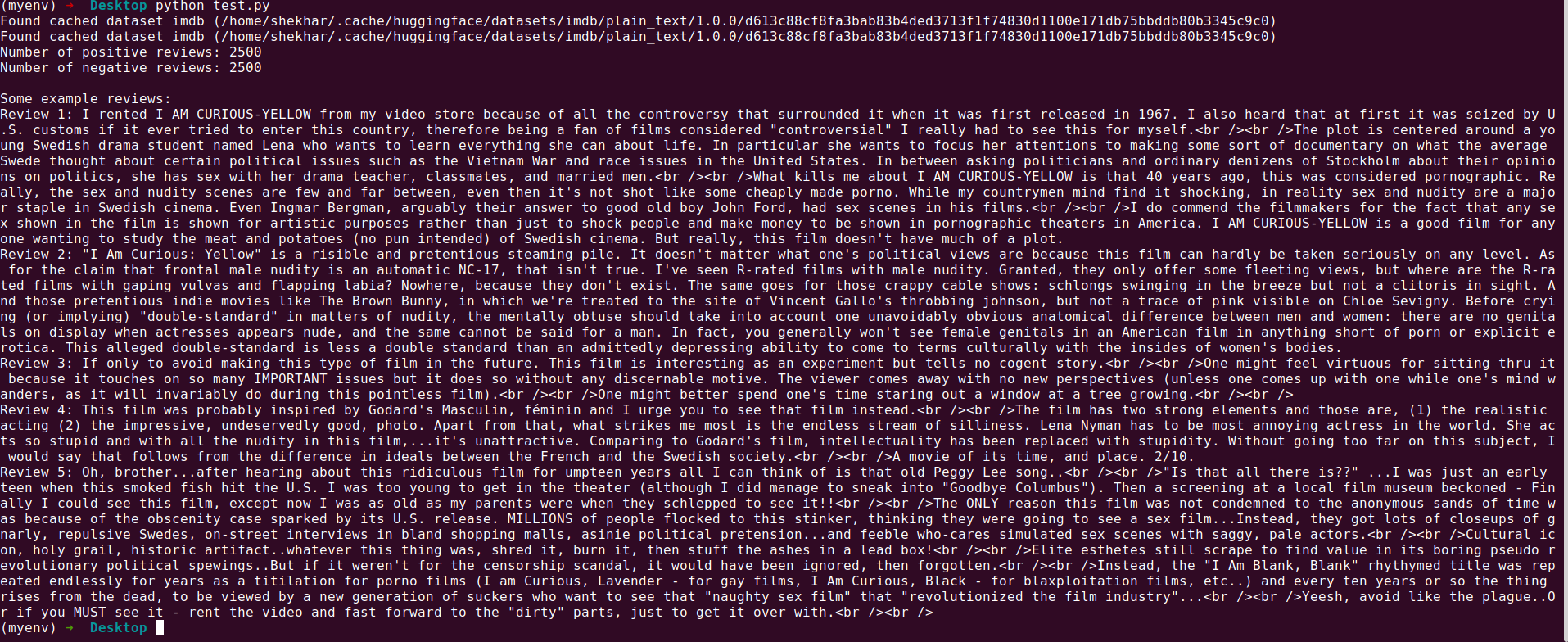

Output:

The following is the explanation for the Hugging Face’s “datasets” library program that concatenates two IMDb movie review datasets. This explains the purpose of the program, its usage, and the steps involved in the code.

Let’s provide a more detailed explanation of each step in the code:

from datasets import load_dataset, concatenate_datasets

In this step, we import the necessary libraries for the program. We need the “load_dataset” function to load the IMDb movie review datasets, and the “concatenate_datasets” to concatenate them later.

# Load 2500 positive reviews

dataset_pos = load_dataset("imdb", split="train[:2500]")

# Load 2500 negative reviews

dataset_neg = load_dataset("imdb", split="train[-2500:]")

Here, we use the “load_dataset” function to fetch two subsets of the IMDb dataset. The “dataset_pos” holds 2500 positive reviews and the “dataset_neg” contains 2500 negative reviews. We use the split parameter to specify the range of examples to load which allows us to select a subset of the entire dataset.

concatenated_dataset = concatenate_datasets([dataset_pos, dataset_neg])

In this step, we concatenate the two subsets of the IMDb dataset into a single dataset called “concatenated_dataset”. We use the “concatenate_datasets” function and pass it with a list that contains the two datasets to concatenate. Since both datasets have the same features, we concatenate them along axis=0 which means that the rows are stacked on top of each other.

num_positive_reviews = sum(1 for label in

concatenated_dataset["label"] if label == 1)

num_negative_reviews = sum(1 for label in

concatenated_dataset["label"] if label == 0)

Here, we perform a simple analysis of the concatenated dataset. We use the list comprehensions along with the “sum” function to count the number of positive and negative reviews. We iterate through the “label” column of the “concatenated_dataset” and increment the counts whenever we encounter a positive label (1) or a negative label (0).

print("Number of positive reviews:", num_positive_reviews)

print("Number of negative reviews:", num_negative_reviews)

In this step, we print the results of our analysis – the number of positive and negative reviews in the concatenated dataset.

print("\nSome example reviews:")

for i in range(5):

print(f"Review {i + 1}: {concatenated_dataset['text'][i]}")

Finally, we showcase a few example reviews from the concatenated dataset. We loop through the first five examples in the dataset and print their text content using the “text” column.

This code demonstrates a straightforward example of using the Hugging Face’s “datasets” library to load, concatenate, and analyze the IMDb movie review datasets. It highlights the library’s ability to streamline the NLP dataset handling and showcases its potential for building more sophisticated natural language processing models and applications.

Conclusion

The Python program that uses Hugging Face’s “datasets” library successfully demonstrates the concatenation of two IMDb movie review datasets. By loading the subsets of positive and negative reviews, the program combines them into a single dataset using the concatenate_datasets() function. It then does a simple analysis by counting the number of positive and negative reviews in the combined dataset.

The “datasets” library simplifies the process of handling and manipulating the NLP datasets, making it a powerful tool for researchers, developers, and NLP practitioners. With its user-friendly interface and extensive functionalities, the library enables an effortless data preprocessing, exploration, and transformation. The program that is showcased in this documentation serves as a practical example of how the library can be leveraged to streamline the data concatenation and analysis tasks.

In real-life scenarios, this program can serve as a foundation for a more complex natural language processing tasks such as sentiment analysis, text classification, and language modeling. Using the “datasets” library, researchers and developers can efficiently manage the large-scale datasets, facilitate experimentation, and accelerate the development of state-of-the-art NLP models. Overall, the Hugging Face “datasets” library stands as an essential asset in the pursuit of advancements in natural language processing and understanding.