Method 1: Building JSON Schemas with Pydantic (Step-by-Step Example)

In modern software development, ensuring data integrity and consistency is important. JSON (JavaScript Object Notation) has become a universal format for exchanging and storing data because of its simplicity and versatility. As data grows more complex, however, keeping it well structured becomes harder. This is where Pydantic, a Python library, helps: it lets developers declare data structures as Python classes and validates incoming data against them, which catches malformed input early and improves the overall reliability of an application.

Consider a web application that accepts user registrations. Each registration contains fields such as username, email, and date of birth. Checking that incoming data matches the expected format is important both for rejecting invalid input and for protecting the application's security. Pydantic helps here: we define a data model as a Python class, and that model serves as the schema for our JSON data. Let's walk through a step-by-step example of building JSON schemas with Pydantic.

Before we define the data model, we need to install the Pydantic library with pip, the Python package manager:

```bash
pip install pydantic
```

Once it is installed, we import `BaseModel`, the base class for every Pydantic model:

```python
from pydantic import BaseModel
```

To create a Pydantic model, let's return to the user registration example. A Pydantic model is a Python class that inherits from `BaseModel`, and each annotated class attribute corresponds to a field in our JSON data.

```python
class UserRegistration(BaseModel):
    username: str
    email: str
    date_of_birth: str
```

In this example, we’ve defined a simple UserRegistration model with three fields: username, email, and date_of_birth.
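Since this method is about JSON schemas, it helps to see that the model can also export a standard JSON Schema document. A minimal sketch, assuming Pydantic v2, where `model_json_schema()` returns the schema as a dict (Pydantic v1 used `UserRegistration.schema()` instead):

```python
import json

from pydantic import BaseModel


class UserRegistration(BaseModel):
    username: str
    email: str
    date_of_birth: str


# Pydantic v2: build the JSON Schema for the model as a plain Python dict
schema = UserRegistration.model_json_schema()

# Pretty-print it; this document can be shared with tools outside Python
print(json.dumps(schema, indent=2))
```

Every field appears under `required` because none of them has a default value.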

Pydantic models validate data automatically. To validate JSON data against the defined schema, we create an instance of the model and pass the JSON data, parsed into a dictionary, as keyword arguments:

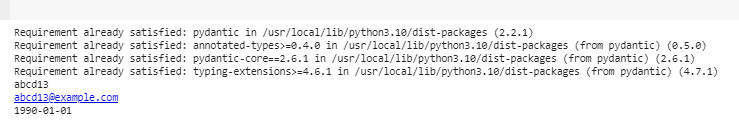

"username": "abcd13",

"email": "[email protected]",

"date_of_birth": "1990-01-01"

}

user = UserRegistration(**data)

If the data doesn't match the defined schema, Pydantic raises a `ValidationError` that lists each failing field and the reason it failed.
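To see this in practice, here is a minimal sketch, assuming Pydantic v2, that catches the error and prints one entry per failing field. The `bad_data` dictionary is hypothetical input invented for illustration:

```python
from pydantic import BaseModel, ValidationError


class UserRegistration(BaseModel):
    username: str
    email: str
    date_of_birth: str


# Hypothetical bad input: "username" is not a string and "email" is missing
bad_data = {"username": 123, "date_of_birth": "1990-01-01"}

errors = []
try:
    UserRegistration(**bad_data)
except ValidationError as exc:
    # errors() returns one dict per problem, with the field location and error type
    errors = exc.errors()
    for error in errors:
        print(error["loc"], error["type"])
```

Pydantic v2 does not convert an integer into a string field by default, so `username` fails alongside the missing `email`.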

Once the data has been validated successfully, we can read the values through the model instance's attributes:

```python
print(user.username)
print(user.email)
print(user.date_of_birth)
```
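The validated instance can also be converted back into a dictionary or a JSON string, which is useful when storing the registration or returning it from an API. A minimal sketch, assuming Pydantic v2 method names (`model_dump()` and `model_dump_json()`; v1 used `.dict()` and `.json()`), with a hypothetical email address:

```python
from pydantic import BaseModel


class UserRegistration(BaseModel):
    username: str
    email: str
    date_of_birth: str


user = UserRegistration(
    username="abcd13",
    email="abcd13@example.com",  # hypothetical address
    date_of_birth="1990-01-01",
)

as_dict = user.model_dump()       # plain Python dict
as_json = user.model_dump_json()  # JSON string

print(as_dict)
print(as_json)

# The JSON string can be validated back into an equal model
restored = UserRegistration.model_validate_json(as_json)
print(restored == user)  # True
```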

Full source code for copy:

```python
# Install Pydantic first: run `pip install pydantic` in a terminal,
# or `!pip install pydantic` in a Jupyter/Colab notebook cell
from pydantic import BaseModel

class UserRegistration(BaseModel):
    username: str
    email: str
    date_of_birth: str

data = {
    "username": "abcd13",
    "email": "[email protected]",
    "date_of_birth": "1990-01-01"
}

user = UserRegistration(**data)

print(user.username)
print(user.email)
print(user.date_of_birth)
```

Beyond basic validation, Pydantic offers a range of advanced features for handling more complex scenarios. We describe them below.

Data Transformation and Parsing

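Pydantic lets us attach custom validation and parsing logic to individual fields. For instance, the `@field_validator` decorator (Pydantic v2) lets us transform a field's value during validation. By default, the validator runs after Pydantic's own type checking, so it receives a value that is already a `str`.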

```python
from pydantic import BaseModel, field_validator

class UserRegistration(BaseModel):
    username: str
    email: str
    date_of_birth: str

    # Runs after Pydantic has confirmed that the value is a str
    @field_validator("email")
    @classmethod
    def validate_email(cls, value):
        # Normalize the address to lowercase
        return value.lower()
```

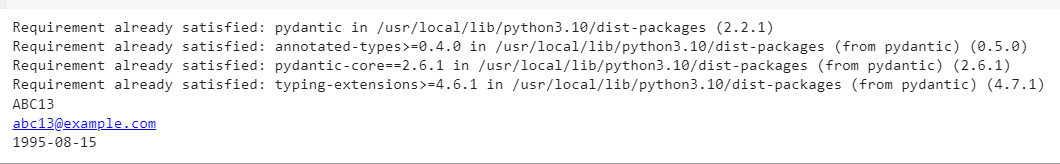

```python
data = {
    "username": "ABC13",
    "email": "[email protected]",
    "date_of_birth": "1995-08-15"
}

user = UserRegistration(**data)

print(user.username)
print(user.email)
print(user.date_of_birth)
```

In this example, the `validate_email` method runs after Pydantic has checked that the email is a string and returns it in lowercase, so `user.email` always holds the normalized value.
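If we need to change the raw input before Pydantic checks its type, we can pass `mode="before"` to `@field_validator`. The sketch below, assuming Pydantic v2, strips surrounding whitespace from the username; the `strip_username` validator is a hypothetical addition, not part of the original example:

```python
from pydantic import BaseModel, field_validator


class UserRegistration(BaseModel):
    username: str
    email: str
    date_of_birth: str

    # mode="before" receives the raw input, before the str type check runs
    @field_validator("username", mode="before")
    @classmethod
    def strip_username(cls, value):
        # Only strip strings; other types pass through and fail the str check
        if isinstance(value, str):
            return value.strip()
        return value


user = UserRegistration(
    username="  ABC13  ",
    email="abc13@example.com",  # hypothetical address
    date_of_birth="1995-08-15",
)
print(repr(user.username))  # 'ABC13'
```

Because a `before` validator can receive any input type, it checks for `str` first and leaves other values for the normal type check to reject.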

Nested Models

JSON data often contains nested structures, such as an object inside another object. Pydantic supports nested models: we use one model as the type of a field in another, which makes complex structures easy to describe.

```python
from pydantic import BaseModel

class Coordinates(BaseModel):
    latitude: float
    longitude: float

class DetailedWeatherForecast(BaseModel):
    location: str
    temperature: float
    conditions: str
    coordinates: Coordinates
```

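By defining a nested `Coordinates` model and using it as the type of the `coordinates` field in `DetailedWeatherForecast`, we can validate nested JSON objects: the inner object is checked against `Coordinates` as part of validating the forecast.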
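A minimal sketch of validating nested data, assuming Pydantic v2: a plain nested dictionary is converted into a `Coordinates` instance, and errors inside it report their full path. The forecast values are hypothetical sample data:

```python
from pydantic import BaseModel, ValidationError


class Coordinates(BaseModel):
    latitude: float
    longitude: float


class DetailedWeatherForecast(BaseModel):
    location: str
    temperature: float
    conditions: str
    coordinates: Coordinates


# Hypothetical sample data; the inner dict is validated as a Coordinates model
data = {
    "location": "Addis Ababa",
    "temperature": 22.0,
    "conditions": "sunny",
    "coordinates": {"latitude": 9.03, "longitude": 38.74},
}

forecast = DetailedWeatherForecast(**data)
print(forecast.coordinates.latitude)        # 9.03
print(type(forecast.coordinates).__name__)  # Coordinates

# An invalid nested value is reported with its full location
bad = {**data, "coordinates": {"latitude": "north", "longitude": 38.74}}
error_loc = None
try:
    DetailedWeatherForecast(**bad)
except ValidationError as exc:
    error_loc = exc.errors()[0]["loc"]
print(error_loc)  # ('coordinates', 'latitude')
```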

Default Values

Pydantic also lets us define default values for fields in our models.

```python
from pydantic import BaseModel

class UserRegistration(BaseModel):
    username: str
    email: str
    date_of_birth: str = "1990-01-01"
```

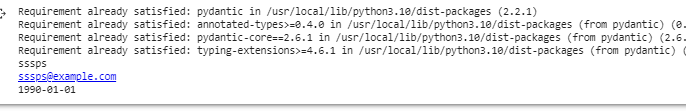

```python
data = {
    "username": "sssps",
    "email": "[email protected]",
}

user = UserRegistration(**data)

print(user.username)
print(user.email)
print(user.date_of_birth)
```

In this example, if the date_of_birth field is missing from the input data, it will default to “1990-01-01”.
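Defaults are also handy for optional data. A minimal sketch, assuming Pydantic v2: `nickname` and `interests` are hypothetical fields added for illustration, `Optional[str] = None` makes a field optional, and `Field(default_factory=list)` calls `list()` to build a new list for each instance:

```python
from typing import List, Optional

from pydantic import BaseModel, Field


class UserRegistration(BaseModel):
    username: str
    email: str
    date_of_birth: str = "1990-01-01"
    # Hypothetical optional fields, added for illustration only
    nickname: Optional[str] = None
    interests: List[str] = Field(default_factory=list)


first = UserRegistration(username="sssps", email="sssps@example.com")
second = UserRegistration(username="other", email="other@example.com")

# Each instance gets its own list from default_factory
first.interests.append("hiking")

print(first.nickname)       # None
print(first.date_of_birth)  # 1990-01-01
print(first.interests)      # ['hiking']
print(second.interests)     # []
```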

In modern software development, where data quality and consistency are essential, Pydantic is a powerful tool. By offering a simple, declarative way to define and validate data structures that map to JSON schemas, it helps developers build more dependable and maintainable systems. Its features cover everything from basic validation to complex nested structures, which makes it a versatile addition to any Python developer's toolkit.

Method 2: Constructing JSON Schemas for Real-Life Applications

Imagine we want to create a weather app that fetches and displays data from various sources. To keep that data manageable, we need a consistent structure for everything that comes in. Pydantic lets us define clear data structures that enforce this consistency.

To do this, we rely on Pydantic's ability to define data models that double as schemas for our JSON data.

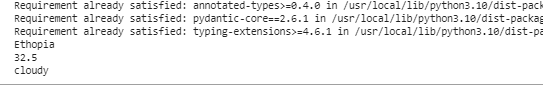

To begin, we install the Pydantic library with pip, the tool that fetches Python packages, by running the following command:

```bash
pip install pydantic
```

Then we import `BaseModel`:

```python
from pydantic import BaseModel
```

For designing a model, let’s consider the weather app example. We want to structure data for each weather forecast. To achieve this, we will create a Pydantic model. Think of this model as a layout for the data’s structure.

```python
class WeatherForecast(BaseModel):
    location: str
    temperature: float
    conditions: str
```

In this case, we have built a WeatherForecast model detailing the expected fields like “location,” “temperature,” and “conditions.”

Pydantic rocks when it comes to data validation. To ensure incoming data adheres to the model’s structure, we will create an instance of the pre-defined WeatherForecast model and provide the JSON data as input.

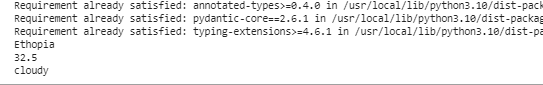

"location": "Ethopia",

"temperature": 32.5,

"conditions": "cloudy"

}

forecast = WeatherForecast(**data)

If the data doesn't match the model's structure, Pydantic raises a `ValidationError` that reports which fields are missing or have the wrong type.
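Pydantic's default (lax) mode also converts compatible values instead of rejecting them. The sketch below, assuming Pydantic v2, shows a numeric string being accepted as a float, while a non-numeric string and a missing field are both reported. The input values are hypothetical:

```python
from pydantic import BaseModel, ValidationError


class WeatherForecast(BaseModel):
    location: str
    temperature: float
    conditions: str


# A numeric string is coerced to float in the default (lax) mode
coerced = WeatherForecast(location="Ethiopia", temperature="32.5", conditions="cloudy")
print(coerced.temperature)  # 32.5

# A non-numeric string and a missing field are both reported
error_types = []
try:
    WeatherForecast(location="Ethiopia", temperature="hot")
except ValidationError as exc:
    error_types = [(e["loc"], e["type"]) for e in exc.errors()]
print(error_types)
```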

After successful validation, we can easily access the validated data through the model instance’s attributes.

```python
print(forecast.temperature)
print(forecast.conditions)
```

Custom Validation and Data Transformation

Pydantic also lets us define custom validation logic with decorators. A field validator can check a value against our own rules or transform it, going beyond the built-in type checks.

```python
from pydantic import BaseModel, field_validator

class WeatherForecast(BaseModel):
    location: str
    temperature: float
    conditions: str

    # Reject readings outside a plausible range for this app
    @field_validator("temperature")
    @classmethod
    def validate_temperature(cls, value):
        if value < -30 or value > 50:
            # Pydantic wraps this ValueError in a ValidationError
            raise ValueError("Temperature out of range")
        return value
```

```python
data = {
    "location": "Ethiopia",
    "temperature": 32.5,
    "conditions": "cloudy"
}

forecast = WeatherForecast(**data)

print(forecast.location)
print(forecast.temperature)
print(forecast.conditions)
```

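In this example, the `validate_temperature` method checks that the temperature lies within a plausible range. Values below -30 or above 50 raise a `ValueError`, which Pydantic reports as a `ValidationError`.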
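For simple bounds like these, Pydantic also offers a declarative alternative to a custom validator: numeric constraints on `Field`. This is a different technique from the decorator above, shown as a minimal sketch assuming Pydantic v2. `ge` and `le` are inclusive bounds, so -30 and 50 are accepted, just as in `validate_temperature`:

```python
from pydantic import BaseModel, Field, ValidationError


class WeatherForecast(BaseModel):
    location: str
    # Inclusive bounds: -30 <= temperature <= 50
    temperature: float = Field(ge=-30, le=50)
    conditions: str


ok = WeatherForecast(location="Ethiopia", temperature=32.5, conditions="cloudy")
print(ok.temperature)  # 32.5

failed = False
try:
    # Hypothetical out-of-range reading
    WeatherForecast(location="Ethiopia", temperature=75.0, conditions="sunny")
except ValidationError as exc:
    failed = True
    print(exc.errors()[0]["type"])  # less_than_equal
```

Constraints on `Field` also appear in the model's generated JSON Schema, whereas the logic inside a custom validator does not.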

Handling Nested Data

Real-world data often exists in complex structures. Pydantic handles this effortlessly by supporting nested models.

```python
from pydantic import BaseModel

class Address(BaseModel):
    street: str
    city: str
    state: str
    zip_code: str

class UserProfile(BaseModel):
    name: str
    email: str
    address: Address
```

By nesting the `Address` model inside `UserProfile`, we can validate complex data structures without extra code: the `address` object is checked against `Address` automatically.
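In practice the data often arrives as a raw JSON string, for example the body of an HTTP request. A minimal sketch, assuming Pydantic v2, where `model_validate_json()` parses and validates in one step and `model_dump()` turns the result, nested model included, back into plain dictionaries. The profile values are hypothetical:

```python
from pydantic import BaseModel


class Address(BaseModel):
    street: str
    city: str
    state: str
    zip_code: str


class UserProfile(BaseModel):
    name: str
    email: str
    address: Address


# Hypothetical raw JSON, such as the body of an HTTP request
raw = """
{
  "name": "Jane Doe",
  "email": "jane@example.com",
  "address": {"street": "1 Main St", "city": "Springfield", "state": "IL", "zip_code": "62701"}
}
"""

# Parse the JSON text and validate it against UserProfile (and Address) in one call
profile = UserProfile.model_validate_json(raw)
print(profile.address.city)             # Springfield

# Dump back to plain Python data; the nested model becomes a nested dict
print(profile.model_dump()["address"])
```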

Conclusion

In software development, Pydantic stands out as a powerful Python library. By letting developers define, validate, and manipulate data structures that correspond to JSON schemas, it turns loosely structured input into well-defined, validated objects. From basic validation to complex nested structures, Pydantic helps keep our data organized, consistent, and reliable, which makes it a valuable companion for future projects.