This article is written in everyday language for readers with no previous experience of AI, so that anyone can gain a solid understanding of its mechanisms and features.

It describes the algorithms behind AI in simple terms, without requiring programming knowledge or the solving of mathematical formulas.

Diagrams are included to make the structure of artificial neural networks easier to understand.

After reading it, readers will not only know the mechanisms behind AI but will also be prepared to go deeper into more technical aspects.

Easy-to-Understand Introduction to Neural Networks

Most modern AI (Artificial Intelligence) systems are built on an artificial neural network, which loosely imitates a biological neural network. In other words, such a system is a network of interconnected artificial neurons.

The artificial neuron is the main component of an artificial neural network. It can be defined as a programming concept, a set of mathematical operations, or, concretely, a piece of code (part of the larger program that is the neural network). Neurons, and the network they form, have the following capabilities:

1. INPUT

It is the ability to receive data or information (input). The initial data enters the network through the neurons of the first layer, which is known as the input layer. Neural networks include three layer types: an input layer, through which the initial data is received; one or more hidden layers, where the calculations described below take place; and an output layer, which delivers the final result and from which feedback is sent back to the previous hidden layers.

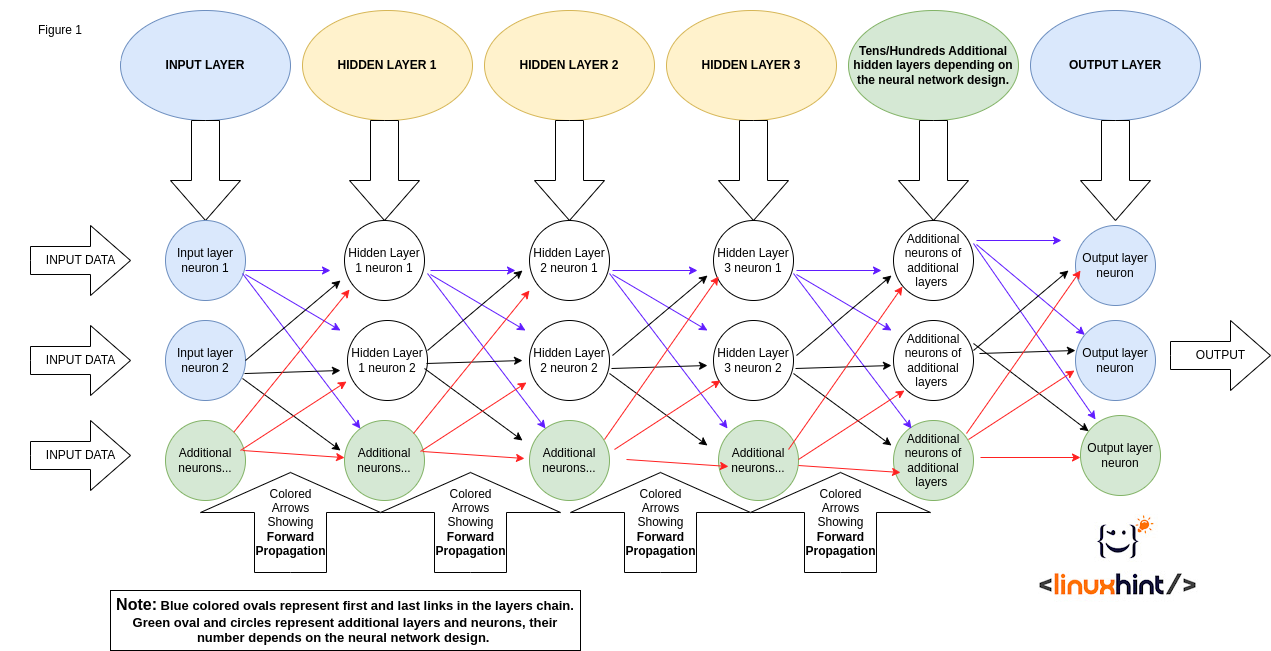

All layers can contain any number of neurons depending on the neural network design (see the structure of a neural network in Figure 1).
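For readers who would like to see this structure in code, the following optional Python sketch builds the connections between layers. The layer sizes, the `build_network` name, and the random starting values are assumptions chosen only for illustration; they are not taken from any particular AI system.

```python
import random


def build_network(layer_sizes, seed=0):
    """Create one set of connections for each pair of adjacent layers."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        # One row of weights per receiving neuron, one weight per sending neuron.
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append({"weights": weights, "biases": biases})
    return layers


# Hypothetical design: 3 input neurons, two hidden layers of 4 neurons, 2 output neurons.
network = build_network([3, 4, 4, 2])
for i, layer in enumerate(network):
    senders = len(layer["weights"][0])
    receivers = len(layer["weights"])
    print(f"connection {i}: {senders} neurons -> {receivers} neurons")
```

The input layer itself holds no weights in this sketch; it only passes the initial data on, so a design with four layers has three sets of connections.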

2. ATTENTION

It is the ability to identify how much importance each part of the received data (the input parts) deserves for an optimal calculation, by focusing on the relevant parts of the data and giving little or no weight to the irrelevant ones.

Not every neural network has this ability; it depends on the network design. In networks that include it, the process is known as attention.
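As an optional illustration, the sketch below turns a relevance score for each word of a request into attention weights that add up to 1. The scores are hand-picked assumptions; in a real network they are calculated from learned values rather than written by hand, and this is only one simplified way of picturing attention.

```python
import math


def softmax(scores):
    """Turn raw relevance scores into positive weights that sum to 1."""
    largest = max(scores)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical relevance scores for each word of a request.
words = ["please", "tell", "me", "about", "raising", "dogs"]
scores = [0.1, 0.3, 0.1, 0.2, 2.0, 2.5]

attention_weights = softmax(scores)
for word, weight in zip(words, attention_weights):
    print(f"{word:8s} {weight:.2f}")
```

Words with higher scores receive most of the weight, while the others keep a small share instead of being removed completely.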

3. SYNAPTIC WEIGHT

It is the ability to recognize how much each interaction between neurons contributes to the result.

In other words, the neural network can measure the influence that specific neuron interactions have on the final result compared with the influence of other neuron interactions.

To reach a result or complete a task, the interaction between some neurons matters more than the interaction between others. Some neuron combinations contribute more than others, but all of them take part in the result, whatever their level of participation.

The level of importance or influence that a neural connection has on the result is known as its synaptic weight.

In practice, a synaptic weight is a number attached to the connection between two specific neurons that scales the signal passing through it. The larger the weight, the more that connection influences the output. The percentages used below are a simplification to illustrate this idea.

For example, the work done by neuron X interacting with neuron Y may produce 30% of the result, while the work done by neuron W in connection with neuron Z contributes 10% of the result.

Although neurons X and Y contribute a larger share when working together than the interconnected neurons W and Z, both connections complement each other and contribute to the same task.

Thus, a stronger synaptic weight does not replace a weaker one. The synaptic weight just represents the participation level of different neuron interactions in reaching a common goal.

For example, in a soccer match, a player named Ronaldo passes the ball to a player named Neymar, who passes it to Mbappé. Mbappé then passes the ball to Messi, who finally scores a goal against the opponent. Let’s say that the pass from Mbappé to Messi, followed by the goal, was the biggest contribution to the success. The contribution of the first two players, although less remarkable, was still part of the result. The interaction between Mbappé and Messi does not replace the interactions of Neymar and Ronaldo; they complement each other as part of the same team working toward a common goal.

The same happens with neuron interactions. Some interactions are more relevant than others, but as part of the same neural network, all of them participate to a greater or lesser degree (their synaptic weight).

This is important to understand because some information sources on AI suggest that stronger synaptic weights replace the weaker ones.
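The idea that every connection adds its own share can be sketched in a few optional lines of Python. The neuron names, input values, and weights below are invented for illustration. Real weights are learned numbers that can also be negative, so they do not always translate neatly into percentages.

```python
# Hypothetical signals arriving from four sending neurons, and the
# synaptic weights of the connections that carry them.
inputs = {"X": 1.0, "Y": 0.5, "W": 0.8, "Z": 0.2}
weights = {"X": 0.6, "Y": 0.4, "W": 0.25, "Z": 0.5}

# Each connection contributes its signal multiplied by its weight.
contributions = {name: inputs[name] * weights[name] for name in inputs}
total = sum(contributions.values())

for name, value in contributions.items():
    print(f"{name}: {value:.2f} ({value / total:.0%} of the weighted sum)")
print(f"weighted sum: {total:.2f}")
```

The larger contributions do not cancel the smaller ones: all four values are added together to form the signal that the receiving neuron works with.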

4. BIAS

The term BIAS is normally applied to a tendency that prevents AI from being fully objective or fully independent. Bias is a factor that affects AI decision making by steering it in a specific direction, independently of the synaptic weights, attention, or other AI characteristics. Inside a single neuron, it takes the form of a number added to the neuron’s calculation, which shifts the result regardless of the input.

We can think about bias either as a tendency that pulls the AI away from a fully objective conclusion, or as a useful way to adjust the AI for a specific task.

For example, if the AI was trained with non-neutral data or with too little data, the partiality or scarcity of the data may compromise the neutrality of the neural network and lead it to wrong conclusions.

On the other hand, bias can also be introduced deliberately to obtain a desired orientation for a specific task. It can be used as a method to adjust a neural network. For example, introducing bias can be useful for detecting malicious data.
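In neural network code, the bias inside a neuron is usually a single number added to the weighted sum of its inputs. The optional sketch below shows how that number shifts the result in a fixed direction without changing the inputs or the weights. The values and the `weighted_sum` name are assumptions made for illustration.

```python
def weighted_sum(inputs, weights, bias):
    """Weighted sum of the inputs plus a constant bias term."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


inputs = [0.5, 0.2]
weights = [0.4, 0.6]

without_bias = weighted_sum(inputs, weights, bias=0.0)
with_bias = weighted_sum(inputs, weights, bias=-0.5)

print(f"without bias: {without_bias:.2f}")
print(f"with bias:    {with_bias:.2f}")
```

With the same inputs and weights, a negative bias pushes the result down, which makes the neuron harder to activate; a positive bias would do the opposite.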

5. ACTIVATION

In neural networks, ACTIVATION is the outcome of a calculation that determines whether a neuron contributes to the required task. Activation means the incorporation of the neuron into the neural network task.

When a neuron receives data (input), it carries out a calculation before it can participate in the task: it combines the received inputs according to their synaptic weights, adds its bias, and evaluates how strong the resulting signal is. If the calculation concludes that the neuron’s contribution (output) would not be useful to the task given to the network, the neuron remains deactivated and left out of the task. If the calculation concludes that the output is a useful contribution within the network, the neuron is ACTIVATED and sends its output to the next layer of neurons.

In other words, one neuron can be useful for task X while other neurons are useful for task Y. If the neural network is required to complete task X, the neurons suited to it will be activated, while the neurons that are not useful for that task will remain deactivated.

While the synaptic weight explained previously measures the contribution of interconnected neurons, the activation represents a positive decision to include the neuron in the task.

It is important to clarify that activation is not the process of deciding whether the neuron participates, but the decision itself when it is positive (if it is negative, the neuron is deactivated).

The decision on neuron activation is based on a calculation which uses the attention, the synaptic weights, and the bias as factors. Depending on these factors, some neurons are activated and others are not.

The activation of a neuron is followed by a process known as forward propagation, or the forward pass, which consists of interaction with the neurons of the next layer. If the neuron is deactivated, it does not send signals to the next layer; it produces no propagation.
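The activation decision can be sketched in optional Python code. This example assumes a common activation rule called ReLU, which lets a positive signal through and turns a negative one into zero; other rules exist, and the input values, weights, and bias here are invented for illustration.

```python
def relu(value):
    """Pass positive signals through unchanged; replace negative ones with zero."""
    return max(0.0, value)


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias, followed by the activation rule."""
    signal = sum(x * w for x, w in zip(inputs, weights)) + bias
    return relu(signal)


inputs = [1.0, 0.5]

# Same inputs, two hypothetical neurons with different synaptic weights.
active = neuron_output(inputs, weights=[0.8, 0.4], bias=-0.3)
inactive = neuron_output(inputs, weights=[-0.6, 0.2], bias=-0.3)

print(f"first neuron output:  {active:.2f}")
print(f"second neuron output: {inactive:.2f}")
```

The first neuron produces a positive output and passes it on, while the second produces zero and therefore sends nothing useful to the next layer.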

6. FORWARD PROPAGATION

It is the delivery of the data processed by an activated neuron to the neurons located in the next layer.

Forward propagation establishes the connection between the activated neurons of one layer and the neurons of the next layer in the neural network chain. Thus, when a calculation results in a neuron activation, it triggers forward propagation from that neuron.

Therefore, forward propagation is both a consequence of neuron activation in one layer and a cause of neuron activation in the next.
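The following optional Python sketch passes data through two layers, one after the other, so that the outputs of one layer become the inputs of the next. The weights, the biases, and the use of the ReLU activation rule in every layer are assumptions chosen only to keep the example small.

```python
def relu(value):
    return max(0.0, value)


def layer_forward(inputs, weights, biases):
    """Compute the output of every neuron in one layer."""
    return [
        relu(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the data through the layers in order."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.2], [0.3, 0.8]], [0.0, -0.1]),  # hidden layer
    ([[1.0, 0.5]], [0.2]),                     # output layer
]

output = forward([1.0, 2.0], layers)
print(output)
```

Each pass through the loop is one step of forward propagation: a layer finishes its calculation and hands its results to the layer after it.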

7. BACK PROPAGATION

Back propagation is a learning algorithm in which feedback travels from the neurons of the output layer to the neurons of the previous layers in the neural network chain. For example, the neurons in the output layer send feedback to the neurons in the preceding hidden layers, and this feedback, which reflects how far the result was from the expected one, is what allows the AI to learn and improve.

In order to learn and improve, the neural network identifies patterns in the feedback. These patterns help the AI to incorporate useful information and improve its parameters, such as the synaptic weights and biases.

The algorithm used together with back propagation to improve the synaptic weights and biases by reducing their errors is known as Gradient Descent.

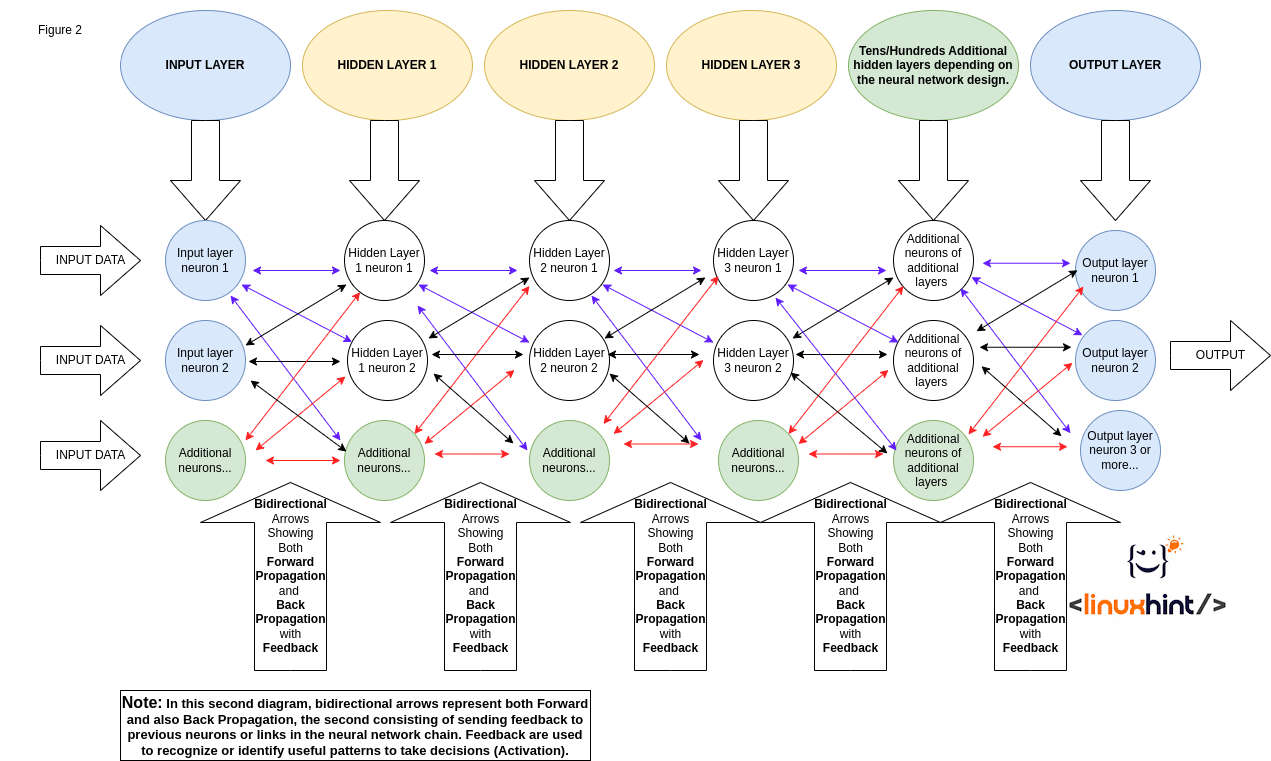

The previous diagram (Figure 1) shows the structure of a neural network, with colored arrows that represent forward propagation. In Figure 2, the colored arrows are bidirectional, which also shows back propagation running from the output layer to the previous hidden layers.
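The learning process described in this section can be sketched with a single neuron in optional Python code. The neuron makes a prediction, the error is used as feedback, and the weight and bias are nudged in the direction that reduces the error. The training samples, the learning rate, and the number of repetitions are assumptions chosen so that the example settles quickly.

```python
def train(samples, learning_rate=0.1, epochs=200):
    """Fit one weight and one bias with gradient descent on the squared error."""
    weight, bias = 0.0, 0.0
    for _ in range(epochs):
        for x, target in samples:
            prediction = weight * x + bias   # forward propagation
            error = prediction - target      # feedback from the output
            # Slopes of the loss 0.5 * error**2 with respect to each parameter.
            grad_weight = error * x
            grad_bias = error
            # Step against the slope to reduce the error.
            weight -= learning_rate * grad_weight
            bias -= learning_rate * grad_bias
    return weight, bias


# Hypothetical data following the rule: target = 2 * x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
weight, bias = train(samples)
print(f"learned weight: {weight:.3f}, learned bias: {bias:.3f}")
```

The neuron is never told the rule behind the data. It approaches a weight close to 2 and a bias close to 1 only by repeatedly correcting its own mistakes.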

Summary of Neural Network Concepts

- Input: The information that is received by a neuron or layer (it can be the output of another neuron or layer). For the sending neuron(s), it’s an output. For the receiving one(s), it’s an input.

- Input Layer: The first layer which contains the neurons that receive the initial input data. Different neurons in this layer are useful for different input types.

- Synaptic Weight: It is the relevance or importance level of the connection between neurons in reaching the result. This helps the AI to learn which neuron interactions make a more important contribution to a specific task. For example, it allows the AI to learn that, for the requested task, the interconnected neurons A and B contribute a larger part of the result than the interconnected neurons C and D.

- Bias: A tendency that affects objective decisions in the neural network by steering it in a specific direction unrelated to its objective conclusions. This can decrease the AI’s performance or can be useful to adjust it to specific needs. It can be an undesired or a deliberately implemented limit on the AI’s independence.

- Attention: This is the capability to focus on important data and ignore the irrelevant data.

- Activation: It is when a neuron takes part in the task requested of the neural network. Some neurons are useful for specific tasks while others are useful for different tasks. If a neuron is useful for the given task, it gets activated and becomes an active part of the work. If it is not useful, it remains deactivated and unused for the task.

- Hidden Layers: The layers between the input and output layers, whose neurons make the calculations and decisions.

- Forward Propagation: The delivery of data from the neurons of one layer to the neurons of the next layer.

- Output: The data that is sent from one neuron to another, or from the output layer to the final recipient. For the sending neurons, the data is the output, while for the receiving neurons, the same data is the input.

- Output Layer: The final layer in the neural network, from which the final output is sent to its destination and from which back propagation begins.

- Back Propagation: The delivery of feedback from neurons back to the neurons of the previous layers in the neural network chain, which allows them to learn and improve by recognizing patterns in the feedback.

- Gradient Descent: The algorithm used in the back propagation process to improve the synaptic weights and biases for better performance. It is a learning mechanism that works by measuring the mistakes made by the neural network and steering it in a more accurate direction by adjusting the synaptic weights and biases.

Simplified Practical Example on How the Neural Networks Work

For example, let’s say we ask an AI for information about raising dogs and cats. The request itself is the INPUT that we enter into the neural network through the INPUT LAYER, which contains the input neurons.

Then, the request is passed to the first HIDDEN LAYER where, depending on the neural network design, the AI starts applying the ATTENTION algorithm. This allows it to focus on the information relevant to the task given in the INPUT (providing information on raising dogs and cats), for instance by focusing on the words “dogs”, “cats”, and “raising”.

In this simple example, the BIAS may be thought of as the limit given to the neural network that tells the ATTENTION algorithm to stay within information about dogs and cats.

The AI can also identify which neuron interconnections contribute a larger or smaller share of the desired result (the answer to the question about raising dogs and cats) by weighing their influence on the final answer. This measure of how much each neuron interaction participates in the answer is the SYNAPTIC WEIGHT.

Through the calculations that lead to neuron ACTIVATION, with the ATTENTION, the BIAS, and the SYNAPTIC WEIGHTS as mathematical factors, some neurons get ACTIVATED. These are the neurons involved in the task, and they produce the FORWARD PROPAGATION to the neurons of the next layer.

In this example, when the algorithms take the attention, bias, and synaptic weights into account for this input, they are likely to activate the neurons related to raising dogs and cats. These neurons propagate the request forward to further neurons, which in turn activates more neurons that are also related to dogs and cats.

After the hidden layers complete the task, their neurons send the answer to the OUTPUT LAYER. This is the layer that delivers the answer to the user who asked the question about cats and dogs.

Finally, based on the answer provided by the AI in the OUTPUT LAYER, the BACK PROPAGATION begins by sending feedback to the previous layers of the neural network. These layers can detect patterns in the feedback, and the AI learns and improves by using those patterns as corrections. This learning mechanism triggers new calculations that modify the synaptic weights, making the network more accurate. For example, the feedback sent in the back propagation can update the network’s assessment of the synaptic weight of a group of neurons or of the relevance of the bias.
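The whole cycle of this example (input, hidden layer, output, and feedback) can be sketched for a very small network in optional Python code. Everything in it is an assumption made for illustration: the two input numbers stand in for a real request, the target value stands in for the desired answer, and the starting weights are arbitrary. The hidden neurons use a smooth activation rule called sigmoid.

```python
import math


def sigmoid(value):
    return 1.0 / (1.0 + math.exp(-value))


def forward(x, params):
    """Forward propagation: input -> hidden layer -> single output neuron."""
    hidden = [
        sigmoid(sum(xi * w for xi, w in zip(x, row)) + b)
        for row, b in zip(params["w_hidden"], params["b_hidden"])
    ]
    output = sum(h * w for h, w in zip(hidden, params["w_out"])) + params["b_out"]
    return hidden, output


def backprop_step(x, target, params, learning_rate=0.1):
    """One round of feedback: measure the error, then adjust weights and biases."""
    hidden, output = forward(x, params)
    error = output - target
    # Feedback for each hidden neuron, computed before the output weights change.
    hidden_deltas = [error * w * h * (1.0 - h) for w, h in zip(params["w_out"], hidden)]
    # Adjust the output layer.
    params["w_out"] = [w - learning_rate * error * h for w, h in zip(params["w_out"], hidden)]
    params["b_out"] -= learning_rate * error
    # Adjust the hidden layer.
    for j, delta in enumerate(hidden_deltas):
        params["w_hidden"][j] = [
            w - learning_rate * delta * xi for w, xi in zip(params["w_hidden"][j], x)
        ]
        params["b_hidden"][j] -= learning_rate * delta
    return 0.5 * error ** 2  # loss measured before this adjustment


params = {
    "w_hidden": [[0.5, -0.4], [0.3, 0.8]],
    "b_hidden": [0.0, 0.0],
    "w_out": [0.7, -0.2],
    "b_out": 0.0,
}
x, target = [1.0, 0.5], 1.0

losses = [backprop_step(x, target, params) for _ in range(20)]
print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

Each repetition sends the same input forward, compares the answer with the target, and sends the correction backward, so the measured error becomes smaller over the repetitions.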

Conclusion

As you can see after reading this overview of artificial intelligence and neural networks, the mechanisms behind this technology are very complex, but not impossible to understand.

While this document intentionally excluded all mathematical formulas behind the algorithms, the most relevant and representative algorithms were described.

If you have read this content in full, you probably understand how AI works and how the algorithms behind it work, and you are ready to explore this technology further.

The complexity described here lets us appreciate the almost unlimited possibilities of AI’s arrival in our lives, and its consequences, which are still unpredictable.

To end this work, we want to thank you for reading this article, which was written carefully so that everyone can understand how the neural networks behind artificial intelligence work.