In this article, we discuss Ingress and how to set it up in Kubernetes. If you are new here and want to learn about the Ingress concept in Kubernetes, you are at the right place. Please review our previous Kubernetes-related content for a better understanding. Ingress is an object that gives us access to Kubernetes services from outside the Kubernetes cluster. We break the Ingress setup into separate steps and explain each one in detail with the help of examples and screenshots.

What Is Ingress in Kubernetes?

Ingress in Kubernetes is implemented as an Ingress resource, which is a set of rules for how incoming traffic should be forwarded to the services within a cluster. An Ingress resource does nothing on its own: one or more Ingress controllers running in the cluster read it and carry out the rules that it specifies. In practice, Ingress is the Kubernetes resource that lets us configure HTTP and HTTPS routing and load balancing for our application.

Why Do We Use Ingress in Kubernetes?

In this section, we discuss why Ingress is used in Kubernetes. With Ingress, traffic from the Internet can be forwarded to one or more services in your cluster. Multiple services can also be exposed through the same external IP address. This is helpful when you offer several services that are components of a bigger application, or different versions of the same service. Because Ingress is built as a Kubernetes resource, it can be handled like any other resource in the cluster. This includes the ability to create, edit, and delete Ingress resources through the Kubernetes API, as well as the ability to declare the intended state of Ingress in configuration files.
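To make the idea of several services behind one entry point concrete, here is a minimal Python sketch of how host and path rules could select a backend service. It is a simplified model of prefix-style matching, not the code of any real Ingress controller, and the hosts and service names are hypothetical.

```python
from typing import Optional

# Hypothetical rules: (host, path prefix, backend Service name).
RULES = [
    ("shop.example.com", "/api", "api-service"),
    ("shop.example.com", "/", "web-service"),
    ("blog.example.com", "/", "blog-service"),
]


def prefix_matches(prefix: str, path: str) -> bool:
    """Match whole path elements, so /api matches /api/v1 but not /apiary."""
    if prefix == "/":
        return True
    prefix = prefix.rstrip("/")
    return path == prefix or path.startswith(prefix + "/")


def route(host: str, path: str) -> Optional[str]:
    """Return the backend with the longest matching prefix for this host."""
    best_service = None
    best_length = -1
    for rule_host, prefix, service in RULES:
        if rule_host != host:
            continue
        if prefix_matches(prefix, path) and len(prefix) > best_length:
            best_service, best_length = service, len(prefix)
    return best_service


for host, path in [
    ("shop.example.com", "/api/orders"),
    ("shop.example.com", "/cart"),
    ("blog.example.com", "/post/1"),
]:
    print(host, path, "->", route(host, path))
```

All three requests could arrive at the same external IP address. Only the host name and the path decide which service receives them.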

Prerequisites:

The latest version of Ubuntu must be installed on your system. To run Linux or Ubuntu on Windows, you must install VirtualBox. You need a 64-bit operating system. You should also be familiar with Kubernetes clusters and the kubectl command-line tool.

Here, we start the process, which we divide into different steps to keep it concise and easy to follow. Let's explore Ingress in the following sections of this article.

Step 1: Launch the Kubernetes Cluster on Your Local Machine

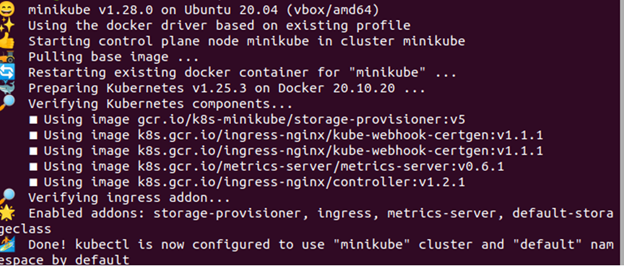

In this step, we run the command that launches the Kubernetes cluster on our system once the prerequisites are installed. We start minikube first. The command is shown in the screenshot above.

Upon the command execution, a local minikube Kubernetes cluster is running on the system. In this cluster, we set up Ingress in the next steps.
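The exact command from the screenshot is not reproduced in the text. As a sketch, assuming minikube is installed and on the PATH, the usual way to start and check a local cluster is `minikube start` followed by `minikube status`. The Python helper names below are hypothetical. They run the commands only when the executable is found.

```python
import shutil
import subprocess


def minikube_command(*args):
    """Build a minikube command line as a list of arguments."""
    return ["minikube", *args]


def run_if_installed(command):
    """Run the command only if its executable is on PATH; return whether it ran."""
    if shutil.which(command[0]) is None:
        print("not installed, would run:", " ".join(command))
        return False
    subprocess.run(command, check=True)
    return True


if __name__ == "__main__":
    run_if_installed(minikube_command("start"))   # create or resume the local cluster
    run_if_installed(minikube_command("status"))  # report the cluster state
```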

Step 2: Install the YAML File of the Nginx Ingress Controller in Kubernetes

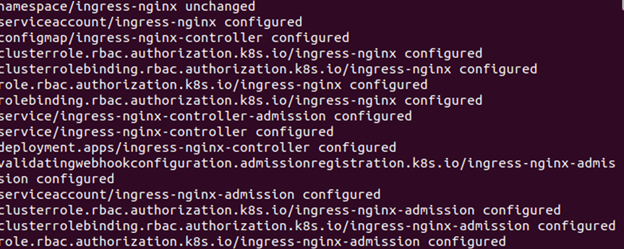

In this step, we learn how to install the Nginx controller in Kubernetes. We create the files for the deployment and the service in our Kubernetes application. The deployment ensures that the desired number of replicas of our application is running, and the service gives the application a stable and reliable network endpoint. The command that deploys the Nginx Ingress controller in the cluster is shown in the screenshot above.

When the command is executed, the output that appears is attached as a screenshot. Here, we can see that the namespace is ingress-nginx, and the service account is created and configured. After that, the ConfigMap named ingress-nginx-controller is also configured. Along with this, the cluster role, the cluster role binding, and further objects of the Ingress controller are configured successfully in our Kubernetes cluster.

Step 3: Create an Ingress Resource in Kubernetes

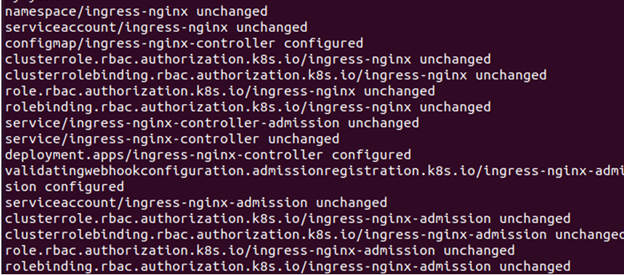

In this step, we create a new Ingress resource in Kubernetes. We write a YAML file that describes the Ingress resource and apply it with kubectl. The command is shown in the screenshot above.

When the command is executed, its output is shown in the previous screenshot. Read the output carefully. Here, we create the Ingress resource, and we start a service through which we deploy the Nginx Ingress on a Kubernetes cluster.
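The manifest itself is only visible in the screenshot, so the following is a minimal sketch of what an Ingress resource for the `networking.k8s.io/v1` API can look like. It is built as a Python dictionary and printed as JSON, which kubectl accepts in the same way as YAML. The resource name, host, service name, and port are hypothetical. The `nginx` ingress class is an assumption about how the controller was installed.

```python
import json


def build_ingress(name, host, service_name, service_port, path="/"):
    """Return a networking.k8s.io/v1 Ingress manifest as a plain dict."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # Assumption: the Nginx controller registered the class "nginx".
            "ingressClassName": "nginx",
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": path,
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {
                                        "name": service_name,
                                        "port": {"number": service_port},
                                    }
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


# Hypothetical values, for illustration only.
manifest = build_ingress("demo-ingress", "demo.example.com", "web-service", 80)
print(json.dumps(manifest, indent=2))
```

Saving the printed output to a file such as `ingress.json` (a hypothetical name) and running `kubectl apply -f ingress.json` would create the resource, provided that the referenced service exists in the same namespace.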

Step 4: Configure a Load Balancer in Kubernetes

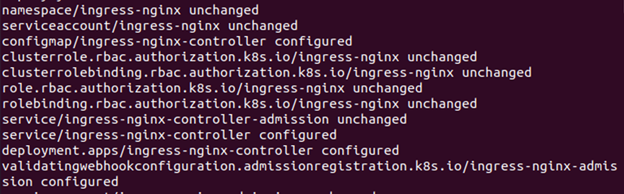

In this step, we look at the configuration of the load balancer in Kubernetes. The Ingress resources are implemented by a load balancer such as Nginx. We configure the load balancer in Kubernetes for traffic routing. The command is shown in the screenshot above.

Upon the command execution, we create a YAML file and deploy the Ingress resources in Kubernetes with the help of a load balancer.

Step 5: List the Running Pods in Kubernetes

In this step, we get the list of pods that are currently running in our Kubernetes application. We check for the Ingress pods in Kubernetes. Run the following kubectl command:

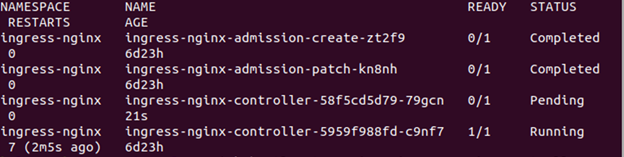

The list of pods is shown in the previous image as the output of the command. We see all the pods whose namespace is ingress-nginx in our list, together with their names. All of these pods have finished their work and remain in that state.
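The same information can be read programmatically. The sketch below assumes the pods live in the `ingress-nginx` namespace and that `kubectl get pods --namespace ingress-nginx --output json` is available. The helper names are hypothetical, and the sample data is hand-written in the shape kubectl returns rather than taken from a real cluster.

```python
import json
import subprocess


def summarize_pods(pod_list):
    """Return (name, phase) pairs from a parsed `kubectl get pods -o json` result."""
    return [
        (item["metadata"]["name"], item.get("status", {}).get("phase", "Unknown"))
        for item in pod_list.get("items", [])
    ]


def fetch_pods(namespace="ingress-nginx"):
    """Call kubectl and parse its JSON output; needs kubectl and a running cluster."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "--namespace", namespace, "--output", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return json.loads(result.stdout)


# Hand-written sample; the pod names are made up for illustration.
sample = {
    "items": [
        {"metadata": {"name": "example-admission-job"}, "status": {"phase": "Succeeded"}},
        {"metadata": {"name": "example-controller"}, "status": {"phase": "Running"}},
    ]
}

for name, phase in summarize_pods(sample):
    print(name, phase)
```

A pod in the `Succeeded` phase has run to completion, which kubectl usually displays as `Completed` in the status column. Replacing `sample` with `fetch_pods()` queries the real cluster.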

Step 6: List the Running Services in Kubernetes

In this step, we learn how to get the list of running services in Kubernetes. Run the following command:

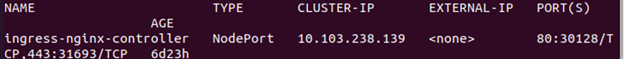

Upon the command execution, the list of running services related to the Ingress Nginx controller is shown. In the previously attached screenshot, the NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, PORT(S), and AGE of the services are shown.
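The EXTERNAL-IP column matters most when we want to reach the application from outside the cluster. As a sketch, the hypothetical helper below reads the external address from one service object in the JSON shape that `kubectl get service --output json` returns. The sample services are hand-written, and the address comes from a documentation-only IP range.

```python
def external_address(service):
    """Return the first external IP or hostname of a Service, or None if unassigned."""
    if service.get("spec", {}).get("type") != "LoadBalancer":
        return None
    entries = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    for entry in entries:
        address = entry.get("ip") or entry.get("hostname")
        if address:
            return address
    return None


# Hand-written samples; names and addresses are made up for illustration.
pending = {
    "metadata": {"name": "example-controller"},
    "spec": {"type": "LoadBalancer"},
    "status": {"loadBalancer": {}},
}
assigned = {
    "metadata": {"name": "example-controller"},
    "spec": {"type": "LoadBalancer"},
    "status": {"loadBalancer": {"ingress": [{"ip": "203.0.113.10"}]}},
}

print(external_address(pending))
print(external_address(assigned))
```

On minikube, a LoadBalancer service typically stays in the pending state until `minikube tunnel` is running in a separate terminal, so a missing address does not necessarily mean that the controller is broken.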

Conclusion

We used Ingress Nginx on a Kubernetes cluster that involves a single node. Through the process, we checked the traffic routing of the cluster. The Ingress can be verified by accessing the application from outside the cluster using the load balancer's external IP address. We described every step of the Ingress setup in Kubernetes. Hopefully, this article and its examples are helpful for your Kubernetes applications.