What is a Kubernetes Load Balancer?

Load balancers distribute incoming traffic over a group of hosts to keep workloads evenly spread and to maintain high availability. A Kubernetes cluster's distributed architecture runs multiple instances of each service, so without appropriate load distribution some instances can become overloaded while others sit idle.

A load balancer is a traffic controller that routes client requests to the nodes that can serve them promptly and efficiently. When one of the hosts fails, the load balancer redistributes the workload across the remaining nodes. Conversely, when a new node joins the cluster, the service automatically begins sending requests to the PODs associated with it.
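The failover behavior described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular Kubernetes component is implemented: `HealthAwareBalancer`, `mark_failed`, and `mark_recovered` are hypothetical names, and the sketch assumes some external health check calls them.

```python
from itertools import count


class HealthAwareBalancer:
    """Hypothetical balancer that routes only to nodes currently marked healthy."""

    def __init__(self, nodes):
        self.healthy = list(nodes)  # nodes eligible to receive traffic
        self._counter = count()     # request counter used to rotate through nodes

    def mark_failed(self, node):
        # Assumed to be called by an external health check when a node fails;
        # later requests are spread across the remaining nodes.
        if node in self.healthy:
            self.healthy.remove(node)

    def mark_recovered(self, node):
        # A new or recovered node (re)joins the rotation and starts receiving requests.
        if node not in self.healthy:
            self.healthy.append(node)

    def route(self):
        if not self.healthy:
            raise RuntimeError("no healthy nodes available")
        return self.healthy[next(self._counter) % len(self.healthy)]


lb = HealthAwareBalancer(["node-a", "node-b", "node-c"])
print([lb.route() for _ in range(3)])  # ['node-a', 'node-b', 'node-c']
lb.mark_failed("node-b")
print([lb.route() for _ in range(4)])  # node-b no longer appears
```

Real load balancers typically detect failures through periodic health checks or readiness probes rather than explicit method calls; the sketch only shows the effect on routing.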

A LoadBalancer Service in a Kubernetes cluster does the following:

- Distributes network load and service requests across numerous instances in a cost-effective manner.

- Supports autoscaling by spreading traffic across instances as demand fluctuates.

How to Add a Load Balancer to a Kubernetes Cluster?

A load balancer can be added to a Kubernetes cluster in two ways:

By Using a Configuration File:

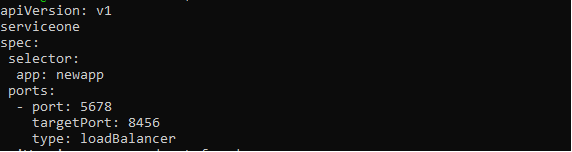

The load balancer is enabled by setting the `type` field of the Service configuration file to `LoadBalancer`. The cloud provider provisions and manages this load balancer, which forwards traffic to the back-end PODs. The Service configuration file should resemble the following:

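```yaml
apiVersion: v1
kind: Service
metadata:
  name: new-serviceone
spec:
  selector:
    app: newapp
  ports:
    - port: 5678         # port exposed by the Service
      targetPort: 8456   # port the selected PODs listen on
  type: LoadBalancer     # value is case-sensitive
```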

Depending on the cloud provider, users may be able to assign a specific IP address to the load balancer by setting the `loadBalancerIP` field in the Service spec. If no IP address is specified, the load balancer is allocated an ephemeral IP address. If the user specifies an IP address but the cloud provider does not support this feature, the field is ignored. Note that the `.spec.loadBalancerIP` field has been deprecated since Kubernetes v1.24.

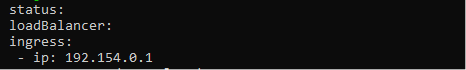

Information about the provisioned load balancer, such as its ingress IP address, is published in the Service's `.status.loadBalancer` field:

```yaml
status:
  loadBalancer:
    ingress:
      - ip: 192.154.0.1
```

By Using kubectl:

The `--type=LoadBalancer` flag can also be passed to the `kubectl expose` command to create a load balancer:

```shell
--name=new-serviceone --type=LoadBalancer
```

The command above creates a new Service named new-serviceone of type LoadBalancer, which routes external traffic to the PODs of the exposed resource.

What is Garbage Collection for Load Balancers?

When a Service of type LoadBalancer is deleted, the associated load balancer resources in the cloud provider should be cleaned up as soon as possible. However, there are various corner cases in which cloud resources are orphaned after the associated Service is deleted. Finalizer Protection for Service LoadBalancers was introduced to prevent this from happening.

If a Service is of type LoadBalancer, the service controller attaches a finalizer named `service.kubernetes.io/load-balancer-cleanup` to it. The finalizer is removed only after the load balancer resource has been cleaned up, and until then the Service object cannot be fully deleted. This prevents dangling load balancer resources even in corner cases, such as when the service controller crashes.
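The finalizer mechanism can be modeled with a small in-memory simulation. This is a hypothetical sketch, not the real Kubernetes API or controller code: `FakeCluster`, `Service`, `reconcile`, and the `cloud_load_balancers` set are illustrative stand-ins. Only the finalizer name comes from the text above.

```python
from dataclasses import dataclass, field

FINALIZER = "service.kubernetes.io/load-balancer-cleanup"


@dataclass
class Service:
    # Simplified stand-in for a Service object; not the real Kubernetes type.
    name: str
    finalizers: list = field(default_factory=list)
    deletion_requested: bool = False


class FakeCluster:
    """Hypothetical in-memory model of the API server plus the service controller."""

    def __init__(self):
        self.services = {}
        self.cloud_load_balancers = set()  # stands in for cloud provider resources

    def create_service(self, name):
        svc = Service(name)
        self.services[name] = svc
        # The controller provisions the cloud load balancer and adds the finalizer.
        self.cloud_load_balancers.add(name)
        svc.finalizers.append(FINALIZER)
        return svc

    def delete_service(self, name):
        # While finalizers are present, deletion is only requested; the object stays.
        svc = self.services[name]
        svc.deletion_requested = True
        self._maybe_remove(svc)

    def reconcile(self, name):
        # Controller loop: clean up the cloud resource first, then drop the finalizer.
        # If the controller crashed earlier, the Service still exists with its
        # finalizer, so a restarted controller can finish the cleanup here.
        svc = self.services.get(name)
        if svc and svc.deletion_requested and FINALIZER in svc.finalizers:
            self.cloud_load_balancers.discard(name)
            svc.finalizers.remove(FINALIZER)
            self._maybe_remove(svc)

    def _maybe_remove(self, svc):
        # The object is actually removed only once no finalizers remain.
        if svc.deletion_requested and not svc.finalizers:
            del self.services[svc.name]


cluster = FakeCluster()
cluster.create_service("new-serviceone")
cluster.delete_service("new-serviceone")
print("new-serviceone" in cluster.services)  # True: still waiting on the finalizer
cluster.reconcile("new-serviceone")
print("new-serviceone" in cluster.services, cluster.cloud_load_balancers)  # False set()
```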

Different Ways to Configure Load Balancing in Kubernetes

Several load balancing methods and algorithms are available for handling external traffic to pods in Kubernetes.

Round Robin

A round robin approach distributes new connections to eligible servers in sequential order. This technique is static: it does not take individual server speed or performance into account, so a sluggish server and a better-performing server receive the same number of connections. As a result, round robin load balancing is not always the best choice for production traffic and is better suited to simple load testing.
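A minimal Python sketch of round robin selection follows. The server names are hypothetical; the point is that the order is fixed and the slow server receives exactly as many connections as the fast one.

```python
from itertools import cycle


def round_robin(servers):
    # Hand out servers in a fixed order, wrapping around at the end.
    # Server speed and current load are deliberately ignored (static policy).
    return cycle(servers)


picker = round_robin(["slow", "fast"])
assignments = [next(picker) for _ in range(6)]
print(assignments)  # ['slow', 'fast', 'slow', 'fast', 'slow', 'fast']
```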

Kube-proxy L4 Round Robin

Kube-proxy handles the routing of all requests sent to a Kubernetes Service.

Because Kube-proxy is a process rather than a proxy, it uses a virtual IP for each service. This adds architectural layers and complexity to the routing: each request incurs extra latency, and the problem gets worse as the number of services grows.

L7 Round Robin

Alternatively, traffic can be routed directly to pods, bypassing Kube-proxy. This can be accomplished with a Kubernetes API Gateway that uses an L7 proxy to distribute requests among the available pods.
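One practical difference between the two approaches is the granularity of the decision: an L4 balancer picks a backend once per connection, whereas an L7 proxy can pick one for each request. The Python sketch below illustrates this under simplifying assumptions; the pod names, the `l4_balance` and `l7_balance` functions, and the single keep-alive connection are all hypothetical.

```python
from itertools import cycle

PODS = ["pod-1", "pod-2", "pod-3"]  # hypothetical backend pods


def l4_balance(connections):
    """Pick a pod once per connection; every request on it goes to that pod."""
    picker = cycle(PODS)
    routed = []
    for requests in connections:
        pod = next(picker)  # decision made when the connection is opened
        routed.extend(pod for _ in requests)
    return routed


def l7_balance(connections):
    """Pick a pod for each individual request, regardless of its connection."""
    picker = cycle(PODS)
    return [next(picker) for requests in connections for _ in requests]


# One long-lived client connection carrying six requests (e.g. HTTP keep-alive).
conns = [["GET /a", "GET /b", "GET /c", "GET /d", "GET /e", "GET /f"]]
print(l4_balance(conns))  # 'pod-1' six times: every request lands on one pod
print(l7_balance(conns))  # ['pod-1', 'pod-2', 'pod-3', 'pod-1', 'pod-2', 'pod-3']
```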

Consistent Hashing/Ring Hash

The Kubernetes load balancer uses consistent hashing techniques, hashing a defined key to distribute new connections across the servers. This strategy is well suited to large pools of cache servers with dynamic content.

The approach is called consistent because the complete hash table does not need to be recalculated each time a server is added or removed; only the keys mapped to that server move to a different one.
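A ring hash is one common way to implement consistent hashing. In the sketch below, `HashRing` is a hypothetical class, and SHA-256 as the hash function and 100 virtual nodes per server are illustrative assumptions rather than what any specific load balancer uses.

```python
import bisect
import hashlib


def _hash(key):
    # Stable 64-bit hash; Python's built-in hash() is randomized per process.
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    def __init__(self, servers, replicas=100):
        self.replicas = replicas  # virtual nodes per server, for a smoother spread
        self._ring = []           # sorted list of (hash, server) pairs
        for server in servers:
            self.add(server)

    def add(self, server):
        for i in range(self.replicas):
            bisect.insort(self._ring, (_hash(f"{server}#{i}"), server))

    def remove(self, server):
        # Only this server's virtual nodes disappear; all others keep their place.
        self._ring = [(h, s) for h, s in self._ring if s != server]

    def lookup(self, key):
        if not self._ring:
            raise LookupError("ring is empty")
        # Walk clockwise to the first virtual node at or after the key's hash.
        i = bisect.bisect_left(self._ring, (_hash(key), ""))
        return self._ring[i % len(self._ring)][1]


ring = HashRing(["cache-a", "cache-b", "cache-c"])
keys = [f"user-{n}" for n in range(1000)]
before = {k: ring.lookup(k) for k in keys}
ring.remove("cache-b")
after = {k: ring.lookup(k) for k in keys}
moved = [k for k in keys if before[k] != after[k]]
# Only keys that lived on cache-b are remapped; the rest stay put.
print(all(before[k] == "cache-b" for k in moved))  # True
```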

Fewest Servers

Rather than spreading requests across all servers, the fewest-servers technique determines the smallest number of servers required to handle the current client load. Surplus servers can then be powered down or de-provisioned temporarily.

This technique works by monitoring how response latency changes as the load varies relative to server capacity.
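The Python sketch below shows one simplified way to combine a capacity estimate with a latency feedback step. Everything here is an assumption for illustration: the function names are hypothetical, each server is assumed to have a known fixed capacity in requests per second, and scaling down when latency falls below half the target is an arbitrary threshold.

```python
import math


def servers_needed(load_rps, capacity_rps):
    # Smallest server count whose combined capacity covers the current load.
    return max(1, math.ceil(load_rps / capacity_rps))


def adjust_for_latency(active, latency_ms, target_ms, pool_size):
    # Feedback step: latency above target suggests the active set is saturated.
    if latency_ms > target_ms:
        return min(active + 1, pool_size)
    # Comfortably below target (under half): try running with one fewer server.
    if latency_ms < target_ms / 2:
        return max(active - 1, 1)
    return active


def split_pool(servers, active):
    # The first `active` servers take traffic; the rest can be powered down.
    return servers[:active], servers[active:]


pool = [f"srv-{i}" for i in range(10)]
n = servers_needed(load_rps=900, capacity_rps=250)  # ceil(3.6) = 4
n = adjust_for_latency(n, latency_ms=180, target_ms=150, pool_size=len(pool))  # 5
active, idle = split_pool(pool, n)
print(active)  # ['srv-0', 'srv-1', 'srv-2', 'srv-3', 'srv-4']
```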

Least Connections

This load balancing algorithm routes each client request to the application server with the fewest active connections at the time of the request. It takes the active connection load into account because, even when application servers have identical specifications, one of them can become overloaded by longer-lived connections.
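A minimal Python sketch of least-connections selection follows. `LeastConnectionsBalancer`, `acquire`, and `release` are hypothetical names, and the sketch assumes the caller reports when each connection closes. Unlike round robin, it steers the next request away from a server that is still holding a long-lived connection.

```python
class LeastConnectionsBalancer:
    """Hypothetical balancer that tracks open connections per server."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}  # server -> open connection count

    def acquire(self):
        # Pick the server with the fewest active connections right now.
        # Ties go to the server listed first (dicts keep insertion order).
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection closes so the count reflects current load.
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2"])
long_lived = lb.acquire()  # app-1 now holds a long-lived connection
short = lb.acquire()       # app-2
lb.release(short)          # the short request on app-2 finishes
print(lb.acquire())        # app-2, because app-1 is still busy
```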

Conclusion

This article aimed to give readers a comprehensive understanding of Kubernetes load balancing, covering its architecture and the various methods for provisioning it in a Kubernetes cluster. Load balancing is an important part of running an effective Kubernetes cluster and one of the primary responsibilities of a Kubernetes administrator. With a well-provisioned load balancer, requests can be distributed efficiently across cluster PODs and nodes, enabling high availability, quick recovery, and low latency for containerized applications running on Kubernetes.