What is Kubernetes?

Kubernetes, or k8s, is a free, open-source platform for managing containerized applications and services. Kubernetes allows you to create portable and highly extensible containerized applications that are easy to deploy and manage. It is commonly used alongside Docker to gain better control over containerized applications and services.

Features of Kubernetes

The following are the essential features offered by Kubernetes:

- Automated rollouts, with rollbacks if errors occur

- Auto-scalable infrastructure

- Horizontal scaling

- Load balancing

- Automated health checks and self-healing capabilities

- Highly predictable infrastructure

- Storage mounts for running applications

- Efficient resource usage

- Loosely coupled components, each of which can act as a standalone unit

- Automatic management of security and network components
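Several of these features map directly onto `kubectl` subcommands. The sketch below wraps three of them (`rollout status`, `rollout undo`, and `scale`) in small helper functions. The helper names and the `KUBECTL` override variable are assumptions made for illustration, and the functions expect a working cluster with an existing Deployment.

```shell
#!/bin/sh
# KUBECTL can be overridden, e.g. KUBECTL="echo kubectl", to preview the
# commands without touching a cluster. This is an illustrative convention,
# not a kubectl feature.
: "${KUBECTL:=kubectl}"

# Wait for a rollout of the named Deployment to finish.
rollout_status() {
  $KUBECTL rollout status "deployment/$1"
}

# Roll the named Deployment back to its previous revision.
rollback_deployment() {
  $KUBECTL rollout undo "deployment/$1"
}

# Horizontal scaling: set a fixed number of replicas.
scale_deployment() {
  $KUBECTL scale deployment "$1" --replicas="$2"
}
```

For example, `scale_deployment mysql 3` would request three replicas of a Deployment named `mysql`.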

Kubernetes Architecture

Understanding the Kubernetes architecture will help you gain a deeper knowledge of how to work with Kubernetes.

The following are the main components of the Kubernetes architecture:

Nodes

A node represents a single worker machine in a Kubernetes cluster, either a virtual machine or physical hardware.

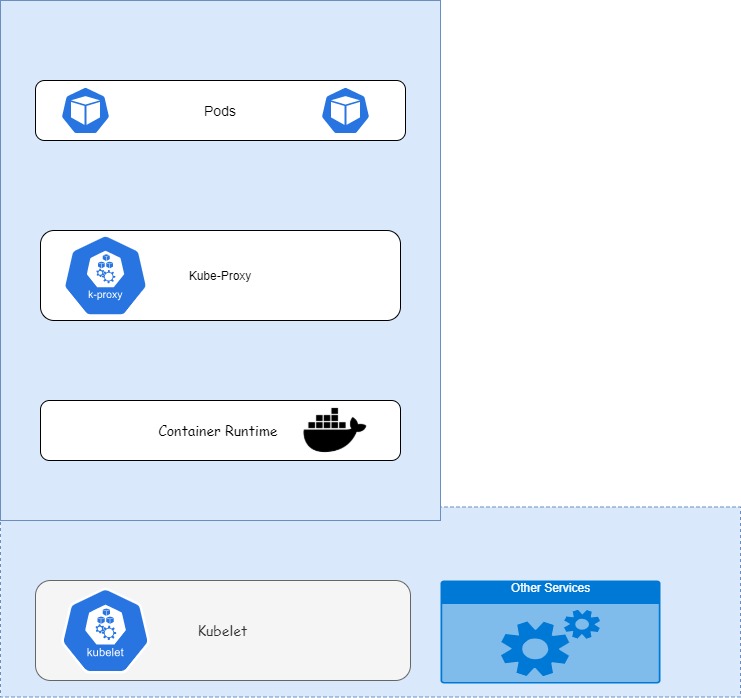

Each node in Kubernetes comprises various Kubernetes software components, such as pods, the kubelet, kube-proxy, and a container runtime such as Docker.

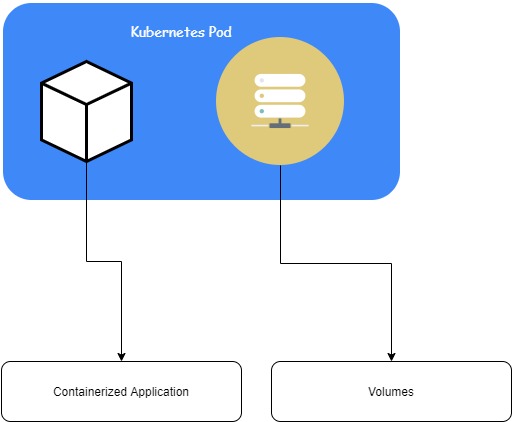

Pods

A pod refers to one or more containerized applications bundled together. Kubernetes manages pods rather than individual containers and creates replacement replicas if one of them fails. The containers in a pod share resources such as network interfaces and storage volumes.
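To make the idea of shared resources concrete, here is a minimal sketch of a pod with two containers that share one volume. The pod name, file name, and images are assumptions chosen for illustration. The snippet only writes the manifest to disk; applying it requires a running cluster.

```shell
#!/bin/sh
# Write an illustrative two-container Pod manifest to pod-demo.yaml.
cat > pod-demo.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: shared-demo
spec:
  volumes:
    - name: shared-data
      emptyDir: {}
  containers:
    - name: web
      image: nginx
      volumeMounts:
        - name: shared-data
          mountPath: /usr/share/nginx/html
    - name: writer
      image: busybox
      command: ["sh", "-c", "echo hello > /data/index.html && sleep 3600"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
EOF

# On a working cluster, the manifest could then be applied with:
#   kubectl apply -f pod-demo.yaml
```

Both containers mount the same `emptyDir` volume, so the file written by `writer` is visible to `web`.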

Container/Container-Runtime

A container is an isolated, self-contained software package. It contains everything required to run an application, including the code, system libraries, and other dependencies. Once a container is deployed, you cannot change its code, because container images are immutable. Kubernetes relies on a container runtime, such as Docker, containerd, or CRI-O, to run containers.

Kubelet

The kubelet is an agent that runs on each node and handles communication between the node and the master node. It is responsible for managing the pods and their containers on that node. The master node instructs the kubelet to perform the necessary actions on the specific node.

Kube-Proxy

The kube-proxy is a network proxy that runs on every Kubernetes node. It manages network communication to pods from inside and outside the cluster.

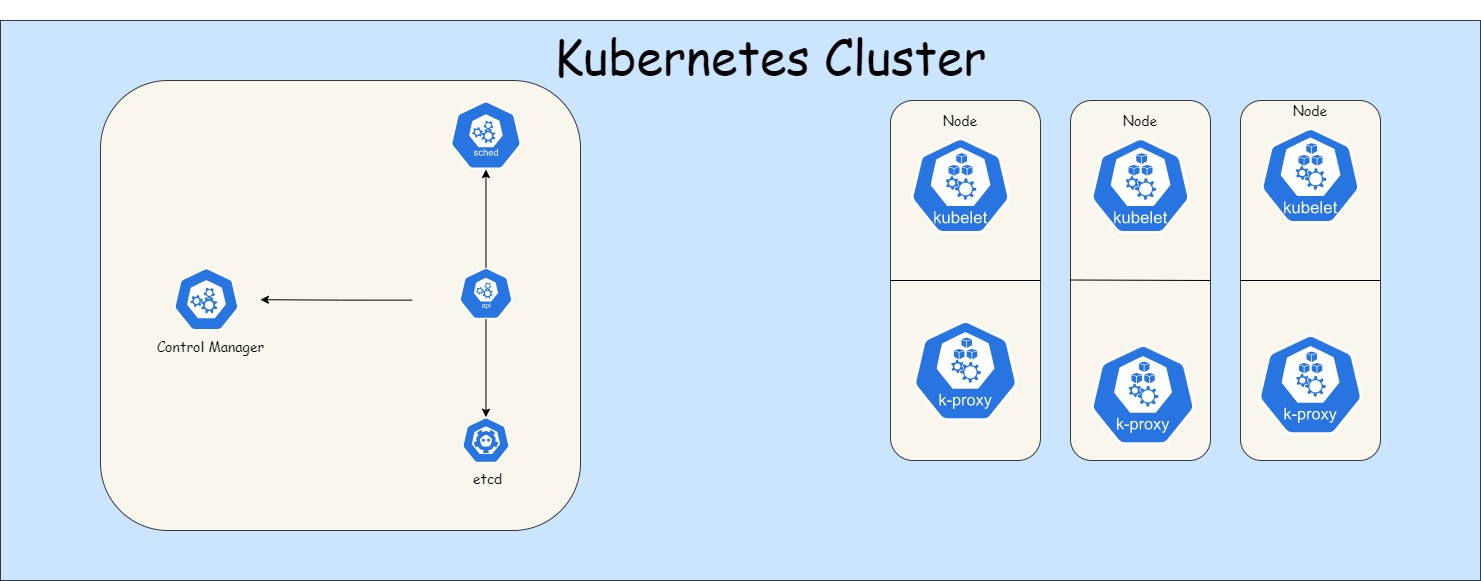

Cluster

A cluster is a collection of Kubernetes nodes that pool their resources to act as a single, more powerful machine. Resources shared by the Kubernetes nodes include memory, CPU, and disks.

A Kubernetes cluster is made up of one master node and a number of worker nodes. The master node controls the Kubernetes cluster, including scheduling and scaling applications, pushing and applying updates, and managing the cluster state.

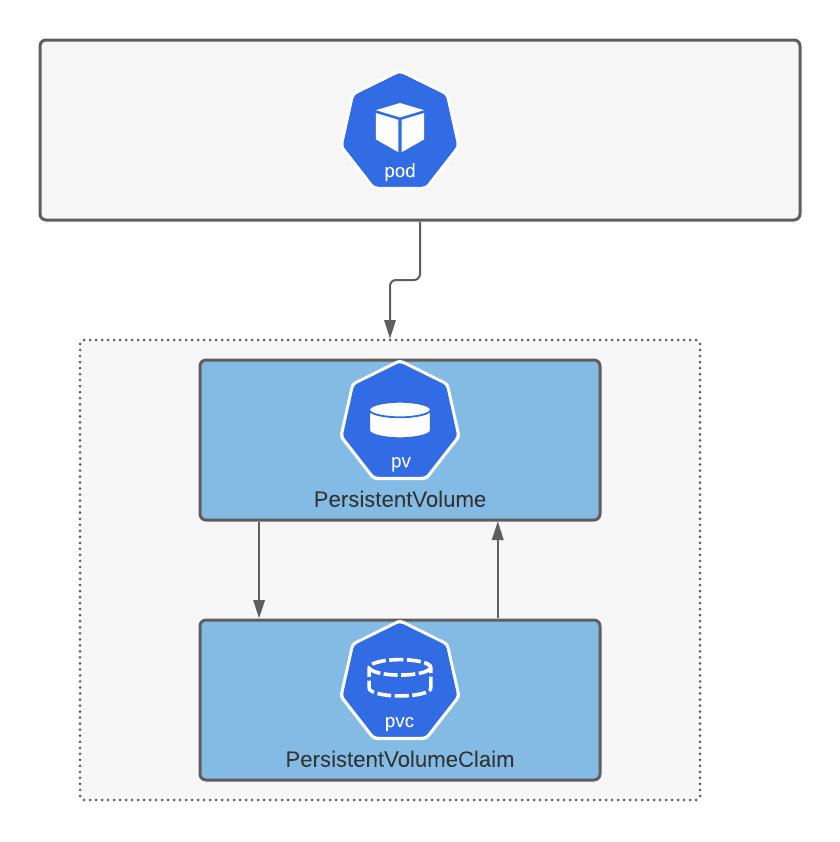

Persistent Volumes

Persistent volumes are used to store data in a Kubernetes cluster. They are made up of various volumes from the cluster nodes, so the data is not tied to any single pod. When a node is added to or removed from the cluster, the master node redistributes the work accordingly.

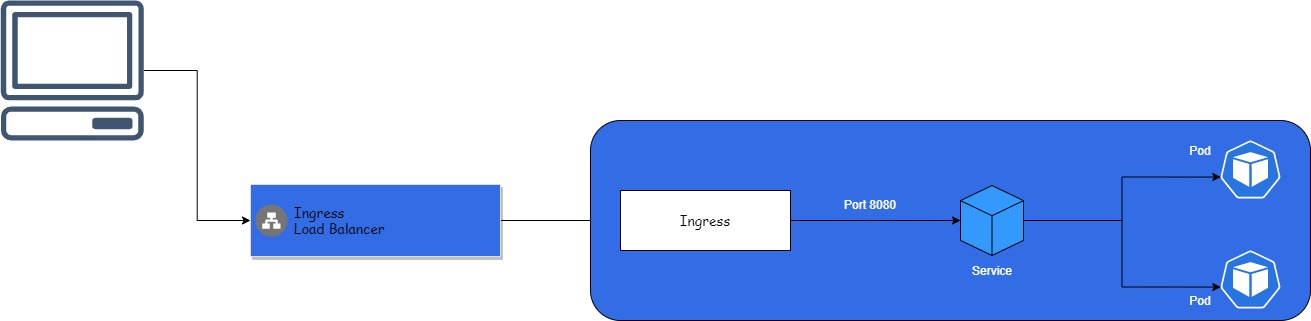
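Pods usually request persistent storage through a PersistentVolumeClaim. As a minimal sketch, the snippet below writes such a claim to disk. The claim name, file name, and 1Gi size are assumptions for illustration, and the cluster must offer a matching volume or storage class for the claim to bind.

```shell
#!/bin/sh
# Write an illustrative PersistentVolumeClaim manifest to pvc-demo.yaml.
cat > pvc-demo.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF

# On a working cluster, the claim could then be created with:
#   kubectl apply -f pvc-demo.yaml
```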

Ingress

The Kubernetes Ingress is an API object that allows access to Kubernetes services from outside the cluster. Ingress typically exposes services over HTTP or HTTPS. An Ingress takes effect in a cluster through an ingress controller, which is often paired with a load balancer.
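As a rough sketch of what an Ingress object looks like, the snippet below writes a manifest that routes HTTP traffic for one host to a Service. The host `demo.example.com`, the Service name `demo-service`, and the file name are hypothetical, and the manifest has an effect only on a cluster where an ingress controller is installed.

```shell
#!/bin/sh
# Write an illustrative Ingress manifest to ingress-demo.yaml.
cat > ingress-demo.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-ingress
spec:
  rules:
    - host: demo.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: demo-service
                port:
                  number: 80
EOF

# On a working cluster, the manifest could then be applied with:
#   kubectl apply -f ingress-demo.yaml
```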

Master

The master, also known as the control plane, is the central control component of the Kubernetes architecture. It is responsible for managing the workload and handling communication between the cluster and its members.

The master is made up of various components. These include:

- Controller manager

- Scheduler

- API server

- etcd

Controller manager

The controller manager, or kube-controller-manager, is responsible for running and managing the cluster. Its controller daemons collect information about the cluster and report it back to the API server.

Scheduler

The kube-scheduler, or simply the scheduler, is responsible for distributing the workload. It watches for newly created pods and decides which node each one should run on.

It keeps track of resources such as memory and CPU and schedules pods to the appropriate compute nodes.
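The scheduler bases its placement decisions on the resources a pod asks for. As a minimal sketch, the snippet below writes a pod manifest that requests a quarter of a CPU core and 128 MiB of memory. The pod name, file name, image, and request values are assumptions for illustration.

```shell
#!/bin/sh
# Write an illustrative Pod manifest with resource requests to requests-demo.yaml.
cat > requests-demo.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: requests-demo
spec:
  containers:
    - name: app
      image: nginx
      resources:
        requests:
          cpu: "250m"
          memory: "128Mi"
EOF

# On a working cluster, apply the manifest and check which node was chosen:
#   kubectl apply -f requests-demo.yaml
#   kubectl get pod requests-demo -o wide
```

The scheduler only considers nodes with enough unreserved CPU and memory to satisfy the requests.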

API Server

The kube-apiserver is the front-end interface to the Kubernetes master. It allows you to talk to the Kubernetes cluster. Once the API server receives a request, it determines whether the request is valid and, if so, processes it.

To interact with the API server, you send REST calls, usually through command-line tools such as kubectl or kubeadm rather than by hand.
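To see the REST layer underneath `kubectl`, the `get --raw` option sends a plain GET request to an API path and prints the JSON response. The sketch below wraps it in a helper; the function name `api_get` and the `KUBECTL` override variable are assumptions for illustration, and the calls need a reachable cluster.

```shell
#!/bin/sh
# KUBECTL can be overridden, e.g. KUBECTL="echo kubectl", to preview the
# command without a cluster. This is an illustrative convention only.
: "${KUBECTL:=kubectl}"

# Send a raw GET request to an API server path and print the response.
api_get() {
  $KUBECTL get --raw "$1"
}

# Example paths from the core API:
#   api_get /api/v1/nodes
#   api_get /api/v1/namespaces/default/pods
```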

etcd

etcd is a key-value database responsible for storing configuration data and information about the state of the Kubernetes cluster. The API server reads from and writes to it on behalf of the other cluster components.

Running Kubernetes

This section covers how to get started with Kubernetes. The steps were tested on a Debian system.

Launch the terminal and update your system:

sudo apt-get update

sudo apt-get upgrade

Next, install the required packages as shown in the command below:

sudo apt-get install -y ca-certificates curl gnupg lsb-release

Install Docker

Next, install Docker, which Kubernetes will use as the container runtime. The instructions below are for the Debian operating system; for other systems, consult the Docker installation documentation.

Add the official Docker GPG key:

curl -fsSL https://download.docker.com/linux/debian/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

Next, add the Docker repository to the package sources as shown in the command:

echo "deb [arch=amd64 signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/debian $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

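Next, update the package index and install Docker:

sudo apt-get update

sudo apt-get install -y docker-ce docker-ce-cli containerd.io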

Finally, start and enable the Docker service:

sudo systemctl enable --now docker.service

Install Kubernetes

Next, install Kubernetes on the system. As before, the instructions were tested on a Debian system; for other systems, consult the Kubernetes installation documentation.

Start by downloading the Google Cloud signing key:

Next, add Kubernetes repository:

Finally, update the package index and install the Kubernetes components:

sudo apt-get update

sudo apt-get install -y kubelet kubeadm kubectl

Initialize the Kubernetes Master Node

The next step is to start the Kubernetes master node. Before doing this, turn off swap, because by default the kubelet refuses to start while swap is enabled.

To do this, use the command:

sudo swapoff -a
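Before initializing the master node, it can help to confirm that no swap area is still active. The sketch below assumes a Linux host that exposes `/proc/swaps`; the helper name `swap_is_off` is hypothetical.

```shell
#!/bin/sh
# Succeeds when the swaps table (default: /proc/swaps) lists no active swap
# area. The first line of the table is a header, so only later lines count.
swap_is_off() {
  table="${1:-/proc/swaps}"
  ! tail -n +2 "$table" 2>/dev/null | grep -q .
}

if swap_is_off; then
  echo "swap is off"
else
  echo "swap is still active; disable it with: sudo swapoff -a"
fi
```

Note that `swapoff -a` lasts only until the next reboot; swap entries in `/etc/fstab` would re-enable it.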

Once swap is off, initialize the master node with the command:

sudo kubeadm init

Once the command completes successfully, its output lists three commands that set up kubectl access for your user.

Copy and run them:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Deploy Pod Network

The next step is to deploy a Pod network. In this guide, we will use the AWS VPC CNI for Kubernetes.

Use the command as:

Upon completion, confirm that the cluster is up and running:

kubectl cluster-info

You should get output similar to the following:

CoreDNS is running at https://192.168.43.29:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To show all the running nodes, use the command:

kubectl get nodes

Deploy an Application

Let us deploy a MySQL application and expose the service on port 3306. Once a Deployment named mysql exists, expose it with the command:

kubectl expose deployment mysql --port=3306 --name=mysql-server
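The expose command assumes that a Deployment named `mysql` already exists. One possible way to define it is the declarative manifest sketched below. The official `mysql` image does not start unless a root password option such as `MYSQL_ROOT_PASSWORD` is set, so the manifest supplies one. The file name, image tag, and placeholder password are assumptions for illustration.

```shell
#!/bin/sh
# Write an illustrative MySQL Deployment manifest to mysql-deployment.yaml.
cat > mysql-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: mysql:8.0
          env:
            # Placeholder value; a Secret is preferable in real use.
            - name: MYSQL_ROOT_PASSWORD
              value: "change-me"
          ports:
            - containerPort: 3306
EOF

# On a working cluster, create the Deployment before exposing it:
#   kubectl apply -f mysql-deployment.yaml
```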

To show the list of deployments, use the command:

kubectl get deployments

To get information about the pods, use the command:

kubectl get pods

To Sum Up

Kubernetes is a robust container deployment and management tool. This tutorial only scratches the surface of Kubernetes and its capabilities.