Scaling is the practice of adjusting your infrastructure’s capacity to match the current load. When the load is too high, you scale up so the environment can keep responding and avoid node crashes. When things settle down and the load drops, you scale back down to optimize your costs. You can scale in two ways: vertical scaling and horizontal scaling.

In vertical scaling, you give the existing machines more resources, for instance extra memory, extra CPU cores, or faster disks. In horizontal scaling, you add more instances with the same hardware specification. For example, a web application might run two instances at normal times and four when it is busy. Depending on your case, you can use vertical scaling, horizontal scaling, or both.

The real question, however, is when to scale. Previously, the amount of resources a cluster should have and the total number of nodes were design-time choices, reached through a lot of trial and error. Once the app was released, human operators monitored it from different angles, with CPU usage as the most important metric, and decided whether to scale or not. With the arrival of cloud computing, scaling became much simpler and more convenient. You can still perform it manually, but Kubernetes can also scale up or down automatically based on CPU consumption and other custom application metrics that you define. In this tutorial, you’ll learn what kubectl scale deployment does and how to use it from the command line.

Put simply, a Deployment is a Kubernetes object that manages the creation of Pods through ReplicaSets. Suppose you want to run a set of identical NGINX pods in your cluster: with a Deployment, you can quickly scale those pods to meet demand.

Scaling is performed by increasing or decreasing the number of identical Pods (replicas) in a Deployment, known as scaling out and scaling in, respectively. Scaling out ensures that new Pods are created and scheduled onto nodes with available resources. Scaling in reduces the number of Pods to the specified desired count.

Prerequisites

To follow along, you need a running Kubernetes cluster. This tutorial uses minikube, so install it first; once the cluster is up and running, you’re all set to proceed.

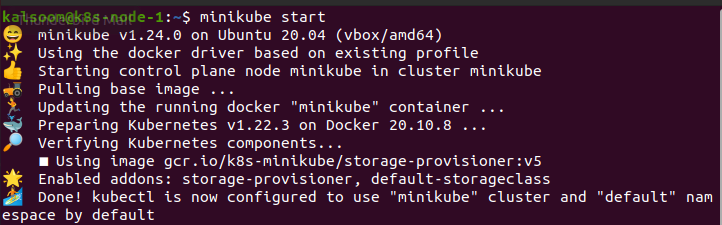

Minikube Start

Press “Ctrl+Alt+T” to launch the terminal. In the terminal, run the “minikube start” command and wait until minikube has started successfully.

Create Deployment File:

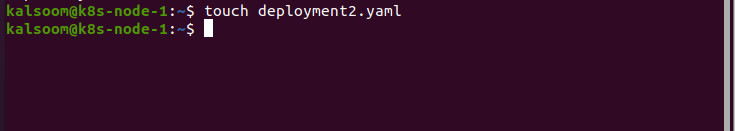

Before you can scale a deployment, you first need to create one in Kubernetes. The “touch” command shown in the screenshot creates an empty file on Ubuntu 20.04.

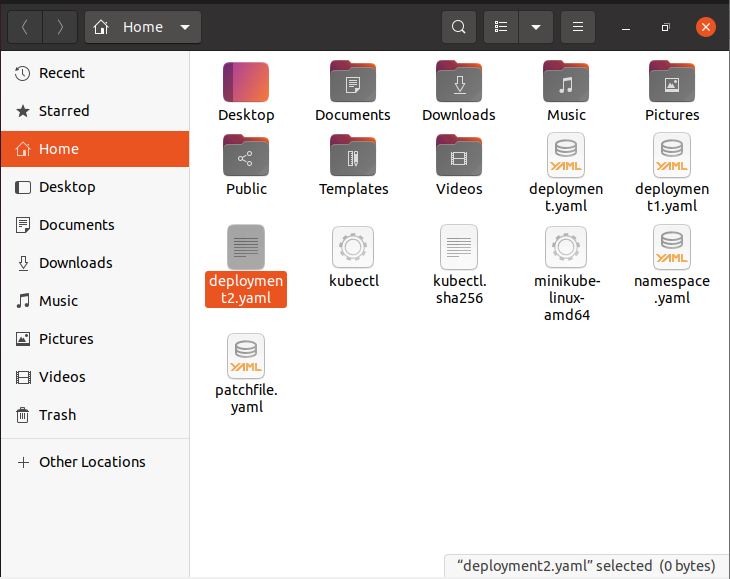

Run the touch command, then open your home directory, where you’ll see the newly created file named “deployment2.yaml”.
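As a minimal sketch of this step, the commands below create the empty file and confirm that it exists. The file name comes from the text above; creating it in the current working directory (the home directory when a new terminal opens) is an assumption.

```shell
# Create an empty manifest file in the current directory.
touch deployment2.yaml

# Confirm that the file now exists.
ls -l deployment2.yaml
```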

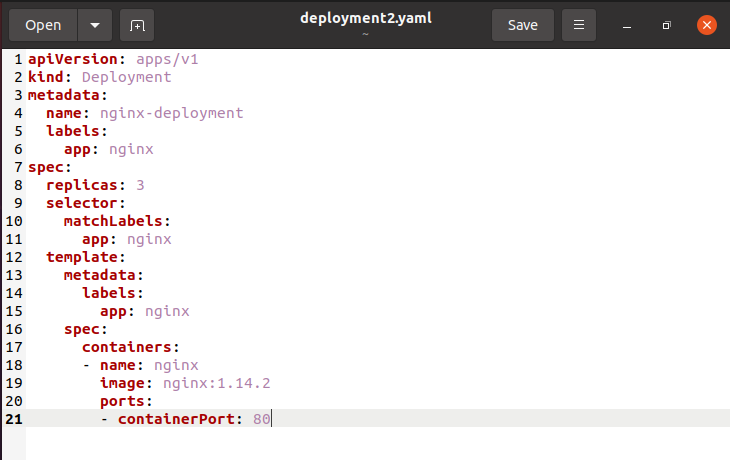

The screenshot shows an example Deployment manifest. In that manifest:

- The “.spec.replicas” field specifies that nginx-deployment creates 3 identical Pods.

- The “.spec.selector” field defines how the Deployment finds which Pods to manage.

- The Pod template contains the following sub-fields:

  - The Pods are labeled “app: nginx” using the “.metadata.labels” field.

  - The “.template.spec” field indicates that the Pods run one container, which runs version 1.14.2 of the NGINX image.

  - The “.spec.template.spec.containers[0].name” field sets the name of that container.
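Since the manifest itself appears only as a screenshot, here is a hedged sketch of what such a file might contain, written into deployment2.yaml with a heredoc. The deployment name, replica count, label, and image version follow the description above; the container name `nginx` and `containerPort: 80` are assumptions modeled on the common NGINX example, not values confirmed by this article.

```shell
# Write an example Deployment manifest into deployment2.yaml.
# Assumptions: container name "nginx" and containerPort 80.
cat > deployment2.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3              # .spec.replicas: three identical Pods
  selector:                # .spec.selector: which Pods the Deployment manages
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx         # Pod label; must match the selector above
    spec:
      containers:
      - name: nginx        # .spec.template.spec.containers[0].name (assumed)
        image: nginx:1.14.2
        ports:
        - containerPort: 80   # assumed port
EOF
```

The selector and the Pod template label must match, otherwise the API server rejects the Deployment.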

Create Deployment

Our next task is to create the deployment that we are going to scale. To do so, issue the command shown in the screenshot.
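The exact command is only visible in the screenshot, so the following is a sketch of one common way to do it, assuming deployment2.yaml already contains a valid Deployment manifest. `kubectl apply -f` is used here; `kubectl create -f` would also work for a first-time creation. The guard around the command is an addition so the snippet exits cleanly when kubectl is not installed.

```shell
MANIFEST="deployment2.yaml"   # file created in the earlier step

if command -v kubectl >/dev/null 2>&1; then
  # Create (or update) the objects described in the manifest.
  kubectl apply -f "$MANIFEST" \
    || echo "kubectl apply failed; is the cluster running?" >&2
else
  echo "kubectl not found; skipping" >&2
fi
```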

Check Pods Availability

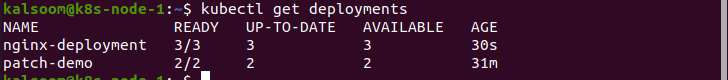

To check how many pods are ready, issue the command shown in the screenshot. The output lists nginx-deployment with 3/3 Pods ready, along with the NAME, READY, UP-TO-DATE, AVAILABLE, and AGE columns.
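A hedged sketch of this check, assuming the deployment is named nginx-deployment as described above, could look like this. `kubectl get deployments` prints the columns mentioned in the text.

```shell
DEPLOYMENT="nginx-deployment"   # name taken from the tutorial text

if command -v kubectl >/dev/null 2>&1; then
  # Show NAME, READY, UP-TO-DATE, AVAILABLE, and AGE for the deployment.
  kubectl get deployments "$DEPLOYMENT" \
    || echo "could not read the deployment; is the cluster running?" >&2
else
  echo "kubectl not found; skipping" >&2
fi
```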

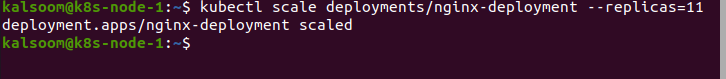

Scale Up Pods – Scale the Deployment

Now that the deployment exists, it’s time to scale it up, for example from 3 NGINX pods to 5. There are two ways to do this. You can either edit the YAML file, change the replicas value from 3 to 5, and re-apply it, or you can use the CLI. Here we use the CLI approach: you don’t need to change the YAML file, you just issue the kubectl scale command, as shown in the screenshot.
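The CLI approach can be sketched as follows, assuming the deployment is named nginx-deployment and its Pods carry the app=nginx label. The `--replicas` flag of `kubectl scale` sets the desired Pod count; the variable names and the guard are illustrative additions.

```shell
DEPLOYMENT="nginx-deployment"   # name used in this tutorial
REPLICAS=5                      # desired number of Pods after scaling up

if command -v kubectl >/dev/null 2>&1; then
  # Set the desired replica count without editing the YAML file.
  kubectl scale deployment "$DEPLOYMENT" --replicas="$REPLICAS" \
    || echo "scale failed; is the cluster running?" >&2
  # List the Pods selected by the app=nginx label (label is an assumption).
  kubectl get pods -l app=nginx || true
else
  echo "kubectl not found; skipping" >&2
fi
```

Note that a later `kubectl apply` of an unchanged YAML file would set the count back to the value in the file, so keep the file in sync if you rely on it.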

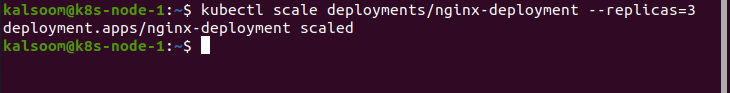

Scale Down Pods

To scale the pods down, proceed the same way as before: set the replica count in the kubectl scale command back to 3.
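Scaling down uses the same command with a smaller replica count. The sketch below assumes the same deployment name as before; Kubernetes then terminates the surplus Pods until only the requested number remains.

```shell
DEPLOYMENT="nginx-deployment"   # name used in this tutorial
REPLICAS=3                      # desired number of Pods after scaling down

if command -v kubectl >/dev/null 2>&1; then
  # Reduce the desired replica count.
  kubectl scale deployment "$DEPLOYMENT" --replicas="$REPLICAS" \
    || echo "scale failed; is the cluster running?" >&2
  # Confirm the new READY count.
  kubectl get deployment "$DEPLOYMENT" || true
else
  echo "kubectl not found; skipping" >&2
fi
```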

Conclusion

This article covered the basic concept of scaling Kubernetes deployments, i.e., scaling up and scaling down. The examples above are easy to understand and handy to implement. You can apply the same approach to more complex deployments and scale them up or down to meet your growing container needs.