Pre-requisites:

For running the commands in Kubernetes, we have to install Ubuntu 20.04. Here we use the Linux operating system to execute the kubectl commands. Now we install the Minikube cluster to run Kubernetes in Linux. Minikube offers an extremely smooth understanding as it provides an efficient mode to test the commands and applications.

Let’s see how to use kubectl dry run:

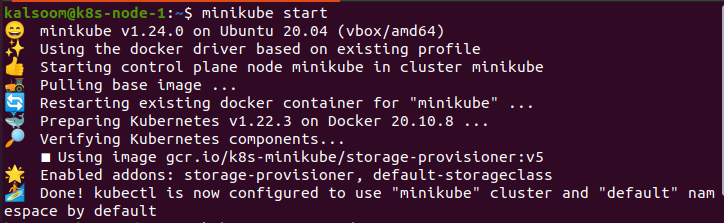

Start Minikube:

After installing the minikube cluster, we start the Ubuntu 20.04. Now we have to open a terminal for running the commands. For this purpose, we press the combination of ‘Ctrl+Alt+T’ from the keyboard.

In the terminal, we write the command ‘minikube start’, and after this, we wait a while till it becomes effectively started. The output of this command is given underneath.

When updating a current item, kubectl apply sends only the patch, not the complete object. Printing any current or original item in dry-run mode is not completely correct. The result of the combination would be printed.

Server-side application logic must be available on the client-side for kubectl to be capable of exactly imitating the outcomes of the application, but this is not the goal.

The existing effort is focused on affecting application logic to the server. After then we have added the ability to dry-run on the server-side. Kubectl apply dry-run does the necessary work by producing the outcome of the apply merge deprived of actually maintaining it.

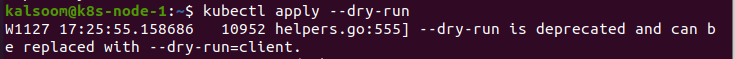

Perhaps we upgrade flag help, issue a notice if Dry-run is used when appraising items using Apply, document Dry-run’s limits, and use server dry-run.

The kubectl diff should be as same as the kubectl apply. It shows the differences between the sources in the file. We can also utilize the selected diff program with the environment variable.

When we utilize the kubectl to apply service to a dry-run cluster, the result appears like the form of the service, not the output from a folder. The returned content must comprise local resources.

Construct a YAML file using the annotated service and relate it to the server. Modify the notes in the file and execute the command ‘kubectl apply -f –dry-run = client’. The output shows server-side observations instead of modified annotations. This will authenticate the YAML file but not construct it. The account we are utilizing for validation has the requested read permission.

This is an instance where –dry-run = client is not appropriate for what we are testing. And this particular condition is often seen when multiple people take CLI access to a cluster. This is because no one seems to constantly recollect applying or creating files after debugging an application.

This kubectl command delivers a brief observation of the resources saved by the API server. Numerous fields are saved and hidden by Apiserver. We can utilize the command by the resource outcome to generate our formations and commands. For example, it is difficult to discover a problem in a cluster with numerous namespaces and placements; however, the following instance utilizes the raw API to test all distributions in the cluster and has a failed replica. Filter simply the deployment.

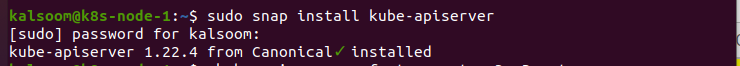

We execute the command ‘sudo snap install kube-apiserver’ to install apiserver.

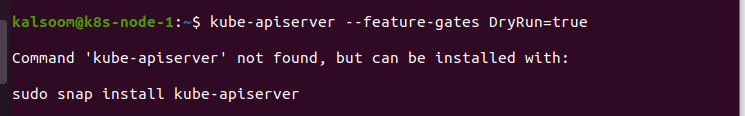

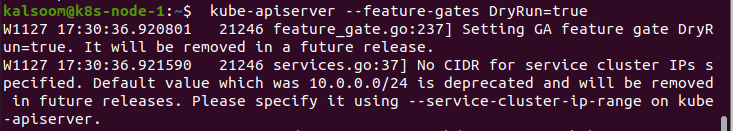

Server-side dry-run is activated through functional gates. This feature would be assisted by default; however, we may enable/disable it using the command “’kube-apiserver –feature-gates DryRun = true’.

If we are using a dynamic access controller, we need to fix it in the following ways:

- We eliminate all side effects after specifying dry-run constraints in a webhook request.

- We state the belongings field of the item to specify that the item has no side effects during dry-run.

Conclusion:

The requested role depends on the permission module that consents to the dry-run in the account to mimic the formation of a Kubernetes item without bypassing the role to be considered.

This is certainly outside the description of the current role. As we know, nothing is formed/removed/patched in the commission run regarding the actions performed in the cluster. However, we also allow this to distinguish between –dry-run = server and –dry-run = no output for the accounts. We can utilize the kubectl apply –server-dry-run to activate a function from kubectl. This will elaborate the demand through the dry-run flag and reoccurrence of the item.