What Is the OOMKilled Error?

OOMKilled, to put it simply, is a Kubernetes error that occurs when a pod or container uses more memory than is allotted to it. OOM stands for Out of Memory, and Killed means that the process was terminated.

Increasing the memory allotment is the obvious way to solve this recurring issue. That simple technique only goes so far, though, because the memory on a node is finite and raising limits without understanding the cause just moves the problem elsewhere. The following sections cover the OOMKilled error, its main causes, how to fix it, and how to balance memory allocations.

Types of OOMKilled Error

In Kubernetes, OOMKilled errors come in two different variations. One is OOMKilled: Limit Overcommit and the second is OOMKilled: Container Limit Reached.

Let’s look at each of these errors in more depth.

OOMKilled: Limit Overcommit Error

A Limit Overcommit error may occur when the sum of the pod memory limits exceeds the node’s available memory. For example, if a node has 6 GB of available memory, it can hold six pods that each require 1 GB of memory. However, you run the risk of running out of memory if even one of those pods is set up with a limit of, say, 1.1 GB. All it takes for Kubernetes to start killing pods is for that one pod to experience a spike in traffic or an unidentified memory leak.
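The arithmetic behind this scenario can be sketched in a few lines of Python. This is only an illustration: the function name “is_overcommitted” is hypothetical, and the numbers are the 6 GB node and 1 GB pods from the example above.

```python
def is_overcommitted(node_memory_gb, pod_limits_gb):
    """Return True when the sum of the pod memory limits exceeds node memory."""
    return sum(pod_limits_gb) > node_memory_gb


# Six pods limited to 1 GB each fit exactly on a 6 GB node.
print(is_overcommitted(6.0, [1.0] * 6))          # False

# Raising a single limit to 1.1 GB pushes the total above 6 GB.
print(is_overcommitted(6.0, [1.0] * 5 + [1.1]))  # True
```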

OOMKilled: Container Limit Reached

Kubernetes terminates an application with an “OOMKilled—Container limit reached” error and Exit Code 137 if it has a memory leak or attempts to consume more memory than the allotted limit.

This is by far the most elementary memory error that can happen within a pod. Reaching the container limit normally impacts only one pod, unlike the Limit Overcommit error, which affects the node’s total RAM capacity.
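Exit Code 137 follows the common convention that a process killed by a signal exits with 128 plus the signal number: 137 is 128 + 9, and signal 9 is SIGKILL. The short sketch below decodes such exit codes. It assumes a POSIX system (Linux or macOS), and the function name is hypothetical. Note that SIGKILL on its own does not prove an out-of-memory kill. The OOMKilled reason reported by Kubernetes is what confirms it.

```python
import signal


def signal_from_exit_code(exit_code):
    """Return the signal name for exit codes above 128, or None otherwise."""
    if exit_code <= 128:
        return None
    try:
        return signal.Signals(exit_code - 128).name
    except ValueError:
        # The number does not correspond to a known signal on this system.
        return None


print(signal_from_exit_code(137))  # SIGKILL
```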

Common Causes of OOMKilled Error

You can find the typical causes of this error in the following list. Note that there are numerous additional reasons why OOMKilled errors occur and that many of these are challenging to identify and resolve:

- The container memory limit is reached because the application experiences a load that is higher than normal.

- The container memory limit is reached because the application has a memory leak.

- The node is overcommitted, which means that the total amount of memory consumed by pods exceeds the memory of the node.

How to Identify the OOMKilled Error

Check the pod status to see whether an OOMKilled error has occurred. Then, to learn more about the problem, use the “kubectl describe” or “kubectl get” command. The output of the “get pods” command, as seen in the following, lists any pod crashes that involve an OOMKilled fault.

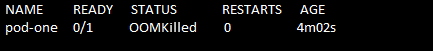

Run the “kubectl get pods” command to find the error, as in the screenshot above. For an affected pod, the status is shown as OOMKilled.

The “get pods” command returns the name of each pod, its status, how many times it has restarted, and its age. If a pod breaks because of an OOMKilled issue, Kubernetes makes the error very obvious in the pod status.
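The same check can be scripted. The following is a minimal sketch, assuming that the pod list was exported with “kubectl get pods -o json” and loaded into a Python dictionary. The helper name “find_oomkilled” and the sample data are made up for illustration. The sketch relies on the “state” and “lastState” entries that Kubernetes reports for each container status.

```python
def find_oomkilled(pod_list):
    """Return (pod, container) pairs whose current or previous
    termination reason is OOMKilled."""
    hits = []
    for pod in pod_list.get("items", []):
        pod_name = pod["metadata"]["name"]
        for cs in pod.get("status", {}).get("containerStatuses", []):
            for key in ("state", "lastState"):
                terminated = cs.get(key, {}).get("terminated")
                if terminated and terminated.get("reason") == "OOMKilled":
                    hits.append((pod_name, cs["name"]))
                    break  # report each container only once
    return hits


# Made-up sample shaped like the output of "kubectl get pods -o json".
sample = {
    "items": [
        {
            "metadata": {"name": "web-1"},
            "status": {"containerStatuses": [{
                "name": "app",
                "state": {"waiting": {"reason": "CrashLoopBackOff"}},
                "lastState": {"terminated": {"reason": "OOMKilled",
                                             "exitCode": 137}},
            }]},
        },
        {
            "metadata": {"name": "web-2"},
            "status": {"containerStatuses": [{
                "name": "app",
                "state": {"running": {}},
                "lastState": {},
            }]},
        },
    ]
}

print(find_oomkilled(sample))  # [('web-1', 'app')]
```

Checking “lastState” as well as “state” matters because a restarted container may already be running or waiting again by the time you look.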

How to Solve the OOMKilled Error?

Let’s now examine a solution to the OOMKilled error.

First, gather the data and save it for later use. To do so, run the “kubectl describe pod” command and save its output to a text file. The executed command is shown as follows:

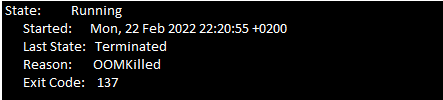
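If you prefer to script this step, the sketch below builds the describe command and writes its output to a file. It assumes that “kubectl” is installed and configured for your cluster. The pod name “my-pod”, the namespace default, and both function names are placeholders for illustration.

```python
import subprocess


def describe_command(pod_name, namespace="default"):
    """Build the kubectl command line as a list of arguments."""
    return ["kubectl", "describe", "pod", pod_name, "--namespace", namespace]


def save_describe_output(pod_name, path, namespace="default"):
    """Run kubectl describe and save its output to a text file."""
    result = subprocess.run(
        describe_command(pod_name, namespace),
        capture_output=True, text=True, check=True,
    )
    with open(path, "w", encoding="utf-8") as fh:
        fh.write(result.stdout)


# Print the command only; call save_describe_output() against a real cluster.
print(" ".join(describe_command("my-pod")))
```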

Next, look through the pod events for Exit Code 137. Look for the following message in the events section of the saved text file:

Due to memory constraints, the container is terminated with Exit Code 137.
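Searching the saved file can also be automated. The sketch below assumes the usual layout of “kubectl describe pod” output, where a terminated container is typically reported with “Reason:” and “Exit Code:” lines under its State or Last State section. The sample text and the function name are illustrative, and the exact layout may differ between kubectl versions.

```python
import re


def oom_evidence(describe_text):
    """Report whether the text contains the OOMKilled reason and exit code 137."""
    return {
        "reason_oomkilled": re.search(r"Reason:\s+OOMKilled", describe_text) is not None,
        "exit_code_137": re.search(r"Exit Code:\s+137\b", describe_text) is not None,
    }


# Made-up excerpt in the style of "kubectl describe pod" output.
sample_text = """\
    Last State:     Terminated
      Reason:       OOMKilled
      Exit Code:    137
"""

print(oom_evidence(sample_text))
# {'reason_oomkilled': True, 'exit_code_137': True}
```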

There are two main reasons for the OOMKilled error. The first is that the pod is terminated because the container limit was reached, and the second is that the pod is terminated because the node is overcommitted. Examine the pod’s recent events to determine which of the two caused the problem.

The previous section helps you identify the OOMKilled error. Once you have done that, apply the following considerations.

If the pod is terminated when the container limit is reached, these points should be kept in mind:

- Analyze whether your application needs more memory. For instance, if the application is a website that gets more traffic, it can require more memory than was first planned. In this case, increasing the container’s memory limit in the pod specification solves the issue.

- If memory usage increases unexpectedly, the program may have a memory leak. In that case, debug the application and fix the leak. Increasing the memory limit is not a recommended solution here, because the leaking application simply consumes more and more resources on the nodes.
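One rough way to tell these two cases apart is to look at how memory usage changes over time. The sketch below is a simple heuristic, not a diagnosis. It assumes that you have collected periodic memory samples (in MiB) from your monitoring system, and the function name and the 0.9 threshold are arbitrary choices for illustration. Steady growth across nearly all samples hints at a leak, while usage that rises and falls with traffic points to load.

```python
def looks_like_leak(samples_mib, min_growth_ratio=0.9):
    """Heuristic: True when nearly every sample is higher than the one before."""
    if len(samples_mib) < 3:
        return False  # too little data to say anything
    pairs = zip(samples_mib, samples_mib[1:])
    increases = sum(1 for previous, current in pairs if current > previous)
    return increases / (len(samples_mib) - 1) >= min_growth_ratio


# Usage climbs at every sample: a possible leak.
print(looks_like_leak([100, 120, 140, 160, 180, 200]))  # True

# Usage rises and falls with traffic: more likely load.
print(looks_like_leak([100, 180, 110, 170, 105, 175]))  # False
```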

If the pod termination reason is node overcommit, you can follow these guidelines:

- Overcommitment on a node can occur because pods are allowed to be scheduled on a node as long as their memory requests fit in the node’s available memory, even when their memory limits add up to more than that.

- Find out why Kubernetes terminated the pod with the OOMKilled error, then update the memory request and limit values to prevent the node from being overcommitted.
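When you adjust these values, it helps to compare the total requests and the total limits with the node’s memory, since Kubernetes schedules pods based on their requests while the limits may add up to more. The sketch below is a simplified illustration. The function names and sample values are hypothetical, and the quantity parser only understands the Ki, Mi, and Gi suffixes and plain byte counts.

```python
UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def to_bytes(quantity):
    """Convert a memory quantity such as '512Mi' or '1Gi' to bytes.
    Simplified: handles only Ki, Mi, Gi, and plain integers."""
    for suffix, factor in UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[:-len(suffix)]) * factor
    return int(quantity)


def node_summary(node_memory, containers):
    """Compare total memory requests and limits with the node's memory."""
    capacity = to_bytes(node_memory)
    total_requests = sum(to_bytes(c["requests"]) for c in containers)
    total_limits = sum(to_bytes(c["limits"]) for c in containers)
    return {
        "requests_fit": total_requests <= capacity,
        "limits_overcommitted": total_limits > capacity,
    }


# Six containers request 1Gi each; one has a slightly higher limit.
containers = [{"requests": "1Gi", "limits": "1Gi"} for _ in range(5)]
containers.append({"requests": "1Gi", "limits": "1126Mi"})

print(node_summary("6Gi", containers))
# {'requests_fit': True, 'limits_overcommitted': True}
```

In this example all six pods can be scheduled, yet the node is overcommitted on limits, which is exactly the situation to look for when you tune the values.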

Conclusion

To summarize, the OOMKilled error is a simple but common cause of pod crashes. Having an appropriate resource allocation plan for your Kubernetes installations is the best way to handle this problem. By carefully analyzing the application’s resource utilization and the resources available in the K8s cluster, users can define resource limits that do not affect the functionality of the program or the node.