This article covers Kubernetes restart policies. A restart policy does not restart Kubernetes itself: it controls whether and when the containers in a pod are restarted after they exit. We first look at the available policies and then at several ways to restart a pod yourself. Cluster administrators often impose strict standards on a cluster to ensure compliance, and they should also follow the best practices suggested for restarting workloads.

What is the Kubernetes Restart Policy?

Each Kubernetes pod follows a defined lifecycle. It begins in the “Pending” phase and, once at least one of its primary containers has started successfully, moves to the “Running” phase. Depending on whether the containers in the pod succeed or fail, the pod then ends in the “Succeeded” or “Failed” phase.

The restart policy is set in the pod specification (the restartPolicy field) and applies to all containers in the pod. Three options can be used:

Always

Every time a container terminates, Kubernetes restarts it, regardless of the exit code, since the pod needs to be active at all times. This is the default policy.

OnFailure

The container is restarted only if it exits with a return code other than 0. Containers that return 0 (success) are not restarted.

Never

The container is never restarted, regardless of how it exited.
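As a minimal sketch of how one of these values is set in practice, the shell function below prints a Pod manifest with the chosen policy. The function name, the pod name "restart-demo", and the busybox command that deliberately exits with code 1 are illustrative assumptions, not part of any existing setup.

```shell
# Print a Pod manifest that uses the given restart policy.
# The pod name and the failing busybox command are hypothetical examples.
print_pod_with_policy() {
  policy="$1"
  case "$policy" in
    Always|OnFailure|Never) ;;
    *) echo "unknown restart policy: $policy" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: restart-demo
spec:
  restartPolicy: $policy   # applies to every container in the pod
  containers:
  - name: worker
    image: busybox
    command: ["sh", "-c", "echo working; sleep 5; exit 1"]
EOF
}

# Usage against a real cluster:
#   print_pod_with_policy OnFailure | kubectl apply -f -
#   kubectl get pod restart-demo --watch
```

With OnFailure or Always, the restart count of this pod should keep growing because the container always exits with 1; with Never, the pod should end in the Failed phase.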

Now, in the following section, we will discuss how you can restart a pod.

How to Restart a Pod in Kubernetes?

To restart a Kubernetes pod, you issue commands with the kubectl tool, which talks to the Kubernetes API server. Let us explore the available options:

Restarting a Container within a Pod

A pod may hold several containers. When you connect to a pod without naming a container, however, you are connected to its primary (first) container. If you have defined more than one container, you can connect to any of them by naming it explicitly.

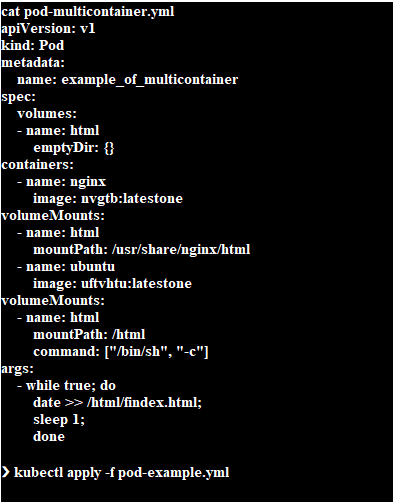

You can see below a multi-container pod specification example:

This describes a shared volume and two containers. The HTML file will be served by the NGINX container and every second the Ubuntu container will add a date stamp to the HTML file.
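A specification along these lines can be sketched as follows. The pod name "web-with-clock", the volume name "html", and the mount paths are assumptions chosen for illustration; only the overall shape (one shared volume, an NGINX container, an Ubuntu container appending the date every second) comes from the description above.

```shell
# Print a two-container Pod manifest with a shared emptyDir volume.
# All names and paths are hypothetical placeholders.
print_multi_container_pod() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: web-with-clock
spec:
  volumes:
  - name: html
    emptyDir: {}
  containers:
  - name: nginx            # first container: serves the HTML file
    image: nginx
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html
  - name: ubuntu           # second container: appends a date stamp every second
    image: ubuntu
    volumeMounts:
    - name: html
      mountPath: /html
    command: ["/bin/sh", "-c"]
    args:
    - while true; do date >> /html/index.html; sleep 1; done
EOF
}

# Usage against a real cluster:
#   print_multi_container_pod | kubectl apply -f -
```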

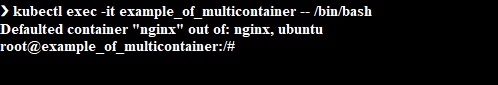

Since you did not specify which container to connect to, kubectl automatically chooses the first one (NGINX) when you attempt to connect to that pod. The screenshot is attached below:

You can now attempt to terminate the PID 1 process inside the currently active container. Run the following commands as root to accomplish this:

You can also make use of the kubectl tool described below:
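A minimal sketch of the kubectl variant, assuming the container image ships /bin/sh and that its PID 1 process handles SIGTERM (NGINX does; a process without a handler may ignore the signal). The function name and the example pod and container names are hypothetical.

```shell
# Send SIGTERM to PID 1 inside one container of a pod.
# Arguments: pod name, container name.
kill_container_pid1() {
  pod="$1"
  container="$2"
  # -c selects the container; without it kubectl uses the default (normally the first) one.
  kubectl exec "$pod" -c "$container" -- /bin/sh -c 'kill 1'
}

# Usage against a real cluster (names are hypothetical):
#   kill_container_pid1 web-with-clock nginx
```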

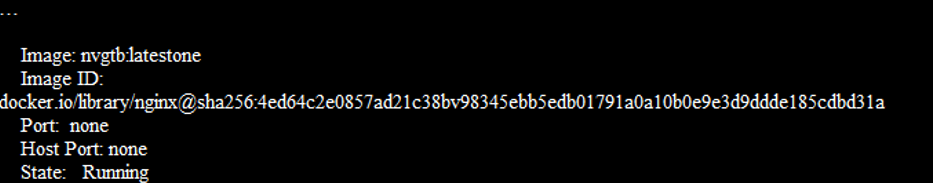

According to the pod specification, Kubernetes will now attempt to restart the terminated container. To confirm this, use the “describe” command as follows:

Here is the result of the above command:

The present state is “Running,” while the previous state was “Terminated.” This means that the container was restarted. However, not all containers allow root access, which is why this method might not always be usable.
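The check described above can be sketched as a small helper. It assumes a pod name supplied by the caller; the function name is hypothetical. Besides the full description, it prints each container's restart count from the pod status, which is a compact way to see that a restart happened.

```shell
# Show the container states of a pod and the restart count per container.
# Argument: pod name.
show_restart_info() {
  pod="$1"
  # Full description, including each container's State and Last State.
  kubectl describe pod "$pod"
  # Compact view: one line per container with its name and restart count.
  kubectl get pod "$pod" \
    -o jsonpath='{range .status.containerStatuses[*]}{.name}{"\t"}{.restartCount}{"\n"}{end}'
}

# Usage against a real cluster (pod name is hypothetical):
#   show_restart_info web-with-clock
```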

Restarting a Pod by Scaling

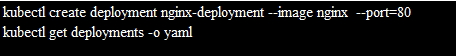

Scaling the replica count to 0 and then back up to 1 is the simplest way to restart a pod. Because the scale command cannot be used on bare pods, you must create a Deployment instead. Here’s an easy way to accomplish that:

Next, scale the Deployment to 0 and then back to 1. Doing this terminates the pod and then redeploys it to the cluster:

The replicas are set to 1 as you can see in this image.

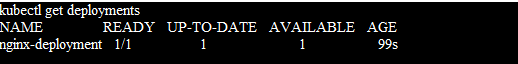

To view the deployment details, we have now used “kubectl get deployments.” The following is a list of both the command and the result:
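The scaling steps in this section can be sketched as one helper. The function name and the Deployment name "web" are hypothetical, and the replica count to restore defaults to 1 to match the text.

```shell
# Restart the pods of a Deployment by scaling to zero and back up,
# then list the Deployment to confirm the replica count.
# Arguments: deployment name, replica count to restore (defaults to 1).
restart_by_scaling() {
  deployment="$1"
  replicas="${2:-1}"
  kubectl scale deployment "$deployment" --replicas=0 &&
    kubectl scale deployment "$deployment" --replicas="$replicas" &&
    kubectl get deployments "$deployment"
}

# Usage against a real cluster (the name "web" is hypothetical):
#   kubectl create deployment web --image=nginx
#   restart_by_scaling web
```

Note that the workload is unavailable between the two scale commands, since no pod is running while the replica count is 0.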

Restarting a Pod by Deleting It and Redeploying It

Using the “kubectl delete” command, you can delete a pod and then redeploy it. However, this approach is rather disruptive, so it is not advised.

Restarting a Pod Using Rollout

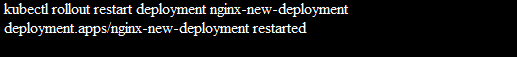

With the methods described above, you must either delete the existing pod and then create a new one, or scale the replica count down and then up. Since Kubernetes version 1.15, you can instead restart a Deployment in a rolling fashion. This is the suggested procedure for restarting a pod. Simply enter the following command to get started:

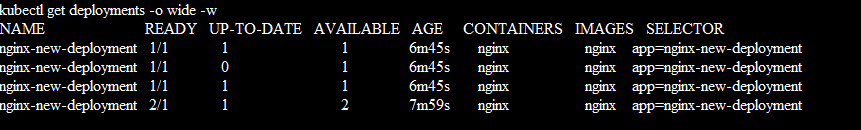

Now, if you keep an eye on the deployment status on a different terminal, you will notice the flow of events as follows:

Kubernetes spins up a new replica of the pod and, once it is healthy, scales down the previous replica of the Deployment. The outcome is the same, except that in this approach Kubernetes handles the underlying orchestration.
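A minimal sketch of the rolling restart, combined with a status check that waits for the rollout to finish. The function name and the Deployment name in the usage line are hypothetical.

```shell
# Restart a Deployment in a rolling fashion and wait for completion.
# Argument: deployment name.
rolling_restart() {
  deployment="$1"
  kubectl rollout restart "deployment/$deployment" &&
    kubectl rollout status "deployment/$deployment"
}

# Usage against a real cluster (the name "web" is hypothetical):
#   rolling_restart web
# To watch the pods being replaced from a second terminal:
#   kubectl get pods --watch
```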

How Can Kubernetes Pods Be Restarted in Different Ways?

Let us begin with Docker containers. A Docker container can be restarted with the following command:
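A minimal sketch of that step, wrapped in a shell function; the function name and the container name in the usage line are hypothetical placeholders.

```shell
# Restart a Docker container by name or ID.
restart_docker_container() {
  docker restart "$1"
}

# Usage (container name is hypothetical):
#   restart_docker_container my-nginx
```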

But in Kubernetes, there is no comparable command to restart pods, especially if there is no specified YAML file. As an alternative, you can restart Kubernetes pods using kubectl commands, which are listed below:

The Kubectl Scale Command

One method is to use the kubectl scale command. This changes the number of replicas of the pod that needs to be restarted. Below is an example command that sets the number of replicas to two:

Rollout Restart Command

Here, we will demonstrate how to use the rollout restart command to restart Kubernetes pods:

The command tells the controller to terminate the pods one at a time. It then uses the ReplicaSet to scale up new pods. This process continues until every pod is newer than the moment the restart was issued.

The Delete Pod Command

This section covers how to use the delete command to restart Kubernetes pods. As you can see in this image, we used the following command to delete the pod API object:

Because the Kubernetes API is declarative, deleting the pod object contradicts the expected state. The pod is therefore recreated to restore consistency with the expected state.

The previous command restarts one pod at a time. To restart several pods, refer to the attached command:

This command restarts the pods by deleting their entire ReplicaSet, which is then recreated from scratch along with its pods.
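Both delete variants can be sketched as follows. The function names and the resource names in the usage lines are hypothetical. The sketch also assumes that a controller owns the deleted objects: a Deployment for the ReplicaSet, and a ReplicaSet or similar controller for the pod. A bare pod or a standalone ReplicaSet would not come back after deletion.

```shell
# Delete a single pod; its controller creates a replacement.
delete_pod() {
  kubectl delete pod "$1"
}

# Delete a ReplicaSet together with its pods; the owning Deployment recreates both.
delete_replicaset() {
  kubectl delete replicaset "$1"
}

# Usage against a real cluster (names are hypothetical):
#   delete_pod web-5d4f8c7b9-abcde
#   delete_replicaset web-5d4f8c7b9
```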

Conclusion

This post covered the Kubernetes restart policies and several ways to restart a pod, illustrating each with examples. Try out these commands yourself and see what output they generate.