Wget and curl are powerful Linux commands for downloading and uploading files. Wget is a simple yet reliable utility for downloading files. It offers features like download speed limits, resuming interrupted downloads, fetching several files in one run, basic interaction with REST APIs, encrypted (HTTPS) downloads, and more.

On the other hand, curl is a more versatile utility for transferring data in various formats such as CSV, JSON, and XML. Along with downloading files, it also lets you upload files and interact with APIs. So, if you want to use wget and curl to download files from the command line, this tutorial is for you. Here, you will get brief details on the “wget” and “curl” commands in Linux.

How to Use Wget and Curl to Download the Files from the Command Line

This section is divided into two parts that cover the “wget” and “curl” commands in more detail:

1. How to Use Wget to Download the Files in Linux

With the “wget” command line utility, you can deal with slow downloading speed, interrupted downloads, unstable internet connections, and other issues. For instance, if your download is interrupted, wget can resume it and retrieve the file without restarting the download. To use wget, go through the following steps:

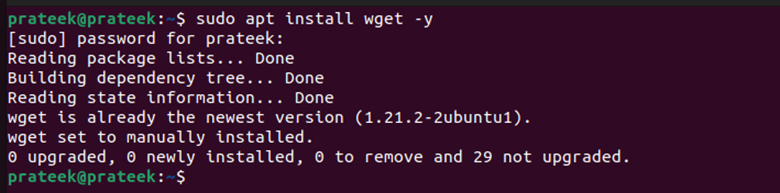

First, ensure that your system contains the “wget” utility. You can install it by running the following command:

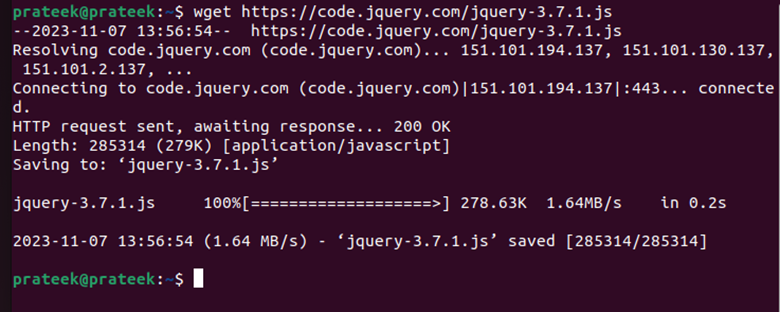

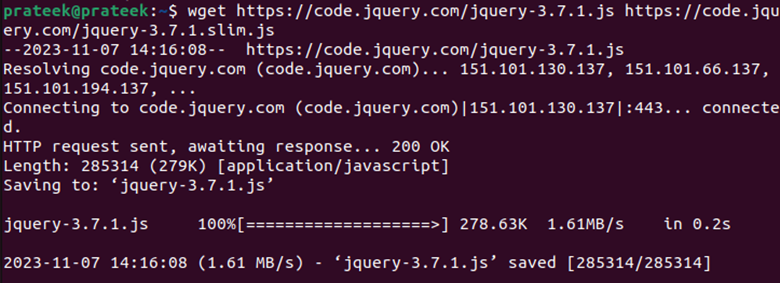

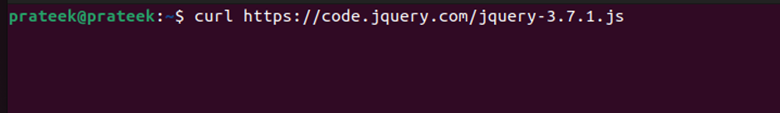
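In text form, the check-and-install step could look like the sketch below. The install commands are common defaults and an assumption about your distribution, and the variable `wget_status` is only an illustrative name.

```shell
# Check whether wget is available; if not, list common install commands.
if command -v wget >/dev/null 2>&1; then
  wget_status="installed"
  wget --version | head -n 1    # first line of the version banner
else
  wget_status="missing"
  # Pick the line that matches your distribution:
  echo "Debian/Ubuntu: sudo apt install wget"
  echo "Fedora/RHEL:   sudo dnf install wget"
  echo "Arch Linux:    sudo pacman -S wget"
fi
echo "wget is $wget_status"

# General syntax once wget is available:
#   wget [options] URL
```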

To download a file, copy its URL and enter it in the terminal using the previous syntax. For example, let’s download the latest version of “jQuery” using the following command:

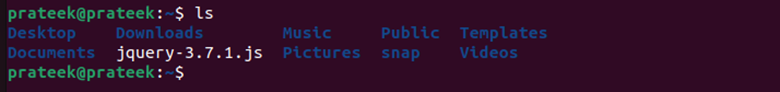

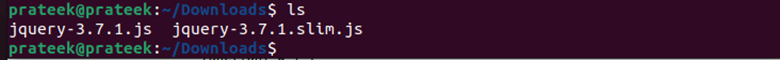
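A minimal sketch of such a download follows. The URL is an assumption chosen for illustration and may not be the newest release, so check code.jquery.com for the current version.

```shell
# Example URL (an assumption for illustration, not necessarily the latest release).
url="https://code.jquery.com/jquery-3.7.1.min.js"

# With no options, wget saves the file in the current directory
# under the last path component of the URL.
saved_as="${url##*/}"

wget "$url" || echo "Download failed; check the URL and your connection" >&2
echo "Expected local file: $saved_as"
```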

You can check the downloaded file by running the following command:

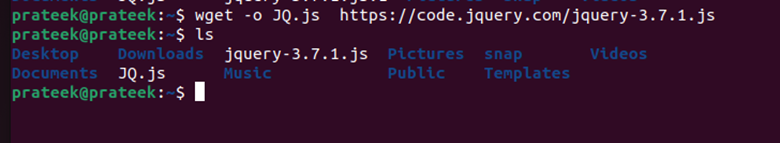
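One way to run that check is sketched below. The filename is assumed from the example URL used in this tutorial's sketches, so adjust it to whatever you downloaded.

```shell
file="jquery-3.7.1.min.js"   # assumed name of the downloaded file

# -s is true only if the file exists and is not empty.
if [ -s "$file" ]; then
  check="present"
  ls -lh "$file"             # show size and modification time
else
  check="absent"
  echo "$file is missing or empty" >&2
fi
```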

To set a filename when you download a file, add “-O <Filename>” (uppercase O) to the command. Lowercase “-o” does something different: it writes wget's log messages to a file.
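As a short sketch, choosing the local filename might look like this. The URL and the name `jquery.min.js` are illustrative assumptions.

```shell
url="https://code.jquery.com/jquery-3.7.1.min.js"   # example URL (assumption)
out="jquery.min.js"                                  # hypothetical local name

# -O (uppercase) sets the output filename.
# Lowercase -o is a different option: it sets wget's log file.
wget -O "$out" "$url" || echo "Download failed" >&2
```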

Wget can download several files with a single command: list the URLs separated by spaces, and wget fetches them one after another.

For instance, let’s download two versions of “jQuery” by executing the following command:

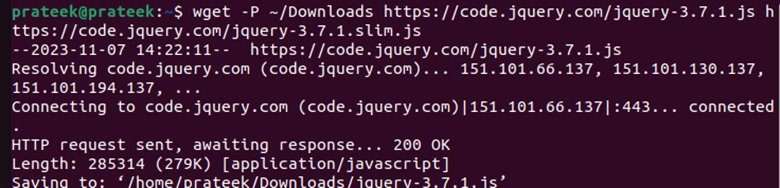
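A sketch of a two-file download is shown here. Both URLs are assumptions picked for illustration, and the file `urls.txt` in the final comment is hypothetical.

```shell
# Two example jQuery releases (URLs are assumptions for illustration).
url_a="https://code.jquery.com/jquery-3.7.1.min.js"
url_b="https://code.jquery.com/jquery-3.6.4.min.js"

# wget processes the URLs in the order given, one after another.
wget "$url_a" "$url_b" || echo "At least one download failed" >&2

# For a longer list, put one URL per line in a text file and run:
#   wget -i urls.txt
```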

To store the files in a specific directory, add the “-P <Directory>” option to the command.
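For example, saving into a separate folder could be sketched as follows. The directory name `downloads` and the URL are illustrative assumptions.

```shell
url="https://code.jquery.com/jquery-3.7.1.min.js"   # example URL (assumption)
dest="downloads"                                     # hypothetical target directory

# -P (--directory-prefix) saves into the given directory,
# creating it if it does not exist yet.
wget -P "$dest" "$url" || echo "Download failed" >&2

target="$dest/${url##*/}"
echo "Expected local file: $target"
```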

If a download is interrupted due to some issue, you can always resume it as long as you run the command in the same folder as the incompletely downloaded file. Just add the “-c” option to the same “wget” command.

Wget then reconnects to the server and continues from the point where the partial file ends.
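A minimal sketch of resuming follows, assuming the partial file came from the example URL below. The URL is an illustration, not a value from the original screenshots.

```shell
url="https://code.jquery.com/jquery-3.7.1.min.js"   # example URL (assumption)
partial="${url##*/}"                                 # name of the partial file

# -c (--continue) appends to an existing partial file instead of starting over.
# Run this in the directory that holds the partial download.
wget -c "$url" || echo "Resume failed; rerun the same command to try again" >&2
echo "Resumed file: $partial"
```

If no partial file exists yet, the same command simply starts a fresh download.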


2. How to Use Curl in Linux

The “curl” command lets you upload and download data from servers using protocols like HTTPS, SFTP, SMTP, FTP, TFTP, IMAP, SCP, LDAP, TELNET, FTPS, and others. Furthermore, curl is easy to script, so you can automate queries and increase your productivity.

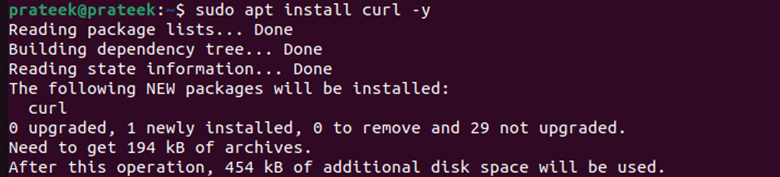

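To use the “curl” command, first make sure that it is installed. Many distributions ship it by default; otherwise, install the “curl” package with your package manager (for example, “sudo apt install curl” on Debian and Ubuntu).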
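The check could be sketched as shown below. The install commands are common defaults and an assumption about your distribution, and `curl_status` is only an illustrative variable name.

```shell
# Check whether curl is available; if not, list common install commands.
if command -v curl >/dev/null 2>&1; then
  curl_status="installed"
  curl --version | head -n 1    # first line of the version banner
else
  curl_status="missing"
  # Pick the line that matches your distribution:
  echo "Debian/Ubuntu: sudo apt install curl"
  echo "Fedora/RHEL:   sudo dnf install curl"
  echo "Arch Linux:    sudo pacman -S curl"
fi
echo "curl is $curl_status"
```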

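Downloading a file using curl is similar to downloading it with wget, as shown in the previous section. The main difference is that curl prints the data to the terminal by default, so add “-O” to keep the remote filename or “-o <Filename>” to choose your own.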

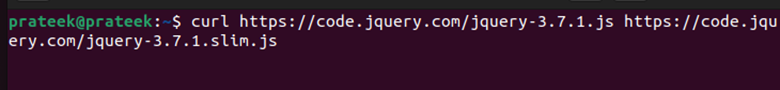
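Both ways of saving a file with curl are sketched below. The URL and the local name `jquery.min.js` are assumptions for illustration.

```shell
url="https://code.jquery.com/jquery-3.7.1.min.js"   # example URL (assumption)
out="jquery.min.js"                                  # hypothetical local name

# -O keeps the remote filename; without -O or -o, curl prints
# the data to the terminal instead of saving it.
curl -O "$url" || echo "Download failed" >&2

# -o chooses the local filename; -L follows redirects if the server sends any.
curl -L -o "$out" "$url" || echo "Download failed" >&2
```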

To get the data from multiple URLs, specify those URLs separated by a space in a single “curl” command, repeating “-O” before each URL if you want every file saved:

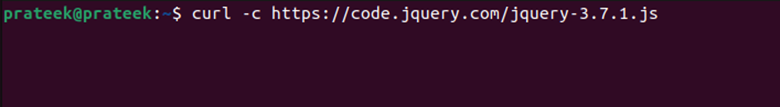
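A sketch with two URLs might look like this. Both URLs are assumptions picked for illustration.

```shell
# Two example jQuery releases (URLs are assumptions for illustration).
url_a="https://code.jquery.com/jquery-3.7.1.min.js"
url_b="https://code.jquery.com/jquery-3.6.4.min.js"

# Each -O applies to one URL, so repeat it once per file.
curl -O "$url_a" -O "$url_b" || echo "At least one download failed" >&2
```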

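You can resume interrupted downloads by adding “-C -” to the “curl” command; the trailing dash tells curl to work out the resume offset from the partial file. (Lowercase “-c” is a different option that writes cookies to a file.) Just make sure that the incompletely downloaded file stays in the same location.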
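Resuming with curl could be sketched as follows, assuming the partial file came from the example URL below and sits in the current directory.

```shell
url="https://code.jquery.com/jquery-3.7.1.min.js"   # example URL (assumption)
partial="${url##*/}"                                 # name of the partial file

# "-C -" lets curl inspect the existing output file and continue from its end.
# -O must name the same file that the interrupted download was writing to.
curl -C - -O "$url" || echo "Resume failed; rerun the same command to try again" >&2
echo "Resumed file: $partial"
```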

Conclusion

Wget and curl are both excellent tools for transferring data from one source to another, and each offers unique features. That’s why we used different examples to explain both wget and curl briefly. Besides downloading a single file, we explained the simple commands to download multiple files and resume incomplete downloads. Moreover, you might prefer curl if you work in cloud computing, where uploading data and interacting with APIs are common tasks.