Applications built on Large Language Models (LLMs) can be developed using the LangChain framework and its dependencies. These models are trained on large amounts of natural-language data so they can capture the complexities of the language and generate text accordingly. The training phase is critical because it strongly affects how well the model performs.

This guide will illustrate the process of using transformation chains from LangChain.

How to Use Transformation Chains in LangChain?

Data transformation is useful because users rarely have time to read complete documents: a transformation chain can reduce a document to smaller chunks, and the model can then generate a summary so the user gets an idea of the document's content.

To learn the process of using the transformation chains in LangChain, simply go through the following guide:

Step 1: Install Modules

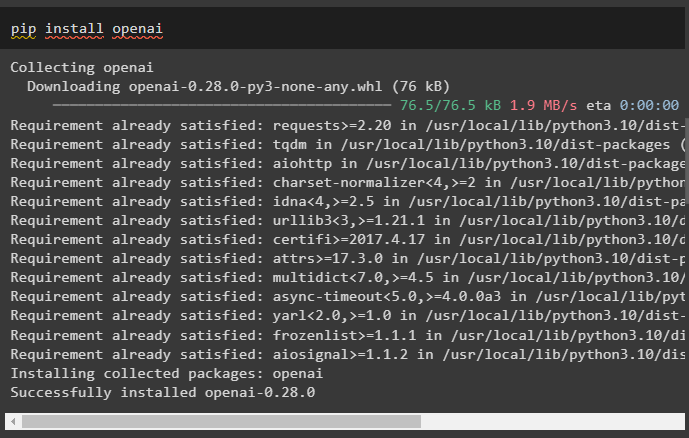

First, install the LangChain framework to get the dependencies needed for building transformation chains:

pip install langchain

Install the OpenAI module to get the OpenAI libraries used by the chains in LangChain:

pip install openai

Now, set up the OpenAI environment by getting the API key from the OpenAI account and entering it when prompted:

import os
import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
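When a notebook is re-run, prompting for the key every time can get tedious. The following is a minimal sketch, not part of the original steps, of a helper that prompts only when the variable is not already set. The function name ensure_api_key and its parameters are hypothetical:

```python
import os
from typing import Callable


def ensure_api_key(prompt: Callable[[str], str], name: str = "OPENAI_API_KEY") -> str:
    """Return the key stored in the environment, prompting only if it is missing."""
    key = os.environ.get(name)
    if not key:
        # Ask the user once and store the answer for later cells
        key = prompt("OpenAI API Key:")
        os.environ[name] = key
    return key


# In a notebook, pass getpass.getpass so the key is not echoed on the screen:
#   import getpass
#   ensure_api_key(getpass.getpass)
```

Passing the prompt function as an argument keeps the helper easy to try out without typing a real key.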

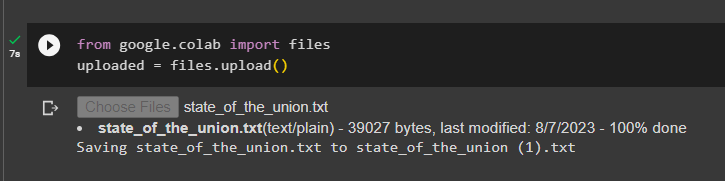

After setting up the environment to build the chains in LangChain, upload the document using the files library (available in Google Colab notebooks):

from google.colab import files

uploaded = files.upload()

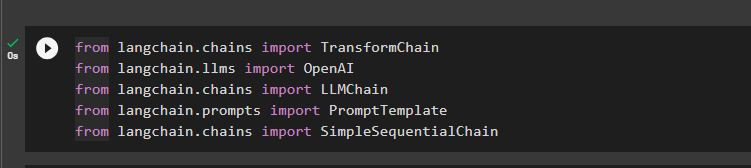

Step 2: Import Libraries

Now, import the required libraries such as TransformChain, LLMChain, and OpenAI to use transformation chains:

from langchain.llms import OpenAI
from langchain.chains import TransformChain
from langchain.chains import LLMChain
from langchain.prompts import PromptTemplate
from langchain.chains import SimpleSequentialChain

Step 3: Using Transformation Chains

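Read the document that was uploaded earlier into a variable so it can be split into smaller chunks:

# "state_of_the_union.txt" is an assumed name for the uploaded file
with open("state_of_the_union.txt") as f:
    state_of_the_union = f.read()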
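In Google Colab, files.upload() returns a dictionary keyed by file name whose values are the uploaded bytes, so the text can also be taken from that dictionary directly. The sketch below assumes a Colab-style upload and uses a hand-made dictionary as a stand-in for the real return value. The helper name first_uploaded_text is hypothetical:

```python
def first_uploaded_text(uploaded: dict, encoding: str = "utf-8") -> str:
    """Decode the first file in a {filename: bytes} mapping into a string."""
    if not uploaded:
        raise ValueError("no files were uploaded")
    name = next(iter(uploaded))
    return uploaded[name].decode(encoding)


# Stand-in for the value returned by files.upload() (assumed file name and content)
sample_upload = {"state_of_the_union.txt": b"First paragraph.\n\nSecond paragraph."}
text = first_uploaded_text(sample_upload)
print(text)
```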

Define a transformation function that keeps only the first three paragraphs of the text, then wrap it in TransformChain() with its input and output variables:

def transform_func(inputs: dict) -> dict:
    #keeping only the first three paragraphs of the input text
    text = inputs["text"]
    shortened_text = "\n\n".join(text.split("\n\n")[:3])
    return {"output_text": shortened_text}

#building the chain which can be used to transform the documents
transform_chain = TransformChain(
    input_variables=["text"],
    output_variables=["output_text"],
    #configuring the TransformChain() with the function that performs the transformation
    transform=transform_func
)
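The transformation itself is plain Python, so it can be tried without LangChain or an API key. The following sketch runs the same splitting logic on a made-up five-paragraph string (the sample text is an assumption used only for illustration):

```python
def transform_func(inputs: dict) -> dict:
    # Split on blank lines and keep only the first three paragraphs
    text = inputs["text"]
    shortened_text = "\n\n".join(text.split("\n\n")[:3])
    return {"output_text": shortened_text}


# Made-up document with five short paragraphs
sample = "\n\n".join(f"Paragraph {i}." for i in range(1, 6))
result = transform_func({"text": sample})
print(result["output_text"])
```

Only the first three paragraphs remain in the "output_text" key, which is the value the next chain receives.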

Step 4: Building Prompt Template

After loading the data, configure the prompt template and build the LLMChain() from the OpenAI model and the template/structure of the query:

template = """Summarize this text:

{output_text}

Summary:"""

prompt = PromptTemplate(input_variables=["output_text"], template=template)
llm_chain = LLMChain(llm=OpenAI(), prompt=prompt)
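To see roughly what text the model receives, the placeholder substitution can be imitated with Python's built-in str.format. This is only an approximation of what PromptTemplate does with the {output_text} variable. The opening line "Summarize this text:" and the sample sentence are assumptions made for the sketch:

```python
# Template with one placeholder; the instruction line is an assumed opening
template = """Summarize this text:

{output_text}

Summary:"""

# Fill the placeholder the way the shortened document would be inserted
filled = template.format(output_text="LangChain chains can be composed.")
print(filled)
```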

Initialize the sequential_chain variable by combining the transformation chain and the LLM chain, so that the output of the first becomes the input of the second:

sequential_chain = SimpleSequentialChain(chains=[transform_chain, llm_chain])
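The idea behind a simple sequential chain is that each step takes one input and hands its single output to the next step. The sketch below imitates that flow in plain Python. It is not LangChain's implementation, and fake_summarize is a hypothetical stand-in for the LLM call:

```python
from typing import Callable, Sequence


def run_sequentially(steps: Sequence[Callable[[str], str]], text: str) -> str:
    """Feed the output of each step into the next one and return the last output."""
    for step in steps:
        text = step(text)
    return text


def keep_first_three_paragraphs(text: str) -> str:
    # Same shortening logic as the transformation step
    return "\n\n".join(text.split("\n\n")[:3])


def fake_summarize(text: str) -> str:
    # Hypothetical stand-in for the LLM: reports how many paragraphs it received
    paragraphs = text.split("\n\n")
    return f"Summary of {len(paragraphs)} paragraphs"


# Made-up document with five short paragraphs
document = "\n\n".join(f"Paragraph {i}." for i in range(1, 6))
output = run_sequentially([keep_first_three_paragraphs, fake_summarize], document)
print(output)
```

Because the shortening step runs first, the summarizing step only ever sees three paragraphs, which is the reason for placing the transformation ahead of the model.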

Step 5: Running the Chain

Finally, run the chain on the document loaded earlier to get a summary of its first paragraphs on the screen:

sequential_chain.run(state_of_the_union)

That is all about using transformation chains in LangChain.

Conclusion

To use transformation chains in LangChain, install the required modules such as LangChain and OpenAI and set up the environment. After that, load the document, build a transformation chain that reduces it to smaller chunks, and combine it with an LLM chain in a sequential chain to summarize the result. These smaller chunks are easier to manage and help the user understand the document better. This post has explained how to use transformation chains in LangChain.