LangChain allows developers to use OpenAI function calling. With function calling, the model can return structured JSON that names a function and supplies its arguments, instead of only free-form text. Because this output follows a fixed format, applications can parse it reliably and act on it. Chat models can then power agents that use tools to carry out the steps needed to extract information from different sources.
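To make the JSON part concrete, here is a minimal sketch of the data involved. It assumes the legacy “functions”/“function_call” message format of the OpenAI Chat Completions API; newer API versions use “tools” and “tool_calls” instead. The get_artist_count function description and the sample reply are hypothetical and hand-written, and no API call is made. The model names a function and supplies its arguments as a JSON-encoded string, which the application decodes with json.loads():

```python
import json

# Hypothetical function description in the JSON Schema style used by
# OpenAI function calling; the name and parameters are illustrative only.
get_artist_count = {
    "name": "get_artist_count",
    "description": "Count the artists stored in the music database.",
    "parameters": {
        "type": "object",
        "properties": {
            "genre": {"type": "string", "description": "Optional genre filter."}
        },
        "required": [],
    },
}

# Hand-written example of an assistant message that requests a function call.
# Note that "arguments" arrives as a JSON-encoded string, not as a dict.
sample_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_artist_count",
        "arguments": "{\"genre\": \"Rock\"}",
    },
}


def parse_function_call(message):
    """Return (function name, decoded arguments) from a function-call message."""
    call = message["function_call"]
    return call["name"], json.loads(call["arguments"])


name, args = parse_function_call(sample_message)
print(name, args)  # get_artist_count {'genre': 'Rock'}
```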

A toolkit is a collection of related tools that an agent can use together for a specific task, such as querying a SQL database. Using a toolkit with OpenAI functions means the agent relies on OpenAI function calling to decide which of the toolkit's tools to call and with which inputs. This way, developers can build applications on large language models that answer users' questions in natural language.

Quick Outline

This post will demonstrate the following:

How to Use Toolkits With OpenAI Functions in LangChain

How to Use Toolkits With OpenAI Functions in LangChain?

LangChain lets an agent that uses OpenAI functions work with the tools in a toolkit to complete a task. The agent takes the user's input, chooses which tool to call, runs it, and uses the resulting observation to decide the next step until it can give a final answer. To learn how to use toolkits with OpenAI functions in LangChain, follow this guide:

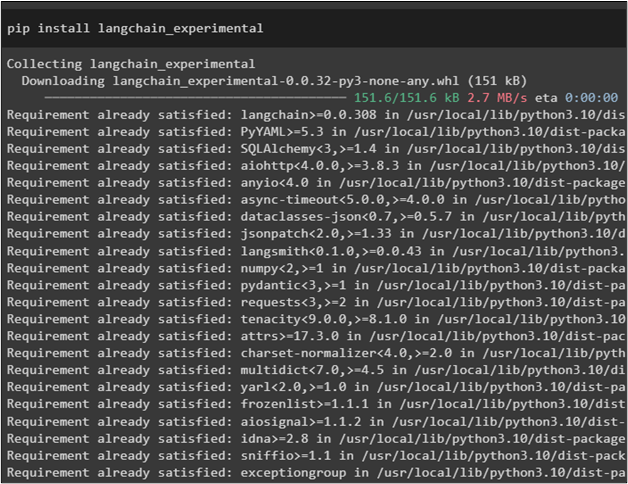
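The loop that such an agent runs can be sketched in plain Python. This is a simplified illustration, not LangChain's implementation: scripted_model() is a hypothetical stand-in for the language model that always picks the same tools, and the two tools work on made-up table sizes rather than a real database.

```python
# Made-up table sizes standing in for a real database.
TABLE_SIZES = {"Artist": 3, "Album": 5}


def list_tables(_arg):
    """Stand-in tool: return the table names as one string."""
    return ", ".join(sorted(TABLE_SIZES))


def count_rows(table):
    """Stand-in tool: return the number of rows in one table."""
    return str(TABLE_SIZES[table])


TOOLS = {"list_tables": list_tables, "count_rows": count_rows}


def scripted_model(question, observations):
    """Hypothetical stand-in for the LLM: picks the next tool or answers."""
    if not observations:
        return {"tool": "list_tables", "input": ""}
    if len(observations) == 1:
        return {"tool": "count_rows", "input": "Artist"}
    return {"final": f"There are {observations[-1]} artists."}


def run_agent(question, model, tools, max_steps=5):
    """Ask the model for an action, run the tool, record the observation, repeat."""
    observations = []
    for _ in range(max_steps):
        decision = model(question, observations)  # choose the next action
        if "final" in decision:
            return decision["final"]  # the model is ready to answer
        tool = tools[decision["tool"]]
        observations.append(tool(decision["input"]))  # feed the result back
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("How many artists are there?", scripted_model, TOOLS))
# There are 3 artists.
```

The max_steps limit keeps a confused model from looping forever, which real agent executors also guard against with an iteration limit.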

Step 1: Installing Frameworks

First of all, install the “langchain-experimental” module using the following command to get its dependencies in Python:

pip install langchain-experimental

Install the OpenAI module to get its dependencies for building the language model and calling its functions to get structured output:

pip install openai

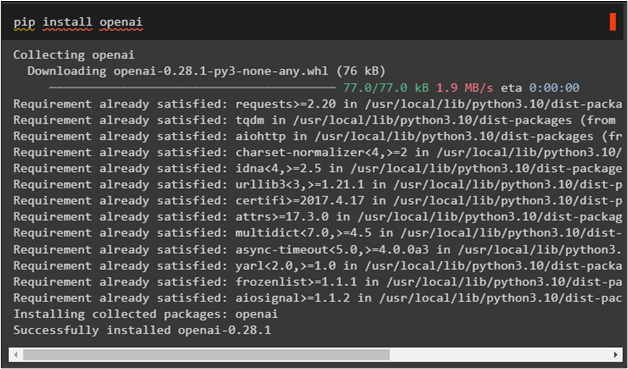

Step 2: Setting OpenAI Environment

After installing the modules, set up the environment with the API keys from your OpenAI and SerpApi accounts:

import os

import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")

os.environ["SERPAPI_API_KEY"] = getpass.getpass("SerpApi API Key:")
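Because later steps need these keys, a quick check can catch a missing key before the agent runs. The missing_keys() helper below is a hypothetical convenience, not part of LangChain; it only reads environment variables and never contacts OpenAI or SerpApi.

```python
import os

# Keys needed by the agent in this guide; add "SERPAPI_API_KEY" here
# if you also use the SerpApi search wrapper.
REQUIRED_KEYS = ["OPENAI_API_KEY"]


def missing_keys(names, environ=None):
    """Return the names of environment variables that are unset or empty."""
    env = os.environ if environ is None else environ
    return [name for name in names if not env.get(name)]


missing = missing_keys(REQUIRED_KEYS)
if missing:
    print("Set these keys before continuing:", ", ".join(missing))
else:
    print("All required API keys are set.")
```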

Step 3: Importing Libraries

Now, import the required classes from LangChain and its related packages to start using the toolkits with OpenAI functions:

from langchain.llms import OpenAI

# wrapper for fetching Google Search results through SerpApi

from langchain.utilities import SerpAPIWrapper

from langchain.utilities import SQLDatabase

from langchain_experimental.sql import SQLDatabaseChain

# classes for building the agent and defining the tools it can use to get answers from the database and the internet

from langchain.agents import AgentType, initialize_agent, Tool

from langchain.chat_models import ChatOpenAI

from langchain.agents.agent_toolkits import SQLDatabaseToolkit

from langchain.schema import SystemMessage

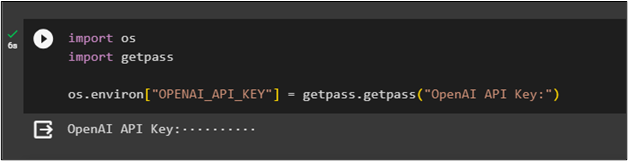

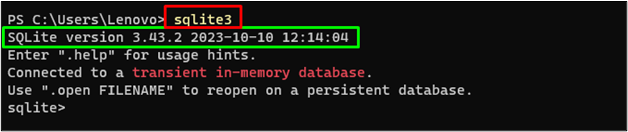

Step 4: Building Database

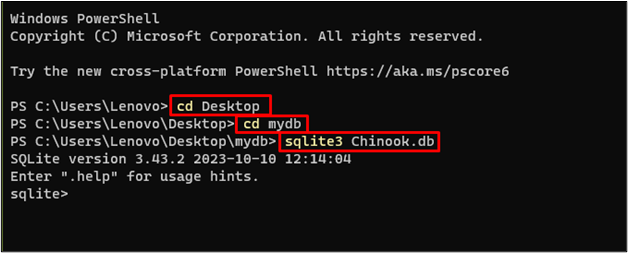

Install SQLite to build the database, and confirm the installation by running the following command in the Windows terminal:

sqlite3 --version

Move into the directory on your local system where the database should be created, and open SQLite with Chinook.db as the database file:

cd mydb

sqlite3 Chinook.db

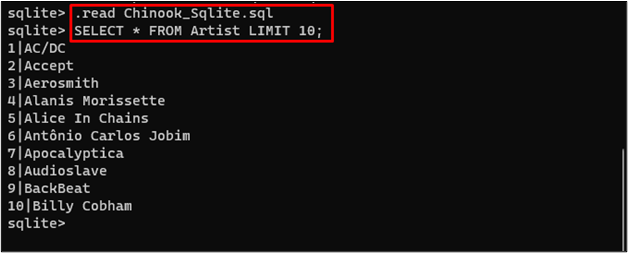

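Inside SQLite, build the database from the Chinook SQL script downloaded from the link, for example with the “.read” command (the file name below assumes you saved the script as Chinook_Sqlite.sql). Then query the Artist table to confirm that the data was loaded:

.read Chinook_Sqlite.sql

SELECT * FROM Artist LIMIT 10;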

The query returns rows from the Artist table, as the screenshot shows, which confirms that the database was built successfully.
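If you would rather build and check the database from Python, the same two steps can be sketched with the standard sqlite3 module. Here executescript() runs a whole SQL script, much like loading a script file in the SQLite shell. SAMPLE_SCRIPT is a tiny hypothetical stand-in for the downloaded Chinook script, which creates many more tables and rows.

```python
import os
import sqlite3
import tempfile

# Tiny hypothetical stand-in for the downloaded Chinook script.
SAMPLE_SCRIPT = """
CREATE TABLE Artist (ArtistId INTEGER PRIMARY KEY, Name TEXT);
INSERT INTO Artist (Name) VALUES ('Artist A'), ('Artist B'), ('Artist C');
"""


def build_database(path, script):
    """Run a whole SQL script against a SQLite database file."""
    conn = sqlite3.connect(path)
    try:
        conn.executescript(script)
        conn.commit()
    finally:
        conn.close()


def preview_artists(path, limit=10):
    """Python version of SELECT * FROM Artist LIMIT <limit>."""
    conn = sqlite3.connect(path)
    try:
        return conn.execute("SELECT * FROM Artist LIMIT ?", (limit,)).fetchall()
    finally:
        conn.close()


path = os.path.join(tempfile.mkdtemp(), "Chinook.db")
build_database(path, SAMPLE_SCRIPT)
print(preview_artists(path))  # [(1, 'Artist A'), (2, 'Artist B'), (3, 'Artist C')]
```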

Step 5: Uploading Database

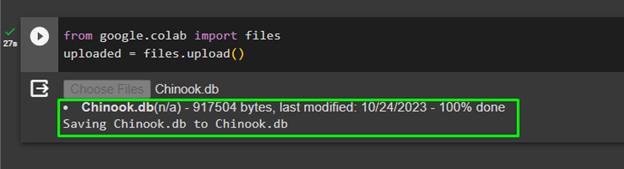

Once the database is built, upload it to the Python notebook. The following code uses the upload helper from Google Colab:

from google.colab import files

uploaded = files.upload()

Open the Files pane on the left side of the notebook to find the uploaded file. Then choose “Copy path” from the file's drop-down menu and use that path in the next step.
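Before connecting LangChain to the uploaded file, it can help to confirm that the path really points to a SQLite database. Every SQLite 3 database file starts with the 16-byte header “SQLite format 3” followed by a zero byte, so a minimal check can read those bytes. The is_sqlite_file() helper below is hypothetical; its self-check creates a throwaway database in a temporary folder, and in Colab you would pass the copied path instead.

```python
import sqlite3
import tempfile
from pathlib import Path

SQLITE_HEADER = b"SQLite format 3\x00"  # first 16 bytes of every SQLite 3 file


def is_sqlite_file(path):
    """Return True if the file exists and starts with the SQLite 3 header."""
    p = Path(path)
    if not p.is_file():
        return False
    with p.open("rb") as fh:
        return fh.read(len(SQLITE_HEADER)) == SQLITE_HEADER


# Self-check with a throwaway database; in Colab, pass the copied path instead,
# for example is_sqlite_file("/content/Chinook.db").
demo = Path(tempfile.mkdtemp()) / "demo.db"
conn = sqlite3.connect(demo)
conn.execute("CREATE TABLE t (x INTEGER)")
conn.commit()
conn.close()
print(is_sqlite_file(demo))  # True
```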

Step 6: Configuring System for Toolkit

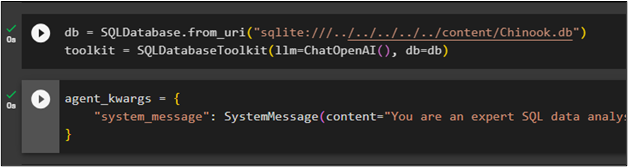

Define the “db” variable by passing a database URI for the uploaded file to SQLDatabase.from_uri(). Replace “/content/Chinook.db” in the following code with the path you copied in the previous step. After that, create the toolkit with SQLDatabaseToolkit(), passing the language model and the database as the llm and db arguments:

db = SQLDatabase.from_uri("sqlite:////content/Chinook.db")

toolkit = SQLDatabaseToolkit(llm=ChatOpenAI(), db=db)
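SQLDatabase.from_uri() takes a SQLAlchemy database URI rather than a plain file path, which is why a SQLite file is written with the “sqlite:///” prefix. For an absolute path, the path's own leading slash makes four slashes in total. The sqlite_uri() helper below is a hypothetical sketch of that rule, assuming a POSIX file system such as the one Colab uses:

```python
import os
from pathlib import Path


def sqlite_uri(path):
    """Build a SQLAlchemy-style SQLite URI ("sqlite:///" + absolute path)."""
    absolute = Path(os.path.abspath(path)).as_posix()
    return "sqlite:///" + absolute


print(sqlite_uri("/content/Chinook.db"))  # sqlite:////content/Chinook.db
```

Its result could then be passed to SQLDatabase.from_uri() in place of a hard-coded string.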

Now, define the agent_kwargs dictionary with a system message that tells the agent to act as an SQL data analyst:

agent_kwargs = {

"system_message": SystemMessage(content="You are an expert SQL data analyst.")

}
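A system message is sent before the user's question so that it frames every later turn of the conversation. The sketch below shows that ordering with plain dictionaries in the OpenAI chat message format. build_messages() and the sample question are hypothetical, and the prompt LangChain actually builds for the agent also includes its intermediate tool calls and results.

```python
def build_messages(system_prompt, user_question):
    """Hypothetical helper: the system message goes first, then the user turn."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_question},
    ]


messages = build_messages(
    "You are an expert SQL data analyst.",
    "How many artists are in the database?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```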

Step 7: Building the Agent

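Define the llm variable with the ChatOpenAI() class and the name of the language model. Then build the agent with the initialize_agent() function, passing the toolkit's tools from toolkit.get_tools(), the llm, the agent type, the verbose flag, and the agent_kwargs dictionary. The AgentType.OPENAI_FUNCTIONS agent type makes the agent choose its tools through OpenAI function calling:

llm = ChatOpenAI(temperature=0, model="gpt-3.5-turbo")  # assumed model name; any OpenAI chat model with function calling should work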

agent = initialize_agent(

toolkit.get_tools(),

llm,

agent=AgentType.OPENAI_FUNCTIONS,

verbose=True,

agent_kwargs=agent_kwargs,

)
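With AgentType.OPENAI_FUNCTIONS, each tool returned by toolkit.get_tools() is described to the model as an OpenAI function definition, and the model answers by naming the function it wants to call. The sketch below imitates that conversion for a tool that takes a single string input. SimpleTool and tool_to_function_spec() are hypothetical; only the name sql_db_query is borrowed from the SQL toolkit's query tool, and LangChain's own converter derives the parameters from each tool's argument schema.

```python
from dataclasses import dataclass


@dataclass
class SimpleTool:
    """Hypothetical minimal tool description (not LangChain's Tool class)."""
    name: str
    description: str
    input_name: str = "query"


def tool_to_function_spec(tool):
    """Describe a single-string-input tool as an OpenAI function definition."""
    return {
        "name": tool.name,
        "description": tool.description,
        "parameters": {
            "type": "object",
            "properties": {tool.input_name: {"type": "string"}},
            "required": [tool.input_name],
        },
    }


query_tool = SimpleTool(
    name="sql_db_query",
    description="Run a SQL query against the database and return the result.",
)
print(tool_to_function_spec(query_tool))
```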

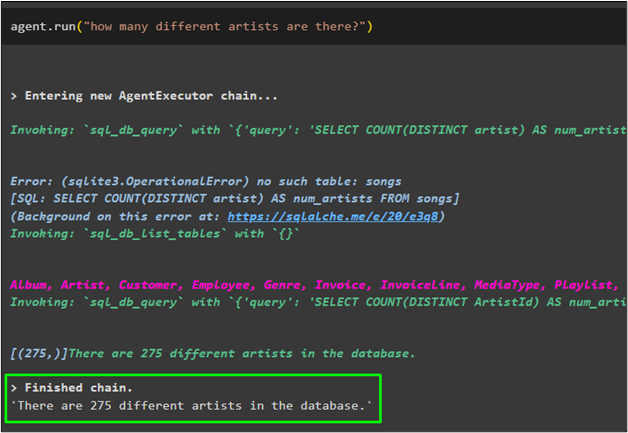

Step 8: Testing the Agent

After configuring all the components, test the agent by passing a question from the user to its run() method, as in agent.run().

The agent used the toolkit's tools to fetch the information from the database and then displayed the final answer.
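The database work behind such an answer often follows a list-tables, inspect-schema, run-query pattern. The functions below approximate those three steps with the standard sqlite3 module on a small in-memory stand-in table. They are illustrative simplifications, not the toolkit's tools, which also add sample rows to table descriptions and can ask the LLM to check a query before running it.

```python
import sqlite3

# In-memory stand-in database; the real agent works on Chinook.db.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Artist (ArtistId INTEGER PRIMARY KEY, Name TEXT);
INSERT INTO Artist (Name) VALUES ('Artist A'), ('Artist B');
""")


def list_tables(conn):
    """Roughly what a 'list tables' tool returns: comma-separated names."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    return ", ".join(name for (name,) in rows)


def table_schema(conn, table):
    """Roughly what a 'schema' tool returns: the CREATE TABLE statement."""
    row = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?", (table,)
    ).fetchone()
    return row[0] if row else ""


def run_query(conn, sql):
    """Roughly what a 'query' tool returns: the result rows."""
    return conn.execute(sql).fetchall()


print(list_tables(conn))  # Artist
print(table_schema(conn, "Artist"))
print(run_query(conn, "SELECT COUNT(*) FROM Artist"))  # [(2,)]
```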

That’s all about using the toolkit with OpenAI functions in LangChain.

Conclusion

To use toolkits with OpenAI functions in LangChain, install the langchain-experimental and openai modules, set the API keys, and import the required libraries. After that, build the database and upload it to the Python notebook so the toolkit can connect to it. Configure the SQL database toolkit with the OpenAI chat model, and initialize the agent with the toolkit's tools and the OPENAI_FUNCTIONS agent type. Finally, test the agent by asking it a question that it answers with the toolkit's database tools. This guide has explained the process of using toolkits with OpenAI functions in LangChain.