LangChain is a framework for building applications on top of large language models (LLMs) and chat models, such as those offered by OpenAI. Its structured output parser is used when a single reply should carry multiple fields, for example the actual answer plus some related information such as its source. The parser works with both kinds of model: it tells the model how to format its reply and then extracts the fields from that reply.

This post demonstrates the process of using the structured output parser in LangChain.

How to Use Structured Output Parser in LangChain?

To use the structured output parser in LangChain, simply go through these steps:

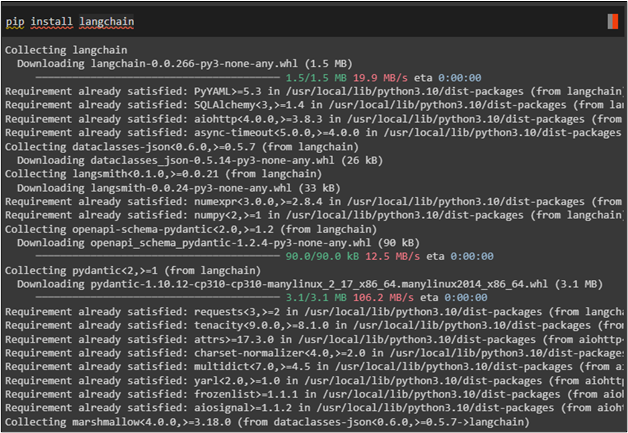

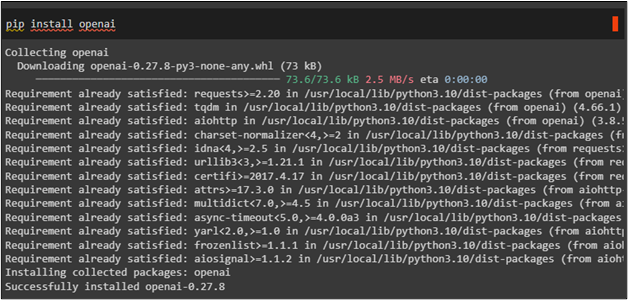

Step 1: Install Prerequisites

Start by installing the LangChain framework if it is not already installed in your Python environment:

pip install langchain

Then install the OpenAI package, which LangChain uses to call OpenAI models:

pip install openai

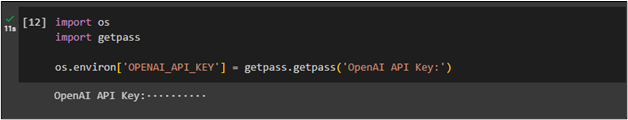

After that, make your OpenAI API key available to LangChain. Import the “os” library to set the environment variable and the “getpass” library to enter the key without echoing it on screen:

import os
import getpass

os.environ['OPENAI_API_KEY'] = getpass.getpass('OpenAI API Key:')
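When a notebook is re-run, prompting for the key every time becomes tedious. The following is a minimal sketch, not part of LangChain, of a helper that asks for the key only when it is missing. The name `ensure_api_key` and its `environ` and `ask` parameters are hypothetical and exist so the logic can be tried without a real prompt.

```python
import getpass
import os


def ensure_api_key(name="OPENAI_API_KEY", environ=os.environ, ask=getpass.getpass):
    """Return the key stored under `name`, prompting only if it is missing."""
    key = environ.get(name)
    if not key:
        # getpass hides the typed characters, so the key is not echoed.
        key = ask(f"{name}: ")
        environ[name] = key
    return key


# Demonstration with a plain dict and a canned answer instead of a real prompt.
demo_env = {}
ensure_api_key(environ=demo_env, ask=lambda _prompt: "sk-placeholder")
print(demo_env)
```

In a real session, calling `ensure_api_key()` with no arguments would read from and write to the process environment.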

Step 2: Build Schema for the Output/Response

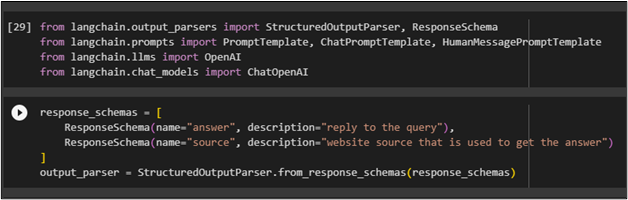

After connecting to OpenAI, import the libraries needed to build the output schema, the prompts, and the models:

from langchain.output_parsers import StructuredOutputParser, ResponseSchema
from langchain.prompts import PromptTemplate, ChatPromptTemplate, HumanMessagePromptTemplate
from langchain.llms import OpenAI
from langchain.chat_models import ChatOpenAI

Specify the schema for the response so that the model knows which fields to return. Here the reply must contain an “answer” field and a “source” field:

response_schemas = [
    ResponseSchema(name="answer", description="reply to the query"),
    ResponseSchema(name="source", description="website source that is used to get the answer")
]

output_parser = StructuredOutputParser.from_response_schemas(response_schemas)

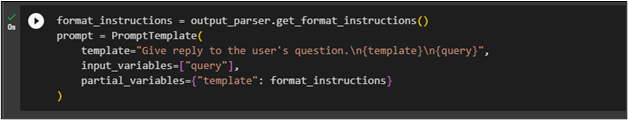
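To make the role of the schema concrete, here is a minimal plain-Python sketch of how a list of named fields could be turned into instructions for the model. It does not use LangChain: `SchemaField` and `build_format_instructions` are hypothetical names, and the wording of the instructions is an assumption rather than the text LangChain generates.

```python
from dataclasses import dataclass


@dataclass
class SchemaField:
    """Hypothetical stand-in for one schema entry: a key plus its meaning."""
    name: str
    description: str


def build_format_instructions(fields):
    """Describe the JSON object the model is asked to return."""
    lines = [f'  "{f.name}": string  // {f.description}' for f in fields]
    return (
        "Reply with a single JSON object using exactly these keys:\n"
        "{\n" + "\n".join(lines) + "\n}"
    )


fields = [
    SchemaField("answer", "reply to the query"),
    SchemaField("source", "website source that is used to get the answer"),
]
print(build_format_instructions(fields))
```

The point of the sketch is that each field's description ends up inside the prompt, so clearer descriptions tend to produce better-shaped replies.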

Step 3: Format Template

After configuring the schema for the output, get the format instructions from the parser and build the prompt template. The template combines a short instruction, the format instructions, and the user's question, so the model knows both what is being asked and how to shape its reply:

format_instructions = output_parser.get_format_instructions()

prompt = PromptTemplate(
    template="Give reply to the user's question.\n{template}\n{query}",
    input_variables=["query"],
    partial_variables={"template": format_instructions}
)

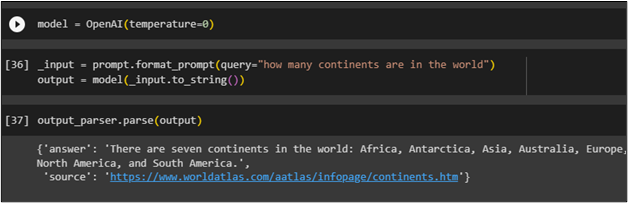

Method 1: Using the Language Model

After configuring the prompt template and the output schema, build the language model using the OpenAI() class:

model = OpenAI()

Pass the question to the format_prompt() function through the “query” variable, send the formatted prompt to the model, and store the reply in the “output” variable (the question text here is a placeholder):

_input = prompt.format_prompt(query="<your question>")
output = model(_input.to_string())

Call the parse() function with the output variable as its argument to extract the fields from the reply:

output_parser.parse(output)

The output parser returns the answer to the query together with the link to the web page used as its source:

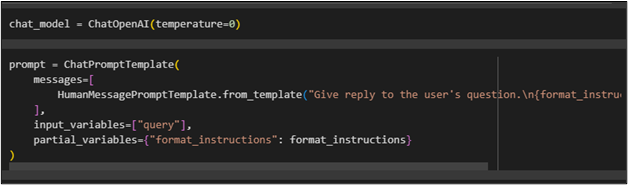
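What the parsing step does can be approximated in a few lines of plain Python. The sketch below assumes the model replies with a JSON object, optionally wrapped in a fenced json block. `parse_structured_reply` is a hypothetical function, not LangChain's implementation, and the reply string is made up to exercise it.

```python
import json
import re

FENCE = "`" * 3  # three backticks, built this way to keep the listing readable


def parse_structured_reply(text, expected_keys):
    """Extract the JSON object from a model reply and check its keys."""
    # Prefer a fenced json block if present; otherwise treat the whole reply as JSON.
    pattern = FENCE + r"(?:json)?\s*(.*?)" + FENCE
    match = re.search(pattern, text, re.DOTALL)
    payload = match.group(1) if match else text
    data = json.loads(payload)
    missing = [key for key in expected_keys if key not in data]
    if missing:
        raise ValueError(f"reply is missing keys: {missing}")
    return data


# A made-up reply with placeholder values, used only to exercise the function.
reply = f'Here you go:\n{FENCE}json\n{{"answer": "<answer text>", "source": "<source url>"}}\n{FENCE}'
parsed = parse_structured_reply(reply, ["answer", "source"])
print(parsed["answer"])
```

Checking for missing keys is worthwhile because a model can ignore the format instructions, and failing early is easier to debug than a missing field later on.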

Method 2: Using the Chat Model

To use the output parser with a chat model, first create the chat_model variable:

chat_model = ChatOpenAI()

Then configure the prompt template for the chat model. It wraps the instruction, the format instructions, and the question in a human message:

prompt = ChatPromptTemplate(
    messages=[
        HumanMessagePromptTemplate.from_template("Give reply to the user's question.\n{format_instructions}\n{query}")
    ],
    input_variables=["query"],
    partial_variables={"format_instructions": format_instructions}
)

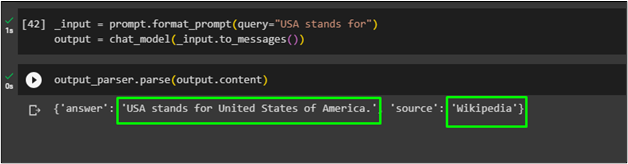

After that, provide the question in the “query” variable and pass the formatted messages to chat_model to get the output from the model (the question text here is a placeholder):

_input = prompt.format_prompt(query="<your question>")
output = chat_model(_input.to_messages())

The chat model returns a message object rather than a plain string, so pass its content to the output_parser to get the structured response:

output_parser.parse(output.content)

The parser returns the answer to the query and the name of the website used as its source.
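The practical difference between the two methods is the type of the model's return value: a language model gives back a string, while a chat model gives back a message whose text sits in a `content` attribute. A minimal sketch of handling both in one place follows. `ChatMessage` and `reply_text` are hypothetical stand-ins, not LangChain classes.

```python
from dataclasses import dataclass


@dataclass
class ChatMessage:
    """Hypothetical stand-in for the message object a chat model returns."""
    content: str


def reply_text(output):
    """Return the raw text whether `output` is a string or a message-like object."""
    if isinstance(output, str):
        return output
    return output.content


print(reply_text("plain completion"))
print(reply_text(ChatMessage(content="chat completion")))
```

With a helper like this, the same parsing call can be reused for both methods.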

That is all about using a structured output parser in LangChain.

Conclusion

To use the structured output parser in LangChain, simply install the LangChain and OpenAI modules to get started with the process. After that, connect to the OpenAI environment using its API key and then configure the prompt and response templates for the model. The output parser can be used with either a language model or a chat model. This guide explains the use of the output parser with both methods.