In this article, we will discuss how to use Softmax to obtain probabilities in PyTorch.

How to Use Softmax in PyTorch?

The “Softmax” function normalizes a model's raw outputs (the “logits”) into probabilities: every value lies between 0 and 1, and the values sum to 1. In PyTorch it is called as “torch.nn.functional.softmax()” because it lives in the functional module of the neural network package “torch.nn”.
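To make the normalization concrete, here is a minimal plain-Python sketch of the softmax formula (exponentiate each value, then divide by the total). The function name and the input values are hypothetical and chosen only for illustration; PyTorch's own implementation is not shown here.

```python
import math

def softmax(logits):
    """Plain-Python softmax: exponentiate each value, then divide by the total."""
    # Subtracting the maximum leaves the result unchanged but avoids overflow.
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for three classes.
probs = softmax([2.0, 1.0, 0.1])
print(probs)       # three values between 0 and 1
print(sum(probs))  # the values add up to 1 (up to floating-point rounding)
```

The largest logit receives the largest probability, and the ordering of the inputs is preserved in the outputs.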

Follow the steps given below to learn how to use Softmax in PyTorch in the Colab IDE:

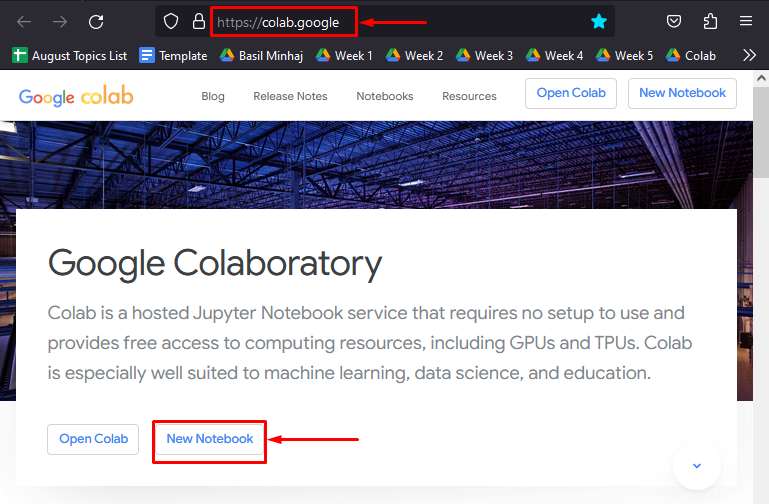

Step 1: Open Google Colaboratory

The first step in this tutorial is to set up a “New Notebook” in Google Colab as shown:

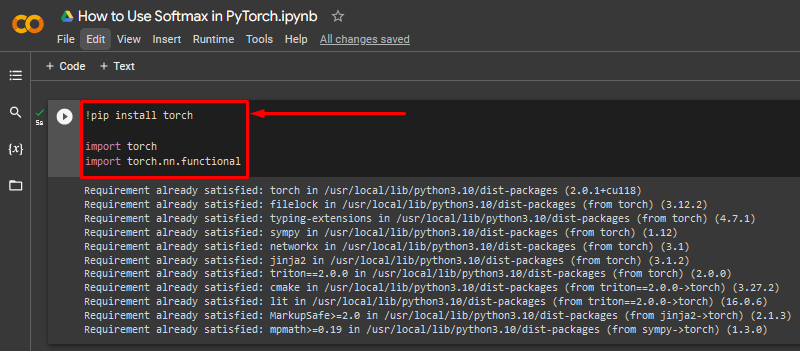

Step 2: Install and Import the Required Libraries

To start working on a PyTorch project, install essential packages such as “torch” with the “!pip” installer. Google Colab typically ships with “torch” preinstalled, so this step is often unnecessary there. Then use the “import” statement to load the modules:

import torch

import torch.nn.functional

The above code works as follows:

- The “pip” package installer for Python installs libraries for a PyTorch project when they are not already available.

- Next, the “import” statement adds the libraries to the project.

- The “torch” library contains the core functionality of the PyTorch framework.

- The “torch.nn.functional” module contains the mathematical functions used with neural networks in PyTorch, including softmax.
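As a quick check that the imports worked, the following sketch prints the installed version and shows the “F” alias that many PyTorch projects use for “torch.nn.functional”. The alias is a common convention rather than something this tutorial requires.

```python
import torch
import torch.nn.functional
import torch.nn.functional as F  # common shorthand alias (optional)

# Confirm that PyTorch is importable and see which version is installed.
print(torch.__version__)

# The alias points at the same module, so both spellings call the same function.
print(F.softmax is torch.nn.functional.softmax)
```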

Step 3: Input a Sample Tensor

In this tutorial, a sample tensor is defined and the softmax function is applied to it. Essentially, the softmax function converts the “logits” produced by a neural network into probabilities. The code for the sample tensor is given below:

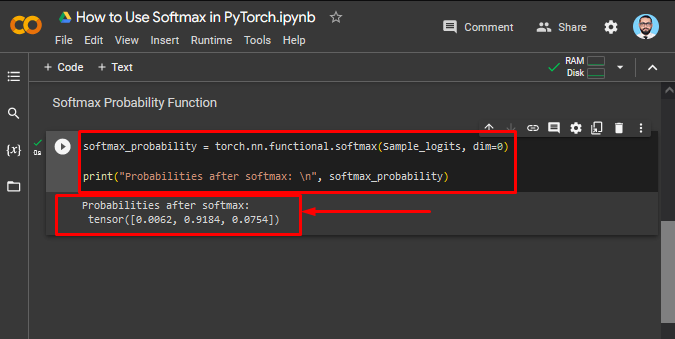
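For readers who cannot copy from the screenshot, a sample tensor could be defined along these lines. The variable name “Sample_logits” follows the name used later in this tutorial, but the values and the 2x3 shape are assumptions made for illustration.

```python
import torch

# Hypothetical logits: 2 samples (rows) with 3 class scores each (columns).
Sample_logits = torch.tensor([[2.0, 1.0, 0.1],
                              [0.5, 2.5, 1.0]])

print(Sample_logits.shape)  # torch.Size([2, 3])
print(Sample_logits.dtype)  # torch.float32
```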

Step 4: Apply the Softmax Function

The last step of this tutorial is to apply the Softmax function to the previously defined sample tensor as shown:

print("Probabilities after softmax: \n", softmax_probability)

The above code works as follows:

- Define a custom variable named “softmax_probability” to hold the softmax probabilities.

- Assign the result of the “torch.nn.functional.softmax()” function to this variable, passing the sample tensor “Sample_logits” as its argument along with the “dim” argument, which selects the dimension along which the probabilities sum to 1.

- Lastly, use the “print()” function to display the calculated probabilities in the output.
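Put together, the steps above can be sketched as follows. The tensor values and the choice of “dim=1” (normalizing across the classes in each row) are assumptions for illustration; pick the dimension that holds the class scores in your own data.

```python
import torch
import torch.nn.functional

# Hypothetical logits: 2 samples (rows) with 3 class scores each (columns).
Sample_logits = torch.tensor([[2.0, 1.0, 0.1],
                              [0.5, 2.5, 1.0]])

# dim=1 normalizes across the columns, so each row becomes a probability distribution.
softmax_probability = torch.nn.functional.softmax(Sample_logits, dim=1)
print("Probabilities after softmax: \n", softmax_probability)

# Sanity check: every row should sum to 1 (up to floating-point rounding).
row_sums = softmax_probability.sum(dim=1)
print(row_sums)
```

Passing “dim” explicitly is worthwhile: calling the function without it relies on an implicit choice that PyTorch warns about.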

The output shows the softmax probabilities calculated for the sample tensor.

Note: You can access our Google Colab notebook using the softmax function at this link.

Pro-Tip

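An important point to note with the softmax function is that it should generally not be applied to the model output before a loss such as “torch.nn.CrossEntropyLoss” inside the training loop. That loss expects raw logits and applies log-softmax internally, so adding softmax beforehand normalizes the values twice, which can directly affect the quality of the results. The computational cost of softmax is not the concern here. Therefore, softmax is usually applied at inference time, when the probabilities of an already trained deep learning model need to be read.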
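One concrete reason to keep an explicit softmax out of the training loss: PyTorch's cross-entropy loss already applies log-softmax to the logits it receives. The sketch below, using hypothetical logits and labels, checks that equivalence and shows that applying softmax first yields a different loss value.

```python
import torch
import torch.nn.functional as F

# Hypothetical batch: 2 samples, 3 classes, with the correct class index per sample.
logits = torch.tensor([[2.0, 1.0, 0.1],
                       [0.5, 2.5, 1.0]])
targets = torch.tensor([0, 1])

# cross_entropy takes raw logits and applies log-softmax internally.
loss_builtin = F.cross_entropy(logits, targets)
loss_manual = F.nll_loss(F.log_softmax(logits, dim=1), targets)
print(torch.allclose(loss_builtin, loss_manual))

# Feeding probabilities instead of logits normalizes twice and changes the loss.
loss_double = F.cross_entropy(F.softmax(logits, dim=1), targets)
print(loss_builtin.item(), loss_double.item())
```

At inference time, calling softmax on the logits of the trained model is the usual way to read class probabilities.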

Success! We have just shown you how to use the softmax function in PyTorch to calculate probabilities.

Conclusion

Use softmax in PyTorch by first importing the “torch.nn.functional” module and then calling the “torch.nn.functional.softmax()” function with the tensor and the “dim” argument. The softmax function converts the logits generated by a neural network into probabilities that sum to 1. In this article, we demonstrated how to call the softmax function on a custom tensor to generate its probabilities.