This guide will illustrate the process of using the RouterChain from LangChain.

How to Use the RouterChain in LangChain?

To use the RouterChain in LangChain, go through the following steps:

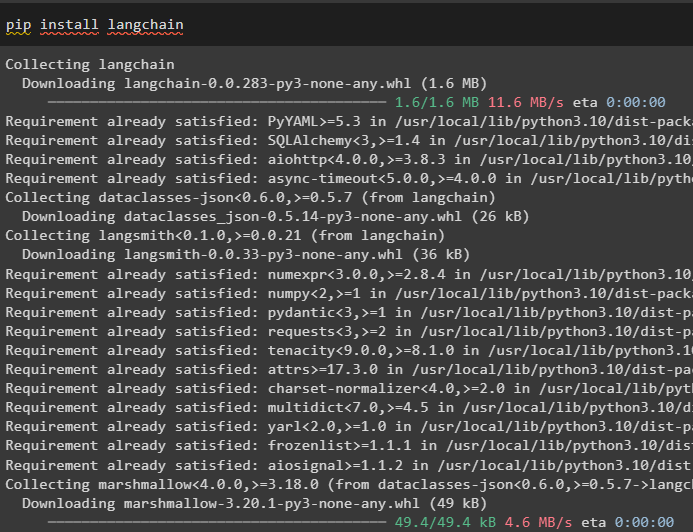

Step 1: Install Package

First, install LangChain with the pip command (`pip install langchain`) to get the dependencies needed for the RouterChain:

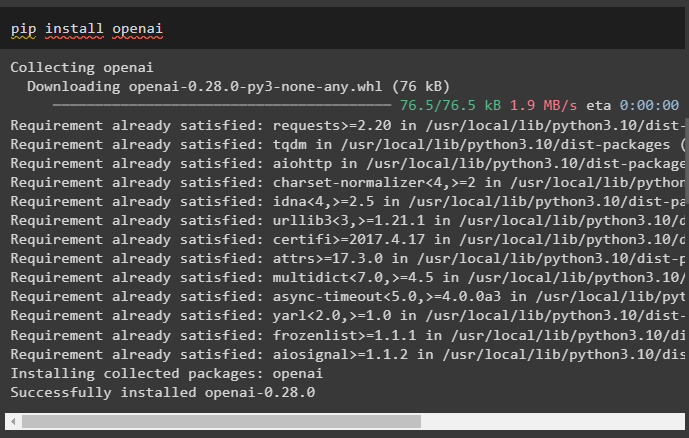

After that, install the OpenAI package (`pip install openai`) so that LangChain can use OpenAI's LLMs and chat models:

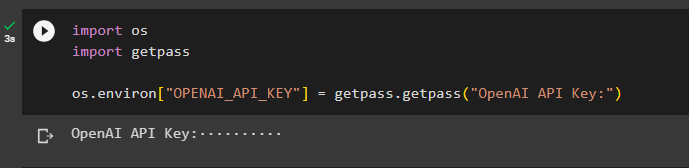

Now, set the OpenAI API key, which you can get from your OpenAI account, as an environment variable:

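```python
import getpass
import os

# Prompt for the key without echoing it and store it where LangChain's OpenAI wrapper looks for it
os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
```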
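LangChain's OpenAI wrapper reads the key from the OPENAI_API_KEY environment variable when none is passed to it explicitly. A quick check right after setting it catches an empty entry (for example, pressing Enter at the prompt) before any chain is built. The helper below, require_openai_key, is a hypothetical standard-library sketch, not part of LangChain:

```python
import os


def require_openai_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the key from the environment or raise a clear error (hypothetical helper)."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"{var_name} is not set; set it before creating the LLM.")
    return key
```

Calling require_openai_key() right after the getpass prompt confirms that a non-empty key was stored.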

Step 2: Import Libraries

Once the setup is complete, import the required classes from LangChain:

```python
# LLM wrapper for OpenAI models
from langchain.llms import OpenAI

# Building blocks for the prompt chains: LLMChain for each subject,
# PromptTemplate for the prompts, ConversationChain as the fallback chain
from langchain.chains.llm import LLMChain
from langchain.prompts import PromptTemplate
from langchain.chains import ConversationChain
```

Step 3: Building Prompt Templates

After importing the libraries, define a prompt template for each subject the router can send a query to:

```python
# Prompt for physics questions; {input} is replaced with the user's query
physics_template = """You are a brilliant model to answer prompts about physics efficiently. \
Whenever you do not have the answer to a question, simply write "I don't know".

The following is the query:
{input}"""

# Prompt for math questions
math_template = """You are a brilliant machine for solving math questions. \
You are a brilliant model to answer prompts about mathematics efficiently. \
Whenever you do not have the answer to a question, simply write "I don't know".

The following is the query:
{input}"""
```
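PromptTemplate uses Python's `{}`-style placeholders by default, so plain `str.format` gives a close approximation of the text the LLM receives. The standard-library sketch below uses a lightly cleaned copy of the math template; it also shows that each trailing backslash joins two lines into one, which is why every sentence needs a space before the backslash:

```python
# Copy of math_template from this step (repeated so the snippet runs on its own)
math_template = """You are a brilliant machine for solving math questions. \
You are a brilliant model to answer prompts about mathematics efficiently. \
Whenever you do not have the answer to a question, simply write "I don't know".

The following is the query:
{input}"""

# Fill the {input} placeholder roughly the way a PromptTemplate would
rendered = math_template.format(input="What is 12 squared?")
print(rendered)
```

The printed prompt is a single instruction paragraph, a blank line, and then the query on the last line.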

Next, define the prompt_infos list. Each entry gives a destination its name, a description that the router reads when choosing where to send a query, and its prompt template:

```python
# One entry per destination chain
prompt_infos = [
    {
        "name": "math",
        "description": "Answering math questions efficiently",
        "prompt_template": math_template,
    },
    {
        "name": "physics",
        "description": "Answering physics questions efficiently",
        "prompt_template": physics_template,
    },
]
```

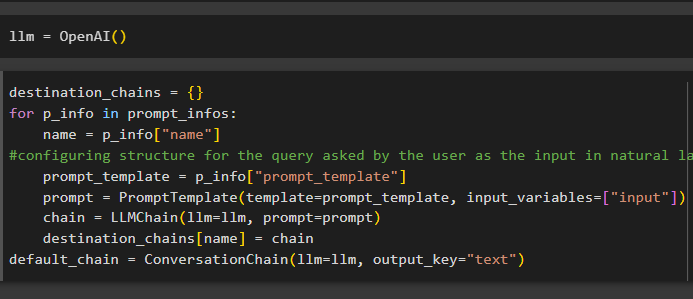
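Every destination name later becomes a key in destination_chains, and Step 4 builds each PromptTemplate with `input_variables=["input"]`, so each template should contain only the `{input}` placeholder. The hypothetical check_prompt_infos helper below, a standard-library sketch rather than part of LangChain, verifies both before any chain is built:

```python
from string import Formatter

REQUIRED_KEYS = {"name", "description", "prompt_template"}


def check_prompt_infos(prompt_infos):
    """Validate prompt_infos entries and return the set of destination names (hypothetical helper)."""
    names = set()
    for info in prompt_infos:
        missing = REQUIRED_KEYS - set(info)
        if missing:
            raise ValueError(f"entry {info!r} is missing {sorted(missing)}")
        if info["name"] in names:
            raise ValueError(f"duplicate destination name {info['name']!r}")
        names.add(info["name"])
        # Formatter.parse yields (literal, field_name, spec, conversion); field_name is None for plain text
        fields = {
            field
            for _, field, _, _ in Formatter().parse(info["prompt_template"])
            if field is not None
        }
        if fields != {"input"}:
            raise ValueError(f"{info['name']!r} template must use only {{input}}, found {sorted(fields)}")
    return names
```

Running check_prompt_infos(prompt_infos) after defining the list returns {"math", "physics"} when both entries are well formed.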

Step 4: Building LLM & Chains

With the prompt templates in place, create the LLM with OpenAI(). Then build one LLMChain for each entry in prompt_infos, using its prompt template with "input" (the user's query) as the only input variable, plus a ConversationChain as the default for queries that match no destination:

```python
llm = OpenAI()

# Map each destination name to its LLMChain
destination_chains = {}
for p_info in prompt_infos:
    name = p_info["name"]
    # The template expects the user's natural-language query as "input"
    prompt_template = p_info["prompt_template"]
    prompt = PromptTemplate(template=prompt_template, input_variables=["input"])
    chain = LLMChain(llm=llm, prompt=prompt)
    destination_chains[name] = chain

# Fallback chain; output_key="text" matches the output key used by LLMChain
default_chain = ConversationChain(llm=llm, output_key="text")
```
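Conceptually, each LLMChain built in the loop fills its prompt template with the query and passes the result to the LLM. The framework-free sketch below models that with a hypothetical SketchChain class and a fake LLM function, so it runs without an API key; it is a simplification, not LangChain's implementation:

```python
from typing import Callable, Dict


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: echoes the last line of the prompt (the query)."""
    return f"[LLM saw] {prompt.splitlines()[-1]}"


class SketchChain:
    """Simplified model of an LLMChain: fill the template, then call the LLM."""

    def __init__(self, llm: Callable[[str], str], template: str):
        self.llm = llm
        self.template = template

    def run(self, query: str) -> str:
        return self.llm(self.template.format(input=query))


# Shortened, hypothetical templates in place of math_template and physics_template
prompt_infos = [
    {"name": "math", "prompt_template": "Math expert.\n{input}"},
    {"name": "physics", "prompt_template": "Physics expert.\n{input}"},
]

# Same shape as the loop above: one chain per destination name
destination_chains: Dict[str, SketchChain] = {}
for p_info in prompt_infos:
    destination_chains[p_info["name"]] = SketchChain(fake_llm, p_info["prompt_template"])

print(destination_chains["math"].run("What is 2 + 2?"))
# [LLM saw] What is 2 + 2?
```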

Step 5: Using LLMRouterChain

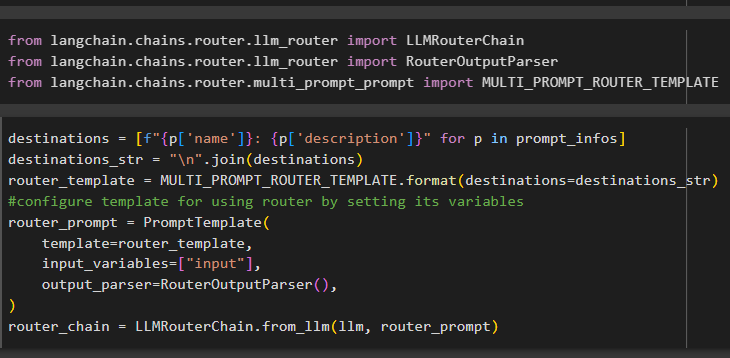

Next, import the router components from LangChain: the LLMRouterChain, its RouterOutputParser, the built-in multi-prompt router template, and the MultiPromptChain that ties everything together:

```python
from langchain.chains.router import MultiPromptChain
from langchain.chains.router.llm_router import LLMRouterChain, RouterOutputParser
from langchain.chains.router.multi_prompt_prompt import MULTI_PROMPT_ROUTER_TEMPLATE
```

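Build the router prompt: list each destination as "name: description", insert that list into MULTI_PROMPT_ROUTER_TEMPLATE, and attach a RouterOutputParser so that the router's answer can be read as a destination. Then create the LLMRouterChain from the LLM and this prompt: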

```python
# One "name: description" line per destination for the router to choose from
destinations = [f"{p['name']}: {p['description']}" for p in prompt_infos]
destinations_str = "\n".join(destinations)
router_template = MULTI_PROMPT_ROUTER_TEMPLATE.format(destinations=destinations_str)

# Router prompt plus the parser that reads the router's answer
router_prompt = PromptTemplate(
    template=router_template,
    input_variables=["input"],
    output_parser=RouterOutputParser(),
)

router_chain = LLMRouterChain.from_llm(llm, router_prompt)
```
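MULTI_PROMPT_ROUTER_TEMPLATE asks the router LLM to reply with a JSON snippet containing a "destination" (one of the names above, or "DEFAULT") and "next_inputs" (the possibly rewritten query), and RouterOutputParser turns that reply into a destination and the inputs for the next chain. The function below is a simplified standard-library re-implementation of that idea for illustration, not LangChain's source; the name parse_router_output is hypothetical:

```python
import json
import re
from typing import Any, Dict

FENCE = "`" * 3  # markdown code fence the router may wrap its JSON in


def parse_router_output(text: str) -> Dict[str, Any]:
    """Read the router's reply into a destination name (None for DEFAULT) and next inputs."""
    # Use the contents of a fenced block if present, otherwise the whole reply
    match = re.search(FENCE + r"(?:json)?\s*(.*?)" + FENCE, text, re.DOTALL)
    payload = match.group(1) if match else text
    parsed = json.loads(payload)
    for key in ("destination", "next_inputs"):
        if key not in parsed:
            raise ValueError(f"router output is missing {key!r}")
    destination = parsed["destination"].strip()
    return {
        # "DEFAULT" means no destination fits, so the default chain should run
        "destination": None if destination.lower() == "default" else destination,
        # Destination chains expect their query under the "input" key
        "next_inputs": {"input": parsed["next_inputs"]},
    }
```

For example, a reply that wraps `{"destination": "math", "next_inputs": "Is 41 prime?"}` in a json fence yields `{"destination": "math", "next_inputs": {"input": "Is 41 prime?"}}`.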

Finally, combine the router chain, the destination chains, and the default chain with MultiPromptChain:

```python
chain = MultiPromptChain(
    router_chain=router_chain,
    destination_chains=destination_chains,
    default_chain=default_chain,
    verbose=True,  # log the chain's steps, including the chosen destination
)
```

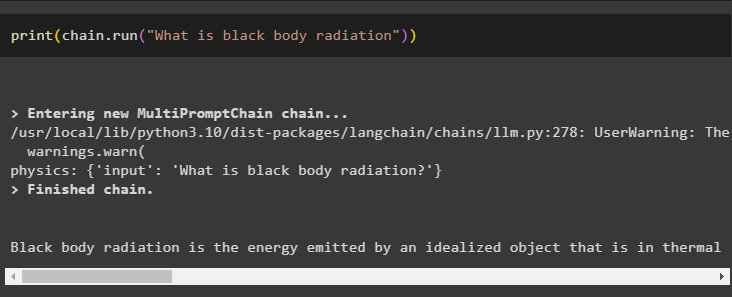
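At run time, MultiPromptChain asks the router for a destination and passes the next inputs to the matching destination chain, or to the default chain when the router answers DEFAULT. The sketch below mirrors that control flow with plain functions; keyword_router stands in for the LLM router and, like the stub chains, is hypothetical:

```python
from typing import Callable, Dict, Optional, Tuple

Chain = Callable[[str], str]


def keyword_router(query: str) -> Tuple[Optional[str], str]:
    """Hypothetical router: choose a destination by keyword instead of asking an LLM."""
    text = query.lower()
    if any(word in text for word in ("prime", "equation", "divisible")):
        return "math", query
    if any(word in text for word in ("force", "energy", "radiation")):
        return "physics", query
    return None, query  # None plays the role of "DEFAULT"


def route_and_run(query: str, destination_chains: Dict[str, Chain], default_chain: Chain) -> str:
    destination, next_input = keyword_router(query)
    if destination is None:
        return default_chain(next_input)
    if destination not in destination_chains:
        raise ValueError(f"unknown destination {destination!r}")
    return destination_chains[destination](next_input)


# Stub chains standing in for the LLMChains and the ConversationChain
destination_chains = {
    "math": lambda q: f"math chain: {q}",
    "physics": lambda q: f"physics chain: {q}",
}


def default_chain(q: str) -> str:
    return f"default chain: {q}"


print(route_and_run("What is black body radiation?", destination_chains, default_chain))
# physics chain: What is black body radiation?
```

The ValueError branch guards against a router that names a destination missing from destination_chains, which is why the names in prompt_infos must match the chain keys.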

Step 6: Running Chains

Once everything is configured, run the chain with a query and print its output:

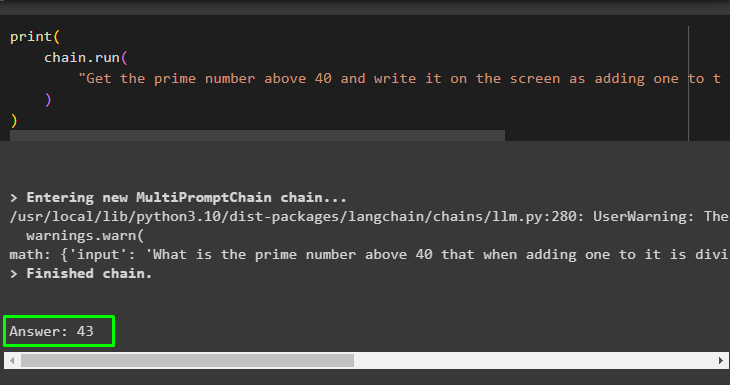

Next, pass a math question to the same chain; the LLMRouterChain decides which destination chain answers it:

```python
print(
    chain.run(
        "Find the first prime number greater than 40 such that adding one to it gives a number divisible by 3"
    )
)
```
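LLM answers to arithmetic questions are not always correct, so it helps to know the expected result in advance. Reading the query as asking for the first prime greater than 40 whose successor is divisible by 3, a short brute-force search gives the answer to compare against the chain's output:

```python
def is_prime(n: int) -> bool:
    """Trial division: check divisors up to the square root of n."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def first_prime_above(limit: int, divisor: int = 3) -> int:
    """Smallest prime p > limit such that p + 1 is divisible by divisor."""
    n = limit + 1
    while not (is_prime(n) and (n + 1) % divisor == 0):
        n += 1
    return n


print(first_prime_above(40))  # 41, since 41 is prime and 41 + 1 = 42 = 3 * 14
```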

That’s all about using the RouterChain in LangChain.

Conclusion

To use the RouterChain in LangChain, install the required packages and set the API key from your OpenAI account as an environment variable. After that, import the required classes, configure a prompt template for each destination, and build an LLMChain for each one. Then use LLMRouterChain to build a router that selects the next chain for each query, and combine the router, the destination chains, and a default chain with MultiPromptChain. This guide has elaborated on the process of using the RouterChain in LangChain.