This guide will demonstrate the process of using the Retry parser in LangChain.

How to Use Retry Parser in LangChain?

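A parser raises an error when the model's output does not follow the format requested in the prompt. Some of these errors can be fixed by looking at the output alone, which is what the OutputFixingParser does. Others cannot, for example when the output is well-formed but incomplete, because the missing information exists only in the original prompt. The Retry parser handles these cases by sending the prompt and the bad output back to the LLM for a new answer. The following guide explains the process in detail: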
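Conceptually, a retry parser wraps an ordinary parser: if parsing fails, it hands the original prompt, the bad completion, and the error message back to the LLM and tries to parse the new answer. The stdlib-only sketch below illustrates that loop. It is not LangChain's implementation; `parse_action`, `retry_parse`, and the scripted `fake_reask` are hypothetical names used only for illustration, and `fake_reask` stands in for a real LLM call.

```python
import json
from typing import Callable, Dict, List, Tuple


def parse_action(text: str) -> Dict[str, str]:
    """Hypothetical strict parser: the reply must contain 'action' and 'action_input'."""
    data = json.loads(text)
    missing = [key for key in ("action", "action_input") if key not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return data


def retry_parse(
    prompt: str,
    completion: str,
    parse: Callable[[str], Dict[str, str]],
    reask: Callable[[str, str, str], str],
    max_retries: int = 1,
) -> Dict[str, str]:
    """Try to parse; on failure, hand prompt, bad completion and error to `reask`."""
    for attempt in range(max_retries + 1):
        try:
            return parse(completion)
        except ValueError as err:  # json.JSONDecodeError is a ValueError too
            if attempt == max_retries:
                raise
            # A real retry parser would send these three pieces to the LLM here.
            completion = reask(prompt, completion, str(err))
    raise AssertionError("unreachable when max_retries >= 0")


calls: List[Tuple[str, str, str]] = []


def fake_reask(prompt: str, completion: str, error: str) -> str:
    """Scripted stand-in for the LLM: records its inputs and returns a complete reply."""
    calls.append((prompt, completion, error))
    return '{"action": "search", "action_input": "example query"}'


result = retry_parse(
    prompt="Reply with JSON containing action and action_input.",
    completion='{"action": "search"}',
    parse=parse_action,
    reask=fake_reask,
)
print(result)  # {'action': 'search', 'action_input': 'example query'}
```

The first parse fails because `action_input` is missing, so the loop makes exactly one re-ask and the second, complete reply parses successfully.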

Setup Prerequisites

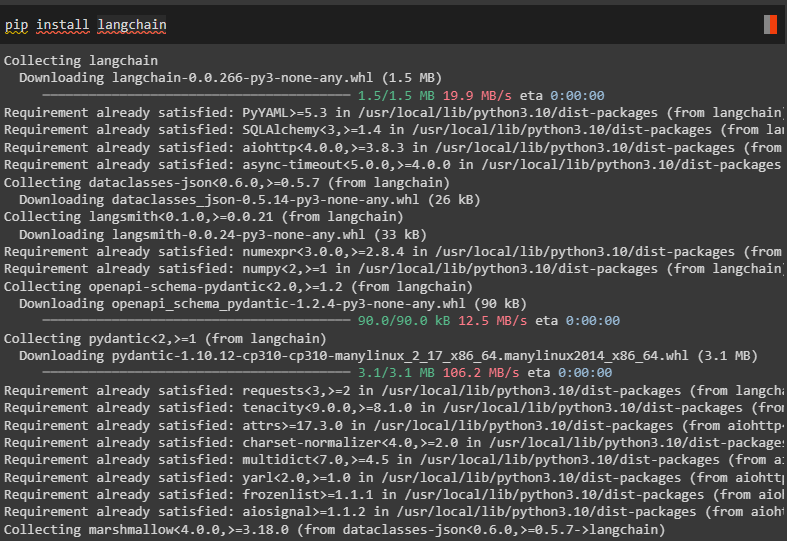

Install the LangChain module to get started with the process:

pip install langchain

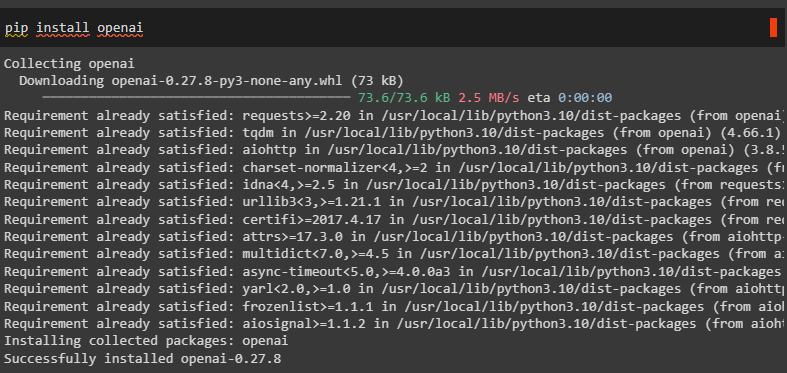

Another module required for the process is OpenAI, which can be installed using the “pip install” command:

pip install openai

Use the “os” library to set environment variables and the “getpass” library to enter the OpenAI API key without displaying it on screen:

import os
import getpass

os.environ['OPENAI_API_KEY'] = getpass.getpass('OpenAI API Key:')
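If the key is already exported in the shell, prompting on every run is unnecessary. A small sketch, assuming you only want to prompt when `OPENAI_API_KEY` is not already set; the helper name `ensure_api_key` is hypothetical:

```python
import getpass
import os


def ensure_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the key from the environment, prompting only if it is missing or empty."""
    key = os.environ.get(var_name)
    if not key:
        # getpass reads the key without echoing it to the terminal
        key = getpass.getpass(f"{var_name}: ")
        os.environ[var_name] = key
    return key
```

Call `ensure_api_key()` once at the top of the script; it returns immediately when the variable is set and falls back to the interactive prompt otherwise.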

Import Libraries

After connecting to OpenAI and installing LangChain, import the libraries required for this process, such as prompt templates and output parsers:

from langchain.prompts import (
    ChatPromptTemplate,
    PromptTemplate,
    HumanMessagePromptTemplate,
)
from langchain.llms import OpenAI
from langchain.output_parsers import (
    PydanticOutputParser,
    OutputFixingParser,
    RetryOutputParser,
)
from pydantic import BaseModel, Field, validator
from typing import List

Specify the Prompt Template

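Next, define the template for the query and the Action class, which inherits from Pydantic's BaseModel and declares the two fields the response must contain: action and action_input. The PydanticOutputParser uses this class to validate the model's output, so a response that leaves out either field is incomplete and fails to parse: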

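# Prompt template that gives the model the context of the query it needs to answer
template = """Based on the user question, provide an Action and Action Input for what step should be taken.
{format_instructions}
Question: {query}
Response:"""

# Pydantic model describing the fields the response must contain
class Action(BaseModel):
    action: str = Field(description="action to take")
    action_input: str = Field(description="input to the action")

# Parser that validates the model's output against the Action model
parser = PydanticOutputParser(pydantic_object=Action)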

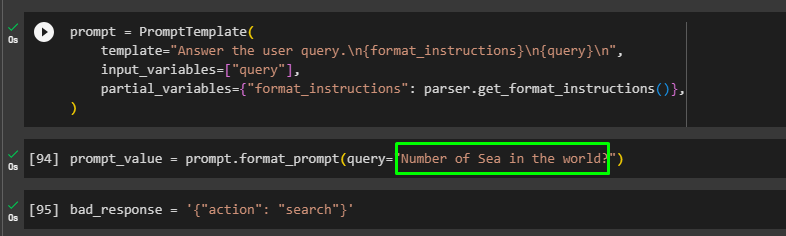
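PydanticOutputParser checks that the model's reply is JSON whose keys match the fields of Action. The sketch below mimics that check with the standard library only, using a dataclass instead of Pydantic, so the failure mode is easy to see. `parse_to_action` is a hypothetical helper, not part of LangChain, and its error message is not the one LangChain produces.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class Action:
    action: str        # action to take
    action_input: str  # input to the action


def parse_to_action(text: str) -> Action:
    """Parse a JSON string into Action, rejecting replies with missing fields."""
    data = json.loads(text)
    required = [f.name for f in fields(Action)]
    missing = [name for name in required if name not in data]
    if missing:
        raise ValueError(f"Failed to parse Action: missing {missing}")
    return Action(**{name: str(data[name]) for name in required})


# A complete reply parses into an Action object
good = parse_to_action('{"action": "search", "action_input": "weather in Paris"}')
print(good)  # Action(action='search', action_input='weather in Paris')

# An incomplete reply, like the bad response used later, is rejected
try:
    parse_to_action('{"action": "search"}')
except ValueError as err:
    error_message = str(err)
print(error_message)  # Failed to parse Action: missing ['action_input']
```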

Create the prompt with PromptTemplate(). The query is an input variable supplied later, while the format instructions generated by the parser are filled in up front as a partial variable:

prompt = PromptTemplate(
    template="Reply to the query\n{format_instructions}\n{query}\n",
    input_variables=["query"],
    partial_variables={"format_instructions": parser.get_format_instructions()},
)
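The split between partial and input variables amounts to two-stage substitution: some placeholders are bound when the template is built, the rest when the prompt is formatted. A stdlib-only sketch of that idea, assuming plain `str.format_map` substitution; `make_prompt` is a hypothetical helper and the instruction text is a placeholder for what `parser.get_format_instructions()` would return:

```python
from typing import Callable

TEMPLATE = "Reply to the query\n{format_instructions}\n{query}\n"


def make_prompt(template: str, **partials: str) -> Callable[..., str]:
    """Bind the partial variables now; return a function that fills the rest later."""
    def format_prompt(**inputs: str) -> str:
        # format_map raises KeyError if a placeholder is left unfilled
        return template.format_map({**partials, **inputs})
    return format_prompt


# Hypothetical stand-in for parser.get_format_instructions()
instructions = 'Respond with JSON containing "action" and "action_input".'
format_prompt = make_prompt(TEMPLATE, format_instructions=instructions)

text = format_prompt(query="What is the capital of France?")
print(text)
```

Binding the instructions once keeps every later call focused on the part that actually changes, the query.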

Format the prompt with a query using the format_prompt() method and store the result in the prompt_value variable:

Next, define a deliberately incomplete response that contains the action field with the value "search" but leaves out action_input:

bad_response = '{"action": "search"}'

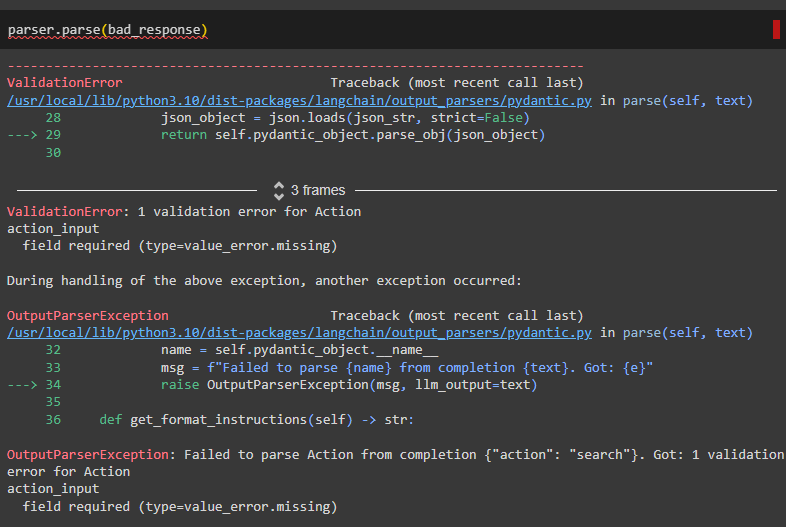

After that, pass bad_response to the parser's parse() method:

parser.parse(bad_response)

Running this raises an OutputParserException, because the response does not contain the required action_input field:

Using Retry Parser

To recover from this error, import the RetryWithErrorOutputParser class from langchain.output_parsers:

from langchain.output_parsers import RetryWithErrorOutputParser

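Create retry_parser by wrapping the original parser with RetryWithErrorOutputParser.from_llm() and passing an OpenAI LLM with temperature set to 0 for more deterministic output:

retry_parser = RetryWithErrorOutputParser.from_llm(
    parser=parser, llm=OpenAI(temperature=0)
)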

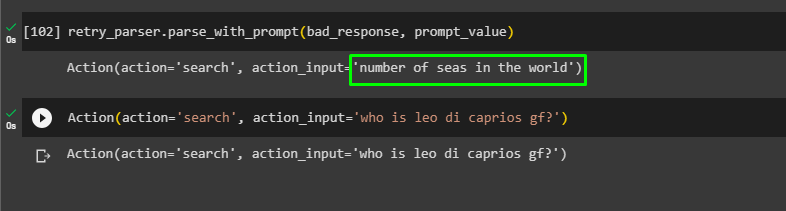
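What distinguishes RetryWithErrorOutputParser from the plain RetryOutputParser is that the parsing error is included in what is sent back to the LLM, next to the original prompt and the bad completion. The sketch below shows the kind of retry prompt assembled from those pieces. The wording is an approximation rather than LangChain's exact template, and `build_retry_prompt` is a hypothetical name.

```python
from typing import Optional


def build_retry_prompt(prompt: str, completion: str, error: Optional[str] = None) -> str:
    """Combine the original prompt, the bad completion and, optionally, the error."""
    parts = [
        f"Prompt:\n{prompt}",
        f"Completion:\n{completion}",
        "Above, the Completion did not satisfy the constraints given in the Prompt.",
    ]
    if error is not None:
        # The extra context a "retry with error" parser adds
        parts.append(f"Details: {error}")
    parts.append("Please try again:")
    return "\n".join(parts)


original = "Answer as JSON with action and action_input."
without_error = build_retry_prompt(original, '{"action": "search"}')
with_error = build_retry_prompt(
    original,
    '{"action": "search"}',
    error="field required: action_input",
)
print(with_error)
```

Including the error tells the model exactly which constraint it missed, which is usually more effective than asking it to try again blindly.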

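Now call parse_with_prompt() with the bad response and the original prompt_value. The retry parser sends both, together with the parsing error, back to the LLM and parses its new answer:

retry_parser.parse_with_prompt(bad_response, prompt_value)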

The result is a valid Action object in which the LLM has filled in action_input from the query, so changing the query in the prompt changes the value placed in action_input.

That is all about using the Retry parser in LangChain.

Conclusion

To use the Retry parser in LangChain, install the LangChain and OpenAI modules, then set the OpenAI API key as an environment variable so the LLM can be called. After that, import the required libraries, define the Pydantic model and prompt template, and wrap the PydanticOutputParser in RetryWithErrorOutputParser. When a response fails to parse, the retry parser sends the prompt, the bad output, and the error back to the LLM to obtain a corrected response. This blog has illustrated the concept of using the Retry parser in LangChain.