LangChain is a framework for building applications on top of large language models (LLMs) that understand and generate natural-language text. These applications often answer questions by retrieving supporting data from a vector store. Qdrant is one of the vector stores that LangChain supports. It is a similarity search engine built to search stored data efficiently and to scale up or down as needed.

This guide explains how to use Qdrant self-querying in LangChain.

How to Use Qdrant Self-Querying in LangChain?

To use Qdrant self-querying in LangChain, follow the steps below:

Install Modules

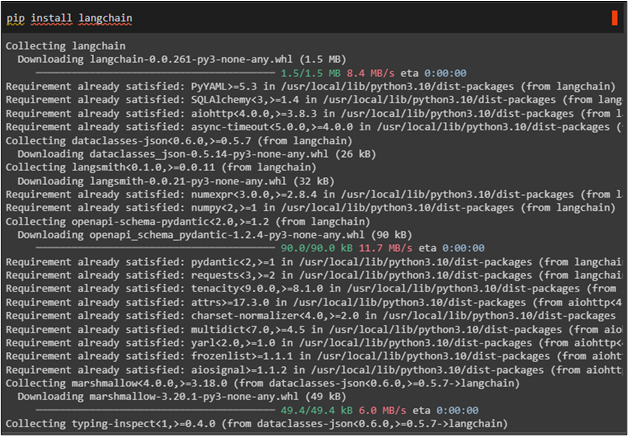

Install LangChain, the framework that provides the components needed to build self-query retrievers over different vector stores:

pip install langchain

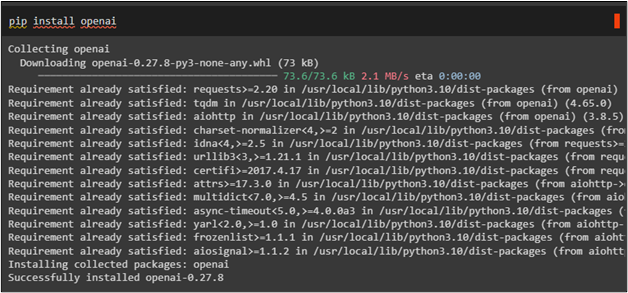

Install the OpenAI module, which provides access to the language model and the embedding model used in this guide:

pip install openai

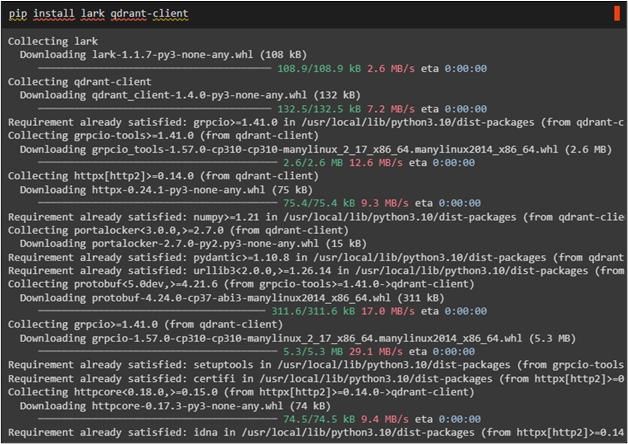

The Lark parser and the Qdrant client are also required for this process, so install them as well:

pip install lark qdrant-client

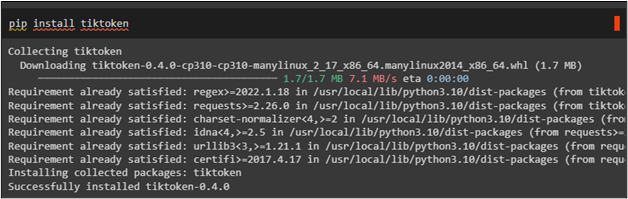

The last installation for this guide is the tiktoken tokenizer, which splits text into small chunks called tokens:

pip install tiktoken

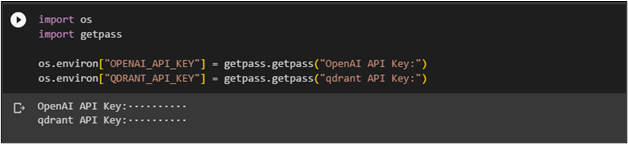

After installing the required modules, set the API keys for the OpenAI and Qdrant accounts so that their resources can be accessed in this guide:

import getpass
import os

# Prompt for each key without echoing it to the screen
os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
os.environ["QDRANT_API_KEY"] = getpass.getpass("Qdrant API Key:")

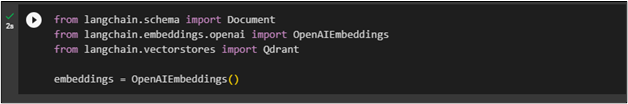
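Prompting on every run can get tedious, so here is a minimal sketch of a helper that asks for a key only when it is not already present in the environment. The function name `ensure_api_key` is hypothetical and not part of LangChain or Qdrant; it relies only on the standard `os` and `getpass` modules.

```python
import getpass
import os


def ensure_api_key(name: str, prompt: str) -> str:
    """Return the key stored in the environment, prompting only if it is missing."""
    value = os.environ.get(name)
    if not value:
        # getpass hides the typed key so it does not appear on screen
        value = getpass.getpass(prompt)
        os.environ[name] = value
    return value


# Example usage (prompts only for keys that are not already set):
# ensure_api_key("OPENAI_API_KEY", "OpenAI API Key:")
# ensure_api_key("QDRANT_API_KEY", "Qdrant API Key:")
```

With this approach, keys exported in the shell or set earlier in the notebook are reused as they are.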

Import Libraries

Import the Qdrant class from LangChain's vector stores to work with the database, and the OpenAIEmbeddings class to convert text into embedding vectors:

from langchain.vectorstores import Qdrant
from langchain.embeddings.openai import OpenAIEmbeddings

embeddings = OpenAIEmbeddings()

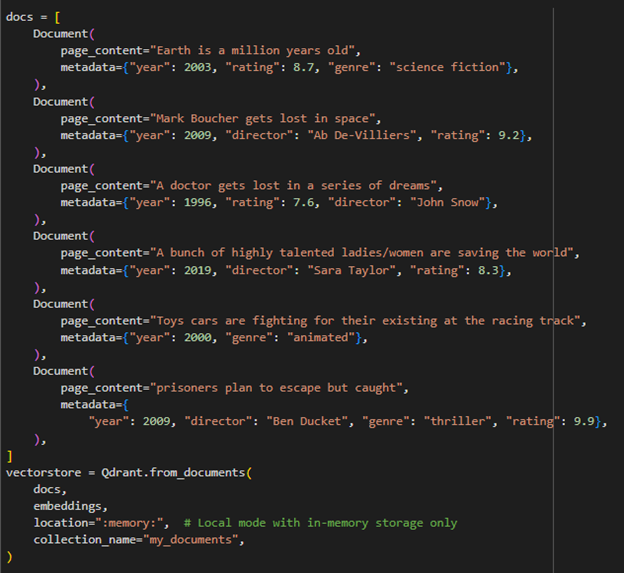
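To give a feel for why text is embedded at all, the sketch below compares small made-up vectors with cosine similarity, one common metric for this kind of comparison. The three-dimensional vectors are invented for illustration; real embeddings have many more dimensions, and the helper `cosine_similarity` is written here by hand rather than taken from LangChain or Qdrant.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional vectors standing in for real embeddings
space_movie = [0.9, 0.1, 0.0]
space_query = [0.8, 0.2, 0.1]
dream_movie = [0.0, 0.2, 0.9]

print(cosine_similarity(space_query, space_movie))
print(cosine_similarity(space_query, dream_movie))
```

The query vector scores higher against the vector that points in a similar direction, which is the idea a similarity search engine builds on.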

Insert Data in Qdrant Store

Create several documents that pair a short movie description with metadata, and store them in the Qdrant vector store:

from langchain.schema import Document

# Sample movie summaries with the metadata used for filtering
docs = [
    Document(
        page_content="Earth is a million years old",
        metadata={"year": 2003, "rating": 8.7, "genre": "science fiction"},
    ),
    Document(
        page_content="Mark Boucher gets lost in space",
        metadata={"year": 2009, "director": "Ab De-Villiers", "rating": 9.2},
    ),
    Document(
        page_content="A doctor gets lost in a series of dreams",
        metadata={"year": 1996, "rating": 7.6, "director": "John Snow"},
    ),
    Document(
        page_content="A bunch of highly talented ladies/women are saving the world",
        metadata={"year": 2019, "director": "Sara Taylor", "rating": 8.3},
    ),
    Document(
        page_content="Toys cars are fighting for their existing at the racing track",
        metadata={"year": 2000, "genre": "animated"},
    ),
    Document(
        page_content="prisoners plan to escape but caught",
        metadata={"year": 2009, "director": "Ben Ducket", "genre": "thriller", "rating": 9.9},
    ),
]

# Embed the documents and store them in an in-memory Qdrant collection
vectorstore = Qdrant.from_documents(
    docs,
    embeddings,
    location=":memory:",  # Local mode with in-memory storage only
    collection_name="my_documents",
)

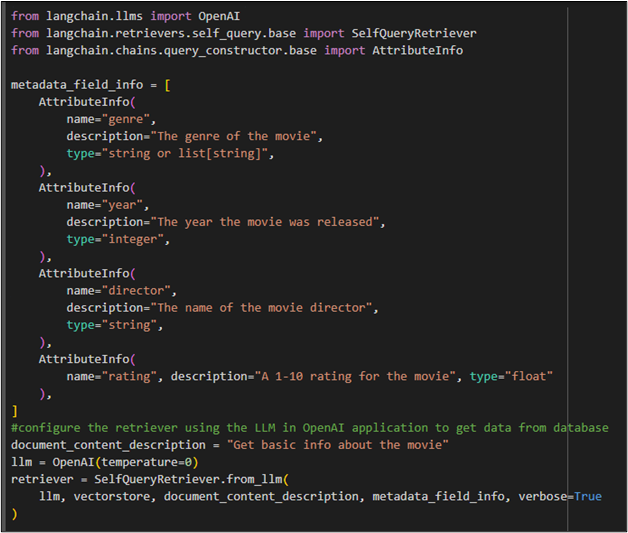
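Since self-querying filters on metadata, it can help to check which fields each document actually carries before describing them to the retriever. The following is a small sketch that uses plain dictionaries as stand-ins for the metadata of the documents above; the helper `field_coverage` is hypothetical and uses only the standard library.

```python
from collections import Counter

# Plain dictionaries standing in for the metadata of the documents above
metadata_list = [
    {"year": 2003, "rating": 8.7, "genre": "science fiction"},
    {"year": 2009, "director": "Ab De-Villiers", "rating": 9.2},
    {"year": 1996, "rating": 7.6, "director": "John Snow"},
    {"year": 2019, "director": "Sara Taylor", "rating": 8.3},
    {"year": 2000, "genre": "animated"},
    {"year": 2009, "director": "Ben Ducket", "genre": "thriller", "rating": 9.9},
]


def field_coverage(items):
    """Count how many metadata dictionaries contain each field."""
    counts = Counter()
    for metadata in items:
        counts.update(metadata.keys())
    return dict(counts)


coverage = field_coverage(metadata_list)
for field, count in sorted(coverage.items()):
    print(f"{field}: present in {count} of {len(metadata_list)} documents")
```

Only "year" appears on every document here, so a filter on "genre", "director", or "rating" can only ever match the documents that include that field.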

Create a Retriever

After inserting data into the Qdrant vector store, create a retriever over it. The following code describes each metadata field with AttributeInfo and then builds the retriever with the SelfQueryRetriever class:

from langchain.llms import OpenAI
from langchain.retrievers.self_query.base import SelfQueryRetriever
from langchain.chains.query_constructor.base import AttributeInfo

# Describe each metadata field so the LLM knows what it can filter on
metadata_field_info = [
    AttributeInfo(
        name="genre",
        description="The genre of the movie",
        type="string or list[string]",
    ),
    AttributeInfo(
        name="year",
        description="The year the movie was released",
        type="integer",
    ),
    AttributeInfo(
        name="director",
        description="The name of the movie director",
        type="string",
    ),
    AttributeInfo(
        name="rating", description="A 1-10 rating for the movie", type="float"
    ),
]

# Configure the retriever with an OpenAI LLM to fetch data from the vector store
document_content_description = "Get basic info about the movie"
llm = OpenAI(temperature=0)
retriever = SelfQueryRetriever.from_llm(
    llm, vectorstore, document_content_description, metadata_field_info, verbose=True
)

Test the Retriever

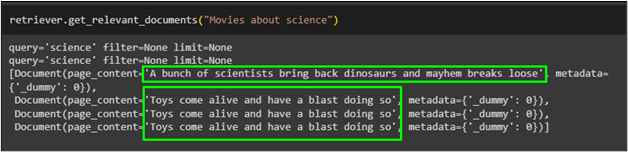

Run the retriever with a natural-language prompt about the data stored in the Qdrant vector store:
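A minimal sketch of such a call is shown below. It assumes `retriever` is the SelfQueryRetriever created earlier and that the installed LangChain version exposes `get_relevant_documents` (newer releases may prefer `invoke`). The wrapper `run_self_query` is a hypothetical helper, and the question text is a placeholder rather than the prompt used for the screenshots.

```python
def run_self_query(retriever, question: str):
    """Send a natural-language question to the retriever and print each hit."""
    documents = retriever.get_relevant_documents(question)
    for document in documents:
        # Each hit carries the stored text and its metadata
        print(document.page_content, document.metadata)
    return documents


# Example call, assuming `retriever` is the SelfQueryRetriever built above.
# The question is a placeholder, not the one used in the original guide:
# run_self_query(retriever, "What are some movies about space?")
```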

The retriever has fetched the documents that match the prompt, as displayed in the following screenshot:

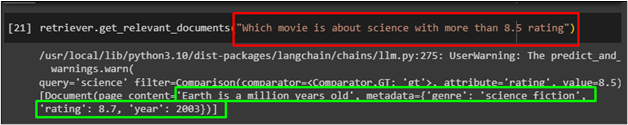

A prompt can combine a semantic query with a metadata filter to ask for a specific result from the dataset:

The retriever has fetched the science fiction movie with a rating of 8.7:

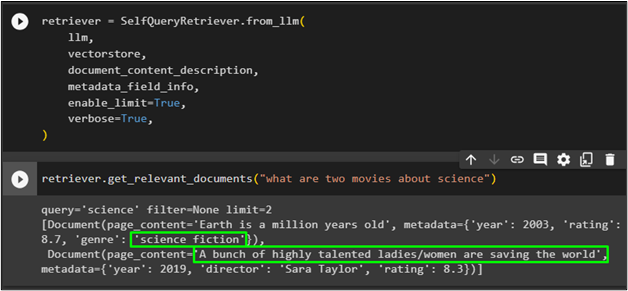
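Conceptually, the self-query retriever asks the LLM to split a prompt into a search query and a metadata filter, and the filter is then applied by the vector store. The sketch below imitates only the filtering step on plain dictionaries. The condition format, the `COMPARATORS` table, and the `matches` helper are illustrative assumptions, not LangChain's or Qdrant's internal representation.

```python
import operator

# Comparator names are illustrative, not LangChain's internal representation
COMPARATORS = {
    "eq": operator.eq,
    "gt": operator.gt,
    "gte": operator.ge,
    "lt": operator.lt,
    "lte": operator.le,
}

movies = [
    {"text": "Earth is a million years old",
     "metadata": {"year": 2003, "rating": 8.7, "genre": "science fiction"}},
    {"text": "Toys cars are fighting for their existing at the racing track",
     "metadata": {"year": 2000, "genre": "animated"}},
    {"text": "prisoners plan to escape but caught",
     "metadata": {"year": 2009, "director": "Ben Ducket", "genre": "thriller", "rating": 9.9}},
]


def matches(metadata, conditions):
    """True when every (field, comparator, value) condition holds."""
    for field, comparator, value in conditions:
        if field not in metadata:
            return False  # a missing field can never satisfy a condition
        if not COMPARATORS[comparator](metadata[field], value):
            return False
    return True


# Hand-written stand-in for what the LLM might extract from a prompt such as
# "a science fiction movie rated above 8.5"
conditions = [("genre", "eq", "science fiction"), ("rating", "gt", 8.5)]

filtered = [movie["text"] for movie in movies if matches(movie["metadata"], conditions)]
print(filtered)
```

Only the document whose metadata satisfies both conditions survives, which mirrors the single science fiction result described above.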

Using K Filter

Configure the self-query retriever to return a specific number of results by enabling the limit option:

# Configure a self-query retriever with the K filter to limit the returned values
retriever = SelfQueryRetriever.from_llm(
    llm,
    vectorstore,
    document_content_description,
    metadata_field_info,
    enable_limit=True,
    verbose=True,
)

Ask a query that states how many records should be fetched from the database:

The retriever has returned only two movies for the query, as requested in the prompt.
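The effect of a limit can be pictured as keeping only the k best-scoring matches. The sketch below is an illustration of that idea and not how LangChain or Qdrant implement it: the similarity scores are made up, and `top_k` is a hypothetical helper built on the standard `heapq` module.

```python
import heapq

# Made-up similarity scores; a real vector store computes these from embeddings
scored_movies = [
    ("Earth is a million years old", 0.41),
    ("Mark Boucher gets lost in space", 0.87),
    ("A doctor gets lost in a series of dreams", 0.79),
    ("prisoners plan to escape but caught", 0.35),
]


def top_k(scored, k):
    """Return the k highest-scoring texts, best first."""
    best = heapq.nlargest(k, scored, key=lambda pair: pair[1])
    return [text for text, _score in best]


print(top_k(scored_movies, 2))
```

Asking for more results than exist simply returns everything available, ordered from best to worst.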

That is all about using Qdrant self-querying in LangChain.

Conclusion

To use Qdrant self-querying in LangChain, install the prerequisite modules: LangChain, OpenAI, Lark, the Qdrant client, and tiktoken. After that, import the libraries needed to insert data into the Qdrant vector store, and then create a retriever that can fetch data from it. Finally, test the retriever with natural-language prompts that use filters and limits to get specific results. This guide has explained the process of using Qdrant self-querying in LangChain.