This guide will illustrate the process of using prompt pipelining in LangChain.

How to Use Prompt Pipelining in LangChain?

Prompt pipelining builds a prompt from smaller, reusable parts that are joined with the + operator. LangChain supports this for both string prompts and chat prompts. To learn how to use prompt pipelining in LangChain, go through the following steps:

Step 1: Importing Modules

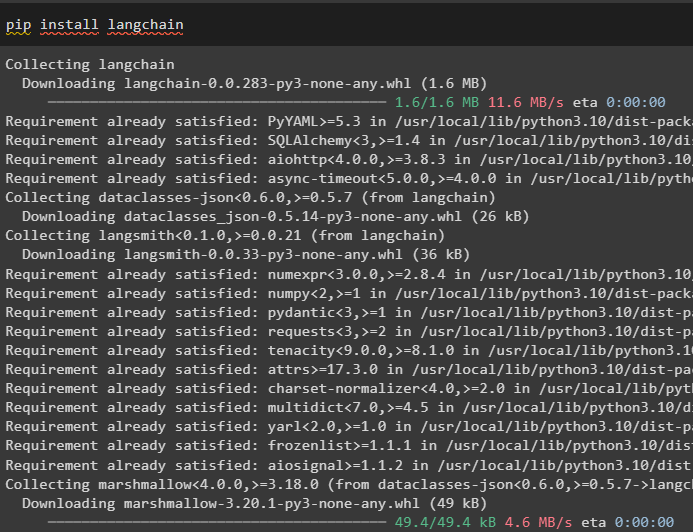

Start using prompt pipelining in LangChain by installing the LangChain module:

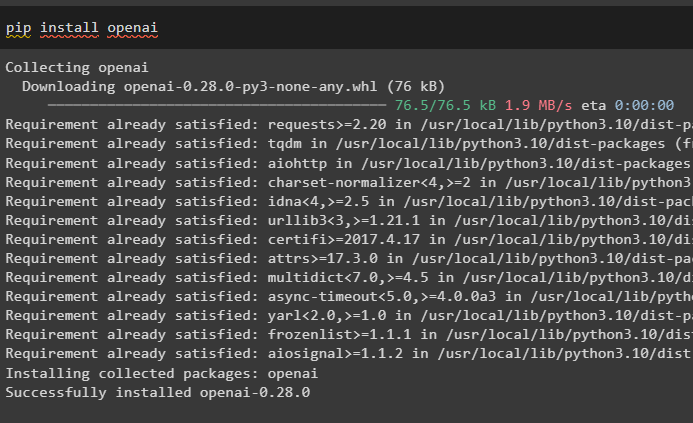

After that, install the OpenAI module, which is required to access OpenAI's language models:

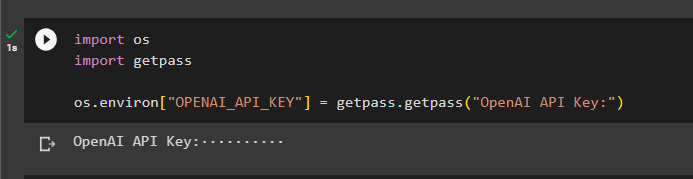

Set up the OpenAI environment by providing the API key from your OpenAI account:

import os

import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
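The key setup above can also be wrapped in a small helper that prompts only when the variable is not already set. This is a minimal sketch: the name `ensure_api_key` and its `ask` and `env` parameters are assumptions made for illustration and are not part of LangChain or the OpenAI package.

```python
import getpass
import os


def ensure_api_key(name="OPENAI_API_KEY", ask=getpass.getpass, env=os.environ):
    """Return the key stored under `name`, prompting only when it is missing."""
    if not env.get(name):
        # getpass hides the typed key so it is not echoed to the screen
        env[name] = ask(f"{name}: ")
    return env[name]
```

Calling `ensure_api_key()` once before creating the model keeps the key out of the source file and avoids a repeated prompt when the variable is already exported in the shell.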

Step 2: Using String Pipelining

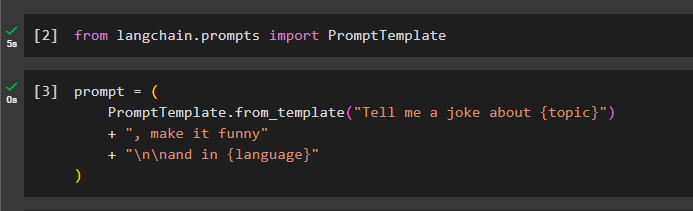

After setting up the OpenAI environment, import the “PromptTemplate” class from LangChain:

from langchain.prompts import PromptTemplate

Now, build the prompt template by joining a template with plain strings using the + operator. The combined prompt asks for a funny joke about a given topic, written in a given language:

prompt = (

PromptTemplate.from_template("Tell me a joke about {topic}")

+ ", make it funny"

+ "\n\nand in {language}"

)

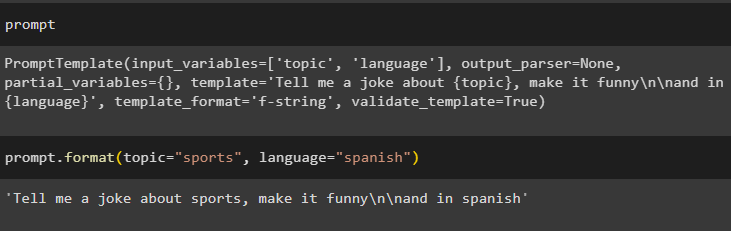

After building the prompt template, evaluate the prompt variable to display the combined template:

prompt

Now, call the prompt.format() method with values for the topic and language variables:

prompt.format(topic="sports", language="spanish")

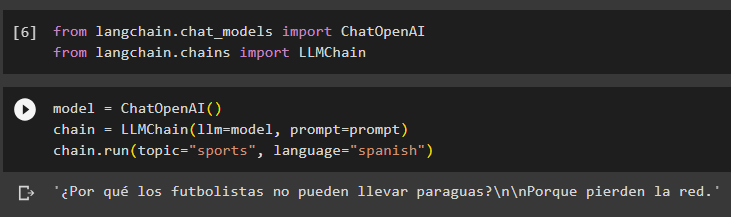
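To make the mechanics of string pipelining easier to see, here is a toy model of the idea that runs without LangChain or an API key. It is only a sketch: `MiniPrompt` is a hypothetical stand-in written for this guide, and it does not reproduce how LangChain's PromptTemplate is implemented. It shows the two things the steps above rely on: the + operator concatenates template pieces, and format() fills in the placeholders afterwards.

```python
from string import Formatter


class MiniPrompt:
    """Toy stand-in for a string prompt template (not LangChain's class)."""

    def __init__(self, template):
        self.template = template

    @property
    def input_variables(self):
        # Collect the {placeholder} names in order of first appearance
        names = []
        for _, field, _, _ in Formatter().parse(self.template):
            if field and field not in names:
                names.append(field)
        return names

    def __add__(self, other):
        # Accept either another MiniPrompt or a plain string
        if isinstance(other, MiniPrompt):
            return MiniPrompt(self.template + other.template)
        if isinstance(other, str):
            return MiniPrompt(self.template + other)
        return NotImplemented

    def format(self, **values):
        return self.template.format(**values)


prompt = (
    MiniPrompt("Tell me a joke about {topic}")
    + ", make it funny"
    + "\n\nand in {language}"
)
text = prompt.format(topic="sports", language="spanish")
print(prompt.input_variables)
print(text)
```

Each + returns a new object instead of changing the left-hand one, so a base prompt can be reused to build several variants.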

String pipelining can also be used to build LLMChains. Import the ChatOpenAI and LLMChain classes:

from langchain.chat_models import ChatOpenAI

from langchain.chains import LLMChain

Create the model with ChatOpenAI(), configure the LLMChain with the model and the prompt, and then call the chain.run() method with values for the template variables:

model = ChatOpenAI()

chain = LLMChain(llm=model, prompt=prompt)

chain.run(topic="sports", language="spanish")
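Conceptually, the chain.run() call above fills the prompt template with the given values and then sends the finished text to the model. The sketch below imitates that flow offline. `run_chain` and `fake_model` are hypothetical names used only here; the fake model simply echoes its input and stands in for a real API call.

```python
def run_chain(template, model, **values):
    """Fill the template, then hand the finished prompt to the model."""
    prompt_text = template.format(**values)
    return model(prompt_text)


def fake_model(prompt_text):
    # Hypothetical offline stand-in: echoes the prompt instead of calling an API
    return f"[model received] {prompt_text}"


template = "Tell me a joke about {topic}, make it funny\n\nand in {language}"
reply = run_chain(template, fake_model, topic="sports", language="spanish")
print(reply)
```

Swapping `fake_model` for a callable that wraps a real model would leave `run_chain` unchanged, which is the main benefit of keeping the prompt and the model separate.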

The following screenshot shows the joke that the LLM returned in Spanish using string prompt pipelining:

Step 3: Using Chat Prompt Pipelining

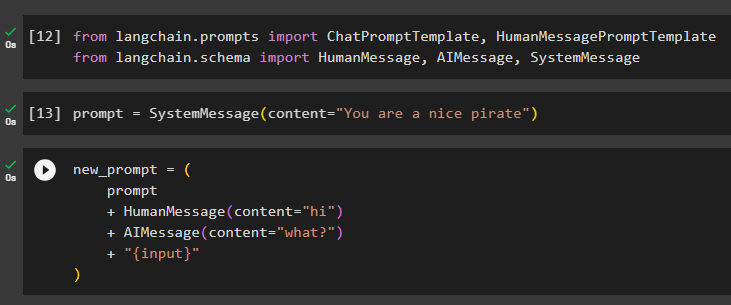

The next step in the guide is chat prompt pipelining. Import the required message classes as displayed in the code below:

from langchain.schema import HumanMessage, AIMessage, SystemMessage

Now, start the chat prompt by creating a SystemMessage object, passing the system text as its content parameter, and assigning it to the prompt variable:

Define the new_prompt variable by appending more messages to the existing prompt with the + operator. A plain string such as "{input}" is treated as a human message template:

new_prompt = (

prompt

+ HumanMessage(content="hi")

+ AIMessage(content="what?")

+ "{input}"

)

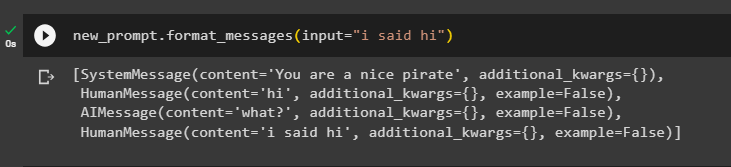

Call new_prompt.format_messages() with a value for the input variable to produce the final list of messages. This step only fills in the template; it does not train the model:

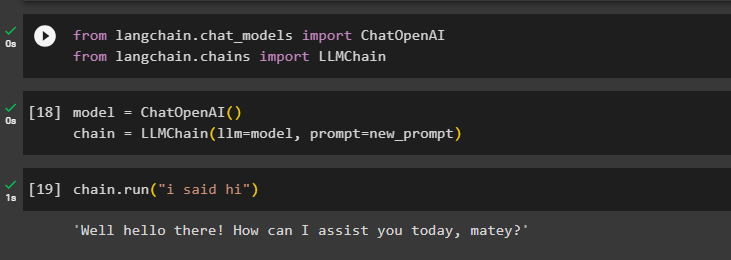
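Chat prompt pipelining can be pictured as building an ordered list of messages, where fixed messages pass through unchanged and template messages are filled in at format time. The sketch below models that behaviour in plain Python. `Msg` and `MiniChatPrompt` are hypothetical classes written for this guide, not LangChain's classes, and the system text and input value are placeholders chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Msg:
    """Toy chat message: a role plus text that may contain {placeholders}."""
    role: str
    content: str
    is_template: bool = False


class MiniChatPrompt:
    """Toy stand-in for a chat prompt: an ordered list of messages."""

    def __init__(self, messages):
        self.messages = list(messages)

    def __add__(self, other):
        if isinstance(other, str):
            # A bare string is treated as a human message template
            other = Msg("human", other, is_template=True)
        if isinstance(other, Msg):
            return MiniChatPrompt(self.messages + [other])
        if isinstance(other, MiniChatPrompt):
            return MiniChatPrompt(self.messages + other.messages)
        return NotImplemented

    def format_messages(self, **values):
        # Only template messages are filled in; fixed messages pass through
        return [
            (m.role, m.content.format(**values) if m.is_template else m.content)
            for m in self.messages
        ]


# The system text and the input value are placeholders chosen for this sketch
prompt = MiniChatPrompt([Msg("system", "You are a helpful assistant")])
new_prompt = prompt + Msg("human", "hi") + Msg("ai", "what?") + "{input}"
messages = new_prompt.format_messages(input="hello again")
for role, content in messages:
    print(f"{role}: {content}")
```

Because every + returns a new object, the original `prompt` still holds only the system message and can be reused as the base for other conversations.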

Chat prompt pipelining can also be used to build LLMChains. Import the ChatOpenAI and LLMChain classes:

from langchain.chat_models import ChatOpenAI

from langchain.chains import LLMChain

Build the “model” using the ChatOpenAI() constructor and configure the “chain” variable with LLMChain():

model = ChatOpenAI()

chain = LLMChain(llm=model, prompt=new_prompt)

Run the chain with the chain.run() method, passing a value for the input variable, to start the conversation with the model:

The model interprets the message and generates a reply, as displayed in the screenshot below:

That is all about using the prompt pipelining in LangChain.

Conclusion

To use prompt pipelining in LangChain, start by installing the LangChain framework and then set up the OpenAI environment using its API key. LangChain provides two methods for prompt pipelining: string prompt pipelining and chat prompt pipelining. Both methods can also be used to build LLMChains that send the combined prompt to the model and return its answer. This guide has illustrated the process of using prompt pipelining in the LangChain framework.