This post demonstrates how to use output parser functions and classes in the LangChain framework.

How to Use the Output Parser in LangChain?

Output parsers are functions and classes that turn a language model's raw text output into structured data. To use output parsers in LangChain, follow the steps below:

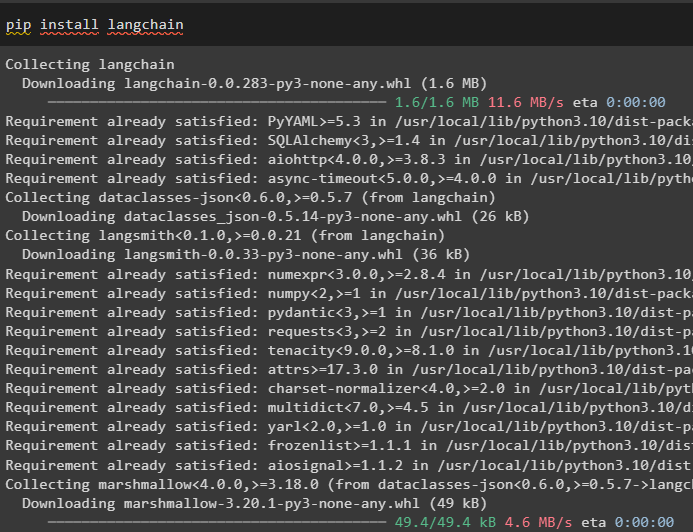

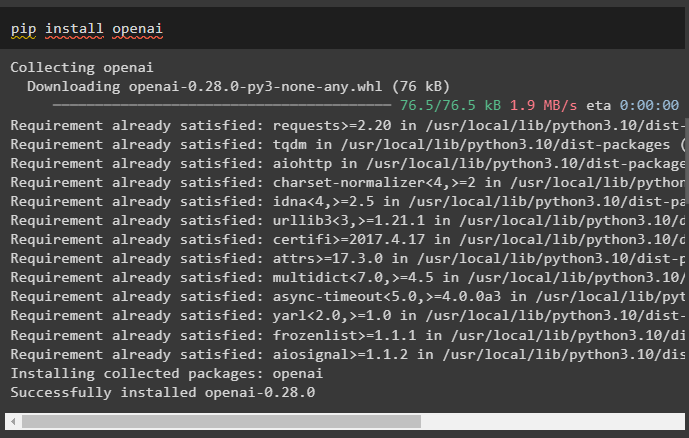

Step 1: Install Modules

First, install the LangChain module along with its dependencies:

pip install langchain

Next, install the OpenAI module, which LangChain's OpenAI and ChatOpenAI classes rely on:

pip install openai

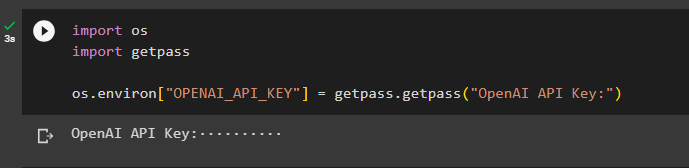
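To confirm that the installation worked before moving on, a quick check like the one below can help. It is a minimal sketch using only the standard library; the helper name `missing_packages` is hypothetical, and it assumes the import names `langchain`, `openai`, and `pydantic` match the installed packages.

```python
import importlib.util


def missing_packages(names):
    """Return the names from `names` that Python cannot find for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Top-level import names of the packages used in this guide (assumed).
    missing = missing_packages(["langchain", "openai", "pydantic"])
    if missing:
        print("Still to install:", ", ".join(missing))
    else:
        print("All required modules are importable.")
```

An empty list means every listed module can be imported; any names returned still need a `pip install`.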

Now, set up the OpenAI environment by providing the API key from your OpenAI account:

import os
import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")

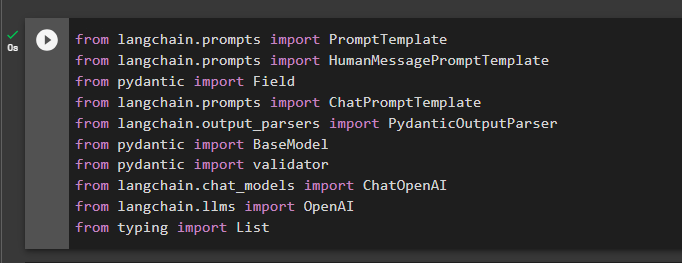
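When the notebook or script is rerun, the prompt above asks for the key every time. A small variation, sketched below, asks only when `OPENAI_API_KEY` is not already set. The helper `ensure_openai_key` is a hypothetical name, and the `prompt` parameter exists only so the input function can be swapped out (for example, in tests).

```python
import getpass
import os


def ensure_openai_key(prompt=getpass.getpass):
    """Return OPENAI_API_KEY, prompting for it only when it is not already set."""
    key = os.environ.get("OPENAI_API_KEY")
    if not key:
        # Same getpass call as above, skipped when the key already exists.
        key = prompt("OpenAI API Key:")
        os.environ["OPENAI_API_KEY"] = key
    return key
```

Calling `ensure_openai_key()` at the top of the script then prompts once and reuses the stored value on later calls in the same process.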

Step 2: Import Libraries

Next, import the libraries required to use the output parsers:

from typing import List

from langchain.chat_models import ChatOpenAI
from langchain.llms import OpenAI
from langchain.output_parsers import PydanticOutputParser
from langchain.prompts import ChatPromptTemplate, HumanMessagePromptTemplate, PromptTemplate
from pydantic import BaseModel, Field, validator

Step 3: Building Data Structure

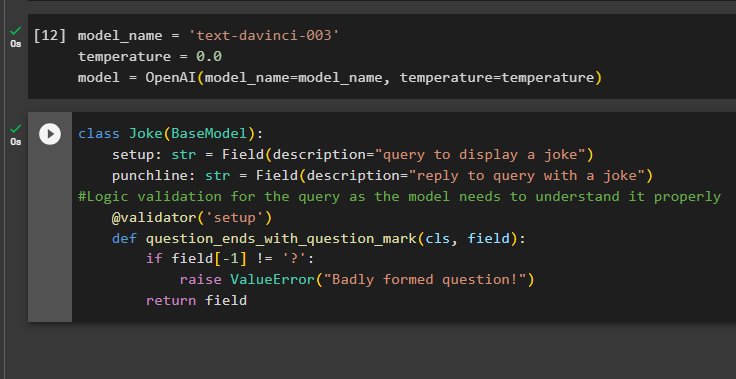

Defining the structure of the output is the core use of output parsers with Large Language Models. Before defining that structure, specify the model that will produce the output:

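model_name = "gpt-3.5-turbo-instruct"  # assumed model name; use any OpenAI completion model available to you
temperature = 0.0  # 0.0 keeps the output as deterministic as possible
model = OpenAI(model_name=model_name, temperature=temperature)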

Now, define a Joke class that inherits from pydantic's BaseModel to describe the structure of the joke the model should return. Pydantic also makes it easy to add custom validation logic; here, a validator rejects any generated setup that is not phrased as a question:

class Joke(BaseModel):
    setup: str = Field(description="query to display a joke")
    punchline: str = Field(description="reply to query with a joke")

    # Validation logic: the setup returned by the model must end with a question mark
    @validator('setup')
    def question_ends_with_question_mark(cls, field):
        if field[-1] != '?':
            raise ValueError("Badly formed question!")
        return field
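The validation rule can be tried without pydantic or an API call. The sketch below is a plain-Python stand-in, not the pydantic model itself: it swaps pydantic's `@validator` for a standard-library dataclass whose `__post_init__` applies the same "setup must end with a question mark" check.

```python
from dataclasses import dataclass


@dataclass
class Joke:
    """Standard-library stand-in for the pydantic Joke model (illustration only)."""

    setup: str
    punchline: str

    def __post_init__(self):
        # Same rule as the @validator('setup') method: the setup must be a question.
        if not self.setup.endswith("?"):
            raise ValueError("Badly formed question!")
```

Creating `Joke(setup="Tell me a joke", punchline="...")` raises `ValueError`, which mirrors how a badly formed setup is rejected when the model's reply is validated.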

Step 4: Setting Prompt Template

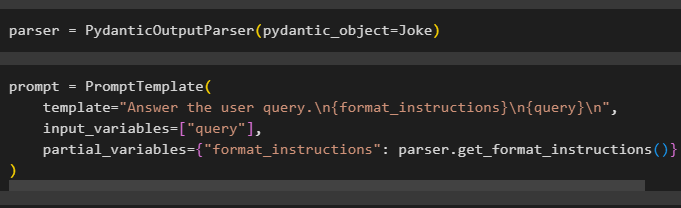

Create the parser by passing the Joke class to the PydanticOutputParser class:

parser = PydanticOutputParser(pydantic_object=Joke)
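The parser's `get_format_instructions()` method produces text that tells the model how to shape its reply, based on the fields of the Joke class. The sketch below only approximates that idea with the standard library: the helper `format_instructions` is hypothetical, the schema layout is simplified, and LangChain's actual instruction text differs.

```python
import json


def format_instructions(fields):
    """Build simplified format instructions from a {field_name: description} map.

    Hypothetical helper: it approximates the idea behind
    PydanticOutputParser.get_format_instructions(); the real text differs.
    """
    schema = {
        "properties": {
            name: {"type": "string", "description": description}
            for name, description in fields.items()
        },
        "required": list(fields),
    }
    # json.dumps without indent keeps the schema on a single line.
    return "Reply with a JSON object that conforms to this schema:\n" + json.dumps(schema)


# Field descriptions copied from the Joke class.
JOKE_FIELDS = {
    "setup": "query to display a joke",
    "punchline": "reply to query with a joke",
}

if __name__ == "__main__":
    print(format_instructions(JOKE_FIELDS))
```

Printing `parser.get_format_instructions()` in the real setup is the reliable way to see the exact text that will be sent to the model.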

After configuring the parser, define the prompt with the PromptTemplate class. The format instructions generated by the parser are filled in as a partial variable, so only the query has to be supplied later:

prompt = PromptTemplate(
    template="Answer the user query.\n{format_instructions}\n{query}\n",
    input_variables=["query"],
    partial_variables={"format_instructions": parser.get_format_instructions()}
)

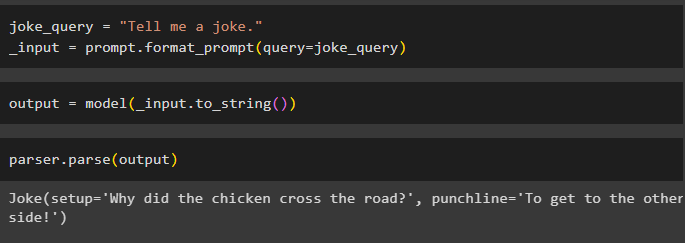
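Conceptually, `partial_variables` pre-fills some placeholders of the template so that the remaining ones are supplied at call time. The plain-Python analogy below uses `functools.partial` with `str.format` to show that idea; `make_prompt` is a hypothetical helper, and the placeholder string stands in for the real output of `parser.get_format_instructions()`.

```python
from functools import partial

# Same template string as in the PromptTemplate call.
TEMPLATE = "Answer the user query.\n{format_instructions}\n{query}\n"


def make_prompt(template, **partial_vars):
    """Pre-fill some placeholders of `template`; the rest are supplied at call time."""
    return partial(template.format, **partial_vars)


# Placeholder text standing in for parser.get_format_instructions().
build_prompt = make_prompt(TEMPLATE, format_instructions="<format instructions>")

if __name__ == "__main__":
    print(build_prompt(query="Tell me a joke."))
```

The design mirrors the template above: the format instructions are fixed once when the prompt is created, and each call only needs a `query`.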

Step 5: Test the Output Parser

With everything configured, store a query in a variable and pass it to the format_prompt() method:

joke_query = "Tell me a joke."
_input = prompt.format_prompt(query=joke_query)

Next, send the formatted prompt to the model and store its response in the output variable:

output = model(_input.to_string())

Finally, pass the output to the parser's parse() method, which returns a Joke object or raises an error if the response does not match the defined structure:

parser.parse(output)
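To see roughly what happens inside the parsing step without calling the API, the sketch below extracts a JSON object from a completion string, checks the two fields, and applies the question-mark rule. It is a simplified stand-in, not LangChain's implementation: `parse_joke` is a hypothetical helper, it returns a plain dict instead of a Joke object, and `sample_output` is invented text rather than a real model response.

```python
import json
import re


def parse_joke(text):
    """Simplified stand-in for parser.parse(): extract, load, and validate a joke."""
    # Grab everything from the first "{" to the last "}" (spanning newlines).
    match = re.search(r"\{.*\}", text, re.DOTALL)
    if match is None:
        raise ValueError("No JSON object found in model output")
    # json.JSONDecodeError is a subclass of ValueError, so bad JSON also raises ValueError.
    data = json.loads(match.group(0))
    for name in ("setup", "punchline"):
        if not isinstance(data.get(name), str):
            raise ValueError(f"Missing or non-string field: {name}")
    if not data["setup"].endswith("?"):
        raise ValueError("Badly formed question!")
    return data


# Invented completion text, used only to exercise the parser offline.
sample_output = '\n{"setup": "Why did the chicken cross the road?", "punchline": "To get to the other side!"}'

if __name__ == "__main__":
    print(parse_joke(sample_output))
```

Running it on text without a JSON object, or with a setup that lacks a question mark, raises `ValueError`, which is the same kind of failure the real parser reports when the model ignores the format instructions.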

That is all about using the output parser in LangChain.

Conclusion

To use the output parser in LangChain, install the modules and set up the OpenAI environment with an API key. Next, define the model and describe the structure of its output with a pydantic class, including validation logic for its fields. Once the data structure is in place, create the prompt template, then run a query through the model and parse its response with the output parser. This guide has illustrated the process of using the output parser in the LangChain framework.