LangChain is a framework for building applications on top of Large Language Models (LLMs). On its own, a language model does not remember earlier turns of a conversation, so LangChain provides memory components that store the chat history and pass it back to the model as context. With the previous messages available, the model can resolve ambiguous prompts that only make sense in light of what was said before.

This blog explains the process of using memory in LLMChain through LangChain.

How to Use Memory in LLMChain Through LangChain?

To add memory to an LLMChain, import the ConversationBufferMemory class from LangChain and pass an instance of it to the chain.

To learn the process of using the memory in LLMChain through LangChain, go through the following guide:

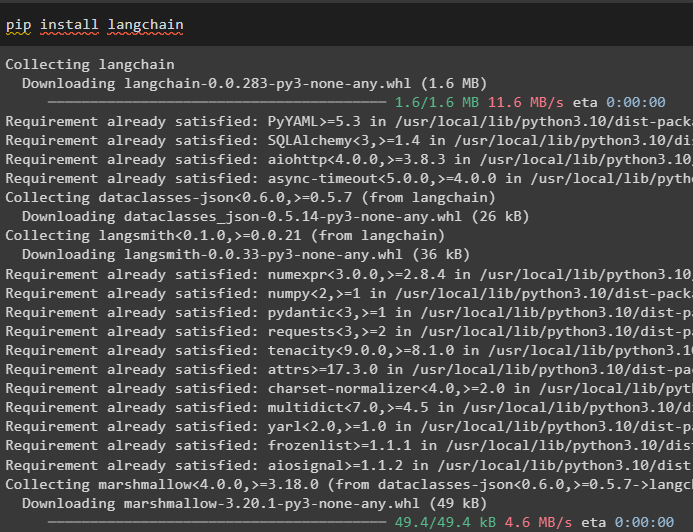

Step 1: Install Modules

First, install LangChain using the pip command:

pip install langchain

Next, install the OpenAI module, which provides the client library used to call OpenAI's LLMs and chat models:

pip install openai

Set up the environment for OpenAI by importing the os and getpass libraries and storing the API key in an environment variable:

import os
import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
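When a notebook is re-run, being prompted for the key every time gets tedious. A minimal sketch, assuming a hypothetical helper named `ensure_api_key` (it is not part of LangChain or the OpenAI library), asks for the key only when the variable is not already set:

```python
import getpass
import os


def ensure_api_key(name="OPENAI_API_KEY", env=os.environ, ask=getpass.getpass):
    """Return the key stored under `name`, prompting for it only if it is missing.

    `ensure_api_key` is a hypothetical helper for illustration. `env` and `ask`
    are parameters so the function can be exercised without a real terminal.
    """
    if not env.get(name):
        env[name] = ask("OpenAI API Key:")
    return env[name]
```

Calling `ensure_api_key()` once at the top of a script would then replace the direct assignment to `os.environ`.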

Step 2: Importing Libraries

After setting up the environment, import the required classes, such as ConversationBufferMemory and LLMChain, from LangChain:

from langchain.chains import LLMChain
from langchain.llms import OpenAI
from langchain.memory import ConversationBufferMemory
from langchain.prompts import PromptTemplate

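Configure the prompt template with two variables: “input” for the query from the user and “hist” for the conversation history supplied by the buffer memory. The memory_key passed to ConversationBufferMemory must match the history variable used in the template:

template = """You are a Model having a chat with a human

{hist}
Human: {input}
Chatbot:"""

prompt = PromptTemplate(
    input_variables=["hist", "input"], template=template
)

memory = ConversationBufferMemory(memory_key="hist")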
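To see what the model actually receives, it can help to fill the template by hand. The sketch below uses plain `str.format` rather than LangChain itself, and both the opening instruction line and the sample history are assumptions made for illustration; it only shows how the `hist` and `input` slots are filled:

```python
# Same layout as the template above; the first line is an assumed system instruction.
template = """You are a Model having a chat with a human

{hist}
Human: {input}
Chatbot:"""

# Made-up history, in the "Human: ... / AI: ..." layout a string buffer produces.
hist = "Human: My name is Sam\nAI: Nice to meet you, Sam!"

# Fill both slots the way the chain does before calling the model.
rendered = template.format(hist=hist, input="What is my name?")
print(rendered)
```

The printed text ends with `Chatbot:`, which is the cue for the model to write the next reply with the earlier exchange in view.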

Step 3: Configuring LLM

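Once the prompt template is built, create the model and configure LLMChain() with the model, the prompt, and the memory. Setting verbose=True prints the fully formatted prompt on every call:

llm = OpenAI()

llm_chain = LLMChain(
    llm=llm,
    prompt=prompt,
    verbose=True,
    memory=memory,
)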

Step 4: Testing LLMChain

After that, test the LLMChain by calling its predict() method with the user's prompt passed as the “input” variable. Then send a second input that depends on the first one, so the model has to use the conversation stored in memory to answer it:

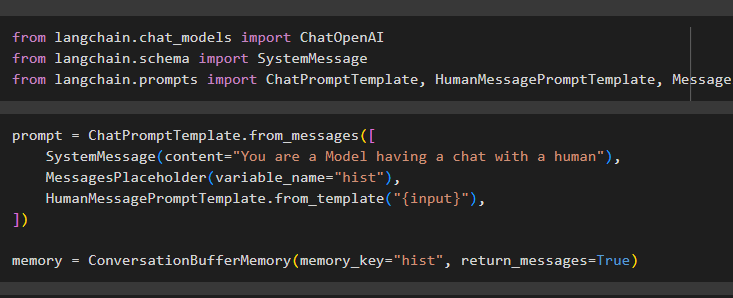
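Conceptually, each call to the chain does three things: load the stored history, fill the prompt, and save the new exchange. The following is a minimal sketch of that loop in plain Python, not LangChain's implementation; `BufferMemory`, `echo_llm`, and `predict` are hypothetical stand-ins so the example runs without an API key:

```python
class BufferMemory:
    """Toy stand-in for a conversation buffer: keeps every turn as text."""

    def __init__(self):
        self.turns = []

    def load(self):
        # History is handed to the prompt as one newline-joined string.
        return "\n".join(self.turns)

    def save(self, user_input, output):
        self.turns.append(f"Human: {user_input}")
        self.turns.append(f"AI: {output}")


TEMPLATE = "{hist}\nHuman: {input}\nChatbot:"


def echo_llm(prompt):
    """Fake model: reports how many earlier human turns it can see in the prompt."""
    earlier = prompt.count("Human:") - 1  # exclude the current question
    return f"I can see {earlier} earlier message(s)."


def predict(memory, user_input, llm=echo_llm):
    prompt = TEMPLATE.format(hist=memory.load(), input=user_input)
    output = llm(prompt)
    memory.save(user_input, output)  # the exchange becomes context for the next call
    return output


memory = BufferMemory()
first = predict(memory, "Hello")
second = predict(memory, "Do you remember me?")
print(first)
print(second)
```

The second call sees the first exchange only because `predict` saved it, which is the role the memory object plays inside the chain.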

Step 5: Adding Memory to a Chat Model

Memory can also be added to an LLMChain that is backed by a chat model. Start by importing the required classes:

from langchain.chat_models import ChatOpenAI
from langchain.schema import SystemMessage
from langchain.prompts import ChatPromptTemplate, HumanMessagePromptTemplate, MessagesPlaceholder

Configure a chat prompt template made of a system message, a placeholder for the conversation history, and a template for the input from the user. Set return_messages=True so that the memory returns the history as a list of messages instead of a single string:

prompt = ChatPromptTemplate.from_messages([
    SystemMessage(content="You are a Model having a chat with a human"),
    MessagesPlaceholder(variable_name="hist"),
    HumanMessagePromptTemplate.from_template("{input}"),
])

memory = ConversationBufferMemory(memory_key="hist", return_messages=True)
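The `return_messages=True` flag matters because a chat model expects a list of role-tagged messages rather than one flat string. A rough sketch of the difference, using a hypothetical `Message` dataclass instead of LangChain's own message classes and a made-up history:

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str      # "system", "human", or "ai"
    content: str


# Made-up history for illustration.
history = [
    Message("human", "My name is Sam"),
    Message("ai", "Nice to meet you, Sam!"),
]


def as_string(messages):
    """Flatten history into one block of text, as a completion-style prompt needs."""
    labels = {"human": "Human", "ai": "AI"}
    return "\n".join(f"{labels[m.role]}: {m.content}" for m in messages)


def build_chat_prompt(messages, user_input):
    """Keep history as separate messages between the system and new human message."""
    system = Message("system", "You are a Model having a chat with a human")
    return [system, *messages, Message("human", user_input)]


print(as_string(history))
for message in build_chat_prompt(history, "What is my name?"):
    print(message)
```

The list form mirrors the order of the chat prompt: system message first, then the stored history where the placeholder sits, then the new human message.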

Step 6: Configuring LLMChain

Create the chat model and set up LLMChain() with the model, the prompt, and the memory:

llm = ChatOpenAI()

chat_llm_chain = LLMChain(
    llm=llm,
    prompt=prompt,
    verbose=True,
    memory=memory,
)

Step 7: Testing LLMChain

Finally, test the chain by calling predict() with an input so the model generates a reply to the prompt. Because verbose is set to True, the chain prints the formatted prompt, including the previous conversation held in memory, before the actual answer to the query.

That is all about using memory in LLMChain using LangChain.

Conclusion

To use memory in an LLMChain through the LangChain framework, install the LangChain and OpenAI modules and set up the environment with the OpenAI API key. Then import ConversationBufferMemory from LangChain, reference its memory key in the prompt template, and pass the memory to the LLMChain so that previous exchanges are stored and reused. Memory can be added to a chat model in the same way by using a chat prompt template with a messages placeholder. This guide has elaborated on the process of using memory in LLMChain through LangChain.