This guide will illustrate the process of using entity memory in LangChain.

How to Use Entity Memory in LangChain?

Entity memory stores key facts about the entities (people, projects, tools, and so on) mentioned in a conversation, so that they can be retrieved later when the human asks about them in a query/prompt. To learn how to use entity memory in LangChain, follow this guide:

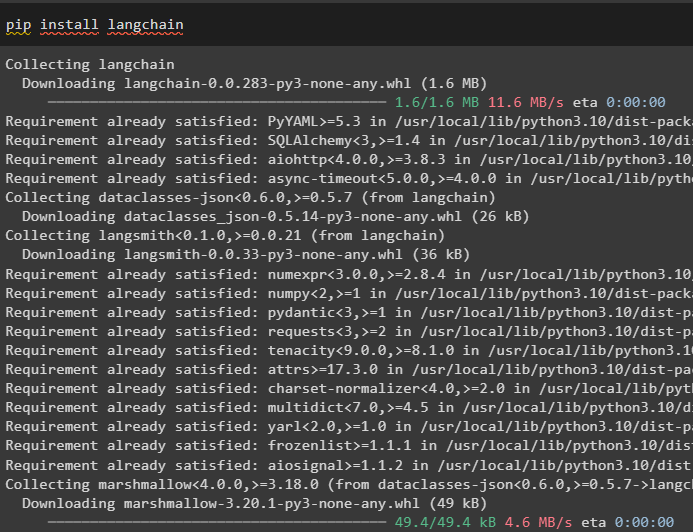

Step 1: Install Modules

First, install the LangChain module and its dependencies using the pip command:

pip install langchain

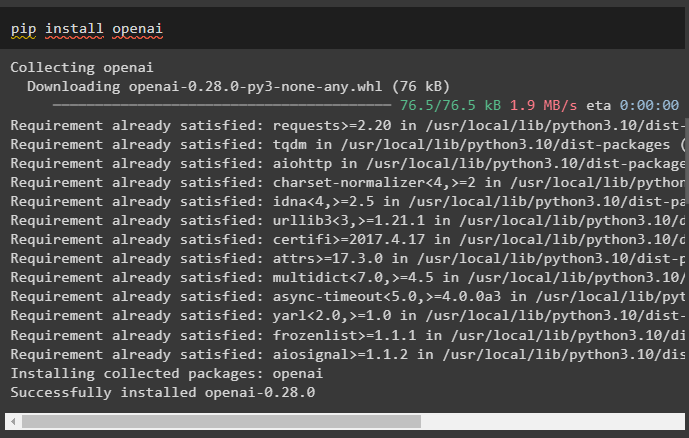

After that, install the OpenAI module to get its libraries for building LLMs and chat models:

pip install openai

Set up the OpenAI environment using the API key, which can be obtained from your OpenAI account:

import os
import getpass

# Prompt for the key without echoing it and expose it to the OpenAI client
os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
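Running the snippet above in a session where the key is already set will prompt for it again. A small variation, sketched below, reuses an existing OPENAI_API_KEY and only falls back to getpass when it is missing. The helper name ensure_openai_key is hypothetical and not part of LangChain or the OpenAI library.

```python
import getpass
import os


def ensure_openai_key(var: str = "OPENAI_API_KEY") -> str:
    """Hypothetical helper: reuse an existing key, prompting only if it is missing."""
    key = os.environ.get(var)
    if not key:
        # getpass does not echo the typed key to the terminal
        key = getpass.getpass("OpenAI API Key:")
        os.environ[var] = key
    return key
```

Call ensure_openai_key() once before creating the OpenAI() model; in notebooks that are re-run often, this avoids retyping the key.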

Step 2: Using Entity Memory

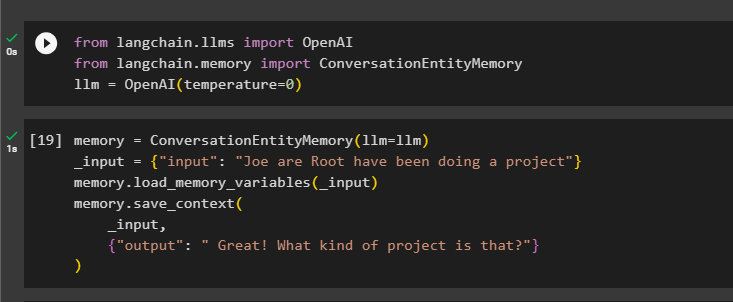

To use entity memory, import the required libraries and build the LLM with the OpenAI() method:

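from langchain.llms import OpenAI
from langchain.memory import ConversationEntityMemory

# temperature=0 makes the model's answers as deterministic as possible
llm = OpenAI(temperature=0)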

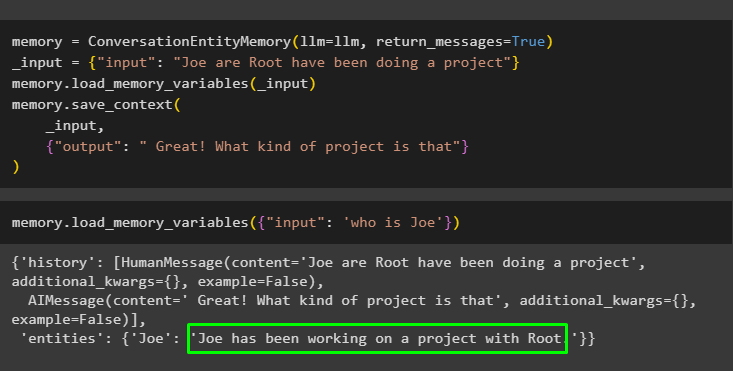

After that, create the memory with the ConversationEntityMemory() method and save a conversation turn into it using the input and output variables:

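# Entity memory uses the LLM to detect entities and summarize facts about them
memory = ConversationEntityMemory(llm=llm)

_input = {"input": "Joe and Root have been doing a project"}

# Load the variables first so the memory detects the entities in this input
memory.load_memory_variables(_input)

# Store the human input together with the AI's reply
memory.save_context(
    _input,
    {"output": " Great! What kind of project is that?"}
)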
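To make the load_memory_variables()/save_context() round trip easier to picture, here is a minimal toy sketch of the idea. It is not LangChain's implementation: ToyEntityMemory is hypothetical, and where ConversationEntityMemory asks the LLM to find entities and update their summaries, this sketch uses a naive regex on capitalized words and simply appends each statement to the entity's summary.

```python
import re
from typing import Dict, List

# Capitalized words that are not entities (illustrative, far from complete)
STOPWORDS = {"The", "What", "Who", "They", "Great"}


class ToyEntityMemory:
    """Hypothetical stand-in for ConversationEntityMemory: a regex replaces the LLM."""

    def __init__(self) -> None:
        self.history: List[str] = []
        self.entity_store: Dict[str, str] = {}

    def _extract(self, text: str) -> List[str]:
        # Naive heuristic: capitalized words that are not in STOPWORDS
        return [w for w in re.findall(r"\b[A-Z][a-zA-Z]+\b", text) if w not in STOPWORDS]

    def load_memory_variables(self, inputs: Dict[str, str]) -> Dict[str, object]:
        # Return the chat history plus the current summary of each entity in the input
        names = self._extract(inputs["input"])
        return {
            "history": "\n".join(self.history),
            "entities": {n: self.entity_store.get(n, "") for n in names},
        }

    def save_context(self, inputs: Dict[str, str], outputs: Dict[str, str]) -> None:
        human, ai = inputs["input"], outputs["output"].strip()
        self.history += [f"Human: {human}", f"AI: {ai}"]
        for name in self._extract(human):
            # Append the new statement to that entity's running summary
            previous = self.entity_store.get(name, "")
            self.entity_store[name] = f"{previous} {human}".strip()


memory = ToyEntityMemory()
_input = {"input": "Joe and Root have been doing a project"}
print(memory.load_memory_variables(_input))
# {'history': '', 'entities': {'Joe': '', 'Root': ''}}
memory.save_context(_input, {"output": " Great! What kind of project is that?"})
print(memory.load_memory_variables({"input": "Who is Root?"})["entities"])
# {'Root': 'Joe and Root have been doing a project'}
```

The real class produces better summaries because the LLM rewrites them, but the flow is the same: entities found in the input are looked up on load and updated on save.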

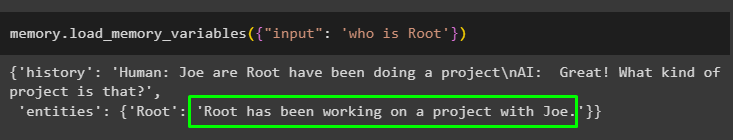

Now, test the memory using the query/prompt in the input variable by calling the load_memory_variables() method:
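The value returned by load_memory_variables() is a dictionary; assuming, as in the LangChain versions this guide targets, that it has an "entities" key mapping each entity name to its summary, a small helper can print it in a readable form. The helper describe_entities and the sample dictionary below are hypothetical; real summaries are written by the LLM.

```python
from typing import Any, Dict


def describe_entities(memory_vars: Dict[str, Any]) -> str:
    """Hypothetical helper: one readable line per entity in load_memory_variables() output."""
    entities = memory_vars.get("entities", {})
    return "\n".join(
        f"{name}: {summary if summary else '(no summary yet)'}"
        for name, summary in entities.items()
    )


# Hypothetical result; an empty summary means the entity was detected but not yet saved
sample = {
    "history": "Human: Joe and Root have been doing a project\nAI: Great! What kind of project is that?",
    "entities": {"Joe": "", "Root": "Root is doing a project with Joe."},
}
print(describe_entities(sample))
# Joe: (no summary yet)
# Root: Root is doing a project with Joe.
```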

Now, give some more information so the model can add a few more entities to the memory:


Next, pass a prompt in the input to get an output based on the entities that are stored in the memory:

Step 3: Using Entity Memory in a Chain

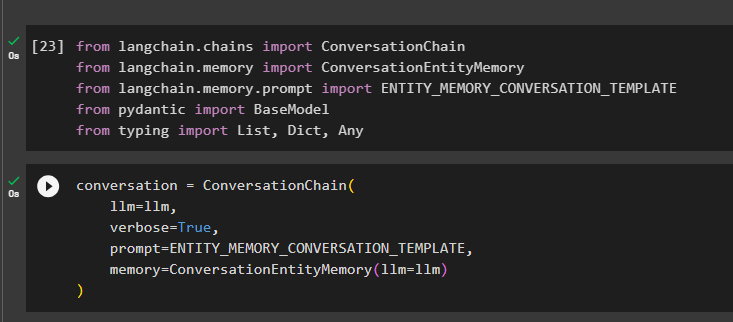

To use entity memory in a chain, import the required libraries using the following code block:

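from langchain.chains import ConversationChain
from langchain.llms import OpenAI
from langchain.memory import ConversationEntityMemory
from langchain.memory.prompt import ENTITY_MEMORY_CONVERSATION_TEMPLATE

# Not used in the steps below
from pydantic import BaseModel
from typing import List, Dict, Any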

Build the conversation chain with the ConversationChain() method, passing arguments such as llm, verbose, prompt, and memory:

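# llm is the OpenAI model created in Step 2
conversation = ConversationChain(
    llm=llm,
    verbose=True,  # log the formatted prompt on each call
    prompt=ENTITY_MEMORY_CONVERSATION_TEMPLATE,
    memory=ConversationEntityMemory(llm=llm)
)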

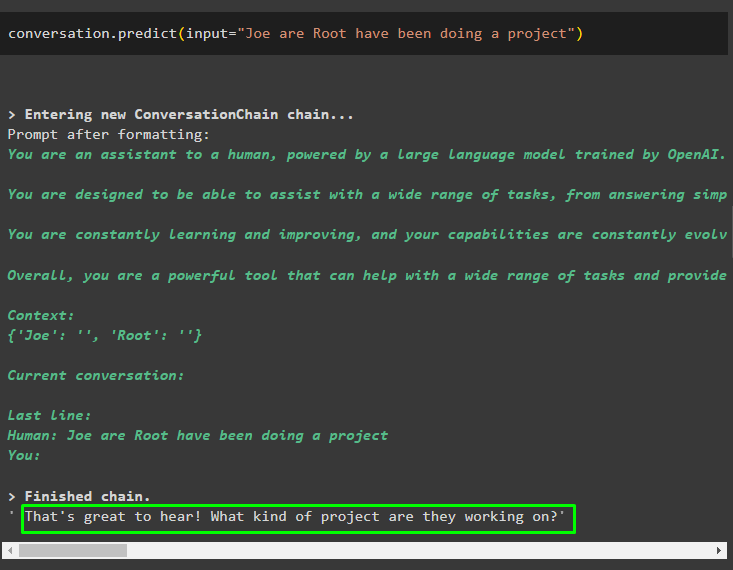

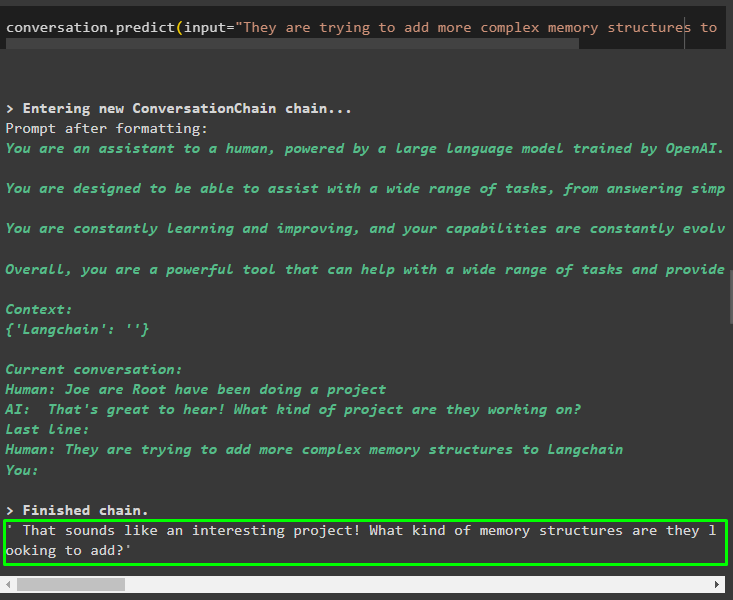

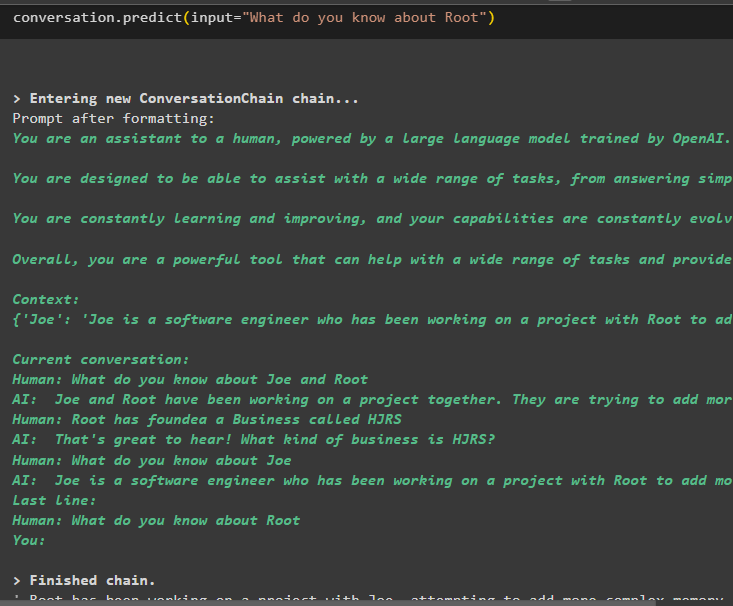

Call the conversation.predict() method, passing the prompt or query as the input:
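Conceptually, each predict() call on a chain with memory does four things: load the memory variables, fill the prompt template, call the LLM, and save the new turn back into the memory. The sketch below shows that cycle with plain Python so it runs without an API key. Everything in it is hypothetical: HistoryOnlyMemory tracks history but no entities, TEMPLATE is a simplified stand-in for ENTITY_MEMORY_CONVERSATION_TEMPLATE (whose real wording differs), and fake_llm replaces OpenAI.

```python
from typing import Any, Dict, List


class HistoryOnlyMemory:
    """Hypothetical memory exposing the same two methods; it stores history, no entities."""

    def __init__(self) -> None:
        self.lines: List[str] = []

    def load_memory_variables(self, inputs: Dict[str, str]) -> Dict[str, object]:
        return {"history": "\n".join(self.lines), "entities": "(none)"}

    def save_context(self, inputs: Dict[str, str], outputs: Dict[str, str]) -> None:
        self.lines += [f"Human: {inputs['input']}", f"AI: {outputs['output'].strip()}"]


# Simplified stand-in for ENTITY_MEMORY_CONVERSATION_TEMPLATE
TEMPLATE = "Context:\n{entities}\n\nCurrent conversation:\n{history}\nHuman: {input}\nAI:"


def predict(llm: Any, memory: Any, user_input: str) -> str:
    inputs = {"input": user_input}
    # 1. Ask the memory for its variables (history and entity context)
    variables = memory.load_memory_variables(inputs)
    # 2. Fill the prompt template with those variables plus the new input
    prompt = TEMPLATE.format(**variables, **inputs)
    # 3. Call the model
    reply = llm(prompt)
    # 4. Save the turn so the next call can see it
    memory.save_context(inputs, {"output": reply})
    return reply.strip()


prompts: List[str] = []


def fake_llm(prompt: str) -> str:
    prompts.append(prompt)  # record what the model was sent
    return " Great! What kind of project is that?"


memory = HistoryOnlyMemory()
predict(fake_llm, memory, "Joe and Root have been doing a project")
predict(fake_llm, memory, "Who is Root?")
print(prompts[-1])
```

The second prompt contains the first exchange, which is why follow-up questions can refer to earlier turns; with ConversationEntityMemory the {entities} slot additionally carries the stored summaries.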

Now, get a separate output for each entity that describes the information stored about it:

Use the model's output to write the next input, so the model can store more information about these entities:

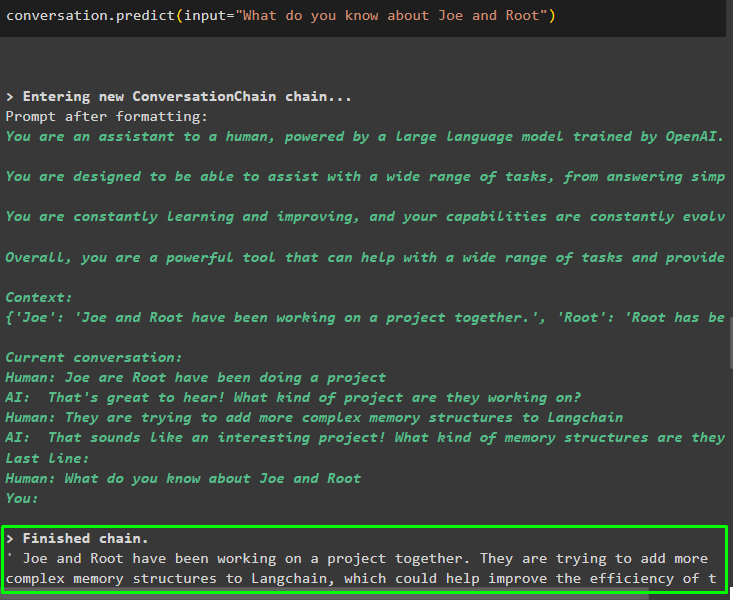

Once this information has been stored in the memory, ask a question to extract specific information about the entities:

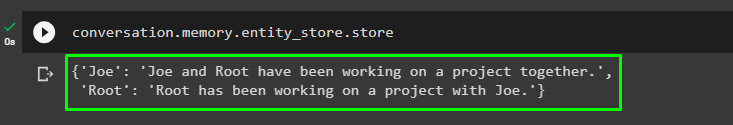

Step 4: Testing the Memory Store

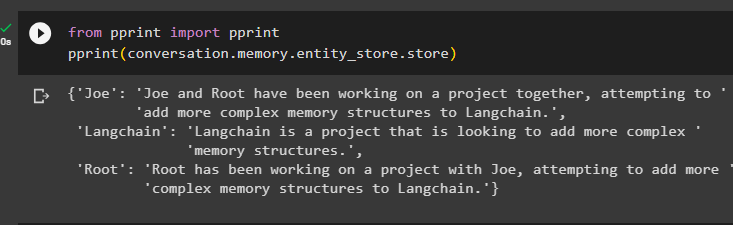

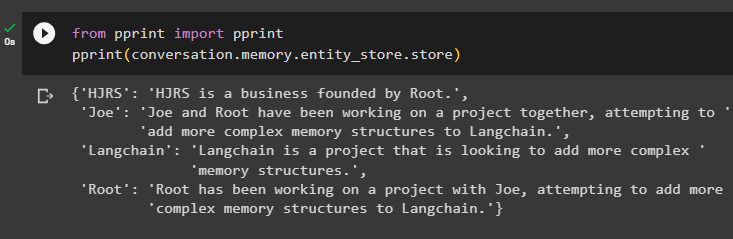

The user can inspect the memory store directly to see the information stored in it using the following code:

from pprint import pprint

# entity_store.store holds the summary stored for each entity
pprint(conversation.memory.entity_store.store)
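Assuming the default in-memory entity store, whose store attribute is a plain Python dict, pprint sorts its keys alphabetically (sort_dicts=True is the default), which is likely why the entities show up in alphabetical order. The sketch below uses pformat, which returns the same text pprint would print, on hypothetical store contents; the real summaries are written by the LLM.

```python
from pprint import pformat

# Hypothetical store contents, inserted out of alphabetical order on purpose
store = {
    "Root": "Root is working on a project with Joe.",
    "Joe": "Joe is working on a project with Root.",
}

# sort_dicts=True orders the keys; width forces one entity per line here
text = pformat(store, width=60, sort_dicts=True)
print(text)
```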

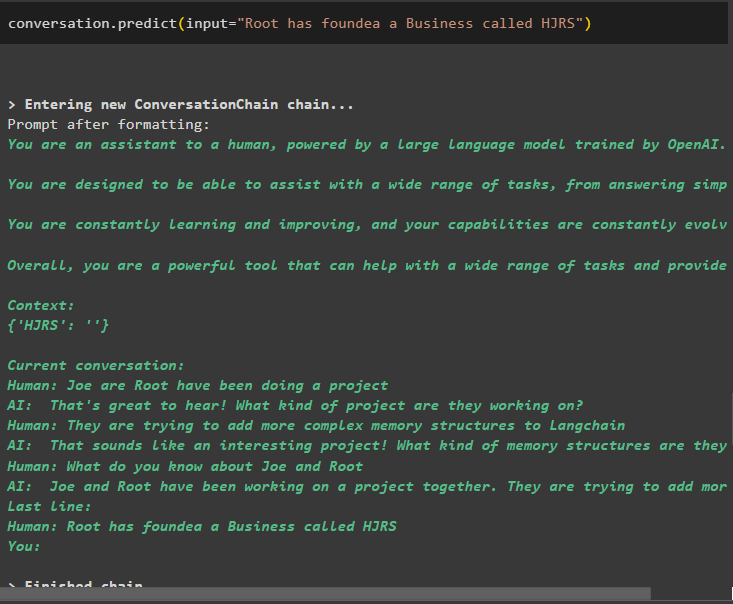

Provide more information to be stored in the memory; the more the memory knows about each entity, the more accurate the answers tend to be:

Extract information from the memory store after adding more information about the entities:

pprint(conversation.memory.entity_store.store)

The memory has information about multiple entities like HJRS, Joe, LangChain, and Root:

Now, extract information about a specific entity by passing a query or prompt in the input variable:
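Asking through a prompt lets the LLM phrase the answer; when the exact stored text is enough, it can also be read straight from the store without an LLM call. The sketch below assumes the store behaves like a dict of name-to-summary strings; find_entity and the store contents are hypothetical (the HJRS and LangChain summaries are placeholders, not real data).

```python
from typing import Dict, Optional


def find_entity(store: Dict[str, str], name: str) -> Optional[str]:
    """Hypothetical helper: case-insensitive lookup of one entity's stored summary."""
    wanted = name.strip().lower()
    for key, summary in store.items():
        if key.lower() == wanted:
            return summary
    return None  # the entity has not been stored yet


# Hypothetical store contents; in the guide these summaries are written by the LLM
store = {
    "HJRS": "(summary of HJRS)",
    "Joe": "Joe and Root have been doing a project.",
    "LangChain": "(summary of LangChain)",
    "Root": "Joe and Root have been doing a project.",
}
print(find_entity(store, "root"))
print(find_entity(store, "Alice"))
```

The case-insensitive match is a design choice for user-typed names; a plain store.get(name) works when the exact key is known.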

That is all about using entity memory in the LangChain framework.

Conclusion

To use entity memory in LangChain, install the required modules, set up the OpenAI environment, and import the libraries needed to build the models. After that, build the LLM and store entities in the memory by providing information about them. The user can then extract information about these entities and also use entity memory in chains, where the stored information about each entity is available to the model. This post has elaborated on the process of using entity memory in LangChain.