The base class provides two methods, embed_query() and embed_documents(). The first operates on a single text, whereas the second operates on a list of texts.
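To make the difference between the two methods concrete, here is a minimal sketch of the same two-method interface. The ToyEmbeddings class is hypothetical and is not part of LangChain: it derives its numbers from a hash, so the vectors carry no meaning. It only illustrates what goes in and what comes out.

```python
import hashlib
from typing import List


class ToyEmbeddings:
    """Hypothetical stand-in that mimics the two-method interface.

    The numbers come from a hash, so they are not real embeddings.
    """

    def __init__(self, size: int = 8) -> None:
        self.size = size  # number of floats per vector (at most 32 here)

    def embed_query(self, text: str) -> List[float]:
        # One input string in, one vector (a flat list of floats) out.
        digest = hashlib.sha256(text.encode("utf-8")).digest()
        return [byte / 255.0 for byte in digest[: self.size]]

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        # A list of strings in, one vector per string out.
        return [self.embed_query(text) for text in texts]


toy = ToyEmbeddings()
single = toy.embed_query("This is for demonstration.")
batch = toy.embed_documents(["first text", "second text"])

print(len(single))  # number of floats in one vector
print(len(batch))   # number of vectors, one per input string
```

The point to notice is the return shape: embed_query() gives back one flat list, while embed_documents() gives back a list of such lists.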

This article gives a practical demonstration of embedding in LangChain using the OpenAI text embeddings.

Example: Obtaining the OpenAI Text Embedding for a Single Input Text

For the first illustration, we input a single text string and retrieve the OpenAI text embedding for it. The program begins by installing the needed libraries.

The first library that we need to install into our project is LangChain. It is not part of the Python standard library, so we have to install it separately. Since langchain is available on PyPI, we can install it using the pip command on the terminal. Thus, we run the “pip install langchain” command to install the LangChain library.

The library is installed as soon as the requirements are satisfied.

We also need the OpenAI library installed in our project so we can access the OpenAI models. This library can be installed with the “pip install openai” command.

Now, both the required libraries are installed into our project file. We have to import the required modules.

import os

from langchain.embeddings.openai import OpenAIEmbeddings

os.environ["OPENAI_API_KEY"] = "sk-YOUR_API_KEY"

To obtain the OpenAI embeddings, we import the OpenAIEmbeddings class from the “langchain.embeddings.openai” package. Then, we set the API key as an environment variable. We need the secret API key to access the different OpenAI models. This key can be generated from the OpenAI platform. Simply sign up and obtain a secret key from your profile’s “view secret key” section. This key can be used across different projects for a specific client.

Environment variables store the API keys for a particular environment so that they are not hardcoded into the functions. To set the API key as an environment variable, we import the “os” module. os.environ is a dictionary-like mapping rather than a method, so we assign to it using a name and a value. The name that we set is “OPENAI_API_KEY” and the value is the secret key.
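The following sketch shows one way to set the variable and read it back, failing with a clear message when it is missing. The get_openai_api_key() helper is a hypothetical name used for illustration only, and the key shown is a placeholder.

```python
import os


def get_openai_api_key() -> str:
    """Return the key stored in OPENAI_API_KEY or fail with a clear message."""
    key = os.environ.get("OPENAI_API_KEY")
    if not key:
        raise RuntimeError("OPENAI_API_KEY is not set in this environment.")
    return key


# os.environ behaves like a dictionary: assign with a name and a value.
os.environ["OPENAI_API_KEY"] = "sk-YOUR_API_KEY"  # placeholder, not a real key

print(get_openai_api_key() == "sk-YOUR_API_KEY")
```

Reading the key through os.environ.get() avoids a bare KeyError when the variable was never set, which makes a missing key easier to diagnose.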

model = OpenAIEmbeddings()

input_text = "This is for demonstration."

outcome = model.embed_query(input_text)

print(outcome)

print(len(outcome))

With the OpenAI embedding wrapper imported, we call the constructor of the OpenAIEmbeddings class and keep the resulting object in model. OpenAI provides a variety of embedding models, and their usage is billed to your OpenAI account. Here, we go with the default embedding model, i.e. text-embedding-ada-002. When you do not provide any model name as a parameter, the default model is used.

Then, we specify the text whose embedding we want to obtain. The text “This is for demonstration.” is stored in the input_text variable. After that, we call the embed_query() method on the model and pass input_text as its parameter. The retrieved embedding is assigned to the outcome variable.

Lastly, to display the result, we use Python’s print() function. We simply pass it the object that stores the value that we want to display. We invoke this function twice: first to display the list of floating-point numbers, and second to print the number of values in that list using the len() function.
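Printing the whole list produces a very long output, so a short summary can be easier to read. The sketch below assumes a hypothetical summarize_embedding() helper and uses placeholder numbers instead of a real embedding. The length of 1536 matches the documented vector size of text-embedding-ada-002.

```python
from typing import List


def summarize_embedding(vector: List[float], preview: int = 5) -> str:
    """Describe a vector by its length and its first few values."""
    head = ", ".join(f"{value:.4f}" for value in vector[:preview])
    return f"{len(vector)} values, starting with [{head}, ...]"


# Placeholder numbers only; a real embedding comes from embed_query().
outcome = [0.01 * i for i in range(1536)]

print(summarize_embedding(outcome))
```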

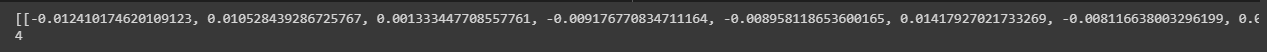

The list of floating values can be seen in the following snapshot with the length of these values:

Example: Obtaining the OpenAI Text Embeddings for Multiple Input Texts/Documents

Apart from obtaining the embedding for a single input text, we can also retrieve embeddings for multiple input strings. This illustration shows how.

We already installed the libraries in the previous illustration. Another library that we need to install here is Python’s tiktoken library. Run the “pip install tiktoken” command on the terminal to install it.

The tiktoken package is a Byte Pair Encoding (BPE) tokenizer. It is used with the OpenAI models and breaks down text into tokens. It is needed here because the provided strings are sometimes too long for the specified OpenAI model, so the text is encoded into tokens and split into pieces that fit. Now, let’s work on the main project.
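The idea of splitting an over-long input into pieces that fit a limit can be sketched as follows. This is not how tiktoken or LangChain implement it: whitespace splitting stands in for a real BPE tokenizer, and split_into_chunks() and the limit of 4 are assumptions made for illustration.

```python
from typing import List


def split_into_chunks(tokens: List[str], max_tokens: int) -> List[List[str]]:
    """Split a token list into consecutive chunks of at most max_tokens."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    return [tokens[i : i + max_tokens] for i in range(0, len(tokens), max_tokens)]


# Whitespace splitting is only a stand-in for a real BPE tokenizer.
tokens = "This string is a bit too long for our tiny limit".split()
chunks = split_into_chunks(tokens, max_tokens=4)

print(chunks)
```

Every chunk stays within the limit, and joining the chunks in order gives back the original token sequence.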

from langchain.embeddings.openai import OpenAIEmbeddings

model = OpenAIEmbeddings(openai_api_key="sk-YOUR_API_KEY")

strings = ["This is for demonstration.", "This string is also for demonstration.", "This is another demo string.", "This one is the last string."]

result = model.embed_documents(strings)

print(result)

print(len(result))

The OpenAIEmbeddings class is imported from the “langchain.embeddings.openai” package. In the previous example, we set the API key as the environment variable. But for this one, we pass it directly to the constructor. So, we don’t have to import the “os” module here.

After creating the OpenAIEmbeddings model with the secret API key passed to its constructor, we specify the text strings in the next line. Here, we store four text strings in a list named strings. These strings are “This is for demonstration.”, “This string is also for demonstration.”, “This is another demo string.”, and “This one is the last string.”

You can specify multiple strings by separating them with commas inside the list. In the previous instance, the embed_query() method is called, but we cannot use it here as it only works for a single text string. To embed multiple strings, the method that we have is embed_documents(). So, we call it on the model with the list of text strings as its argument. The output is kept in the result variable. Finally, to display the output, Python’s print() function is called with result as its parameter. We also print len(result), which gives the number of embeddings that are returned, one for each input string.
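The shape of the returned value can be checked with a small sketch. The vectors below are short placeholder lists rather than real embeddings, and the sketch assumes that the embeddings come back in the same order as the input strings.

```python
strings = ["This is for demonstration.", "This is another demo string."]

# Placeholder vectors with three values each; real ones are much longer.
result = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]

print(len(result))     # number of embeddings, one per input string
print(len(result[0]))  # number of values in the first embedding

# Pair every input string with its vector, relying on matching order.
for text, vector in zip(strings, result):
    print(text, "->", len(vector), "values")
```

Note that len(result) counts the embeddings, while len(result[0]) gives the number of floating-point values in a single embedding.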

The retrieved output is provided in the following image:

Conclusion

This post discussed the concept of embedding in LangChain. We learned what embedding is and how it works, and walked through a practical implementation of embedding text strings in two illustrations. The first example retrieved the embedding of a single text string, and the second example showed how to obtain the embeddings of multiple input strings using the OpenAI embedding model.