This guide shows how to use conversation summary memory in LangChain.

How to Use Conversation Summary in LangChain?

LangChain provides the ConversationSummaryMemory class, which uses an LLM to keep a running summary of a chat or conversation. The summary captures the main information in the conversation, so there is no need to read every message in the chat.

To use the conversation summary in LangChain, follow these steps:

Step 1: Install Modules

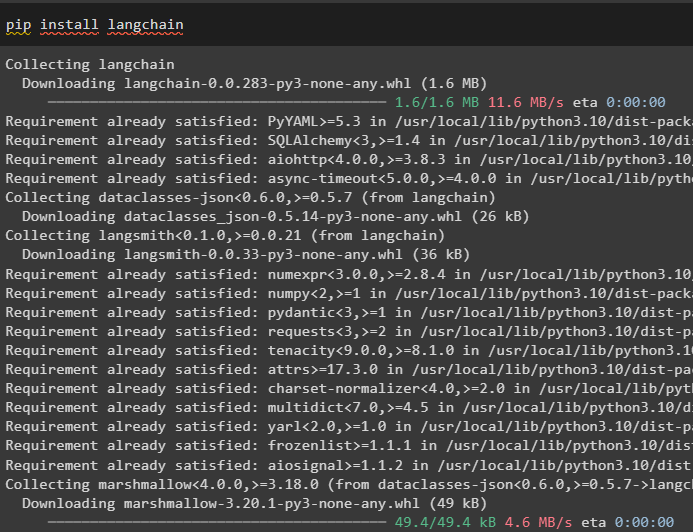

First, install the LangChain framework and its dependencies using the pip command:

pip install langchain

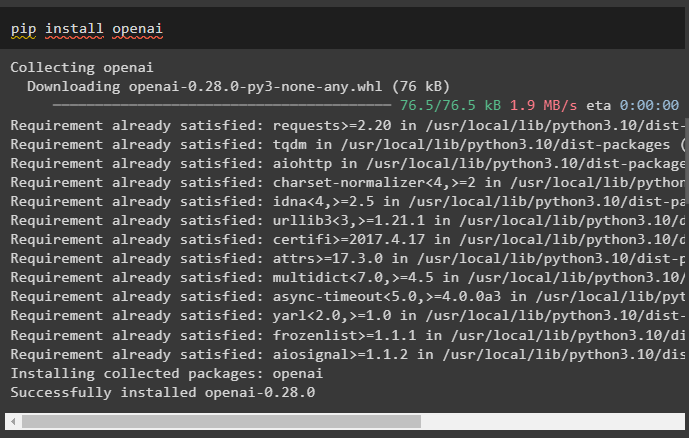

Next, install the OpenAI module using the pip command:

pip install openai

After installing the modules, get an API key from your OpenAI account and store it in the OPENAI_API_KEY environment variable:

import os
import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
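In a script that may run more than once, it can be convenient to prompt for the key only when it is not already set. The following is a minimal sketch of that idea; the helper name `ensure_api_key` and its `prompt` parameter are hypothetical and are not part of LangChain or the OpenAI package:

```python
import getpass
import os


def ensure_api_key(name="OPENAI_API_KEY", prompt=getpass.getpass):
    """Return the key stored in os.environ[name], prompting only if it is missing."""
    if not os.environ.get(name):
        # getpass hides the typed key so it does not appear on screen.
        os.environ[name] = prompt(f"{name}: ")
    return os.environ[name]
```

Calling `ensure_api_key()` once before creating the model leaves an existing key untouched and asks for one otherwise.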

Step 2: Using Conversation Summary

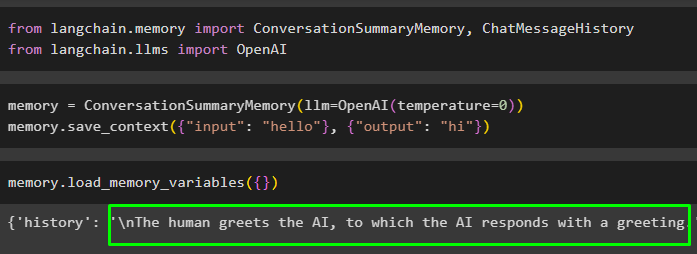

Start by importing the required classes from LangChain:

from langchain.memory import ConversationSummaryMemory, ChatMessageHistory
from langchain.llms import OpenAI

Create the memory with ConversationSummaryMemory(), passing an OpenAI() model that writes the summaries, and save an exchange in it:

memory = ConversationSummaryMemory(llm=OpenAI(temperature=0))
memory.save_context({"input": "hello"}, {"output": "hi"})

Call the load_memory_variables() method to read the summary back from the memory:

memory.load_memory_variables({})

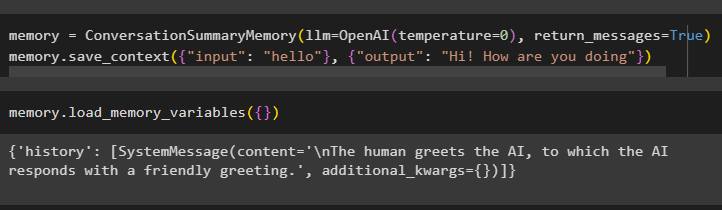
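Conceptually, the memory keeps one running summary and rewrites it after every saved exchange. The sketch below is a toy model of that idea, not LangChain's implementation: `ToySummaryMemory` and the `summarize` callable are hypothetical stand-ins, and a real setup would call an LLM where the stand-in only counts exchanges. Only the method names and the `history` key mirror the interface used above.

```python
class ToySummaryMemory:
    """Toy stand-in for a summary memory (hypothetical, for illustration only)."""

    def __init__(self, summarize):
        # summarize(previous_summary, new_lines) -> new summary; an LLM call in practice.
        self.summarize = summarize
        self.buffer = ""

    def save_context(self, inputs, outputs):
        # Format the new exchange and fold it into the running summary.
        new_lines = f"Human: {inputs['input']}\nAI: {outputs['output']}"
        self.buffer = self.summarize(self.buffer, new_lines)

    def load_memory_variables(self, _inputs):
        # The summary is exposed under the "history" key.
        return {"history": self.buffer}


def fake_summarize(previous, new_lines):
    # Stand-in for the LLM: just counts the exchanges seen so far.
    count = int(previous.split()[0]) + 1 if previous else 1
    return f"{count} exchange(s) summarized"


memory = ToySummaryMemory(fake_summarize)
memory.save_context({"input": "hello"}, {"output": "hi"})
memory.save_context({"input": "how are you?"}, {"output": "fine"})
print(memory.load_memory_variables({}))
```

The point of the design is that the stored text stays roughly the same size no matter how many exchanges are saved, because each call replaces the summary instead of appending to a transcript.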

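The memory can also return its contents as message objects rather than a plain string by setting return_messages=True:

memory = ConversationSummaryMemory(llm=OpenAI(temperature=0), return_messages=True)
memory.save_context({"input": "hello"}, {"output": "Hi! How are you doing"})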

Execute the load_memory_variables() method again to get the stored history as a list of messages:

memory.load_memory_variables({})

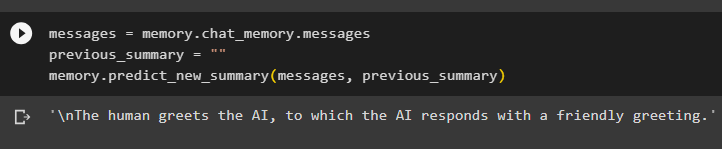

To generate a summary directly, take the messages stored in the memory and pass them to predict_new_summary() together with the previous summary, which is empty here:

messages = memory.chat_memory.messages
previous_summary = ""
memory.predict_new_summary(messages, previous_summary)

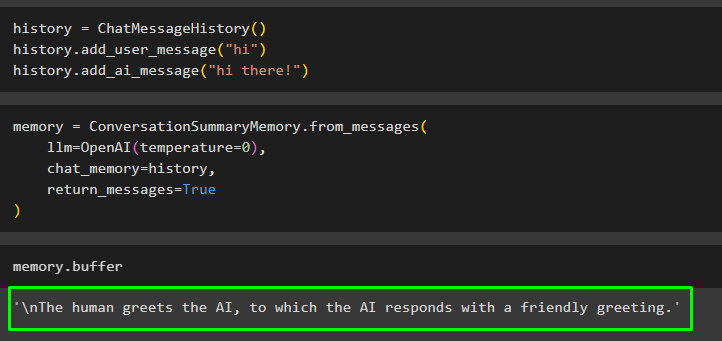
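predict_new_summary() takes the new messages and the previous summary and asks the LLM for an updated summary. A rough sketch of how such a request could be assembled is shown below; the prompt wording and the helper names `format_messages` and `build_summary_prompt` are assumptions made for illustration and do not reproduce LangChain's actual template.

```python
def format_messages(messages):
    """Render (role, text) pairs as a transcript, one line per message."""
    return "\n".join(f"{role}: {text}" for role, text in messages)


def build_summary_prompt(messages, previous_summary):
    """Combine the previous summary and the new lines into one prompt string."""
    return (
        "Progressively summarize the conversation, extending the current summary.\n\n"
        f"Current summary:\n{previous_summary}\n\n"
        f"New lines of conversation:\n{format_messages(messages)}\n\n"
        "New summary:"
    )


messages = [("Human", "hello"), ("AI", "Hi! How are you doing")]
print(build_summary_prompt(messages, previous_summary=""))
```

Passing a non-empty `previous_summary` is what lets the summary grow incrementally: the model only needs the old summary and the newest lines, not the full transcript.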

Step 3: Using Conversation Summary With Existing Messages

The user can also summarize a conversation that already exists outside the memory by using the ChatMessageHistory() class. Messages added to this history can be passed to the memory so that it automatically generates a summary of the complete conversation:

history = ChatMessageHistory()
history.add_user_message("hi")
history.add_ai_message("hi there!")

Build the memory from these existing messages with from_messages(), passing the LLM created with OpenAI() and the history as the chat_memory argument:

memory = ConversationSummaryMemory.from_messages(
    llm=OpenAI(temperature=0),
    chat_memory=history,
    return_messages=True
)

Read the buffer attribute of the memory to get the summary of the existing messages:

memory.buffer
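When a summary is built from an existing message history, the messages can be folded into the summary a few at a time rather than all at once. The sketch below illustrates that loop in plain Python; `summarize_history`, the `step` parameter, and the `summarize` callable are hypothetical names, and the stand-in summarizer only records how many messages each call received.

```python
def summarize_history(messages, summarize, step=2):
    """Fold messages into a running summary, `step` messages per call."""
    summary = ""
    for start in range(0, len(messages), step):
        chunk = messages[start:start + step]
        # Each call sees the previous summary plus the next chunk of messages.
        summary = summarize(summary, chunk)
    return summary


def fake_summarize(previous, chunk):
    # Stand-in for the LLM: append the chunk size to the running summary.
    return f"{previous}[{len(chunk)}]"


history = ["hi", "hi there!", "what can you do?", "I can answer questions.", "thanks"]
print(summarize_history(history, fake_summarize))  # prints [2][2][1]
```

Working in small chunks keeps each request short, at the cost of one summarization call per chunk.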

A previously computed summary can also be supplied through the buffer parameter when constructing the memory, so the existing messages do not have to be summarized again:

memory = ConversationSummaryMemory(
    llm=OpenAI(temperature=0),
    buffer="""The human asks the machine about itself
The system replies that AI is built for good as it can help humans to achieve their potential""",
    chat_memory=history,
    return_messages=True
)

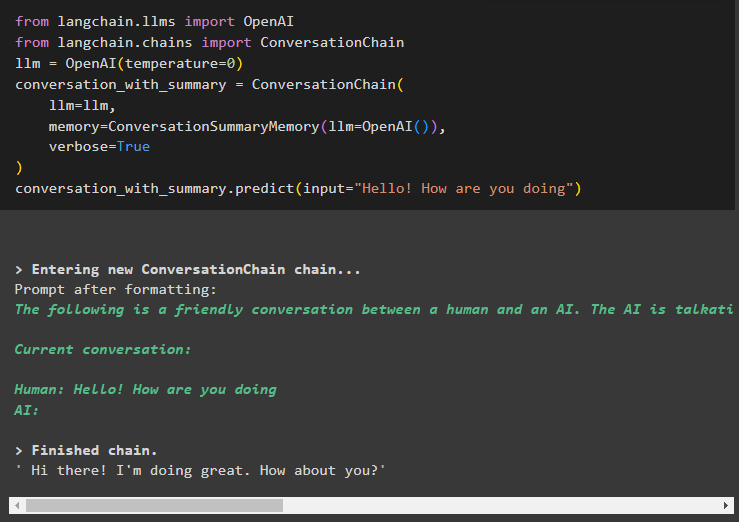

Step 4: Using Conversation Summary in Chain

The conversation summary memory can also be used inside a chain. Create a ConversationChain with the LLM and the summary memory, and then send the first message:

from langchain.chains import ConversationChain

llm = OpenAI(temperature=0)
conversation_with_summary = ConversationChain(
    llm=llm,
    memory=ConversationSummaryMemory(llm=OpenAI()),
    verbose=True
)
conversation_with_summary.predict(input="Hello! How are you doing")

Here, the chain starts the conversation with a courteous inquiry:

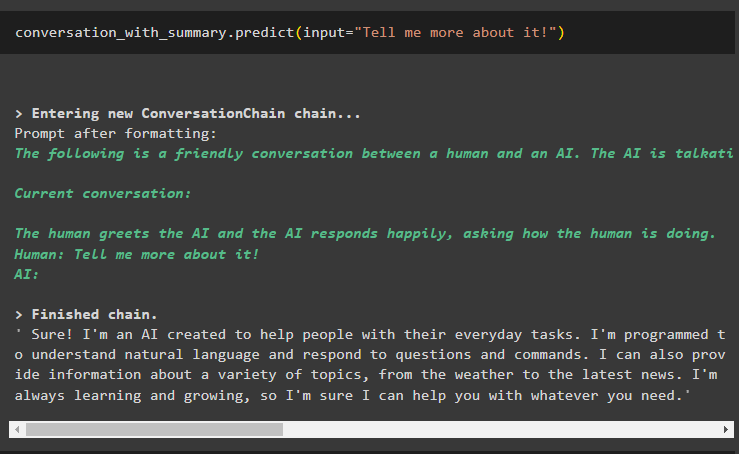

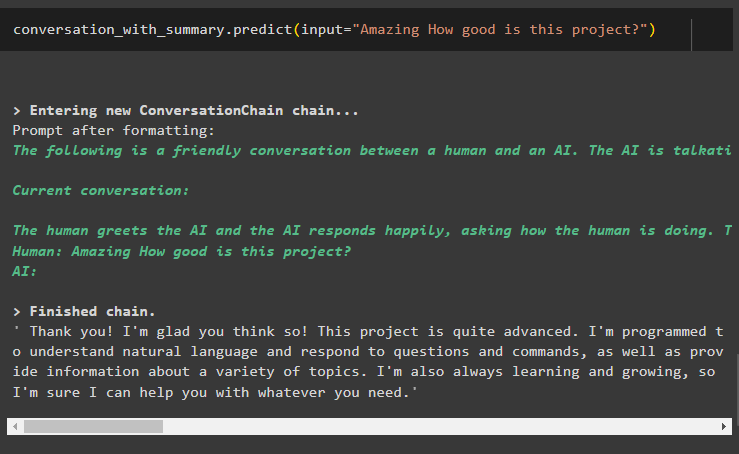
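On each call, the chain roughly does three things: it loads the current summary from memory, sends it to the model together with the new input, and then updates the summary with the new exchange. The sketch below imitates that cycle in plain Python; `ToyChain`, `fake_llm`, and `fake_summarize` are hypothetical stand-ins, and no real model is called.

```python
class ToyChain:
    """Toy conversation loop with a running summary (illustrative only)."""

    def __init__(self, llm, summarize):
        self.llm = llm              # callable: prompt -> reply
        self.summarize = summarize  # callable: (summary, human, ai) -> summary
        self.summary = ""

    def predict(self, input):  # parameter name mirrors predict(input=...) above
        # 1. Build the prompt from the summary so far and the new input.
        prompt = f"Summary so far: {self.summary}\nHuman: {input}\nAI:"
        # 2. Ask the model for a reply.
        reply = self.llm(prompt)
        # 3. Fold the new exchange into the summary for the next turn.
        self.summary = self.summarize(self.summary, input, reply)
        return reply


def fake_llm(prompt):
    # Stand-in model: reports how many turns the summary line mentions.
    earlier = prompt.splitlines()[0].count("turn")
    return f"reply after {earlier} earlier turn(s)"


def fake_summarize(summary, human, ai):
    # Stand-in summarizer: one marker word per completed turn.
    return (summary + " turn").strip()


chain = ToyChain(fake_llm, fake_summarize)
print(chain.predict(input="Hello! How are you doing"))
print(chain.predict(input="Tell me more"))
```

Because the model only ever sees the summary and the latest input, earlier turns influence the reply through the summary alone.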

Continue the conversation by asking the model to expand on its last output:

The model elaborates on its last message with a detailed introduction to the AI technology or chatbot:

Pick a point of interest from the previous output to take the conversation in a specific direction:

The bot returns detailed answers while the conversation summary memory keeps track of the context:

That is all about using the conversation summary in LangChain.

Conclusion

To use the conversation summary memory in LangChain, install the required modules and set up the environment with the OpenAI API key. Once the environment is set, import the ConversationSummaryMemory class and create the memory with an LLM built using the OpenAI() method. After that, save messages in the memory, or load existing ones, and read back the summary of the previous conversation, either directly or inside a ConversationChain. This guide has covered each of these steps using the LangChain module.