This guide will illustrate the process of using the conversation knowledge graph in LangChain.

How to Use Conversation Knowledge Graph in LangChain?

ConversationKGMemory is a LangChain memory class that uses an LLM to extract a knowledge graph of facts from the conversation and then recalls the relevant facts as context for later interactions. To learn the process of using the conversation knowledge graph in LangChain, simply go through the listed steps:

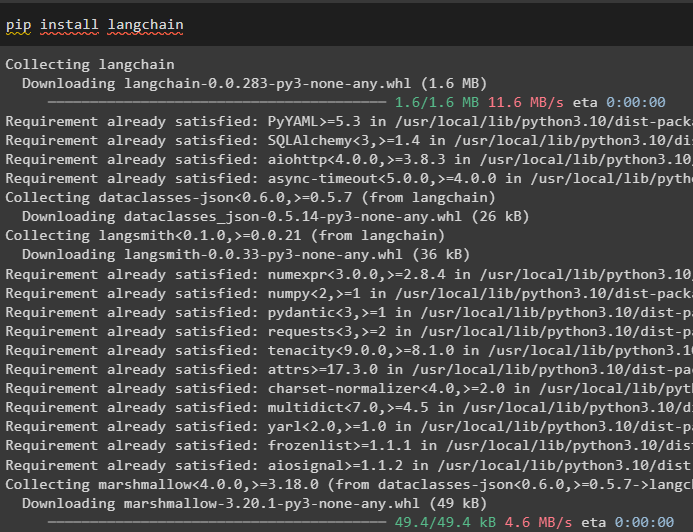
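To make the idea concrete before the steps, here is a minimal, stdlib-only sketch of the kind of structure a conversation knowledge graph holds: facts stored as (subject, predicate, object) triples and looked up by entity. ToyKnowledgeGraph and its methods are hypothetical names, and this is not LangChain's implementation. ConversationKGMemory asks an LLM to extract the triples, whereas here they are added by hand.

```python
from collections import defaultdict


class ToyKnowledgeGraph:
    """Hypothetical stand-in for a conversation knowledge graph.

    Facts are (subject, predicate, object) triples indexed by their subject.
    """

    def __init__(self):
        self._facts = defaultdict(list)  # subject -> [(predicate, object), ...]

    def add_triple(self, subject, predicate, obj):
        # Skip facts that are already stored.
        if (predicate, obj) not in self._facts[subject]:
            self._facts[subject].append((predicate, obj))

    def knowledge_about(self, entity):
        # Render each stored fact about the entity as a short sentence.
        return [f"{entity} {pred} {obj}" for pred, obj in self._facts.get(entity, [])]


kg = ToyKnowledgeGraph()
kg.add_triple("John", "is a", "friend")
kg.add_triple("John", "is a", "friend")  # duplicate, ignored
print(kg.knowledge_about("John"))  # ['John is a friend']
print(kg.knowledge_about("Mary"))  # []
```

Indexing by subject keeps the "what do we know about X?" lookup cheap, which is the question a conversation memory asks on every turn.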

Step 1: Install Modules

First, start the process of using the conversation knowledge graph by installing the LangChain module with the pip command:

pip install langchain

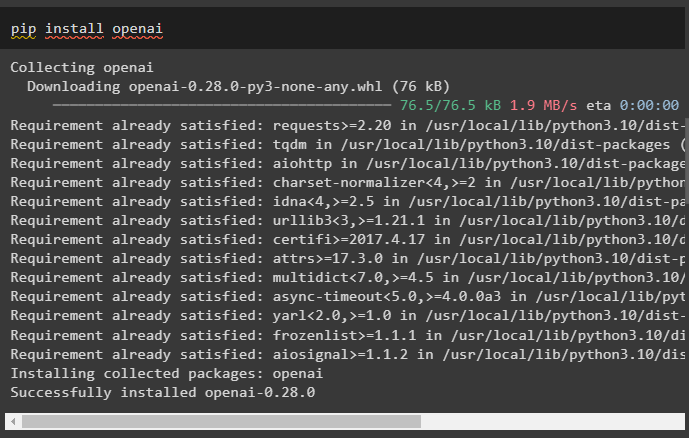

Next, install the OpenAI module with the pip command to get its libraries for building Large Language Models:

pip install openai

Now, set up the environment with the OpenAI API key, which can be generated from your OpenAI account:

import os

import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
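If the key is already exported in the shell, prompting for it every time is unnecessary. The sketch below is a small variation on the snippet above (the helper name ensure_openai_key is hypothetical): it reuses an existing OPENAI_API_KEY and only falls back to getpass when the variable is missing. LangChain's OpenAI wrapper reads the key from this environment variable.

```python
import getpass
import os


def ensure_openai_key(var_name="OPENAI_API_KEY"):
    """Return the API key from the environment, prompting only if it is unset."""
    key = os.environ.get(var_name)
    if not key:
        # getpass hides the typed key instead of echoing it to the terminal.
        key = getpass.getpass("OpenAI API Key:")
        os.environ[var_name] = key
    return key
```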

Step 2: Using Memory With LLMs

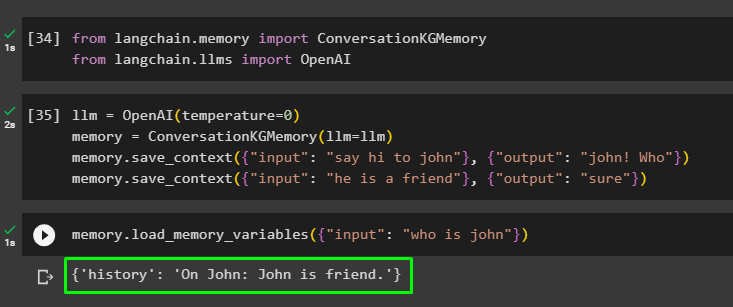

Once the modules are installed, start using the memory with an LLM by importing the required classes from the LangChain module:

from langchain.llms import OpenAI

from langchain.memory import ConversationKGMemory

Build the LLM with OpenAI() and configure the memory with ConversationKGMemory(), passing it the LLM so the memory can extract knowledge from the conversation. After that, store a few conversation turns with save_context(), each pairing an input with its response; the memory builds its knowledge graph from this data:

llm = OpenAI(temperature=0)

memory = ConversationKGMemory(llm=llm)

memory.save_context({"input": "say hi to john"}, {"output": "john! Who"})

memory.save_context({"input": "he is a friend"}, {"output": "sure"})

Test the memory by calling the load_memory_variables() method with a query related to the above data:

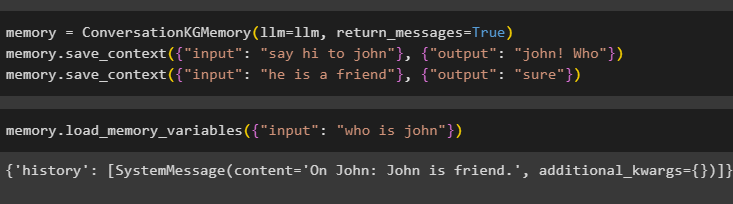
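Conceptually, load_memory_variables() finds the entities mentioned in the query and returns what the graph knows about them under the history key. The stdlib sketch below imitates that behavior with a hand-filled dictionary. The function load_history is hypothetical, and both the "On <entity>: ..." phrasing and the naive word matching are simplifying assumptions; in LangChain an LLM decides which entities the query refers to.

```python
def load_history(facts, query, memory_key="history"):
    """Hypothetical imitation of a knowledge graph memory lookup.

    facts maps an entity name to a list of fact strings about it.
    """
    summaries = []
    words = query.lower().split()
    for entity, knowledge in facts.items():
        # Naive entity detection: case-insensitive whole-word match in the query.
        if entity.lower() in words and knowledge:
            summaries.append(f"On {entity}: {'. '.join(knowledge)}.")
    return {memory_key: "\n".join(summaries)}


facts = {"John": ["John is a friend"], "Mary": ["Mary is a doctor"]}
print(load_history(facts, "who is john"))  # {'history': 'On John: John is a friend.'}
```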

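Configure the memory again with ConversationKGMemory(), this time setting the return_messages argument to True so that the history is returned as a list of chat messages instead of a single string:

memory = ConversationKGMemory(llm=llm, return_messages=True)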

memory.save_context({"input": "say hi to john"}, {"output": "john! Who"})

memory.save_context({"input": "he is a friend"}, {"output": "sure"})
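The return_messages flag changes the shape of what the memory hands back: one history string, or a list of chat messages. In this rough sketch, format_context is a hypothetical helper and plain dictionaries stand in for LangChain's message objects (an assumption for illustration):

```python
def format_context(summaries, return_messages=False):
    """Hypothetical helper: shape knowledge summaries as a string or a message list."""
    if return_messages:
        # One system-style message per summary; dicts stand in for message objects.
        return [{"role": "system", "content": text} for text in summaries]
    # Otherwise join everything into a single history string.
    return "\n".join(summaries)


summaries = ["On John: John is a friend."]
print(format_context(summaries))
print(format_context(summaries, return_messages=True))
```

The message form suits chat models, which take a list of messages, while the string form can be dropped straight into a text prompt.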

Test the memory again by passing the query as the value of the input key:

memory.load_memory_variables({"input": "who is john"})

Now, test the memory with a question that the saved conversation does not answer, so the model has no stored information to respond with:

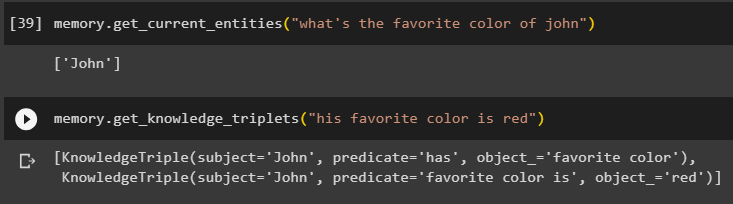
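The reason the memory cannot answer is easy to see with a toy example: the question names a known entity, but no stored triple has the requested relation. The function answer_from_triples and the "favorite color" question are hypothetical illustrations; in LangChain the LLM, not exact string matching, decides what the stored knowledge covers.

```python
def answer_from_triples(triples, entity, predicate):
    """Return the stored object for (entity, predicate), or None when unknown."""
    for subj, pred, obj in triples:
        if subj == entity and pred == predicate:
            return obj
    # No matching triple: the memory has nothing to base an answer on.
    return None


triples = [("John", "is a", "friend")]
print(answer_from_triples(triples, "John", "is a"))            # friend
print(answer_from_triples(triples, "John", "favorite color"))  # None
```

Once a triple such as ("John", "favorite color", "red") is stored, the same lookup succeeds; adding new triples from later messages is what closes the gap.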

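Then respond to the previous query using the get_knowledge_triplets() method, which uses the previous messages as context to extract knowledge triplets (subject, predicate, object) from a new message.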
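Turning the model's text into structured triples is a parsing step. The sketch below parses strings such as "(John, favorite color, red)"; this input format, the Triple type, and parse_triples are assumptions for illustration, not LangChain's guaranteed output format or API.

```python
import re
from typing import List, NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    object_: str


# Matches "(a, b, c)" and trims whitespace around each of the three parts.
_TRIPLE_RE = re.compile(r"\(\s*([^,()]+?)\s*,\s*([^,()]+?)\s*,\s*([^,()]+?)\s*\)")


def parse_triples(text: str) -> List[Triple]:
    """Extract every "(subject, predicate, object)" group from the text."""
    return [Triple(*match.groups()) for match in _TRIPLE_RE.finditer(text)]


print(parse_triples("(John, favorite color, red) (John, is a, friend)"))
```

Because the regex scans for parenthesized groups, any separator between triples is simply skipped.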

Step 3: Using Memory in Chain

The next step uses the knowledge graph memory inside a chain. Build the LLM with OpenAI(), then configure a prompt template that defines the structure of the conversation: the {history} placeholder receives the knowledge retrieved by the memory, and {input} receives the user's message:

from langchain.prompts.prompt import PromptTemplate

from langchain.chains import ConversationChain

llm = OpenAI(temperature=0)

template = """This is the template for the interaction between a human and a machine

The system is an AI model that can talk about or extract information on many topics

If it does not understand the question or does not have the answer, it simply says so

The system extracts data stored in the "Specific" section and does not hallucinate

Specific:

{history}

Conversation:

Human: {input}

AI:"""

# Build the prompt template: the memory fills {history} and the user's message fills {input}

prompt = PromptTemplate(input_variables=["history", "input"], template=template)

conversation_with_kg = ConversationChain(
    llm=llm, verbose=True, prompt=prompt, memory=ConversationKGMemory(llm=llm)
)

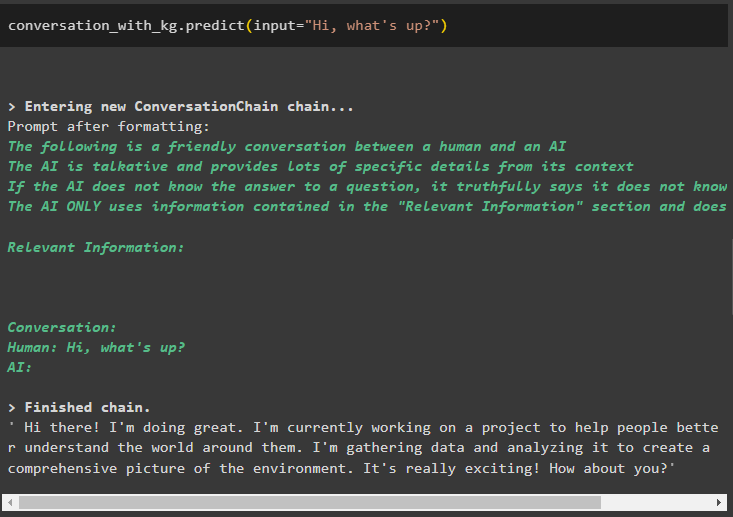
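PromptTemplate fills the {history} and {input} placeholders before each call, and by default it uses Python's str.format-style placeholders. The substitution can therefore be previewed with plain Python; the template below is shortened and the history string is a made-up example.

```python
# Shortened version of the template above.
template = """This is the template for the interaction between a human and a machine

Specific:
{history}

Conversation:
Human: {input}
AI:"""

# Fill both placeholders, roughly as PromptTemplate does for these inputs.
filled = template.format(
    history="On Will: Will is an engineer.",
    input="What do you know about Will?",
)
print(filled)
```

Because str.format treats braces as placeholders, any literal { or } in a custom template has to be doubled ({{ and }}).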

Once the chain is created, call conversation_with_kg with the predict() method, passing the user's query as the input argument:

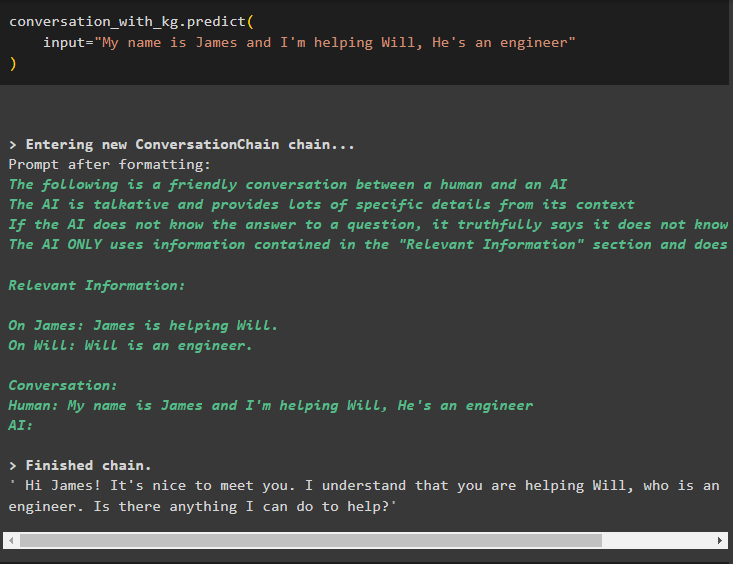
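Each predict() call follows the same cycle: the memory supplies context for the new input, the filled prompt goes to the LLM, and the finished exchange is saved back into memory. The sketch below walks through that cycle with a fake LLM and a tiny hypothetical memory class so it runs without an API key. It illustrates the flow only and is not ConversationChain's source; its regex rule is far cruder than LLM extraction, so the demo uses the simplified sentence "Will is an engineer." instead of the full example sentence.

```python
import re

TEMPLATE = "Specific:\n{history}\n\nConversation:\nHuman: {input}\nAI:"


class ToyKGMemory:
    """Hypothetical memory that remembers sentences shaped like '<Name> is ...'."""

    def __init__(self):
        self.facts = {}  # entity name -> list of fact sentences

    def load_memory_variables(self, inputs):
        # Collect the facts about every remembered entity named in the input.
        text = inputs["input"]
        known = [fact for name, facts in self.facts.items() if name in text for fact in facts]
        return {"history": "\n".join(known)}

    def save_context(self, inputs, outputs):
        # Crude regex stand-in for the LLM-based triple extraction.
        for name, rest in re.findall(r"\b([A-Z][a-z]+) is ([^.?!]+)", inputs["input"]):
            self.facts.setdefault(name, []).append(f"{name} is {rest}")


def fake_llm(prompt):
    """Stand-in for the OpenAI call: answers only from the 'Specific' section."""
    specific = prompt.split("Specific:\n", 1)[1].split("\n\nConversation:", 1)[0]
    return specific.strip() or "I don't know."


def predict(memory, llm, user_input):
    # 1. Ask the memory for knowledge related to the new input.
    history = memory.load_memory_variables({"input": user_input})["history"]
    # 2. Fill the prompt template.
    prompt = TEMPLATE.format(history=history, input=user_input)
    # 3. Call the (fake) LLM.
    output = llm(prompt)
    # 4. Save the exchange so later turns can use it.
    memory.save_context({"input": user_input}, {"output": output})
    return output


memory = ToyKGMemory()
print(predict(memory, fake_llm, "Will is an engineer."))          # I don't know.
print(predict(memory, fake_llm, "What do you know about Will?"))  # Will is an engineer
```

The first turn has no stored knowledge, so the answer is empty; the fact saved after that turn is what lets the second question be answered.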

Now, give the chain some information to remember by passing it as the input argument; the memory extracts knowledge triplets from this message:

conversation_with_kg.predict(
    input="My name is James and I'm helping Will. He's an engineer."
)

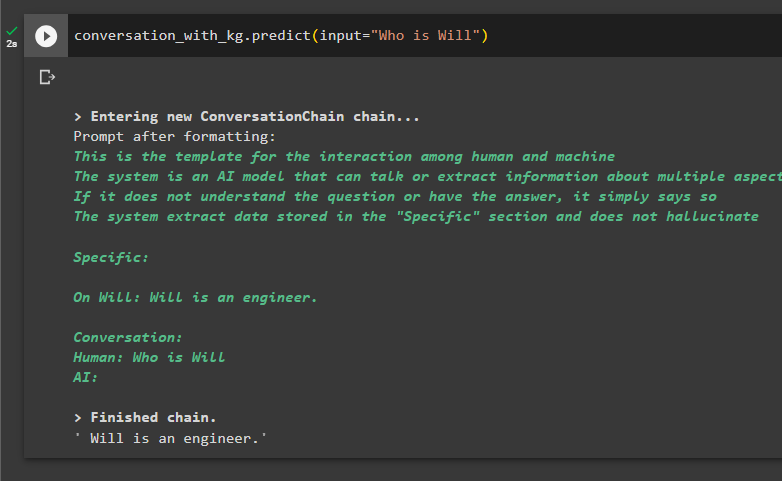

Finally, test the chain by asking questions that require it to extract information from the stored data.

That is all about using the conversation knowledge graph in LangChain.

Conclusion

To use the conversation knowledge graph in LangChain, install the required modules and import the ConversationKGMemory class. After that, build the memory on top of an LLM, store conversation turns in it, and use it inside a ConversationChain so that the model can extract information from the stored conversation. This guide has elaborated on the process of using the conversation knowledge graph in LangChain.