This guide illustrates how to use ConversationBufferMemory in LangChain to store a chat history and pass it back to the model.

How to Use ConversationBufferMemory in LangChain?

The following steps show how to use ConversationBufferMemory in LangChain:

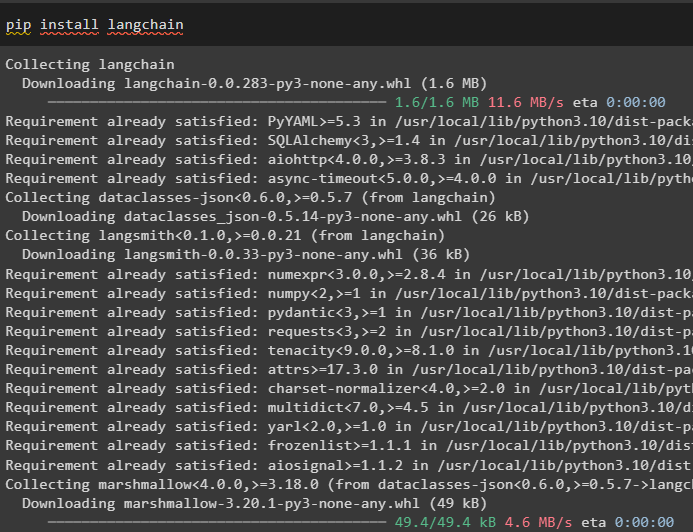

Step 1: Install Modules

First, install the LangChain framework:

pip install langchain

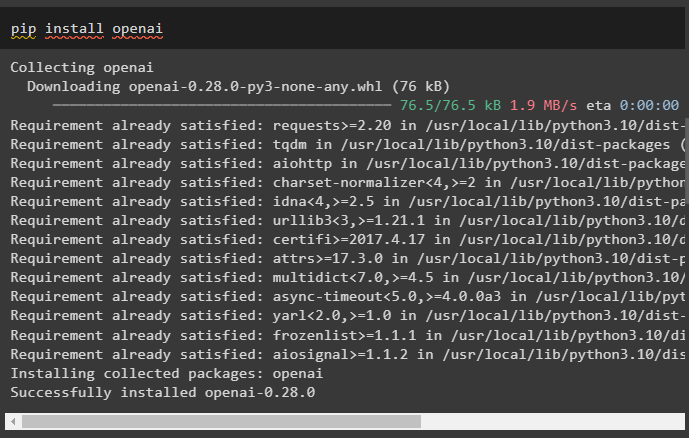

Install the OpenAI module and its dependencies so that the models can be accessed with an API key:

pip install openai

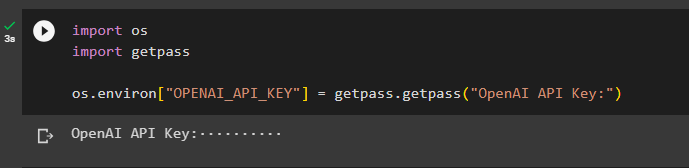

Now, set the OPENAI_API_KEY environment variable using the API key from the OpenAI account:

import os
import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
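When the cell is run more than once, it can be convenient to prompt only if the key is not already set. The following is a minimal sketch of that idea; the helper name ensure_api_key and its prompt_fn parameter are hypothetical and are not part of LangChain or OpenAI:

```python
import getpass
import os


def ensure_api_key(var_name="OPENAI_API_KEY", prompt_fn=getpass.getpass):
    """Return the key stored in var_name, prompting only if it is missing.

    ensure_api_key is an illustrative helper, not a library function.
    """
    key = os.environ.get(var_name)
    if not key:
        # getpass hides the typed characters, so the key is not echoed.
        key = prompt_fn("OpenAI API Key:")
        os.environ[var_name] = key
    return key
```

Calling ensure_api_key() once at the top of a notebook leaves an existing key untouched and asks for one only on the first run.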

Step 2: Using ConversationBufferMemory Library

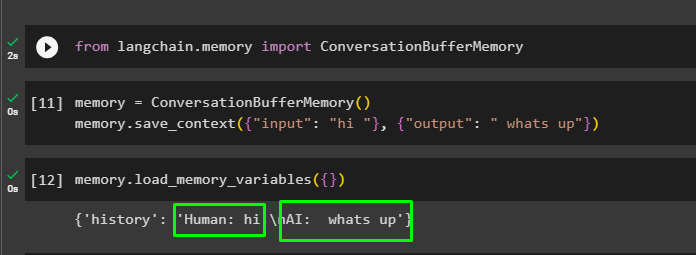

After setting up the requirements for OpenAI, import the ConversationBufferMemory class from LangChain:

from langchain.memory import ConversationBufferMemory

Create a memory object from the imported class and store one exchange in it by passing the input and output values to save_context():

memory = ConversationBufferMemory()
memory.save_context({"input": "hi "}, {"output": " whats up"})

Call the load_memory_variables() method on the memory object to get the chat history stored in it:

memory.load_memory_variables({})
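To make the behaviour concrete, here is a minimal pure-Python sketch of what a buffer memory does with each saved exchange. TinyBufferMemory is a hypothetical stand-in written for illustration, not LangChain's implementation; the "Human" and "AI" prefixes are assumed to mirror LangChain's defaults:

```python
class TinyBufferMemory:
    """Illustrative stand-in for a buffer memory; not LangChain's own class."""

    def __init__(self):
        self.turns = []  # list of (speaker, text) tuples, oldest first

    def save_context(self, inputs, outputs):
        # Store one exchange: the human input followed by the AI output.
        self.turns.append(("Human", inputs["input"]))
        self.turns.append(("AI", outputs["output"]))

    def load_memory_variables(self, _inputs):
        # Join every stored turn into a single transcript string.
        history = "\n".join(f"{who}: {text}" for who, text in self.turns)
        return {"history": history}


memory = TinyBufferMemory()
memory.save_context({"input": "hi"}, {"output": "whats up"})
print(memory.load_memory_variables({}))
# prints {'history': 'Human: hi\nAI: whats up'}
```

The point of the sketch is that nothing is summarized or trimmed: every saved turn is kept and returned under the history key.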

Step 3: Testing Buffer Memory

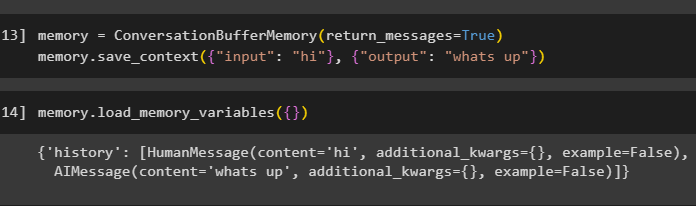

Now create ConversationBufferMemory with the return_messages=True argument so that the history is returned as a list of separate human and AI messages instead of a single string:

memory = ConversationBufferMemory(return_messages=True)
memory.save_context({"input": "hi"}, {"output": "whats up"})

Load the memory variables again to display the stored chat history:

memory.load_memory_variables({})
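The difference between the two return formats can be sketched in plain Python. Message and TinyMessageMemory below are hypothetical names used only for illustration; LangChain's own message classes carry more fields than this:

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str      # "human" or "ai"
    content: str   # text of the message


class TinyMessageMemory:
    """Illustrative stand-in showing both history formats; not LangChain code."""

    def __init__(self, return_messages=False):
        self.return_messages = return_messages
        self.messages = []

    def save_context(self, inputs, outputs):
        self.messages.append(Message("human", inputs["input"]))
        self.messages.append(Message("ai", outputs["output"]))

    def load_memory_variables(self, _inputs):
        if self.return_messages:
            # One object per message, so the roles stay separate.
            return {"history": list(self.messages)}
        labels = {"human": "Human", "ai": "AI"}
        text = "\n".join(f"{labels[m.role]}: {m.content}" for m in self.messages)
        return {"history": text}


as_text = TinyMessageMemory()
as_list = TinyMessageMemory(return_messages=True)
for mem in (as_text, as_list):
    mem.save_context({"input": "hi"}, {"output": "whats up"})

print(as_text.load_memory_variables({})["history"])
print(as_list.load_memory_variables({})["history"])
```

The list form is the one that suits chat models, which expect a sequence of role-tagged messages rather than one block of text.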

Step 4: Using Buffer Memory in a Chain

ConversationBufferMemory can also be attached to a chain so that the stored history is added to every prompt. Configure the LLM using the OpenAI() class and then pass it, together with the memory, to ConversationChain():

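from langchain.chains import ConversationChain
from langchain.llms import OpenAI  # recent LangChain releases provide this class in the langchain_openai package
from langchain.memory import ConversationBufferMemory

# temperature=0 keeps the responses as deterministic as possible
llm = OpenAI(temperature=0)

conversation = ConversationChain(
    llm=llm,
    verbose=True,  # print the full prompt, including the stored history
    memory=ConversationBufferMemory()
)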
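With verbose=True the chain prints the prompt it sends to the model, and the stored history is part of that prompt. The sketch below shows the idea with ordinary string formatting; TEMPLATE is a simplified approximation written for this example, not the exact prompt text that LangChain uses, and build_prompt is a hypothetical helper:

```python
# Simplified, assumed template: the history and the new input are slotted in.
TEMPLATE = (
    "The following is a friendly conversation between a human and an AI.\n\n"
    "Current conversation:\n{history}\nHuman: {input}\nAI:"
)


def build_prompt(history, user_input):
    """Combine the stored transcript with the new query into one prompt."""
    return TEMPLATE.format(history=history, input=user_input)


prompt = build_prompt("Human: hi\nAI: whats up", "How are you?")
print(prompt)
```

Because the buffer grows with every turn, the prompt grows too, which is worth keeping in mind for long conversations.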

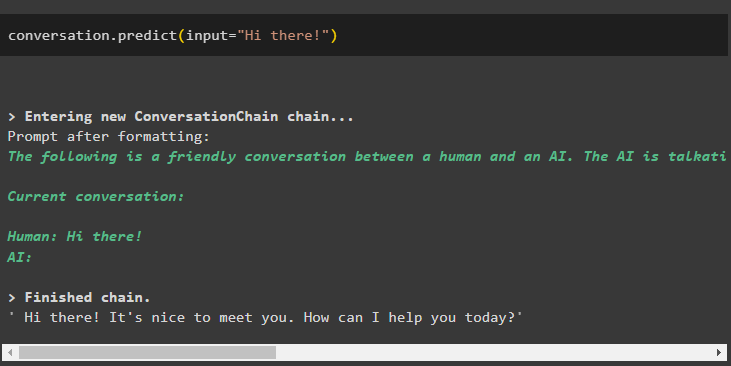

Step 5: Testing the Buffer Memory in a Chain

Next, call the predict() method on the chain, passing the query through its input argument:

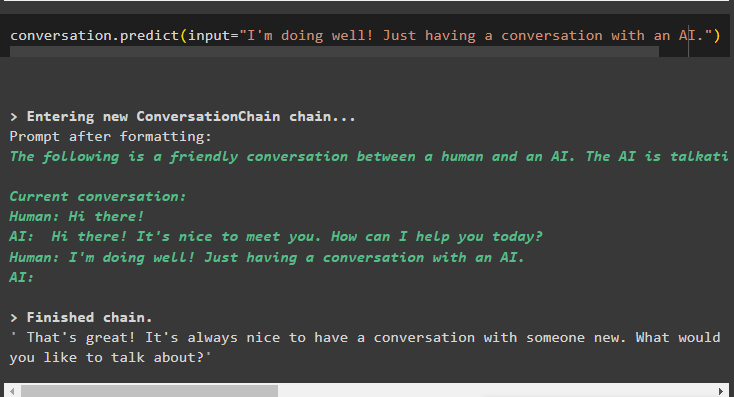

Once the AI has generated a response, continue the conversation by calling predict() again with a new query in the input argument:

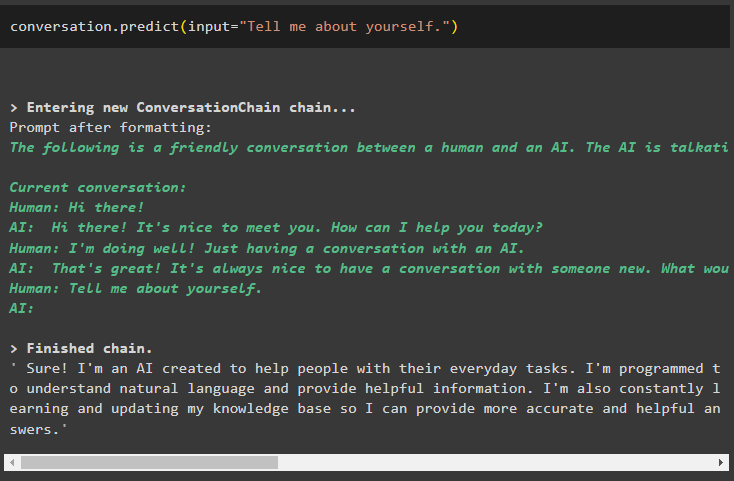

Now, ask a question that depends on the earlier part of the conversation and execute the code:

The model uses the conversation history stored in the buffer memory to answer the question, much as a person would in a chat:
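The way a follow-up question gets its context can be sketched without calling a real model. In the example below, fake_llm is a hypothetical stand-in that only understands one kind of question, and TinyConversation is an illustrative class rather than LangChain's ConversationChain; the queries are made up for the demonstration:

```python
import re


def fake_llm(prompt):
    """Hypothetical stand-in for a model; it can only recall a stated name."""
    # Split the prompt into the earlier transcript and the latest question.
    transcript, question = prompt.rsplit("Human:", 1)
    if "what is my name" in question.lower():
        found = re.findall(r"Human: My name is (\w+)", transcript)
        return f"Your name is {found[-1]}." if found else "I do not know your name."
    return "Nice to meet you!"


class TinyConversation:
    """Illustrative chain: prepend the stored history to every new query."""

    def __init__(self, llm):
        self.llm = llm
        self.history = []  # transcript lines, oldest first

    def predict(self, input):  # parameter named input to mirror predict(input=...)
        prompt = "\n".join(self.history + [f"Human: {input}", "AI:"])
        reply = self.llm(prompt)
        # Save the exchange so the next call can see it.
        self.history += [f"Human: {input}", f"AI: {reply}"]
        return reply


chat = TinyConversation(fake_llm)
first = chat.predict(input="My name is Sam")
second = chat.predict(input="What is my name?")
print(first)   # prints Nice to meet you!
print(second)  # prints Your name is Sam.
```

The second answer is only possible because the first exchange was saved and sent along with the new query; a fresh TinyConversation asked the same question has nothing to draw on.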

That is all about using ConversationBufferMemory in LangChain.

Conclusion

To use ConversationBufferMemory in LangChain, install the LangChain and OpenAI modules and import the ConversationBufferMemory class. Once it is imported, create a memory object, store exchanges with save_context(), and read them back with load_memory_variables(), either as a single string or, with return_messages=True, as a list of messages. The buffer memory can also be attached to a ConversationChain so that the LLM receives the earlier conversation with every query. This post has elaborated on the process of using ConversationBufferMemory in LangChain.