LangChain is a framework for building applications powered by Large Language Models (LLMs). Its core building blocks are chains, which are classes that combine a model with a prompt and, optionally, memory so that previous observations can be passed to the model as context when it generates text. A chain has to be executed to produce a result, and the most direct way to run one is through its call methods.

This guide will demonstrate the process of using the different call methods in LangChain.

How to Use Different Call Methods in LangChain?

To use the “call” methods in LangChain for running chains, simply follow the listed instructions:

Step 1: Setup Prerequisites

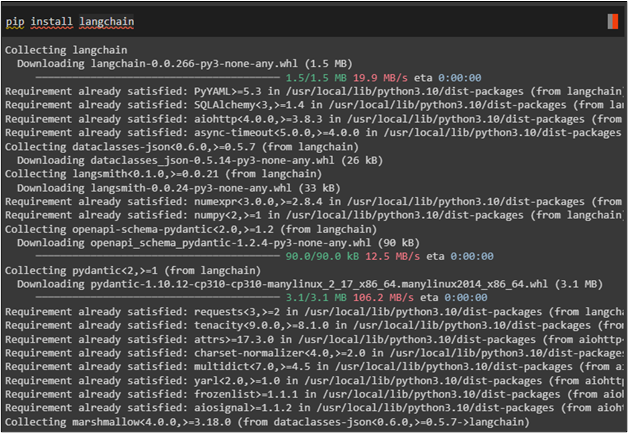

First, install the LangChain framework using the “pip install langchain” command to get its packages and libraries:

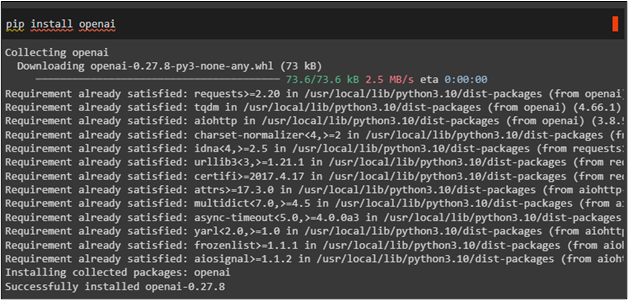

Next, install the OpenAI package using the “pip install openai” command so its models can be used with the call methods:

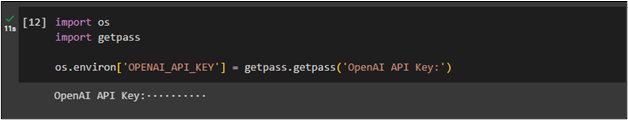

Now, provide the OpenAI API key so the code can connect to OpenAI. The “getpass()” method prompts for the key without echoing it, and the “os” library stores it in an environment variable:

import os

import getpass

os.environ['OPENAI_API_KEY'] = getpass.getpass('OpenAI API Key:')
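
If the script or notebook is re-run often, it can be convenient to prompt for the key only when it is not already set. The following is a minimal sketch using only the standard library; the helper name ensure_api_key and its prompt_fn parameter are hypothetical and not part of LangChain or OpenAI:

```python
import getpass
import os


def ensure_api_key(env_var="OPENAI_API_KEY", prompt_fn=getpass.getpass):
    """Return the key stored in env_var, prompting for it only if it is missing."""
    if not os.environ.get(env_var):
        # getpass hides the typed key instead of echoing it to the screen
        os.environ[env_var] = prompt_fn(f"{env_var}: ")
    return os.environ[env_var]

# Usage in a notebook or script: ensure_api_key()
```

The prompt function is a parameter so the helper can be exercised without an interactive terminal.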

Step 2: Import Libraries

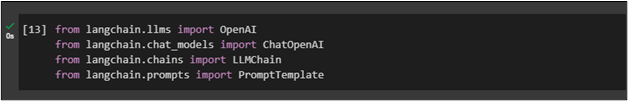

After that, import the required libraries: ChatOpenAI for the chat model, LLMChain for the chain, and PromptTemplate for configuring the template of the query:

from langchain.chat_models import ChatOpenAI

from langchain.chains import LLMChain

from langchain.prompts import PromptTemplate

Step 3: Build LLM Model

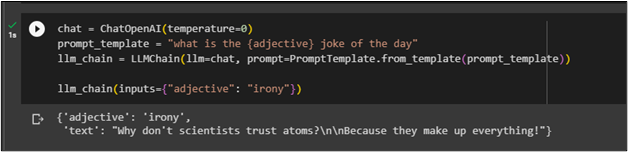

Configure the chat model using the ChatOpenAI() function and define the query template with an {adjective} variable. The LLMChain combines the model with the template, and the variable is filled in when the llm_chain object is called:

# Assumed definition of the chat model used by the chain below (default settings)

chat = ChatOpenAI()

prompt_template = "what is the {adjective} joke of the day"

llm_chain = LLMChain(llm=chat, prompt=PromptTemplate.from_template(prompt_template))

llm_chain(inputs={"adjective": "irony"})

Executing the above code returns a dictionary that contains the input and the model's answer, which is a joke built around the word “irony”.
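
To see what text actually reaches the model, it helps to fill in the template by hand. By default, PromptTemplate uses Python's f-string style placeholders, so the built-in str.format() gives a reasonable approximation of the rendered prompt. This sketch does not call LangChain or OpenAI:

```python
prompt_template = "what is the {adjective} joke of the day"

# Fill the {adjective} placeholder the same way the chain input does
rendered = prompt_template.format(adjective="irony")
print(rendered)  # what is the irony joke of the day
```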

Method 1: Using llm_chain() Method

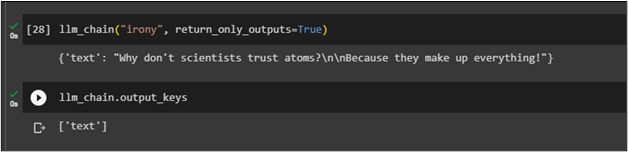

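By default, the call function returns both the input and output key values. To get only the output key values, set the “return_only_outputs” argument to True when calling the chain.

After setting this argument, simply execute the call function to get the output key value only. For an LLMChain, the output key is named “text”, which can be checked using the “output_keys” attribute of the chain.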

The following screenshot displays the output for the query and the key value after running the chain:
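
Since the result of the call function is a plain dictionary, the two return shapes can be sketched without a live model. In the sketch below, the joke text is a placeholder rather than real model output, and keep_only_outputs is a hypothetical helper that mimics the effect of return_only_outputs=True:

```python
# Shape of the default result of llm_chain("irony"): input and output keys together
full_result = {"adjective": "irony", "text": "<joke returned by the model>"}


def keep_only_outputs(result, output_keys=("text",)):
    """Keep only the output keys, similar to passing return_only_outputs=True."""
    return {key: result[key] for key in output_keys}


outputs_only = keep_only_outputs(full_result)
print(outputs_only)  # {'text': '<joke returned by the model>'}

# With a real chain, the equivalent call would be:
# llm_chain("irony", return_only_outputs=True)
```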

Method 2: Using run() Function

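Execute the run() function to get the answer from the model. Unlike the call function, run() returns only the output as a string instead of a dictionary, and it can be used only when the chain has a single output key.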
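
Put differently, for a chain with a single output key, run() gives roughly the same value as calling the chain and reading that key from the returned dictionary. A small sketch of that relationship, using a placeholder result instead of a live model call (run_like is a hypothetical helper, not a LangChain function):

```python
def run_like(result, output_keys=("text",)):
    """Return the single output value as a string, the way run() would."""
    if len(output_keys) != 1:
        # run() is only supported for chains with exactly one output key
        raise ValueError("expected exactly one output key")
    return result[output_keys[0]]


result = {"adjective": "irony", "text": "<joke returned by the model>"}
answer = run_like(result)
print(type(answer).__name__)  # str
```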

Method 3: Using Multiple Methods

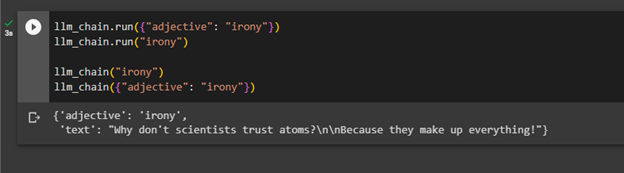

The following code runs the chain in three ways: with the “run()” method, by calling “llm_chain()” with a plain string, and by calling “llm_chain()” with a dictionary:

llm_chain.run("irony")

llm_chain("irony")

llm_chain({"adjective": "irony"})

All of the above calls send the same prompt to the model and serve the same purpose, so the following screenshots display only one output. The “llm_chain.run()” and “llm_chain()” methods accept different arguments and differ in return type, since run() returns a string while llm_chain() returns a dictionary, but the response in each case is a joke with “irony” as its adjective. A plain string is accepted as input only because the chain has a single input variable.
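
A bare string can be passed because a chain with exactly one input variable can map the string to that variable unambiguously. The sketch below approximates that normalization step in plain Python; normalize_inputs is a hypothetical name, and LangChain's internal implementation may differ in detail:

```python
def normalize_inputs(inputs, input_keys):
    """Turn a bare value into a dict when the chain has a single input key."""
    if isinstance(inputs, dict):
        return inputs
    if len(input_keys) != 1:
        raise ValueError("a bare value is ambiguous with several input keys")
    return {input_keys[0]: inputs}


input_keys = ["adjective"]
from_string = normalize_inputs("irony", input_keys)
from_dict = normalize_inputs({"adjective": "irony"}, input_keys)
print(from_string == from_dict)  # True
```

With more than one input variable, a dictionary is the safer choice because each value is tied to a named key.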

That’s all about using the call methods in LangChain to run chains.

Conclusion

To use the call functions in LangChain, install the LangChain and OpenAI modules and import the required libraries. After that, provide the OpenAI API key and configure the model and the chain. The chain can then be run by calling llm_chain() directly, which returns a dictionary, or by using the run() function, which returns a string. This post has illustrated the process of using different call methods in LangChain.