Quick Outline

This post contains the following sections:

- How to Use an Async API Agent in LangChain

- Method 1: Using Serial Execution

- Method 2: Using Concurrent Execution

- Conclusion

How to Use an Async API Agent in LangChain?

An agent spends most of its run time waiting on network calls to the language model and to its search tool. The Async API in LangChain lets the agent start several of these runs at once instead of waiting for each one to finish, so a batch of questions can be answered in much less time. To learn the process of using the Async API agent in LangChain, follow this guide:

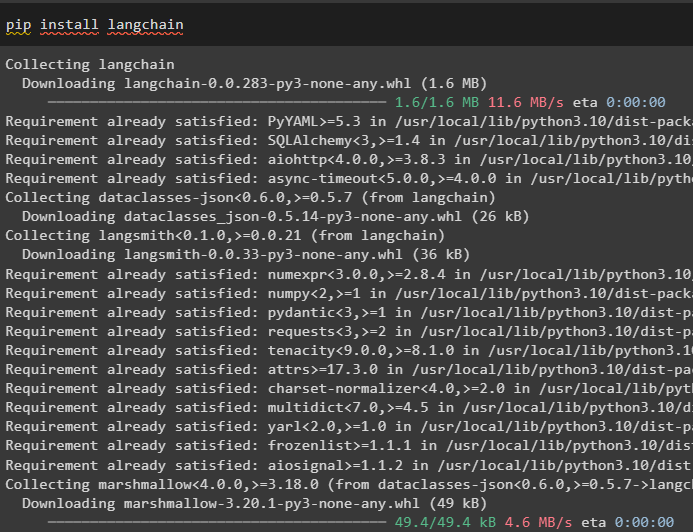

Step 1: Installing Frameworks

First, install the LangChain framework and its dependencies with pip, the Python package manager:

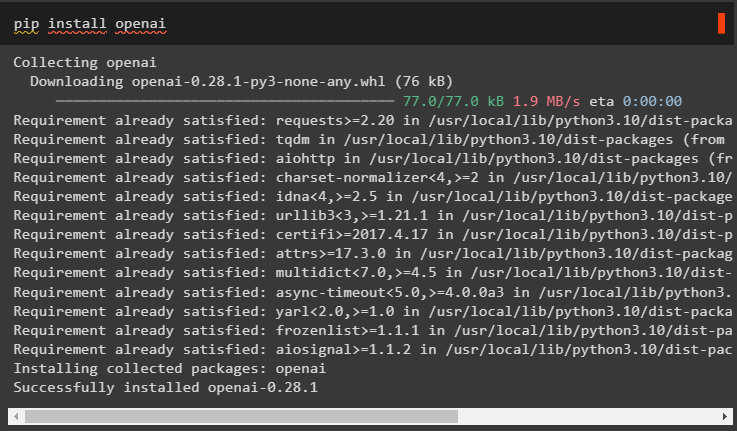

After that, install the OpenAI module, which is used to build the language model (llm):

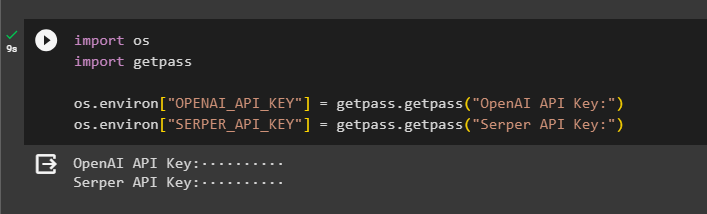

Step 2: OpenAI Environment

After installing the modules, set up the environment by providing an OpenAI API key and a Serper API key. The Serper key lets the agent search Google for data:

import os

import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")

os.environ["SERPER_API_KEY"] = getpass.getpass("Serper API Key:")
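If the keys are sometimes already exported in the shell, prompting for them on every run is unnecessary. The following is a minimal sketch, assuming a hypothetical helper named set_key_if_missing (it is not part of LangChain or OpenAI), that asks for a key only when the environment variable is empty:

```python
import getpass
import os


def set_key_if_missing(name, prompt=getpass.getpass):
    """Prompt for an API key only when the variable is not already set.

    Hypothetical helper for illustration; not part of any library.
    """
    if not os.environ.get(name):
        os.environ[name] = prompt(f"{name}: ")
    return os.environ[name]


# Typical usage (commented out so the block does not wait for input):
# set_key_if_missing("OPENAI_API_KEY")
# set_key_if_missing("SERPER_API_KEY")
```

The prompt argument defaults to getpass.getpass so the key is not echoed to the screen, and it can be swapped for another callable when testing.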

Step 3: Importing Libraries

Now that the environment is set, import the required libraries: time and asyncio from the Python standard library, along with the agent, model, and callback classes from LangChain:

import time

import asyncio

from langchain.agents import AgentType, initialize_agent, load_tools

from langchain.llms import OpenAI

from langchain.callbacks.stdout import StdOutCallbackHandler

from langchain.callbacks.tracers import LangChainTracer

from aiohttp import ClientSession

Step 4: Setup Questions

Define a list of questions on different topics that the agent can answer by searching the internet (Google):

questions = [

    "Who is the winner of the U.S. Open championship in 2021",

    "What is the age of Olivia Wilde's boyfriend",

    "Who is the winner of the formula 1 world title",

    "Who won the US Open women's final in 2021",

    "Who is Beyonce's husband and what is his age",

]

Method 1: Using Serial Execution

With the setup complete, run the questions through the agent using serial execution. The agent answers one question at a time, and the code prints the total time taken to answer all of them:

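#build the language model used by the tools and the agent (temperature=0 is an assumed setting)

llm = OpenAI(temperature=0)

tools = load_tools(["google-serper", "llm-math"], llm=llm)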

agent = initialize_agent(

    tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True

)

#configuring time counter to get the time used for the complete process

s = time.perf_counter()

for q in questions:

    agent.run(q)

elapsed = time.perf_counter() - s

#print the total-time used by the agent for getting the answers

print(f"Serial executed in {elapsed:0.2f} seconds.")

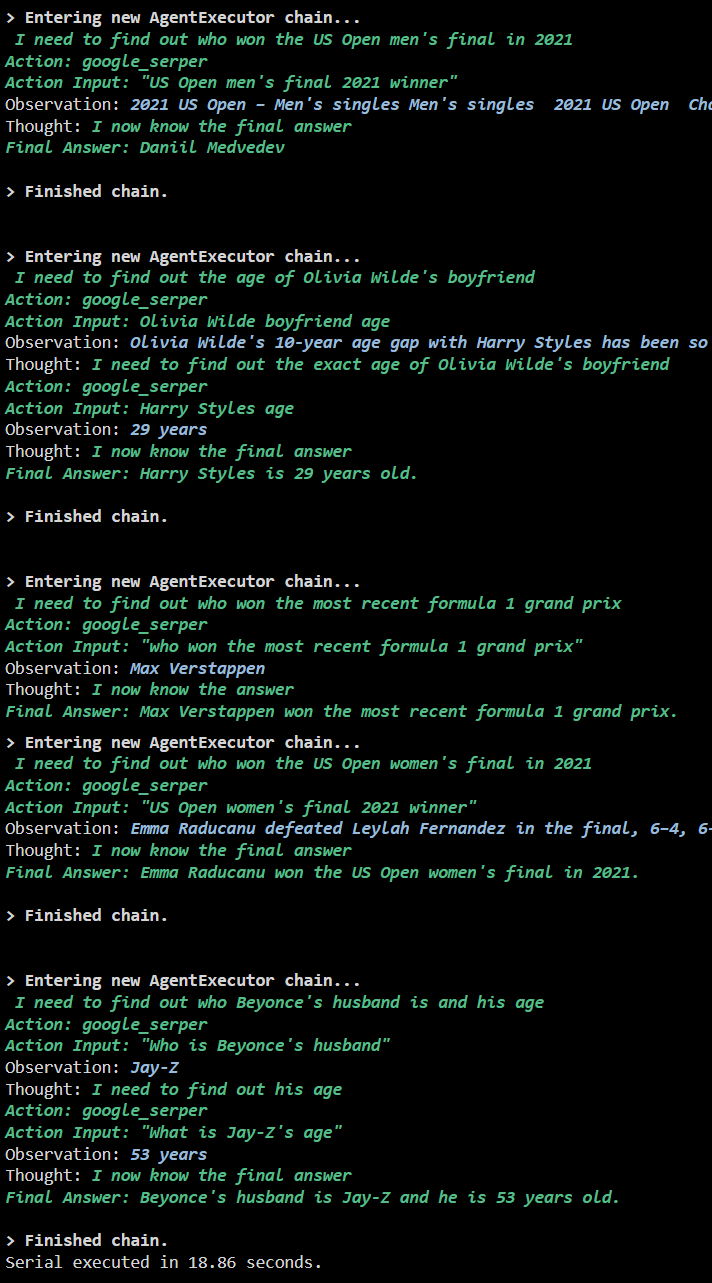

Output

The following screenshot shows that each question is answered in a separate chain, and the second chain starts only after the first one finishes. Because the agent waits for each answer before starting the next question, serial execution takes longer to get all the answers:

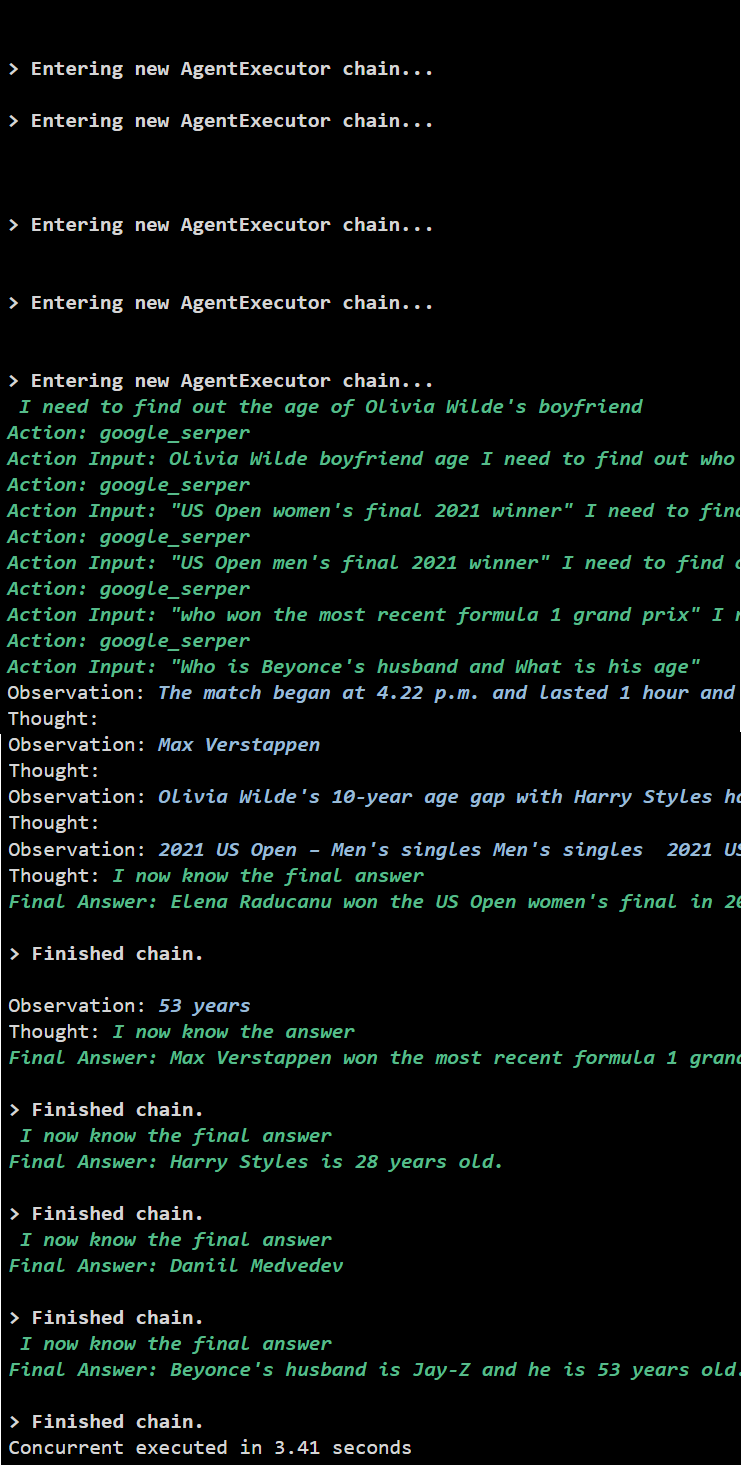

Method 2: Using Concurrent Execution

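The concurrent execution method starts all the questions at once. The agent's arun() method returns a coroutine for each question, and asyncio.gather() runs them together, so the waits on the model and the search API overlap instead of adding up: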

tools = load_tools(["google-serper", "llm-math"], llm=llm)

#Configuring agent using the above tools to get answers concurrently

agent = initialize_agent(

    tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True

)

#configuring time counter to get the time used for the complete process

s = time.perf_counter()

tasks = [agent.arun(q) for q in questions]

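#a bare await like this works in a notebook; in a script, place it inside an async function and start it with asyncio.run()

await asyncio.gather(*tasks)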

elapsed = time.perf_counter() - s

#print the total-time used by the agent for getting the answers

print(f"Concurrent executed in {elapsed:0.2f} seconds")
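The code above uses await at the top level, which a notebook allows but a plain Python script does not. The sketch below shows one way the same pattern might be arranged in a script. It uses fake_arun, a hypothetical stand-in for agent.arun() that sleeps instead of calling an API, so it runs without keys. It also passes return_exceptions=True to asyncio.gather(), an optional choice that keeps one failed question from hiding the other answers:

```python
import asyncio


async def fake_arun(question):
    """Hypothetical stand-in for agent.arun(question); makes no API call."""
    await asyncio.sleep(0.05)
    if not question.strip():
        raise ValueError("empty question")
    return f"answer to: {question}"


async def main(questions):
    # return_exceptions=True places a raised exception in the result list
    # instead of propagating the first failure out of gather().
    results = await asyncio.gather(
        *(fake_arun(q) for q in questions), return_exceptions=True
    )
    for question, result in zip(questions, results):
        status = "failed" if isinstance(result, Exception) else "ok"
        print(f"[{status}] {question!r}: {result}")
    return results


# A script has no running event loop, so asyncio.run() starts one.
results = asyncio.run(main(["first question", "", "third question"]))
```

With the real agent, fake_arun(q) would be replaced by agent.arun(q); the rest of the structure stays the same.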

Output

Concurrent execution sends all the requests without waiting for earlier ones to finish, so it takes much less time than serial execution.
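The timing difference can be reproduced without spending API credits. The following is a rough sketch, not the agent itself: fake_agent_call is a hypothetical coroutine that waits 0.2 seconds to imitate a network round trip, and the two runner functions mirror the serial loop and the asyncio.gather() call used in this guide:

```python
import asyncio
import time


async def fake_agent_call(question, delay=0.2):
    """Hypothetical stand-in for agent.arun(question): waits, then answers."""
    await asyncio.sleep(delay)
    return f"answer to: {question}"


async def run_serial(questions):
    # Await each call before starting the next one.
    return [await fake_agent_call(q) for q in questions]


async def run_concurrent(questions):
    # Start every call, then wait for all of them together.
    return await asyncio.gather(*(fake_agent_call(q) for q in questions))


def timed(coro):
    """Run a coroutine to completion and return (result, seconds elapsed)."""
    start = time.perf_counter()
    result = asyncio.run(coro)
    return result, time.perf_counter() - start


demo_questions = ["q1", "q2", "q3", "q4", "q5"]
serial_answers, serial_time = timed(run_serial(demo_questions))
concurrent_answers, concurrent_time = timed(run_concurrent(demo_questions))
print(f"Serial: {serial_time:0.2f}s, concurrent: {concurrent_time:0.2f}s")
```

With five simulated calls of 0.2 seconds each, the serial run takes about one second because the waits add up, while the concurrent run takes only a little over 0.2 seconds because the waits overlap. Real agent runs vary in length, so the actual speedup depends on the slowest question.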

That’s all about using the Async API agent in LangChain.

Conclusion

To use the Async API agent in LangChain, install the LangChain and OpenAI modules and import the required libraries, including asyncio from the Python standard library. After that, set up the environment using the OpenAI and Serper API keys obtained from their respective accounts. Define a set of questions on different topics and run the agent serially and concurrently to compare the execution times. This guide has explained the process of using the Async API agent in LangChain.