Natural Language Generation (NLG) is the part of NLP that deals with producing natural-language text. In LangChain, this is done with chains: the model takes the user's command, draws on the data available to it, and generates a response. A chain can also be given a memory object, so that earlier messages are stored and each new response is generated in the context of the conversation so far.

This guide will explain the process of initializing chains with memory objects in LangChain.

How to Initialize Chains with Memory Objects in LangChain?

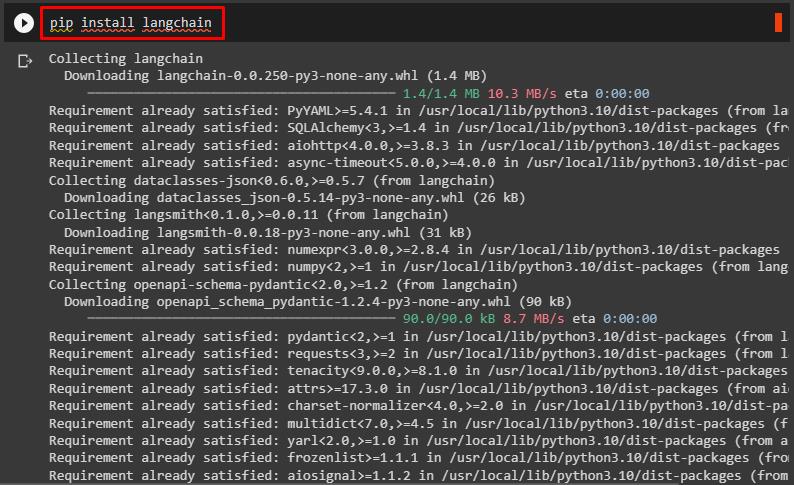

To initialize chains with memory objects in LangChain, first install the LangChain package and then follow this step-by-step guide:

Method 1: Using LLMChain and BufferMemory Library

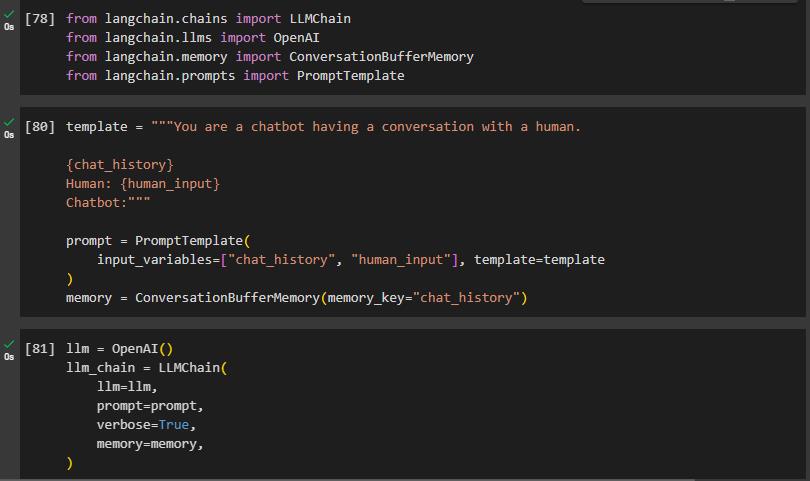

Use the following code to import the libraries needed to initialize chains with memory objects from the LangChain module:

from langchain.chains import LLMChain
from langchain.llms import OpenAI
from langchain.memory import ConversationBufferMemory
from langchain.prompts import PromptTemplate

The following code defines the prompt template for the chatbot. The template has two variables: chat_history, which is filled from the conversation stored in memory, and human_input, which holds the new command. The memory_key of the memory object must match the chat_history variable so that the stored conversation is inserted into the prompt:

template = """You are a chatbot having a conversation with a human.

{chat_history}
Human: {human_input}
Chatbot:"""

prompt = PromptTemplate(
    input_variables=["chat_history", "human_input"], template=template
)
memory = ConversationBufferMemory(memory_key="chat_history")
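To make the role of the two template variables concrete, the sketch below fills a template of this shape by hand in plain Python. It is a simplified illustration, not LangChain's implementation: `render_history`, `build_prompt`, and the `turns` list are hypothetical names, and the `Human:`/`AI:` transcript layout is an assumption about how a buffer memory might render its stored turns.

```python
# Simplified stand-in for what a buffer memory and a prompt template do together.
# Not LangChain code: `render_history`, `build_prompt`, and `turns` are hypothetical.

TEMPLATE = (
    "You are a chatbot having a conversation with a human.\n\n"
    "{chat_history}\n"
    "Human: {human_input}\n"
    "Chatbot:"
)


def render_history(turns):
    """Join stored (human, ai) turns into one transcript string."""
    lines = []
    for human_text, ai_text in turns:
        lines.append(f"Human: {human_text}")
        lines.append(f"AI: {ai_text}")
    return "\n".join(lines)


def build_prompt(turns, human_input):
    """Fill both template variables: the stored history and the new input."""
    return TEMPLATE.format(
        chat_history=render_history(turns),
        human_input=human_input,
    )


turns = [("Hello", "Hi, how can I help?")]
first_prompt = build_prompt(turns, "What did I just say?")
print(first_prompt)
```

The printed prompt contains the earlier exchange above the new `Human:` line, which is the text the model would be given in place of the bare question.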

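The following code creates the OpenAI model and passes it to LLMChain together with the prompt, the verbose flag, and the memory object:

# OpenAI() reads the API key from the OPENAI_API_KEY environment variable
llm = OpenAI()
llm_chain = LLMChain(
    llm=llm,
    prompt=prompt,
    verbose=True,
    memory=memory,
)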

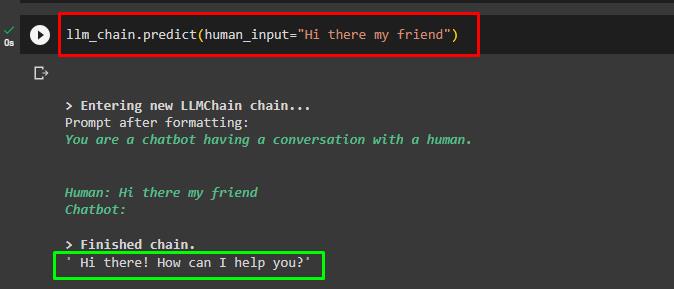

After configuring the chain with its memory object, use the following code to pass the human command to it through the human_input variable:

Running the above code makes the chatbot display its answer, and the exchange is stored in the chain's memory:

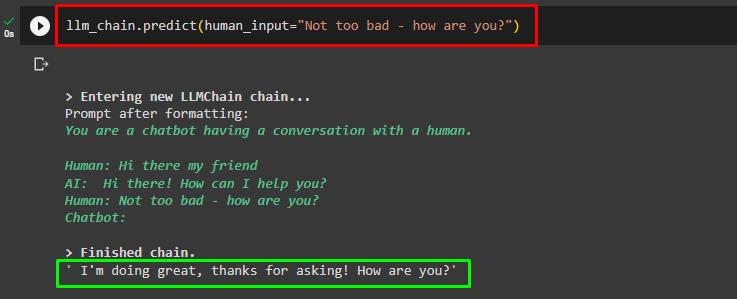

Send another human input to extend the conversation using the following command. The text passed in human_input can be changed to any other command:

Running the above code displays the chatbot's output together with the previous conversation, which shows that the chain is using its memory:
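The way a second call sees the first exchange can be sketched without an API key. The following is a toy model of the idea, assuming a chain that reads its memory before each call and writes to it afterwards. `ToyChain`, `echo_llm`, and `last_prompt` are hypothetical names for illustration and are not part of LangChain.

```python
# Toy model of a chain with buffer memory. Not LangChain code:
# `ToyChain` and `echo_llm` are hypothetical names used for illustration.

TEMPLATE = "{chat_history}\nHuman: {human_input}\nChatbot:"


def echo_llm(prompt):
    """Stand-in for a real LLM: reports how many lines the prompt had."""
    return f"I received {len(prompt.splitlines())} prompt lines."


class ToyChain:
    def __init__(self, llm, template):
        self.llm = llm
        self.template = template
        self.history = []      # transcript lines, oldest first
        self.last_prompt = ""  # kept only so the prompt can be inspected

    def predict(self, human_input):
        # 1. Read the memory and fill the template.
        self.last_prompt = self.template.format(
            chat_history="\n".join(self.history),
            human_input=human_input,
        )
        # 2. Call the model.
        reply = self.llm(self.last_prompt)
        # 3. Save the new exchange so the next call can see it.
        self.history.append(f"Human: {human_input}")
        self.history.append(f"AI: {reply}")
        return reply


chain = ToyChain(echo_llm, TEMPLATE)
first_reply = chain.predict("Hi there")
second_reply = chain.predict("What did I say first?")
print(chain.last_prompt)
```

The prompt printed for the second call includes the first question and its reply, so the stand-in model receives a longer prompt the second time. A real LLM would use that extra context to answer follow-up questions.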

Method 2: Using Chat Models Module

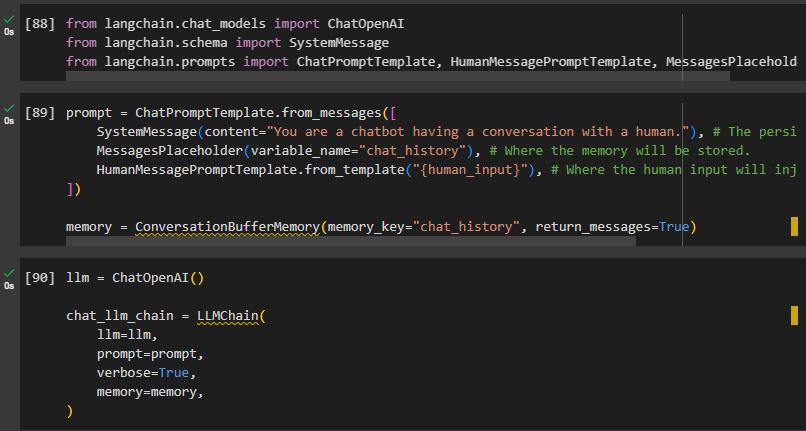

The next method of initializing chains with memory objects in LangChain uses ChatOpenAI from the chat_models module. The following code block imports the modules needed to build a chatbot with memory objects:

from langchain.chains import LLMChain
from langchain.chat_models import ChatOpenAI
from langchain.memory import ConversationBufferMemory
from langchain.prompts import (
    ChatPromptTemplate,
    HumanMessagePromptTemplate,
    MessagesPlaceholder,
)
from langchain.schema import SystemMessage

After importing the necessary modules, use the following code to create a template for the chatbot using the ChatPromptTemplate class. The template combines a fixed system message, the chat history stored in memory, and the human input. Setting return_messages=True makes the memory return the history as a list of messages, which is the form MessagesPlaceholder expects:

prompt = ChatPromptTemplate.from_messages([
    SystemMessage(content="You are a chatbot having a conversation with a human."),
    MessagesPlaceholder(variable_name="chat_history"),
    HumanMessagePromptTemplate.from_template("{human_input}"),
])
memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)
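With return_messages=True the history is kept as a list of message objects rather than one string, and the placeholder marks where that list is spliced into the prompt. A rough plain-Python picture of that splice follows. `Message`, `build_messages`, and the role labels are hypothetical stand-ins chosen for this sketch, not LangChain classes.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    role: str      # "system", "human", or "ai" (labels assumed for this sketch)
    content: str


def build_messages(system_text: str, history: List[Message], human_input: str) -> List[Message]:
    """Assemble system message + stored history + new human message, in order."""
    return [Message("system", system_text), *history, Message("human", human_input)]


history = [Message("human", "Hi there"), Message("ai", "Hello! How can I help?")]
messages = build_messages(
    "You are a chatbot having a conversation with a human.",
    history,
    "What did I say first?",
)
for m in messages:
    print(f"{m.role}: {m.content}")
```

Keeping each turn as a separate message preserves who said what, which suits chat models that take a list of role-tagged messages instead of a single text prompt.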

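The following code creates the ChatOpenAI model and passes it to the LLMChain class together with the prompt, the verbose flag, and the memory object:

# ChatOpenAI() reads the API key from the OPENAI_API_KEY environment variable
llm = ChatOpenAI()
chat_llm_chain = LLMChain(
    llm=llm,
    prompt=prompt,
    verbose=True,
    memory=memory,
)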

After executing the above code, the prompt is given below:

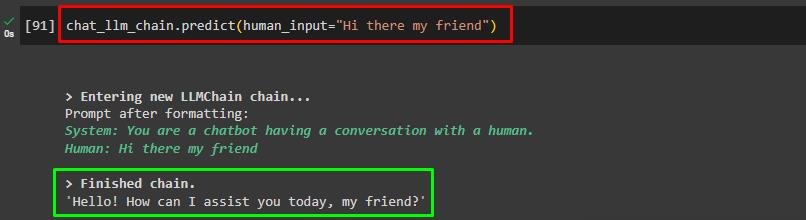

The following code passes the human input to chat_llm_chain using its predict function:

The following screenshot displays the output according to the input after initializing chains:

That is all about the process of initializing chains with memory objects in LangChain.

Conclusion

To initialize chains with memory objects in LangChain, install the LangChain framework and import the required modules from it. The first method combines LLMChain with ConversationBufferMemory and a PromptTemplate, so the stored chat history is inserted into the prompt on every call. The second method builds the same kind of chain around a chat model from the LangChain framework, using ChatPromptTemplate and a memory that returns its history as messages. This guide has explained both ways of initializing chains with memory objects in LangChain.