PySpark – sumDistinct()

sumDistinct() in PySpark returns the distinct total (sum) value from a particular column in the DataFrame. It will return the sum by considering only unique values. It will not take duplicate values to form a sum.

Before that, we have to create PySpark DataFrame for demonstration.

Example:

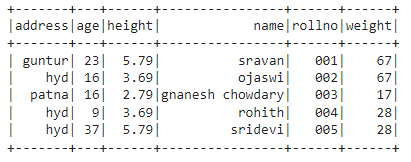

We will create a dataframe with 5 rows and 6 columns and display it using the show() method.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes

students =[{'rollno':'001','name':'sravan','age':23,'height':5.79,'weight':67,'address':'guntur'},

{'rollno':'002','name':'ojaswi','age':16,'height':3.69,'weight':67,'address':'hyd'},

{'rollno':'003','name':'gnanesh chowdary','age':16,'height':2.79,'weight':17,'address':'patna'},

{'rollno':'004','name':'rohith','age':9,'height':3.69,'weight':28,'address':'hyd'},

{'rollno':'005','name':'sridevi','age':37,'height':5.79,'weight':28,'address':'hyd'}]

# create the dataframe

df = spark_app.createDataFrame( students)

#display dataframe

df.show()

Output:

In the above PySpark dataframe, we observed that in age column 16 occurred 2 times, in height column 5.79 and 3.69 occurred two times, and in weight column 67 and 28 occurred two times.

So the sumDistinct() considers these duplicate values as a single value and form the sum within the column.

Using select() method

We can get the distinct total value from the column in the dataframe using the select() method. Using the sumDistinct() method, we can get the distinct total value from the column. To use this method, we have to import it from pyspark.sql.functions module, and finally, we can use the collect() method to get the distinct sum from the column

Syntax:

Where,

- df is the input PySpark DataFrame

- column_name is the column to get the distinct sum value

If we want to return the distinct total value from multiple columns, we must use the sumDistinct() method inside the select() method by specifying the column name separated by a comma.

Syntax:

Where,

- df is the input PySpark DataFrame

- column_name is the column to get the distinct sum value

Example 1: Single Column

This example will get the distinct total value from the height column in the PySpark dataframe.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

#import sumDistinct() function

from pyspark.sql.functions import sumDistinct

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes

students =[{'rollno':'001','name':'sravan','age':23,'height':5.79,'weight':67,'address':'guntur'},

{'rollno':'002','name':'ojaswi','age':16,'height':3.69,'weight':67,'address':'hyd'},

{'rollno':'003','name':'gnanesh chowdary','age':16,'height':2.79,'weight':17,'address':'patna'},

{'rollno':'004','name':'rohith','age':9,'height':3.69,'weight':28,'address':'hyd'},

{'rollno':'005','name':'sridevi','age':37,'height':5.79,'weight':28,'address':'hyd'}]

# create the dataframe

df = spark_app.createDataFrame( students)

#return the distinct sum from the height column

df.select(sumDistinct('height')).collect()

Output:

The above example returns the distinct total value (sum) from the height column.

Example 2: Multiple Columns

This example will get the distinct total value from the height, age, and weight columns in the PySpark dataframe.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes

students =[{'rollno':'001','name':'sravan','age':23,'height':5.79,'weight':67,'address':'guntur'},

{'rollno':'002','name':'ojaswi','age':16,'height':3.69,'weight':67,'address':'hyd'},

{'rollno':'003','name':'gnanesh chowdary','age':16,'height':2.79,'weight':17,'address':'patna'},

{'rollno':'004','name':'rohith','age':9,'height':3.69,'weight':28,'address':'hyd'},

{'rollno':'005','name':'sridevi','age':37,'height':5.79,'weight':28,'address':'hyd'}]

# create the dataframe

df = spark_app.createDataFrame( students)

#return the distinct sum from the height,age and weight columns

df.select(sumDistinct('height'),sumDistinct('age'),sumDistinct('weight')).collect()

Output:

The above example returns the distinct total value (sum) from the height, age, and weight columns.

PySpark – countDistinct()

countDistinct() in PySpark returns the distinct number of values (count) from a particular column in the DataFrame. It will return the count by considering only unique values. It will not take duplicate values to form a count.

Before that, we have to create PySpark DataFrame for demonstration.

Example:

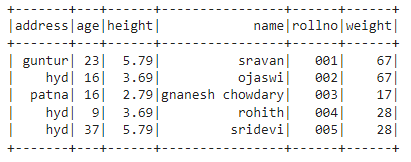

We will create a dataframe with 5 rows and 6 columns and display it using the show() method.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes

students =[{'rollno':'001','name':'sravan','age':23,'height':5.79,'weight':67,'address':'guntur'},

{'rollno':'002','name':'ojaswi','age':16,'height':3.69,'weight':67,'address':'hyd'},

{'rollno':'003','name':'gnanesh chowdary','age':16,'height':2.79,'weight':17,'address':'patna'},

{'rollno':'004','name':'rohith','age':9,'height':3.69,'weight':28,'address':'hyd'},

{'rollno':'005','name':'sridevi','age':37,'height':5.79,'weight':28,'address':'hyd'}]

# create the dataframe

df = spark_app.createDataFrame( students)

#display dataframe

df.show()

Output:

In the above PySpark dataframe, we observed that in age column 16 occurred 2 times, in height column 5.79 and 3.69 occurred two times, and in weight column 67 and 28 occurred two times.

So the countDistinct() considers these duplicate values as single values and forms the count in the column.

Using select() method

We can get the distinct count from the column in the dataframe using the select() method. Using the countDistinct() method, we can get a distinct count of rows from the column. To use this method, we have to import it from pyspark.sql.functions module, and finally, we can use the collect() method to get the distinct count from the column

Syntax:

Where,

- df is the input PySpark DataFrame

- column_name is the column to get the distinct count.

If we want to return the distinct count from multiple columns, we have to use the countDistinct() method inside the select() method by specifying the column name separated by a comma.

Syntax:

Where,

- df is the input PySpark DataFrame

- column_name is the column to get the distinct number of rows.

Example 1: Single Column

This example will get the distinct count from the height column in the PySpark dataframe.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

#import countDistinct() function

from pyspark.sql.functions import countDistinct

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes

students =[{'rollno':'001','name':'sravan','age':23,'height':5.79,'weight':67,'address':'guntur'},

{'rollno':'002','name':'ojaswi','age':16,'height':3.69,'weight':67,'address':'hyd'},

{'rollno':'003','name':'gnanesh chowdary','age':16,'height':2.79,'weight':17,'address':'patna'},

{'rollno':'004','name':'rohith','age':9,'height':3.69,'weight':28,'address':'hyd'},

{'rollno':'005','name':'sridevi','age':37,'height':5.79,'weight':28,'address':'hyd'}]

# create the dataframe

df = spark_app.createDataFrame( students)

#return the distinct count from the height column

df.select(countDistinct('height')).collect()

Output:

In the above example, the distinct count from the height column is returned.

Example 2: Multiple Columns

This example will get the distinct count from the height, age, and weight columns in the PySpark dataframe.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

#import countDistinct() function

from pyspark.sql.functions import countDistinct

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes

students =[{'rollno':'001','name':'sravan','age':23,'height':5.79,'weight':67,'address':'guntur'},

{'rollno':'002','name':'ojaswi','age':16,'height':3.69,'weight':67,'address':'hyd'},

{'rollno':'003','name':'gnanesh chowdary','age':16,'height':2.79,'weight':17,'address':'patna'},

{'rollno':'004','name':'rohith','age':9,'height':3.69,'weight':28,'address':'hyd'},

{'rollno':'005','name':'sridevi','age':37,'height':5.79,'weight':28,'address':'hyd'}]

# create the dataframe

df = spark_app.createDataFrame( students)

#return the distinct count from the height,age and weight columns

df.select(countDistinct('height'),countDistinct('age'),countDistinct('weight')).collect()

Output:

The above example returns the distinct count from the height, age, and weight columns.

Conclusion:

We discussed how to get the distinct sum (total) value using sumDistinct() and the distinct number of rows (count) using countDistinct() from the PySpark DataFrame using the select() method.