This post illustrates the process of using serialization for building LangChain applications.

How to Use Serialization for Building LangChain Applications?

Serialization in LangChain means storing components such as prompt templates in files (for example, JSON or YAML) instead of hard-coding them in the program, so that they can be saved, shared, and loaded back when needed. To learn how to use serialization when building LangChain applications, follow the steps and examples below:
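
As a minimal sketch of the idea, using plain Python rather than the LangChain loader itself, a prompt template can be written to a JSON file and read back later. The file name and the template text here are assumptions made only for illustration:

```python
import json
import tempfile
from pathlib import Path

# Hypothetical prompt configuration; the template text is illustrative only.
prompt_config = {
    "input_variables": ["adjective", "content"],
    "template": "Tell me a {adjective} joke about {content}.",
}

# Serialize: store the prompt outside the program, in a JSON file.
path = Path(tempfile.mkdtemp()) / "my_prompt.json"
path.write_text(json.dumps(prompt_config, indent=4), encoding="utf-8")

# Deserialize: read the file back and fill in the variables.
restored = json.loads(path.read_text(encoding="utf-8"))
text = restored["template"].format(adjective="funny", content="chickens")
print(text)
```

The point of the round trip is that the prompt text can be edited in the file without touching the program.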

Prerequisites

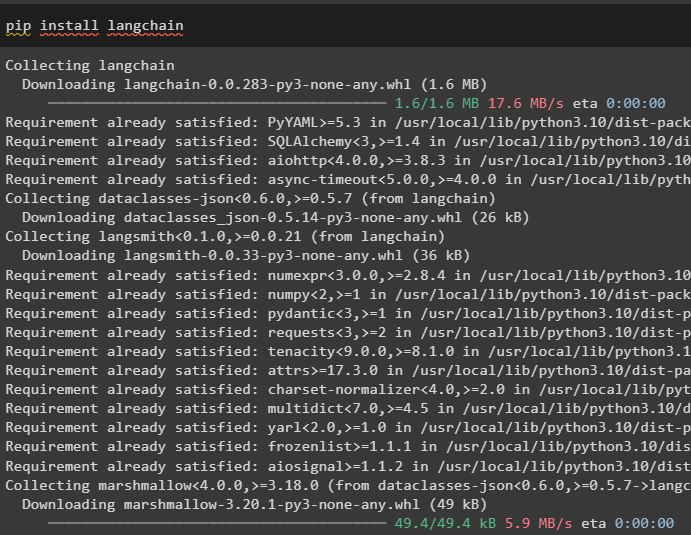

Before using serialization, install the LangChain framework to get its dependencies and libraries:

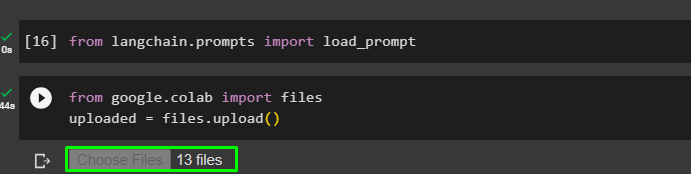

After that, import the libraries required to perform serialization in LangChain:
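
The commands from the screenshots are not reproduced here, so the following is only a hedged sketch. The package is typically installed with `pip install langchain`, and the load_prompt function used in this post has been exposed from different modules across LangChain releases, so the import paths below are assumptions to adjust for the installed version:

```python
# Try the locations where load_prompt has been exposed in different releases.
try:
    from langchain_core.prompts import load_prompt
except ImportError:
    try:
        from langchain.prompts import load_prompt
    except ImportError:
        load_prompt = None  # LangChain is not installed in this environment

print("load_prompt available:", load_prompt is not None)
```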

Another prerequisite for the serialization process is to upload multiple files written in different formats like JSON and YAML:

from google.colab import files  # assumes a Google Colab notebook

uploaded = files.upload()

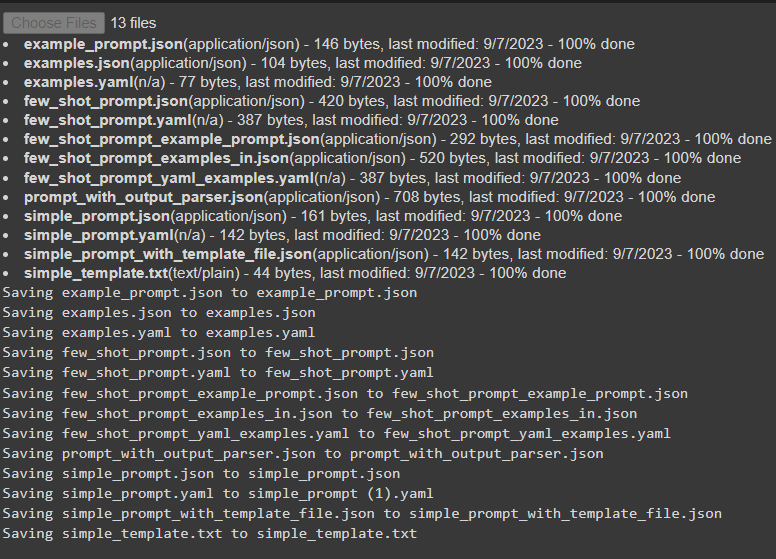

Output

The following files were uploaded for this guide; the contents of each file are shown when it is used in an example:

Method 1: Using Prompt Templates

The first method loads prompt templates stored in files; the same prompt template is written in several formats as follows:

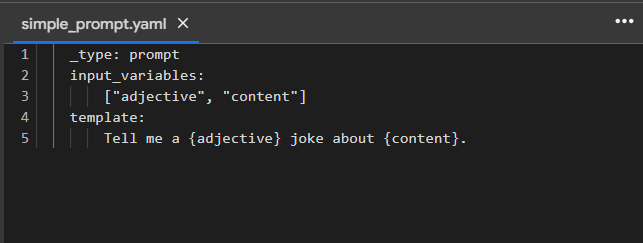

Step 1: Loading From YAML

The first step uses a single prompt template stored in YAML format in a file named simple_prompt.yaml:

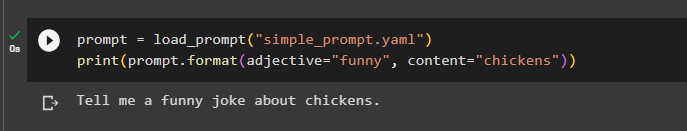

Now, load the YAML file and print the prompt, supplying values for its variables:

prompt = load_prompt("simple_prompt.yaml")

print(prompt.format(adjective="funny", content="chickens"))
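
Since the file contents appear only as a screenshot, here is a hedged sketch of what such a YAML prompt file might contain and how it could be loaded. The template text and the `_type` key are assumptions; the variable names adjective and content come from this guide:

```python
import tempfile
from pathlib import Path

# Assumed contents of simple_prompt.yaml; the template text is illustrative.
yaml_text = (
    "_type: prompt\n"
    'input_variables: ["adjective", "content"]\n'
    'template: "Tell me a {adjective} joke about {content}."\n'
)
yaml_path = Path(tempfile.mkdtemp()) / "simple_prompt.yaml"
yaml_path.write_text(yaml_text, encoding="utf-8")

try:
    from langchain_core.prompts import load_prompt  # path may vary by version
except ImportError:
    load_prompt = None

if load_prompt is not None:
    prompt = load_prompt(str(yaml_path))
    print(prompt.format(adjective="funny", content="chickens"))
else:
    print("LangChain is not installed; the file was written to", yaml_path)
```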

Step 2: Loading From JSON

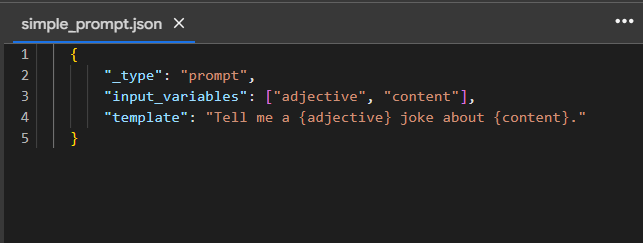

Here we have created the same prompt in the JSON format with the file named simple_prompt.json:

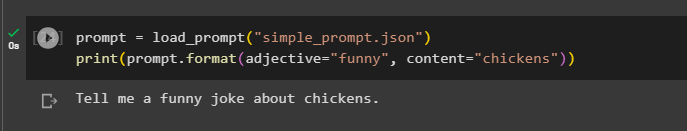

The same commands as in the previous step load the JSON file and print the formatted prompt:

prompt = load_prompt("simple_prompt.json")

print(prompt.format(adjective="funny", content="chickens"))
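
A hedged sketch of the JSON variant follows. The keys mirror the ones assumed for the YAML file, and the template text is again an illustration rather than the exact content of the screenshot:

```python
import json
import tempfile
from pathlib import Path

# Assumed contents of simple_prompt.json.
config = {
    "_type": "prompt",
    "input_variables": ["adjective", "content"],
    "template": "Tell me a {adjective} joke about {content}.",
}
json_path = Path(tempfile.mkdtemp()) / "simple_prompt.json"
json_path.write_text(json.dumps(config, indent=4), encoding="utf-8")

try:
    from langchain_core.prompts import load_prompt  # path may vary by version
except ImportError:
    load_prompt = None

if load_prompt is not None:
    prompt = load_prompt(str(json_path))
    print(prompt.format(adjective="funny", content="chickens"))
else:
    print(json_path.read_text(encoding="utf-8"))
```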

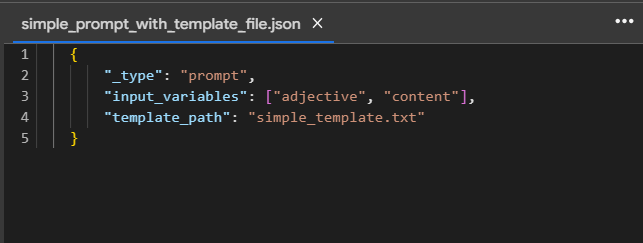

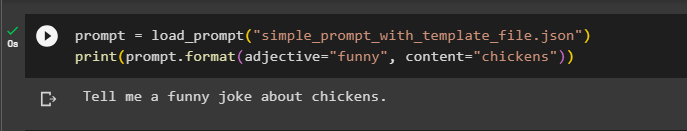

Step 3: Loading Template From File

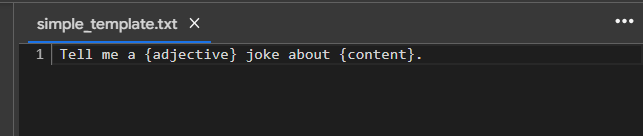

This step uses a text file containing a single line of text that serves as the template for our prompt, with variables such as adjective and content:

The text file cannot be loaded directly because it holds only the template text and no configuration, so a JSON or YAML file is needed that refers to it. That file specifies the path of the text file along with the prompt type and its input variables:

Now, load this configuration file by its name and then provide values for the variables:

print(prompt.format(adjective="funny", content="chickens"))
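
The relationship between the two files can be sketched in plain Python. This is not the loader's own code: the file names, the `template_path` key, and the template text are assumptions, and the reference is resolved by hand to show the indirection:

```python
import json
import tempfile
from pathlib import Path

workdir = Path(tempfile.mkdtemp())

# The text file holds only the template text.
template_file = workdir / "simple_template.txt"
template_file.write_text(
    "Tell me a {adjective} joke about {content}.", encoding="utf-8"
)

# The configuration file (hypothetical name) points at the text file.
config = {
    "_type": "prompt",
    "input_variables": ["adjective", "content"],
    "template_path": "simple_template.txt",
}
config_file = workdir / "prompt_with_template_file.json"
config_file.write_text(json.dumps(config, indent=4), encoding="utf-8")

# Resolve the reference by hand; this sketch looks next to the config file.
loaded = json.loads(config_file.read_text(encoding="utf-8"))
template = (config_file.parent / loaded["template_path"]).read_text(encoding="utf-8")
formatted = template.format(adjective="funny", content="chickens")
print(formatted)
```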

The first method loaded the same prompt template from three kinds of files (YAML, JSON, and a text file referenced from a configuration file) and formatted it using LangChain.

Method 2: Using Few-Shot Prompt Templates

The next method uses the Few-Shot prompt template, with the prompts written in the same file formats as in the previous method:

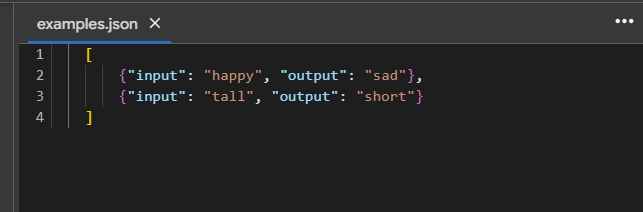

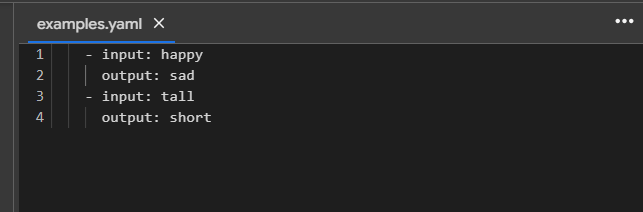

Step 1: Making Example Sets

Before using different files with the Few-Shot prompt template, we have created a few examples in YAML and JSON formats:
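
A hedged sketch of such example sets is shown below. The antonym pairs and the examples.yaml file name are assumptions for illustration; examples.json is the name used later in this guide:

```python
import json
import tempfile
from pathlib import Path

workdir = Path(tempfile.mkdtemp())

# Illustrative antonym pairs used as few-shot examples.
examples = [
    {"input": "happy", "output": "sad"},
    {"input": "tall", "output": "short"},
]

# JSON version of the example set.
(workdir / "examples.json").write_text(
    json.dumps(examples, indent=4), encoding="utf-8"
)

# YAML version, written by hand as a list of mappings.
yaml_lines = []
for example in examples:
    yaml_lines.append(f"- input: {example['input']}")
    yaml_lines.append(f"  output: {example['output']}")
yaml_text = "\n".join(yaml_lines) + "\n"
(workdir / "examples.yaml").write_text(yaml_text, encoding="utf-8")
print(yaml_text)
```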

Step 2: Loading From YAML

Create another file in YAML format to load the Few-Shot template:

Load the YAML file and call the prompt.format() method with the value of the variable as its argument to get the prompt built from the Few-Shot template:

print(prompt.format(adjective="funny"))

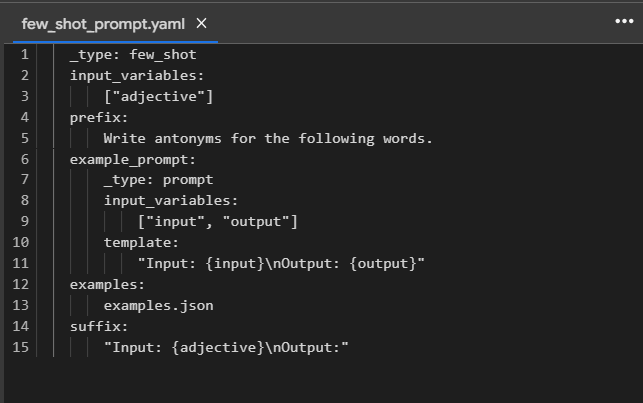

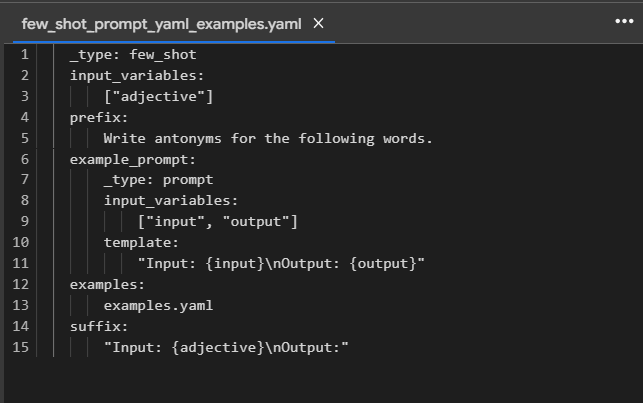

Create another file in the YAML format that uses the template for the prompt asking for the antonyms of different words:

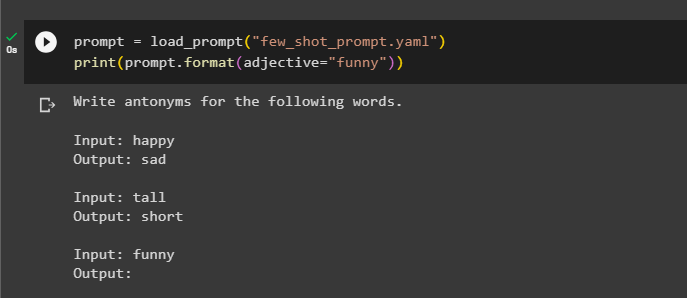

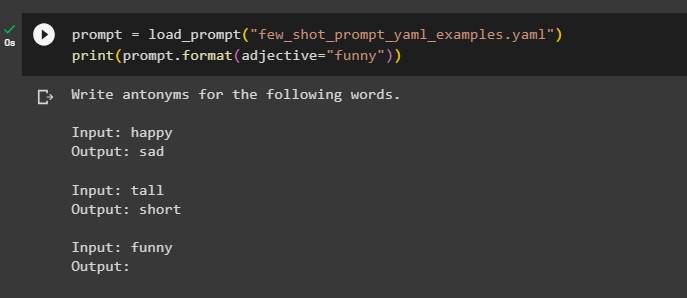

Execute the following code to load the YAML file and call the prompt.format() method to get the prompt that asks for antonyms:

print(prompt.format(adjective="funny"))

Step 3: Loading From JSON

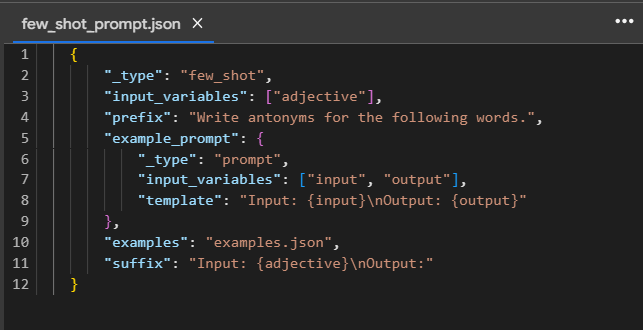

The next step uses the same example in JSON format; the following screenshot displays the content of the JSON file:

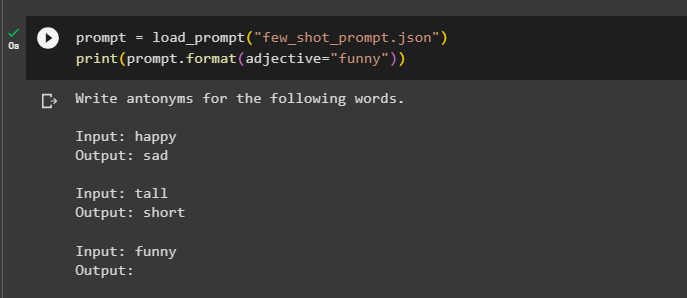

Load the JSON file and call the prompt.format() method to get the prompt for the antonyms query:

print(prompt.format(adjective="funny"))

Step 4: Loading Files From Config

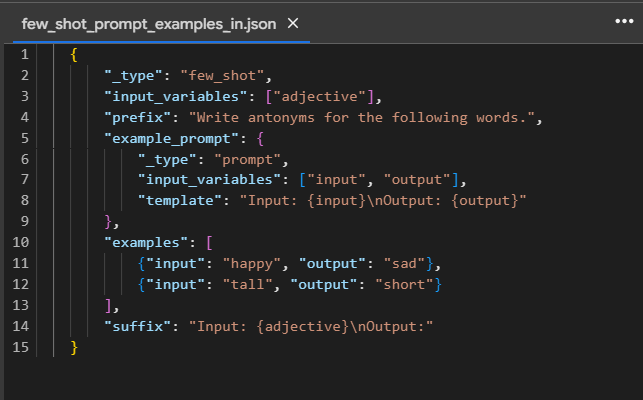

This example uses a JSON file that does not refer to any external file, because all of the configuration is done in this one file:

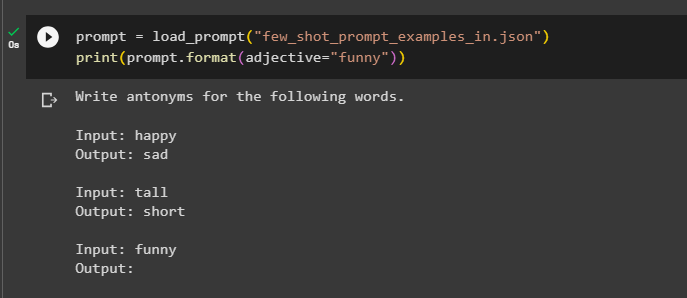

Load the file and then call the prompt.format() method to return the prompt configured in the file:

print(prompt.format(adjective="funny"))
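
A hedged sketch of a self-contained few-shot configuration follows. The key names, the prefix and suffix text, the examples, and the file name are assumptions for illustration; only the adjective variable and the antonym theme come from this guide:

```python
import json
import tempfile
from pathlib import Path

# Everything lives in one file: example prompt, examples, prefix and suffix.
config = {
    "_type": "few_shot",
    "input_variables": ["adjective"],
    "prefix": "Write antonyms for the following words.",
    "example_prompt": {
        "_type": "prompt",
        "input_variables": ["input", "output"],
        "template": "Input: {input}\nOutput: {output}",
    },
    "examples": [
        {"input": "happy", "output": "sad"},
        {"input": "tall", "output": "short"},
    ],
    "suffix": "Input: {adjective}\nOutput:",
}
config_path = Path(tempfile.mkdtemp()) / "few_shot_prompt_examples_in.json"
config_path.write_text(json.dumps(config, indent=4), encoding="utf-8")

try:
    from langchain_core.prompts import load_prompt  # path may vary by version
except ImportError:
    load_prompt = None

if load_prompt is not None:
    prompt = load_prompt(str(config_path))
    print(prompt.format(adjective="funny"))
else:
    print("LangChain is not installed; the file was written to", config_path)
```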

Step 5: Loading Prompts From Files

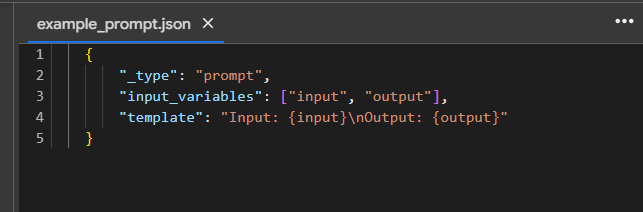

The example prompt template is configured in its own file in the JSON format:

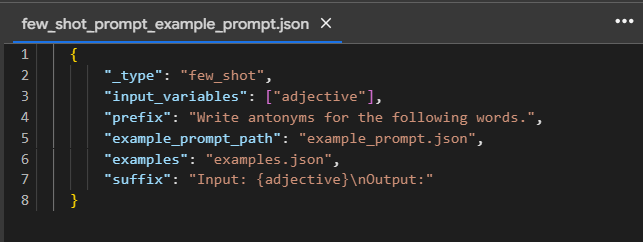

This file configures the Few-Shot prompt template and refers to the examples.json file:

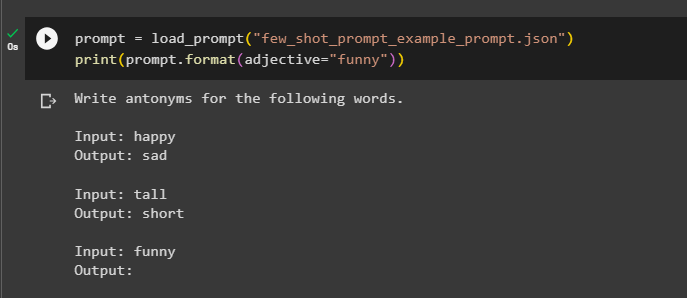

Load the template from the above file and display the output on the screen:

print(prompt.format(adjective="funny"))
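
To show how the separate files fit together, here is a plain-Python sketch that follows the references by hand and assembles the few-shot text. It is not the LangChain loader: the key names, the file names other than examples.json, the texts, and the blank-line separator between parts are all assumptions:

```python
import json
import tempfile
from pathlib import Path

workdir = Path(tempfile.mkdtemp())

def write_json(name, data):
    """Write data as JSON into the working directory and return the path."""
    path = workdir / name
    path.write_text(json.dumps(data, indent=4), encoding="utf-8")
    return path

write_json("example_prompt.json", {
    "_type": "prompt",
    "input_variables": ["input", "output"],
    "template": "Input: {input}\nOutput: {output}",
})
write_json("examples.json", [
    {"input": "happy", "output": "sad"},
    {"input": "tall", "output": "short"},
])
main_path = write_json("few_shot_prompt.json", {
    "_type": "few_shot",
    "input_variables": ["adjective"],
    "prefix": "Write antonyms for the following words.",
    "example_prompt_path": "example_prompt.json",
    "examples": "examples.json",
    "suffix": "Input: {adjective}\nOutput:",
})

# Follow the references by hand and assemble the few-shot prompt text.
main = json.loads(main_path.read_text(encoding="utf-8"))
example_prompt = json.loads(
    (workdir / main["example_prompt_path"]).read_text(encoding="utf-8")
)
examples = json.loads((workdir / main["examples"]).read_text(encoding="utf-8"))

parts = [main["prefix"]]
parts += [example_prompt["template"].format(**ex) for ex in examples]
parts.append(main["suffix"].format(adjective="funny"))
rendered = "\n\n".join(parts)
print(rendered)
```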

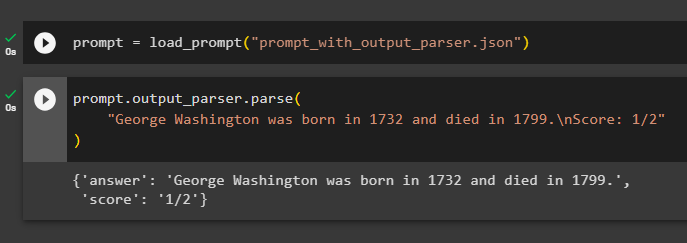

Step 6: Loading Output Parser

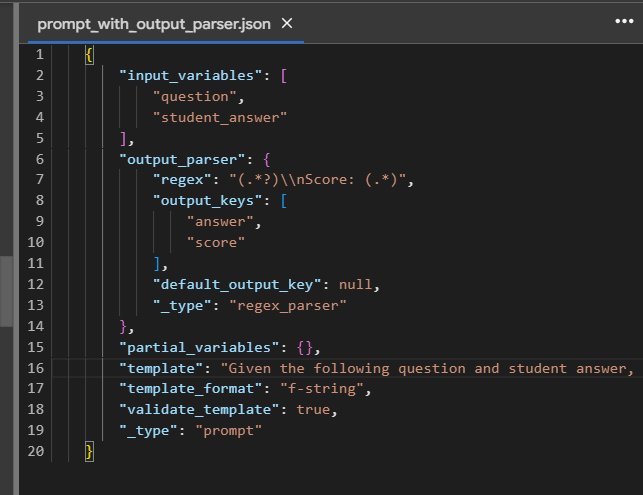

The following screenshot displays the file in JSON format that uses an output parser along with the prompt template:

Load the file using the load_prompt() function and assign it to the prompt variable:

Then use the output parser attached to the prompt to parse a sample response text:

prompt.output_parser.parse(

"George Washington was born in 1732 and died in 1799.\nScore: 1/2"

)
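
To illustrate what an output parser of this kind might do with the sample text, here is a plain-Python sketch of a regex-based parser. The regular expression and the output key names are assumptions, since the actual configuration appears only in the screenshot:

```python
import re

# Assumed parser settings, modelled on a regex-style output parser.
parser_config = {
    "_type": "regex_parser",
    "regex": r"(.*?)\nScore: (.*)",
    "output_keys": ["answer", "score"],
}

def parse(text, config):
    """Map each regex capture group to the matching output key."""
    match = re.search(config["regex"], text)
    if match is None:
        raise ValueError(f"Could not parse output: {text!r}")
    return dict(zip(config["output_keys"], match.groups()))

result = parse(
    "George Washington was born in 1732 and died in 1799.\nScore: 1/2",
    parser_config,
)
print(result)
```

With these assumed settings, the text before the Score line becomes the answer and the text after it becomes the score.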

That is all about using the serialization process for building LangChain applications.

Conclusion

To use serialization for building LangChain applications, install the LangChain framework and import the load_prompt function. After that, use this function to load files containing prompt templates in formats such as JSON and YAML. A text file cannot be loaded directly, so the user needs to create a JSON or YAML file that refers to the text file and use it to load the prompt. This post has illustrated the process of using serialization for building LangChain applications.