This guide will illustrate the process of running LLMChains in LangChain.

How to Run LLMChains in LangChain?

LangChain provides the components for building LLMChains, which combine an LLM or chat model with a prompt template. To build and run LLMChains in LangChain, follow this step-by-step guide:

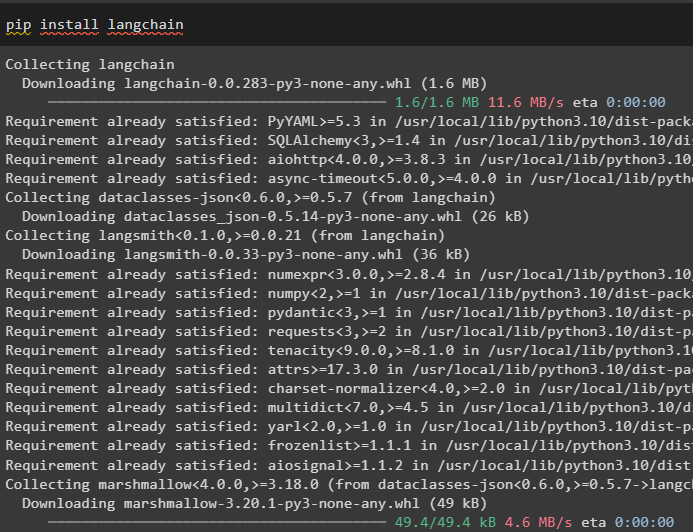

Step 1: Install Packages

First, install the LangChain module with pip (`pip install langchain`) to get the dependencies for building and running LLMChains:

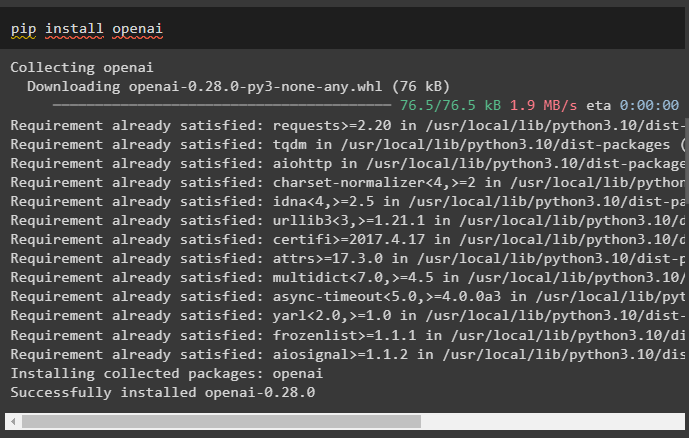

Next, install the openai package with pip (`pip install openai`) so that the OpenAI() wrapper used for building the LLM can call OpenAI's models:

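After installing the modules, set the OPENAI_API_KEY environment variable to the API key from your OpenAI account:

```python
import getpass
import os
# Read the key without echoing it; the OpenAI() wrapper reads it from the environment
os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
```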
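When a script or notebook is re-run, prompting for the key every time is unnecessary. The sketch below assumes you only want to prompt when OPENAI_API_KEY is not already set; `ensure_openai_key` is a hypothetical helper, not part of LangChain or the openai package:

```python
import getpass
import os
from typing import Callable


def ensure_openai_key(ask: Callable[[str], str] = getpass.getpass) -> str:
    """Hypothetical helper: reuse OPENAI_API_KEY if set, otherwise prompt for it."""
    key = os.environ.get("OPENAI_API_KEY", "").strip()
    if not key:
        # getpass hides the typed key; `ask` can be swapped out (e.g. in tests)
        key = ask("OpenAI API Key:").strip()
        if not key:
            raise RuntimeError("An OpenAI API key is required before calling OpenAI()")
        # Store it so the OpenAI() wrapper can read it from the environment
        os.environ["OPENAI_API_KEY"] = key
    return key
```

Calling `ensure_openai_key()` once before creating `OpenAI()` keeps the interactive prompt out of repeated runs.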

Step 2: Import Libraries

Once the setup is complete, import the libraries needed for the prompt template, the LLM, and the chain. Then build the LLM with the OpenAI() class and configure the LLMChain with that LLM and a prompt template:

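```python
from langchain import OpenAI
from langchain import LLMChain
from langchain.prompts import PromptTemplate

prompt_template = "Give me a good title for a business that makes {product}."
# temperature=0 makes the output as deterministic as possible
llm = OpenAI(temperature=0)
llm_chain = LLMChain(
    llm=llm,
    prompt=PromptTemplate.from_template(prompt_template)
)
# A single string fills the template's only input variable, {product}
llm_chain("colorful clothes")
```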
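PromptTemplate.from_template() infers the input variables from the {…} placeholders in the string, which is why the chain can be called with a single value. The stdlib sketch below approximates that inference and the final formatting with string.Formatter; `template_variables` is a hypothetical helper for illustration, not LangChain's implementation:

```python
from string import Formatter


def template_variables(template: str) -> list[str]:
    """Hypothetical helper: list the {placeholder} names in an f-string style template."""
    names: list[str] = []
    # Formatter.parse yields (literal_text, field_name, format_spec, conversion) tuples
    for _literal, field_name, _spec, _conversion in Formatter().parse(template):
        if field_name and field_name not in names:
            names.append(field_name)
    return names


prompt_template = "Give me a good title for a business that makes {product}."
variables = template_variables(prompt_template)  # ['product']
final_prompt = prompt_template.format(product="colorful clothes")
print(variables)
print(final_prompt)
```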

Step 3: Running Chains

Next, create an input list containing several products made by the business and pass it to the apply() method to run the chain on each of them:

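```python
input_list = [
    {"product": "socks"},
    {"product": "computer"},
    {"product": "shoes"}
]

# apply() runs the chain once per dictionary and returns a list of outputs
llm_chain.apply(input_list)
```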

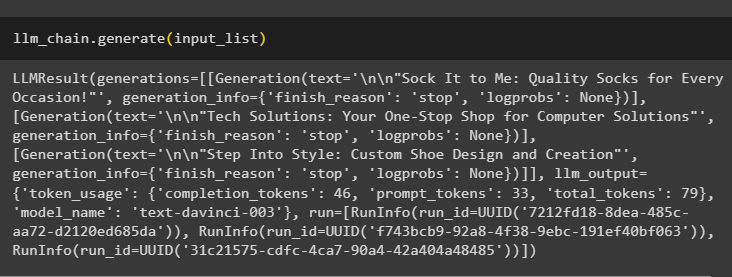
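apply() runs the chain once for each dictionary in input_list and returns a list of dictionaries, each holding the generated text under the chain's output key ("text" by default). The offline sketch below mimics that behaviour with a fake model; `apply_chain` and `fake_llm` are hypothetical names, not LangChain APIs:

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for OpenAI() so the sketch runs without an API key."""
    return f"<reply to: {prompt}>"


def apply_chain(template: str, llm: Callable[[str], str],
                input_list: list[dict[str, str]]) -> list[dict[str, str]]:
    """Format the template with each input dict, call the model, collect {"text": ...}."""
    return [{"text": llm(template.format(**inputs))} for inputs in input_list]


template = "Give me a good title for a business that makes {product}."
input_list = [{"product": "socks"}, {"product": "computer"}, {"product": "shoes"}]
for row in apply_chain(template, fake_llm, input_list):
    print(row)
```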

The generate() method accepts the same input_list, e.g. `llm_chain.generate(input_list)`, and returns an LLMResult containing the generated text plus extra information from the model, such as token usage:
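Unlike apply(), generate() returns an LLMResult object: its generations attribute holds one list of Generation objects per input, and llm_output carries provider metadata. The dataclasses below are a simplified, hypothetical model of that shape (not LangChain's classes), showing how to read the text back out:

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Generation:
    """Simplified stand-in for one generated candidate."""
    text: str


@dataclass
class Result:
    """Simplified stand-in for LLMResult: one inner list per input prompt."""
    generations: list[list[Generation]]
    llm_output: Optional[dict] = None  # the real object may hold e.g. token usage


def generate(template: str, llm: Callable[[str], str],
             input_list: list[dict[str, str]]) -> Result:
    prompts = [template.format(**inputs) for inputs in input_list]
    # One candidate per prompt here; a real model can return several
    return Result(generations=[[Generation(text=llm(p))] for p in prompts])


template = "Give me a good title for a business that makes {product}."
result = generate(template, lambda p: p.upper(),
                  [{"product": "socks"}, {"product": "shoes"}])
texts = [candidates[0].text for candidates in result.generations]
print(texts)
```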

Step 4: Using Single Input

To run the chain on a single input, call predict() with the product passed as the product keyword argument; it returns the generated text as a plain string:
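Calling the chain directly and calling predict() differ in their return value: llm_chain("colorful clothes") returns a dictionary with the input and the "text" output, while predict() returns only the generated string. A hypothetical, offline sketch of the two call styles (`call_chain`, `predict`, and `fake_llm` are illustrative, not LangChain code):

```python
from typing import Callable

TEMPLATE = "Give me a good title for a business that makes {product}."


def fake_llm(prompt: str) -> str:
    """Hypothetical offline stand-in for OpenAI()."""
    return f"<reply to: {prompt}>"


def call_chain(llm: Callable[[str], str], product: str) -> dict[str, str]:
    """Mimics llm_chain("..."): returns the input together with the "text" output."""
    return {"product": product, "text": llm(TEMPLATE.format(product=product))}


def predict(llm: Callable[[str], str], **kwargs: str) -> str:
    """Mimics predict(): fills the template from keyword arguments, returns only the text."""
    return llm(TEMPLATE.format(**kwargs))


print(call_chain(fake_llm, "colorful clothes"))
print(predict(fake_llm, product="hats"))  # "hats" is just an example product
```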

Step 5: Using Multiple Inputs

Now build a template with multiple input variables, then pass a value for each of them when running the chain:

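```python
# Example template (assumed wording); it must contain {adjective} and {subject}
template = """Tell me a {adjective} joke about {subject}."""
prompt = PromptTemplate(template=template, input_variables=["adjective", "subject"])
llm_chain = LLMChain(prompt=prompt, llm=OpenAI(temperature=0))
# predict() takes one keyword argument per input variable
llm_chain.predict(adjective="sad", subject="ducks")
```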

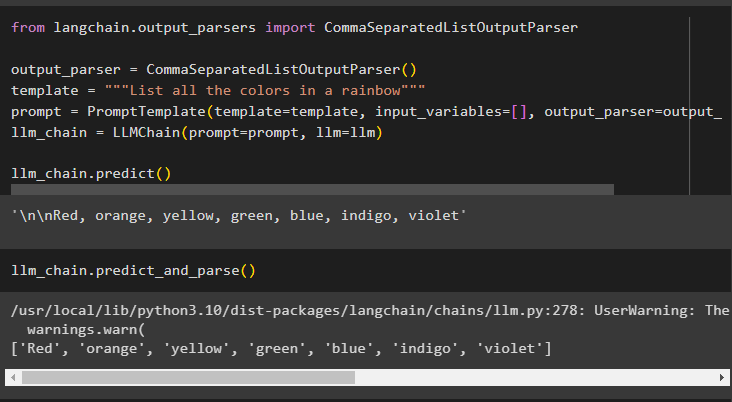
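With several input variables, every placeholder needs a value; LangChain validates the inputs and raises an error when one is missing. The stdlib sketch below reproduces that check in simplified form; the template wording and `predict_with_check` are hypothetical:

```python
from string import Formatter
from typing import Callable


def predict_with_check(template: str, llm: Callable[[str], str], **kwargs: str) -> str:
    """Hypothetical helper: refuse to call the model if any placeholder lacks a value."""
    expected = {name for _, name, _, _ in Formatter().parse(template) if name}
    missing = expected - set(kwargs)
    if missing:
        raise ValueError(f"Missing input variables: {sorted(missing)}")
    return llm(template.format(**kwargs))


template = "Tell me a {adjective} joke about {subject}."  # assumed example wording


def fake_llm(prompt: str) -> str:
    """Hypothetical offline stand-in for OpenAI()."""
    return f"<reply to: {prompt}>"


print(predict_with_check(template, fake_llm, adjective="sad", subject="ducks"))
```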

Step 6: Using Output Parser

This step attaches an output parser to the prompt template so that the LLMChain's text output can be converted into a structured result:

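```python
from langchain.output_parsers import CommaSeparatedListOutputParser

output_parser = CommaSeparatedListOutputParser()
template = """List all the colors in a rainbow"""
# No placeholders, so input_variables is empty; the parser is attached to the prompt
prompt = PromptTemplate(template=template, input_variables=[], output_parser=output_parser)
llm_chain = LLMChain(prompt=prompt, llm=llm)
llm_chain.predict()
```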

Passing the predicted text to the parser's parse() method, e.g. `output_parser.parse(llm_chain.predict())`, converts the model's comma-separated reply into a Python list of the colors in the rainbow:
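CommaSeparatedListOutputParser turns text such as "Red, Orange, Yellow" into a Python list. The function below is a simplified, hypothetical re-implementation for illustration; the library's exact handling of whitespace and edge cases may differ:

```python
def parse_comma_separated(text: str) -> list[str]:
    """Split a comma-separated model reply into a list of trimmed, non-empty items."""
    return [item.strip() for item in text.split(",") if item.strip()]


reply = "\n\nRed, Orange, Yellow, Green, Blue, Indigo, Violet"  # made-up sample reply
print(parse_comma_separated(reply))
```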

Step 7: Initializing From Strings

This step creates the LLMChain directly from a template string with LLMChain.from_string(), using the LLM and a template that contains input variables:

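```python
template = """Tell me a {adjective} joke about {subject}."""  # example template with two variables
llm_chain = LLMChain.from_string(llm=llm, template=template)
```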

Then provide values for the template's variables, for example `llm_chain.predict(adjective="sad", subject="ducks")`, to run the LLMChain and get the model's output:
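LLMChain.from_string() is a shortcut: it builds the prompt template from the string for you, so there is no separate PromptTemplate step. A minimal, hypothetical class showing the same idea (MiniChain is illustrative, not LangChain's LLMChain):

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class MiniChain:
    """Hypothetical chain: a model callable plus a template string."""
    llm: Callable[[str], str]
    template: str

    @classmethod
    def from_string(cls, llm: Callable[[str], str], template: str) -> "MiniChain":
        # Alternate constructor: accept the raw template string directly
        return cls(llm=llm, template=template)

    def predict(self, **kwargs: str) -> str:
        # Fill each {placeholder} from the keyword arguments, then call the model
        return self.llm(self.template.format(**kwargs))


def fake_llm(prompt: str) -> str:
    """Hypothetical offline stand-in for OpenAI()."""
    return f"<reply to: {prompt}>"


chain = MiniChain.from_string(llm=fake_llm, template="Tell me a {adjective} joke about {subject}.")
print(chain.predict(adjective="sad", subject="ducks"))
```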

That is all about running LLMChains with the LangChain framework.

Conclusion

To build and run LLMChains in LangChain, install the prerequisite packages and set up the environment with your OpenAI API key. Then import the required libraries to configure the prompt template and the model, and combine them into an LLMChain. As demonstrated in this guide, an LLMChain can be run on a single input, on a list of inputs, with multiple input variables, with an output parser, or directly from a template string.