Quick Outline

This post will demonstrate the following:

How to Replicate the MRKL System Using Agents in LangChain

- Step 1: Installing Frameworks

- Step 2: Setting OpenAI Environment

- Step 3: Importing Libraries

- Step 4: Building Database

- Step 5: Uploading Database

- Step 6: Configuring Tools

- Step 7: Building & Testing the Agent

- Step 8: Replicate the MRKL System

- Step 9: Using ChatModel

- Step 10: Test the MRKL Agent

- Step 11: Replicate the MRKL System

How to Replicate the MRKL System Using Agents in LangChain?

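LangChain allows the user to build agents that use a language model to decide which tool to call at each step of a task, such as searching the web, doing math, or querying a database. MRKL (Modular Reasoning, Knowledge and Language) is an architecture built on the same idea: a language model routes each question to the most suitable expert module and combines the results. Because LangChain agents already follow this pattern, the MRKL system can be replicated by giving an agent the right set of tools instead of building the system from scratch.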
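To make the idea concrete, the following is a minimal, LangChain-free sketch of the MRKL pattern in Python. It is an illustration under simplifying assumptions, not how LangChain implements agents: the language model's routing decision is replaced by a hypothetical keyword rule (`route`), and the two "expert modules" (`calculator`, `artist_lookup`) are toy functions with made-up data. In the real system, the language model reads each tool's description and decides which one to call.

```python
import re

# Hypothetical "expert modules": in MRKL these would be a search engine,
# a calculator, a database, and so on.
def calculator(question: str) -> str:
    # Toy module: find "a + b" in the question and add the two integers.
    match = re.search(r"(\d+)\s*\+\s*(\d+)", question)
    if match is None:
        return "cannot parse"
    return str(int(match.group(1)) + int(match.group(2)))

def artist_lookup(question: str) -> str:
    # Toy stand-in for a database module, backed by a tiny in-memory table.
    artists = {1: "AC/DC", 2: "Accept"}
    match = re.search(r"artist (\d+)", question)
    return artists.get(int(match.group(1)), "unknown") if match else "unknown"

MODULES = {"Calculator": calculator, "Database": artist_lookup}

def route(question: str) -> str:
    # Hypothetical keyword rule standing in for the language model's tool choice.
    return "Calculator" if "+" in question else "Database"

def answer(question: str) -> str:
    module = route(question)
    return f"{module}: {MODULES[module](question)}"

print(answer("What is 2 + 3?"))    # Calculator: 5
print(answer("Who is artist 1?"))  # Database: AC/DC
```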

To learn the process of replicating the MRKL system using agents in LangChain, simply go through the listed steps:

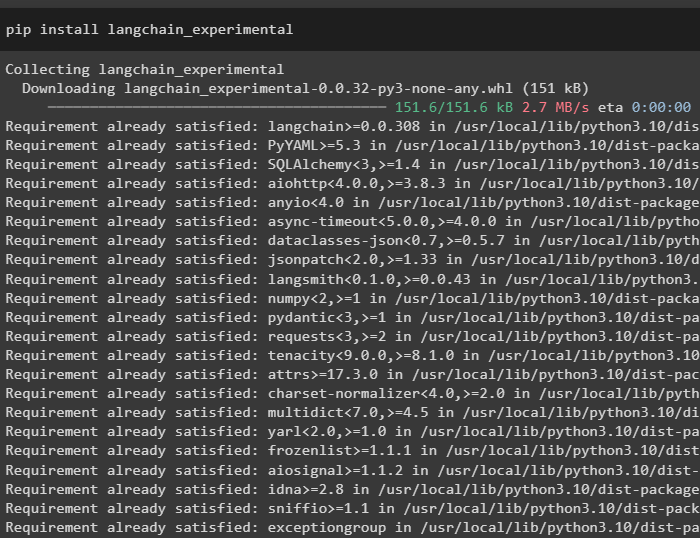

Step 1: Installing Frameworks

First of all, install the LangChain experimental module with pip by running `pip install langchain-experimental`.

Then install the OpenAI module, which is used to build the language model for the MRKL system, by running `pip install openai`:

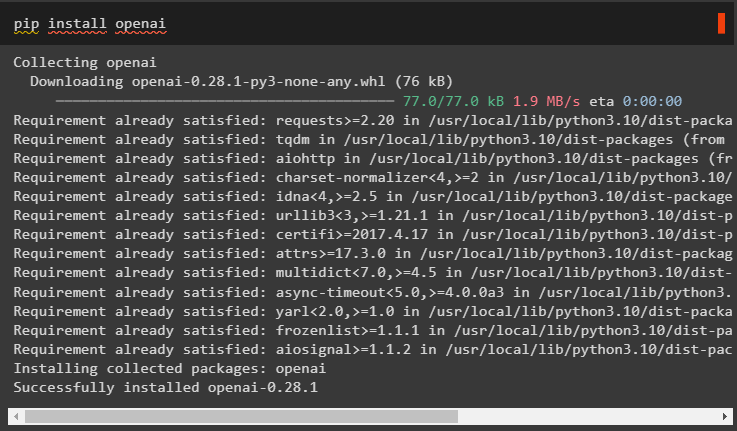
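Before moving on, it can help to confirm which packages are actually installed. The sketch below uses only the standard library's `importlib.metadata`; `installed_versions` is a hypothetical helper name. The package list is an assumption on top of the commands above: `SerpAPIWrapper` relies on the `google-search-results` package and `LLMMathChain` on `numexpr`, so both are included even though the steps above do not install them.

```python
from importlib.metadata import PackageNotFoundError, version

def installed_versions(packages):
    """Map each distribution name to its installed version, or None if it is missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found

# Packages assumed to be needed by the steps in this post.
print(installed_versions(
    ["langchain", "langchain-experimental", "openai", "google-search-results", "numexpr"]
))
```

Any entry printed as `None` still needs a `pip install`.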

Step 2: Setting OpenAI Environment

Import the os and getpass libraries to access the operating system and prompt the user for the API keys of the OpenAI and SerpAPI accounts:

```python
import os
import getpass

# Prompt for each key without echoing it, then store it as an environment variable
os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
os.environ["SERPAPI_API_KEY"] = getpass.getpass("Serpapi API Key:")
```
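A missing or empty key usually surfaces only later, as an authentication error from the first model or search call. As an optional safeguard that is not part of the original steps, a small hypothetical helper (`missing_keys`) can report which of the two variables set above are still unset or empty:

```python
import os

REQUIRED_KEYS = ("OPENAI_API_KEY", "SERPAPI_API_KEY")

def missing_keys(env=os.environ, required=REQUIRED_KEYS):
    """Return the names of required variables that are unset or empty."""
    return [key for key in required if not env.get(key)]

missing = missing_keys()
if missing:
    print("Set these before continuing:", ", ".join(missing))
```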

Step 3: Importing Libraries

Import the required classes from LangChain to build the language model, tools, and agents:

```python
from langchain.llms import OpenAI
from langchain.chat_models import ChatOpenAI  # chat model used in Step 9
from langchain.chains import LLMMathChain
from langchain.utilities import SerpAPIWrapper
from langchain.utilities import SQLDatabase
from langchain_experimental.sql import SQLDatabaseChain
from langchain.agents import initialize_agent, Tool
from langchain.agents import AgentType
```

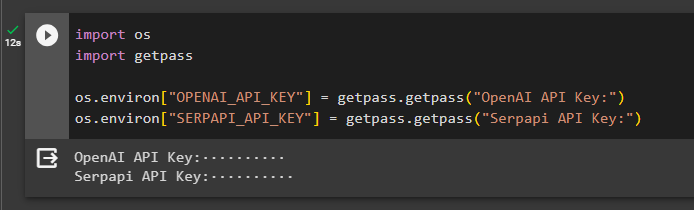

Step 4: Building Database

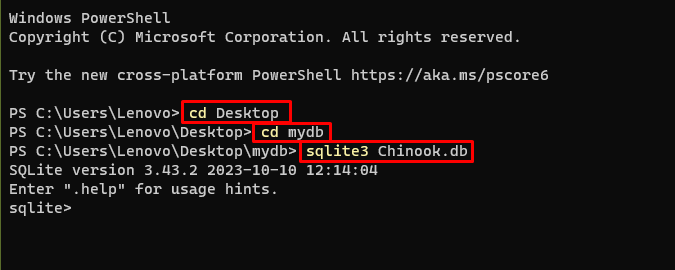

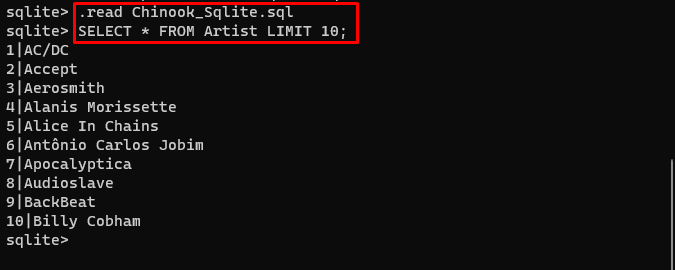

The MRKL system relies on external knowledge sources, such as databases, to answer questions. This post uses SQLite to build the database, so install SQLite first if it is not already available. Running `sqlite3 --version` confirms the installation by displaying the installed version.

Use the following commands to move into a directory (mydb here) and create the database from the command prompt:

```shell
cd mydb
sqlite3 Chinook.db
```

Download the Chinook database file and store it in this directory. The following query, run at the sqlite3 prompt, confirms that the data is available by listing the first ten rows of the Artist table:

```sql
SELECT * FROM Artist LIMIT 10;
```

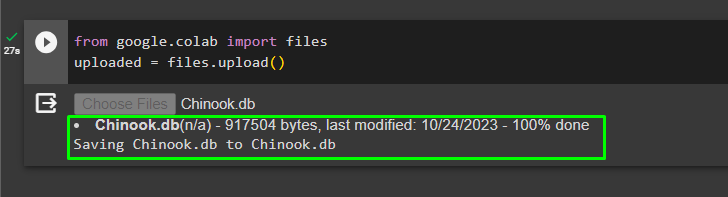
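The same check can be run from Python with the standard `sqlite3` module, which is handy once the database is inside a notebook. This is a sketch: `first_artists` is a hypothetical helper name, and the usage line assumes `Chinook.db` is in the current directory.

```python
import sqlite3
from contextlib import closing

def first_artists(db_path, limit=10):
    """Python equivalent of `SELECT * FROM Artist LIMIT 10;`."""
    # closing() makes sure the connection is closed after the query
    with closing(sqlite3.connect(db_path)) as conn:
        return conn.execute("SELECT * FROM Artist LIMIT ?", (limit,)).fetchall()

# Usage (assumes Chinook.db sits in the current directory):
# print(first_artists("Chinook.db"))
```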

Step 5: Uploading Database

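Once the database is created successfully, upload the file to Google Colaboratory (Colab):

```python
from google.colab import files

# Opens a file picker; returns a dict mapping each uploaded file name to its contents
uploaded = files.upload()
```

The uploaded file then appears in the notebook's Files pane, where its path can be copied from the file's drop-down menu: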

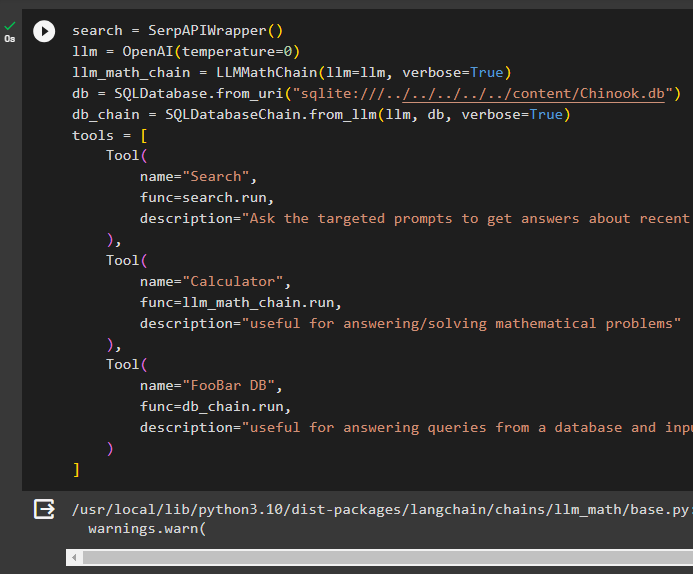
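The database URI used in the next step is written as a relative path. Assuming the notebook's working directory is `/content` (the Colab default), climbing above the filesystem root has no effect, so the path still ends at the uploaded file. The sketch below checks this lexically with the standard library's `posixpath`; `resolve` is a hypothetical helper, and the absolute-URI form at the end is only an alternative, not what the post uses:

```python
import posixpath

def resolve(cwd, relative):
    """Lexically resolve a relative path against cwd (symlinks are not followed)."""
    return posixpath.normpath(posixpath.join(cwd, relative))

print(resolve("/content", "../../../../../content/Chinook.db"))  # /content/Chinook.db

# An absolute SQLite URI uses four slashes: "sqlite:///" followed by "/content/...".
print("sqlite:///" + resolve("/content", "Chinook.db"))  # sqlite:////content/Chinook.db
```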

Step 6: Configuring Tools

After building the database, configure the language model, tools, and chains for the agents:

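```python
# Language model used by the agent and both chains
llm = OpenAI(temperature=0)

# Web search through SerpAPI (reads SERPAPI_API_KEY from the environment)
search = SerpAPIWrapper()

# Chain that turns math questions into expressions and evaluates them
llm_math_chain = LLMMathChain.from_llm(llm, verbose=True)

# From Colab's /content working directory, this path resolves to /content/Chinook.db
db = SQLDatabase.from_uri("sqlite:///../../../../../content/Chinook.db")

# Chain that writes SQL for a question, runs it on db, and answers from the result
db_chain = SQLDatabaseChain.from_llm(llm, db, verbose=True)

tools = [
    Tool(
        name="Search",
        func=search.run,
        description="Ask the targeted prompts to get answers about recent affairs",
    ),
    Tool(
        name="Calculator",
        func=llm_math_chain.run,
        description="useful for answering/solving mathematical problems",
    ),
    Tool(
        name="FooBar DB",
        func=db_chain.run,
        description="useful for answering queries from a database and input question must have the complete context",
    ),
]
```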

- The llm variable holds the OpenAI() language model; temperature=0 keeps its answers as consistent as possible.

- The search variable holds a SerpAPIWrapper() instance, which sends queries to the SerpAPI search service.

- LLMMathChain answers mathematical questions with the help of the language model.

- SQLDatabase.from_uri() connects the db variable to the database file at the given path.

- SQLDatabaseChain.from_llm() builds a chain that answers questions with information from the database.

- The tools list wraps search, the calculator, and the database as the Search, Calculator, and FooBar DB tools that the agent uses to extract data from different sources:

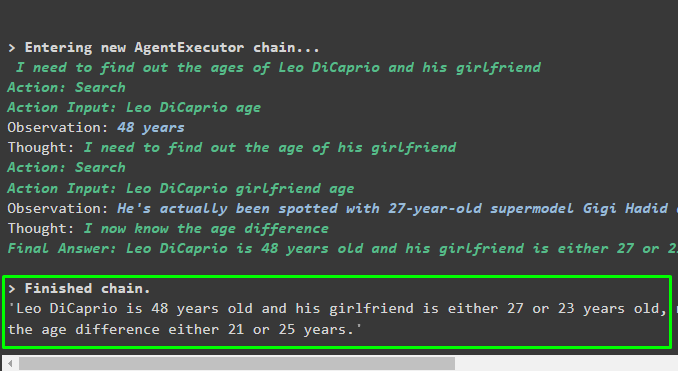
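The agent never inspects the Python functions themselves; it chooses a tool based on its name and description, which is why the descriptions above matter. The sketch below mimics that with a hypothetical `ToolSpec` dataclass (not LangChain's `Tool` class) and renders a `name: description` listing. This is roughly, but not necessarily exactly, how a zero-shot agent lists its tools in the prompt:

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class ToolSpec:
    # Hypothetical stand-in for a tool: a name, a callable, and a description.
    name: str
    func: Callable[[str], str]
    description: str

def render_tools(tools: List[ToolSpec]) -> str:
    """List one tool per line as 'name: description', the text the model would read."""
    return "\n".join(f"{t.name}: {t.description}" for t in tools)

def tool_names(tools: List[ToolSpec]) -> str:
    return ", ".join(t.name for t in tools)

tools = [
    ToolSpec("Search", lambda q: "search result",
             "Ask the targeted prompts to get answers about recent affairs"),
    ToolSpec("Calculator", lambda q: "42",
             "useful for answering/solving mathematical problems"),
]
print(render_tools(tools))
print(tool_names(tools))
```

A vague description makes the model more likely to pick the wrong tool, so it is worth stating clearly what each tool is for.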

Step 7: Building & Testing the Agent

Initialize the MRKL system by passing the tools, the llm, and an agent type from AgentType to the initialize_agent() method, and store the result in the mrkl variable:

Execute the MRKL system using the run() method with the question as its argument:

Output

The agent produces the final answer along with the complete path it followed to reach it:

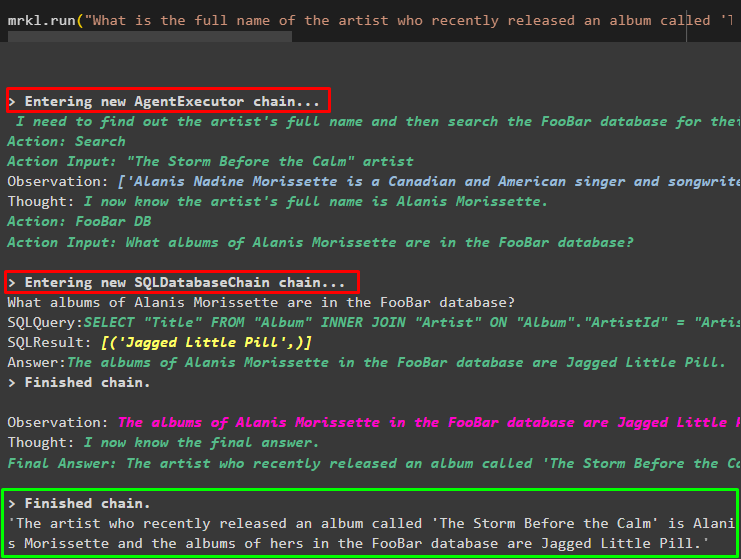
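The "complete path" in this output comes from a loop: the model proposes an action, the agent runs the matching tool, passes the observation back, and stops once the model gives a final answer. The sketch below reproduces that loop offline under clear assumptions: a scripted list of replies (hypothetical) stands in for the language model and ignores the observations, a small AST-based `calculate` function stands in for `LLMMathChain`, and the reply labels (`Action:`, `Action Input:`, `Final Answer:`) follow the ReAct style but this is not LangChain's own parser:

```python
import ast
import operator
import re

# Arithmetic operators the toy calculator accepts (stand-in for LLMMathChain).
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}

def calculate(expression: str) -> str:
    """Evaluate + - * / ** on numbers by walking the AST, without eval()."""
    def ev(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -ev(node.operand)
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError(f"unsupported expression: {expression!r}")
    return str(ev(ast.parse(expression, mode="eval").body))

def run_agent(model_replies, tools, max_steps=5):
    """Drive a ReAct-style loop over scripted replies; return (final_answer, trace)."""
    trace = []  # (tool, tool_input, observation) per step, like the verbose output
    replies = iter(model_replies)
    for _ in range(max_steps):
        reply = next(replies)
        final = re.search(r"Final Answer:\s*(.*)", reply, re.DOTALL)
        if final:
            return final.group(1).strip(), trace
        action = re.search(r"Action:\s*(.*?)\s*\nAction Input:\s*(.*)", reply)
        if action is None:
            raise ValueError(f"unparseable reply: {reply!r}")
        tool, tool_input = action.group(1).strip(), action.group(2).strip()
        observation = tools[tool](tool_input)  # a real agent sends this back to the model
        trace.append((tool, tool_input, observation))
    raise RuntimeError("no final answer within max_steps")

scripted_replies = [
    "Thought: I need to calculate this.\nAction: Calculator\nAction Input: 2 ** 10",
    "Thought: I now know the final answer.\nFinal Answer: 1024",
]
final_answer, trace = run_agent(scripted_replies, {"Calculator": calculate})
print(trace)         # [('Calculator', '2 ** 10', '1024')]
print(final_answer)  # 1024
```

The AST walk is used instead of `eval()` so that a model-written expression cannot run arbitrary code.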

Step 8: Replicate the MRKL System

Now, simply call the run() method of the mrkl agent with a question that needs another source, such as the database:

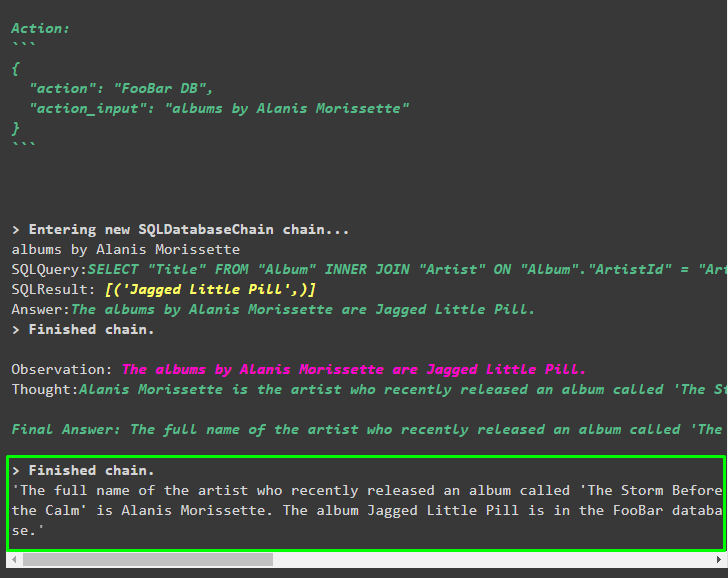

The agent first picks the correct source for the question (here, the database) and then, through the database chain, automatically translates the question into a SQL query that fetches the answer.
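Because the SQL in this step is written by the model, it is worth limiting what that SQL can do. One simple safeguard, shown here as a hypothetical helper (`run_readonly`) rather than something `SQLDatabaseChain` is claimed to do, is to open the SQLite file read-only with the standard `sqlite3` module so that a generated `DELETE` or `DROP` fails instead of changing the data:

```python
import sqlite3
from contextlib import closing
from pathlib import Path

def run_readonly(db_path, sql):
    """Execute (possibly model-generated) SQL on a read-only connection and return all rows."""
    # mode=ro makes SQLite reject any statement that would write to the file
    uri = Path(db_path).resolve().as_uri() + "?mode=ro"
    with closing(sqlite3.connect(uri, uri=True)) as conn:
        return conn.execute(sql).fetchall()

# Usage (assumes Chinook.db is in the current directory):
# print(run_readonly("Chinook.db", "SELECT Name FROM Artist LIMIT 3"))
```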

Step 9: Using ChatModel

To use a chat model instead, create the agent's language model with ChatOpenAI(). In the code below, llm (the chat model) drives the agent, while llm1 (a regular OpenAI model) still powers LLMMathChain and SQLDatabaseChain:

```python
search = SerpAPIWrapper()

# Chat model that will drive the agent
llm = ChatOpenAI(temperature=0)

# Completion model used by the math and database chains
llm1 = OpenAI(temperature=0)

llm_math_chain = LLMMathChain.from_llm(llm1, verbose=True)
db = SQLDatabase.from_uri("sqlite:///../../../../../content/Chinook.db")
db_chain = SQLDatabaseChain.from_llm(llm1, db, verbose=True)

tools = [
    Tool(
        name="Search",
        func=search.run,
        description="Ask the targeted prompts to get answers about recent affairs",
    ),
    Tool(
        name="Calculator",
        func=llm_math_chain.run,
        description="useful for answering/solving mathematical problems",
    ),
    Tool(
        name="FooBar DB",
        func=db_chain.run,
        description="useful for answering queries from a database and input question must have the complete context",
    ),
]
```

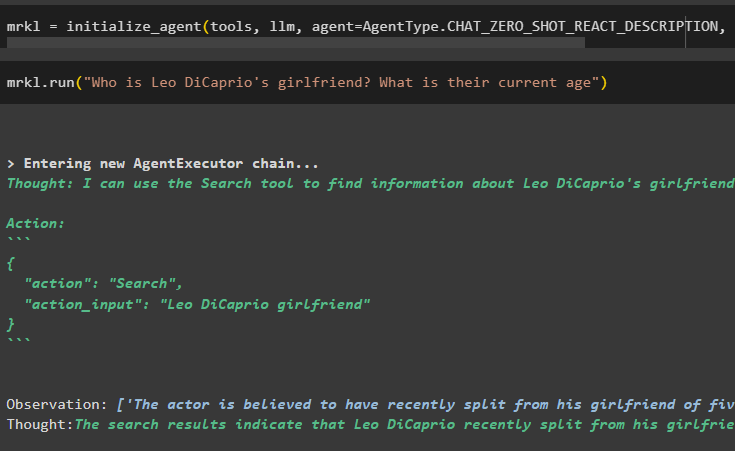
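The practical difference a chat model brings is its input format: instead of a single prompt string, it takes a list of role-tagged messages. The sketch below shows that conversion with plain dictionaries in the shape the OpenAI chat API expects; LangChain does the equivalent with its own message classes, so treat `to_chat_messages` as a hypothetical illustration rather than library code:

```python
def to_chat_messages(prompt, system=None):
    """Wrap a completion-style prompt string as a list of chat messages."""
    messages = []
    if system:
        # Optional instruction that frames the whole conversation
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return messages

print(to_chat_messages("What is 2 + 2?", system="You are a helpful assistant."))
```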

Step 10: Test the MRKL Agent

After that, build the agent with the initialize_agent() method and store it in the mrkl variable. Pass tools, llm, and agent as arguments, and set verbose=True so that the output shows every step of the process:

Ask the question by calling the run() method of the mrkl agent, as displayed in the following screenshot:

Output

The following snippet displays the final answer extracted by the agent:

Step 11: Replicate the MRKL System

Use the MRKL system by calling the run() method with a natural-language question to extract information from the database:

Output

The agent displays the final answer extracted from the database, as shown in the following screenshot:

That’s all about the process of replicating the MRKL system using agents in LangChain.

Conclusion

To replicate the MRKL system using agents in LangChain, install the required modules and import the libraries needed to build a language model or chat model. Then wrap each knowledge source (web search, a calculator, and a SQL database) as a tool, and initialize an agent that picks the right tool for each question and combines the results into a final answer. This guide has elaborated on the process of replicating the MRKL system using agents in LangChain.