In the sales sector, the Time Series Analysis is the most important factor to analyze the sales day to day. It is necessary to change all the date-time related data to Date/DateTime. Spark is one of the open-source programs which executes and analyzes this in cluster nodes through machine learning/DataScience techniques. In this guide, we will discuss about the to_date() function that is available in the pyspark.sql.functions module which converts the input Date/TimeStamp string type column to date. As part of this tutorial, we will see the different examples of converting the TimeStamp/Date string to date.

Pyspark.sql.functions.to_date()

To_date() is available in pyspark.sql.functions module. It is necessary to import this function from this module. It accepts two parameters. The first parameter is the column which is the date/time stamp format. The second parameter is the format in which the values exist in the column (first parameter). It converts into specified format with date type. It is also possible to use this function in Spark SQL functions. We can use the SELECT command to get the date from the Date/Timestamp string type.

Syntax:

- 1. We can use the select() method to display the columns and specify the function within this method.

- 2. We can use the select() method to display the columns and specify the function within this method.

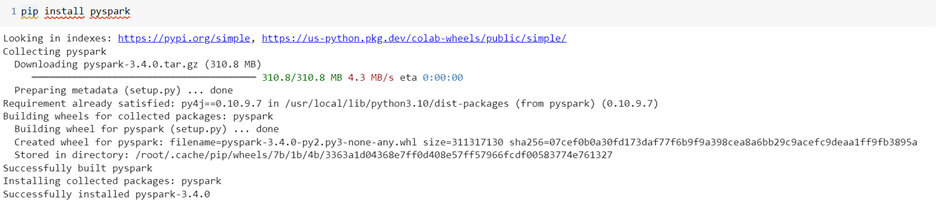

First, install the PySpark module using the pip command.

Example 1: To_Date() with Format

Let’s create a PySpark DataFrame with 1 column named “Actual Input” with 5 records and convert it into the date type with the “dd-MM-yyyy” format. We use the select() method to display the converted date. Here, we store it in the “YEAR-MONTH-DAY” column.

from pyspark.sql import SparkSession

from pyspark.sql.functions import col,to_date

linuxhint_spark_app = SparkSession.builder.appName('Linux Hint').getOrCreate()

first_df =[["25-05-1999"],["04-05-2015"],["01-05-2019"],["23-06-2006"],["01-01-1992"]]

# create the dataframe using the first_df

first_df_obj = linuxhint_spark_app.createDataFrame(first_df,["Actual Input"])

# Actual DataFrame

first_df_obj.show()

# Dataframe with date in the format -> dd-mm-yyyy

first_df_obj.select(col("Actual Input"),to_date(col("Actual Input"),"dd-MM-yyyy").alias("YEAR-MONTH-DAY")).show()

Output:

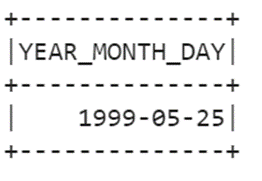

Example 2: Using the SQL To_Date() with Format

Now, we use the Spark SQL query to convert the string date to the “dd-MM-YYYY” format.

from pyspark.sql import SparkSession

from pyspark.sql.functions import col,to_date

linuxhint_spark_app = SparkSession.builder.appName('Linux Hint').getOrCreate()

# to_date() with SQL

linuxhint_spark_app.sql("SELECT to_date('25-05-1999','dd-MM-yyyy') YEAR_MONTH_DAY").show()

Output:

Example 3: Convert the TimeStamp Column to Date

Quickly create a PySpark DataFrame with one column named “Timestamp” with 5 records. Use the to_date() function to extract the date from the timestamp and store all 5 records in the “Extracted Date” column.

from pyspark.sql import SparkSession

from pyspark.sql.functions import col,to_date

linuxhint_spark_app = SparkSession.builder.appName('Linux Hint').getOrCreate()

first_df =[["2023-05-25 02:30:00"],["2021-05-25 02:30:00"],["2020-06-10 02:30:00"],["2021-06-15 02:30:00"],["2012-01-05 02:30:00"]]

# create the dataframe using the first_df

first_df_obj = linuxhint_spark_app.createDataFrame(first_df,["Timestamp"])

# Actual DataFrame

first_df_obj.show(truncate=False)

# Convert the above Timestamp column to Date

first_df_obj.select(to_date(first_df_obj["Timestamp"], 'yyyy-MM-dd HH:mm:ss').alias('Extracted Date')).show()

Output:

Example 4: TimeStamp Column to Date with SQL

Let’s select the date from the TimeStamp in the SELECT query using the Spark SQL function.

linuxhint_spark_app.sql("SELECT to_date('2023-05-25 02:30:00') as Extracted_Date").show(truncate=False)

# Extract Date from the timestamp format - '05-25-1999 12:01:19.000'

linuxhint_spark_app.sql("SELECT to_date('05-25-1999 12:01:19.000','MM-dd-yyyy HH:mm:ss.SSSS') as Extracted_Date").show(truncate=False)

Output:

Conclusion

Now, we are able to understand how to convert the Date column (string type) to date using the to_date() function. Using this, we can also extract the date from the TimeStamp. It is also possible to use this function in the Spark SQL functions. In this scenario, we used the SELECT command to display the extracted date. Unique examples were discussed in this tutorial to understand this function much better.