exp() Function

The exp() function in PySpark returns the exponential value of each number in a DataFrame column. Mathematically, it is defined as e^x, where e is Euler's number (approximately 2.71828).

Here, x is the value in the PySpark DataFrame column.

It is usually used inside the select() method: select() returns a new DataFrame containing the computed column, and show() displays its values.

Syntax

dataframe.select(exp("column"))

where dataframe is the input PySpark DataFrame.

Parameter:

It takes a column, either as a column name string or as a Column object, and returns a new column holding the exponential value of each row.
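Conceptually, exp() is applied element-wise: each row's value x is replaced by e^x, and a null input stays null. The sketch below mimics that behavior in plain Python; it is an illustration, not Spark's implementation. The helper exp_column is hypothetical, and a Python list with None stands in for a column containing a null.

```python
import math
from typing import List, Optional


def exp_column(values: List[Optional[float]]) -> List[Optional[float]]:
    """Hypothetical helper mimicking exp() row by row on a list of values.

    None plays the role of a SQL null: it is passed through unchanged,
    just as Spark's exp() returns null for a null input.
    """
    return [None if v is None else math.exp(v) for v in values]


# A stand-in for a numeric column, including one null
column = [0.0, 1.0, None, -5]
result = exp_column(column)
print(result)
```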

Example 1

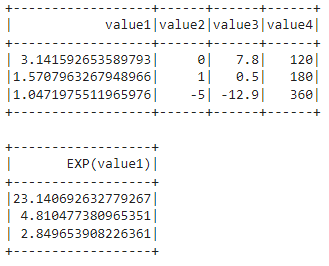

Let’s create a PySpark DataFrame with 3 rows and 4 numeric columns and return the exponential values of the value1 column.

```python
import math

from pyspark.sql import SparkSession
from pyspark.sql.functions import exp

spark_app = SparkSession.builder.appName('_').getOrCreate()

# create math values
values = [(math.pi, 0, 7.8, 120),
          (math.pi/2, 1, 0.5, 180),
          (math.pi/3, -5, -12.9, 360)]

# assign columns by creating the PySpark DataFrame
dataframe_obj = spark_app.createDataFrame(values, ['value1', 'value2', 'value3', 'value4'])
dataframe_obj.show()

# get the exponential values of the value1 column
dataframe_obj.select(exp(dataframe_obj.value1)).show()
```

Output:

For the value1 column, exp() returned the following exponential values:

Exponential value of 3.141592653589793 is 23.140692632779267

Exponential value of 1.5707963267948966 is 4.810477380965351

Exponential value of 1.0471975511965976 is 2.849653908226361
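To sanity-check these numbers without a Spark session, the same inputs can be passed to Python's math.exp. This is only a cross-check, assuming both sides use IEEE 754 double precision: Spark evaluates exp() on the JVM, so the last printed digit can occasionally differ from Python's result, which is why the comparison uses math.isclose rather than ==.

```python
import math

# (input, output) pairs as reported for the value1 column above
reported = [
    (math.pi, 23.140692632779267),
    (math.pi / 2, 4.810477380965351),
    (math.pi / 3, 2.849653908226361),
]

for x, spark_value in reported:
    python_value = math.exp(x)
    # A tight relative tolerance absorbs last-digit differences between the JVM and CPython
    ok = math.isclose(python_value, spark_value, rel_tol=1e-12)
    print(f"exp({x}) = {python_value}  matches reported value: {ok}")
```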

Example 2

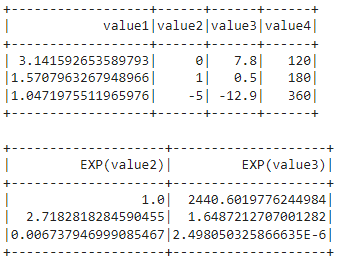

Now, we will return the exponential values of the value2 and value3 columns.

```python
import math

from pyspark.sql import SparkSession
from pyspark.sql.functions import exp

spark_app = SparkSession.builder.appName('_').getOrCreate()

# create math values
values = [(math.pi, 0, 7.8, 120),
          (math.pi/2, 1, 0.5, 180),
          (math.pi/3, -5, -12.9, 360)]

# assign columns by creating the PySpark DataFrame
dataframe_obj = spark_app.createDataFrame(values, ['value1', 'value2', 'value3', 'value4'])
dataframe_obj.show()

# get the exponential values of the value2 and value3 columns
dataframe_obj.select(exp(dataframe_obj.value2), exp(dataframe_obj.value3)).show()
```

Output:

For the value2 column:

Exponential value of 0 is 1.0

Exponential value of 1 is 2.7182818284590455

Exponential value of -5 is 0.006737946999085467

For the value3 column:

Exponential value of 7.8 is 2440.6019776244984

Exponential value of 0.5 is 1.6487212707001282

Exponential value of -12.9 is 2.498050325866635E-6
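Because the natural logarithm undoes the exponential, applying Python's math.log to a reported output recovers the input that produced it. The sketch below, which assumes only the Python standard library, uses that to confirm which row values the outputs above belong to. It also shows that integer inputs such as those in value2 are fine, since the exponential is always returned as a floating-point number.

```python
import math

# Outputs reported above for the value2 and value3 columns
reported_outputs = {
    "value2": [1.0, 2.7182818284590455, 0.006737946999085467],
    "value3": [2440.6019776244984, 1.6487212707001282, 2.498050325866635e-06],
}

# math.log inverts math.exp, so log(output) gives back the original input
recovered = {
    column: [math.log(y) for y in outputs]
    for column, outputs in reported_outputs.items()
}

for column, inputs in recovered.items():
    print(column, [round(x, 6) for x in inputs])
```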

expm1() Function

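The expm1() function in PySpark returns the exponential value minus one of each number in a DataFrame column. Mathematically, it is defined as e^x - 1. For x close to 0, expm1(x) is more accurate than computing exp(x) - 1, because subtracting 1 from a result very close to 1 discards most of its significant digits.

Here, x is the value in the PySpark DataFrame column.

Like exp(), it is usually used inside the select() method: select() returns a new DataFrame containing the computed column, and show() displays its values.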
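The reason for a separate expm1() function is easiest to see with a tiny x. The plain-Python sketch below compares math.exp(x) - 1 with math.expm1(x) for x = 1e-10, using the series approximation x + x**2 / 2 as the reference value. It only illustrates the numerical issue; Spark's expm1() is assumed here to behave like a standard expm1 routine.

```python
import math

x = 1e-10

naive = math.exp(x) - 1   # subtracting 1 from a number very close to 1 loses digits
accurate = math.expm1(x)  # computes e^x - 1 without that cancellation

# For tiny x, e^x - 1 is approximately x + x**2 / 2 (later terms are negligible)
reference = x + x**2 / 2

print(f"exp(x) - 1 = {naive!r}")
print(f"expm1(x)   = {accurate!r}")
print("relative error, exp(x) - 1:", abs(naive - reference) / reference)
print("relative error, expm1(x):  ", abs(accurate - reference) / reference)
```

With this input, the naive version is typically wrong from about the eighth significant digit onward, while expm1 stays accurate to full double precision.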

Syntax

dataframe.select(expm1("column"))

where dataframe is the input PySpark DataFrame.

Parameter:

It takes a column, either as a column name string or as a Column object, and returns a new column holding the exponential value minus 1 of each row.

Example 1

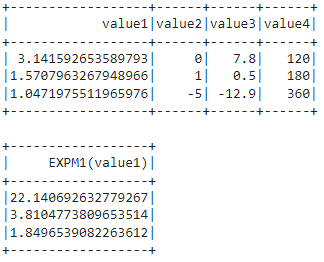

Let’s create a PySpark DataFrame with 3 rows and 4 numeric columns and return the exponential values minus 1 of the value1 column.

```python
import math

from pyspark.sql import SparkSession
from pyspark.sql.functions import expm1

spark_app = SparkSession.builder.appName('_').getOrCreate()

# create math values
values = [(math.pi, 0, 7.8, 120),
          (math.pi/2, 1, 0.5, 180),
          (math.pi/3, -5, -12.9, 360)]

# assign columns by creating the PySpark DataFrame
dataframe_obj = spark_app.createDataFrame(values, ['value1', 'value2', 'value3', 'value4'])
dataframe_obj.show()

# get the exponential values minus 1 of the value1 column
dataframe_obj.select(expm1(dataframe_obj.value1)).show()
```

Output:

For the value1 column, expm1() returned the following exponential values minus 1:

Exponential value minus 1 of 3.141592653589793 is 22.140692632779267

Exponential value minus 1 of 1.5707963267948966 is 3.8104773809653514

Exponential value minus 1 of 1.0471975511965976 is 1.8496539082263612
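For inputs that are not close to 0, as in this example, expm1(x) and exp(x) - 1 agree to within rounding, so each value above should equal the corresponding exp() result for value1 minus 1. The sketch below checks that relation in plain Python against the outputs reported in this tutorial, and also compares them with Python's math.expm1; the tolerance choice is an assumption meant to absorb last-digit differences.

```python
import math

# value1 inputs with the exp() and expm1() outputs reported in this tutorial
rows = [
    (math.pi, 23.140692632779267, 22.140692632779267),
    (math.pi / 2, 4.810477380965351, 3.8104773809653514),
    (math.pi / 3, 2.849653908226361, 1.8496539082263612),
]

for x, exp_value, expm1_value in rows:
    # Away from 0, subtracting 1 from exp(x) loses almost no precision,
    # so both routes should agree to within a tiny relative tolerance
    agrees = math.isclose(exp_value - 1, expm1_value, rel_tol=1e-12)
    matches_python = math.isclose(math.expm1(x), expm1_value, rel_tol=1e-12)
    print(f"x = {x:.6f}: exp(x) - 1 agrees: {agrees}, matches math.expm1: {matches_python}")
```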

Example 2

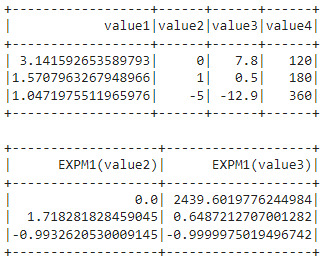

Now, we will return the exponential values minus 1 of the value2 and value3 columns.

```python
import math

from pyspark.sql import SparkSession
from pyspark.sql.functions import expm1

spark_app = SparkSession.builder.appName('_').getOrCreate()

# create math values
values = [(math.pi, 0, 7.8, 120),
          (math.pi/2, 1, 0.5, 180),
          (math.pi/3, -5, -12.9, 360)]

# assign columns by creating the PySpark DataFrame
dataframe_obj = spark_app.createDataFrame(values, ['value1', 'value2', 'value3', 'value4'])
dataframe_obj.show()

# get the exponential values minus 1 of the value2 and value3 columns
dataframe_obj.select(expm1(dataframe_obj.value2), expm1(dataframe_obj.value3)).show()
```

Output:

For the value2 column:

Exponential value minus 1 of 0 is 0.0

Exponential value minus 1 of 1 is 1.718281828459045

Exponential value minus 1 of -5 is -0.9932620530009145

For the value3 column:

Exponential value minus 1 of 7.8 is 2439.6019776244984

Exponential value minus 1 of 0.5 is 0.6487212707001282

Exponential value minus 1 of -12.9 is -0.9999975019496742
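Since e^x is always positive, e^x - 1 is always greater than -1 and approaches -1 as x becomes very negative, which is why the outputs for -5 and -12.9 sit just above -1. Python's math.log1p computes log(1 + y), the inverse of expm1, so it can recover the inputs from these outputs. This is a plain-Python sketch; only the numbers come from the output above.

```python
import math

# expm1() outputs reported above for the negative inputs of value2 and value3
reported = {-5: -0.9932620530009145, -12.9: -0.9999975019496742}

for x, y in reported.items():
    # e^x > 0 for every x, so e^x - 1 must stay above -1
    above_minus_one = y > -1
    # math.log1p(y) computes log(1 + y), undoing expm1
    recovered = math.log1p(y)
    print(f"log1p({y}) = {recovered:.6f} (input was {x}), above -1: {above_minus_one}")
```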

Conclusion

In this PySpark tutorial, we discussed the exp() and expm1() functions. The exp() function returns the exponential value of each number in a DataFrame column; mathematically, it is defined as e^x. The expm1() function returns the exponential value minus one of each number in a DataFrame column; mathematically, it is defined as e^x - 1.