We will discuss a variety of Date functions in PySpark in this tutorial.

- datediff()

- months_between()

- add_months()

- date_add()

- date_sub()

- year()

- month()

- dayofmonth(), dayofweek(), dayofyear()

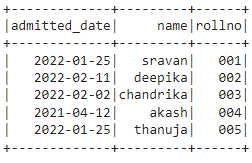

Let’s first create a DataFrame

Example:

Here, we are going to create PySpark dataframe with 5 rows and 3 columns with dates.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 3 attributes

students =[

{'rollno':'001','name':'sravan','admitted_date':'2022-01-25'},

{'rollno':'002','name':'deepika','admitted_date':'2022-02-11'},

{'rollno':'003','name':'chandrika','admitted_date':'2022-02-02'},

{'rollno':'004','name':'akash','admitted_date':'2021-04-12'},

{'rollno':'005','name':'thanuja','admitted_date':'2022-01-25'}

]

# create the dataframe

df = spark_app.createDataFrame( students)

# display dataframe

df.show()

Output:

datediff()

This method is used to return the number of days between the current date and the given date. To use this method, we have to import it from sql.functions. So, we just import all the methods from pyspark.sql.functions.

Syntax:

where:

- column_date1 is first date column

- column_date2 is the second date column subtracted from column_date1.

- alias is used to specify the label for the result column

We can display the resultant DataFrame by passing this method inside select() method.

Syntax:

We can also subtract the date column with current date. We can get the current date using current_date() function.

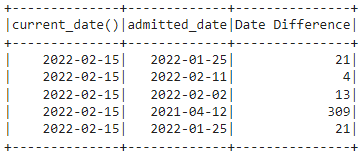

Example:

In this example, we will subtract the admitted_date column from current_date() as Date Difference by selecting current_date() and admitted_date and to display the DataFrame using show() method.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

# import all sql functions

from pyspark.sql.functions import *

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 3 attributes

students =[{'rollno':'001','name':'sravan','admitted_date':'2022-01-25'},

{'rollno':'002','name':'deepika','admitted_date':'2022-02-11'},

{'rollno':'003','name':'chandrika','admitted_date':'2022-02-02'},

{'rollno':'004','name':'akash','admitted_date':'2021-04-12'},

{'rollno':'005','name':'thanuja','admitted_date':'2022-01-25'}

]

# create the dataframe

df = spark_app.createDataFrame( students)

# calculate date difference from the current date - 2022-02-15 with admitted_date

df.select(current_date(),col("admitted_date"),

datediff(current_date(),col("admitted_date")).alias("Date Difference")).show()

Output:

months_between()

This method is used to return the number of months between the current date and given date. To use this method, we have to import it from sql.functions. So, we import all the methods from pyspark.sql.functions.

Syntax:

where:

- column_date1 is first date column

- column_date2 is the second date column subtracted from column_date1.

- alias is used to specify the label for the result column

We can display the resultant DataFrame by passing this method inside select() method.

Syntax:

We can also subtract the date column with current date. We can get the current date using current_date() function.

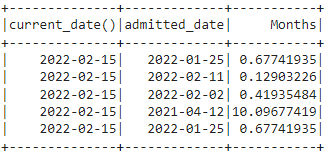

Example:

In this example, we will get the months between the admitted_date column and current_date() as Months by selecting current_date() and admitted_date and to display the DataFrame using show() method.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

# import all sql functions

from pyspark.sql.functions import *

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 3 attributes

students =[{'rollno':'001','name':'sravan','admitted_date':'2022-01-25'},

{'rollno':'002','name':'deepika','admitted_date':'2022-02-11'},

{'rollno':'003','name':'chandrika','admitted_date':'2022-02-02'},

{'rollno':'004','name':'akash','admitted_date':'2021-04-12'},

{'rollno':'005','name':'thanuja','admitted_date':'2022-01-25'}

]

# create the dataframe

df = spark_app.createDataFrame( students)

# Get the months between the current date - 2022-02-15 and admitted_date

df.select(current_date(),col("admitted_date"),

months_between(current_date(),col("admitted_date")).alias("Months")).show()

Output:

add_months()

This method is used to add months to the given date.

Syntax:

where,

- column is the date column

- number_of_months is the total number of months to be added to the column date.

- alias is used to specify the label for the result column.

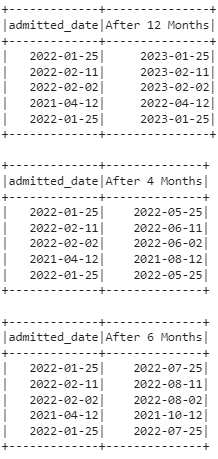

Example:

In this example, we will add months to the admitted_date and display the DataFrame using show() method.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

# import all sql functions

from pyspark.sql.functions import *

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 3 attributes

students =[{'rollno':'001','name':'sravan','admitted_date':'2022-01-25'},

{'rollno':'002','name':'deepika','admitted_date':'2022-02-11'},

{'rollno':'003','name':'chandrika','admitted_date':'2022-02-02'},

{'rollno':'004','name':'akash','admitted_date':'2021-04-12'},

{'rollno':'005','name':'thanuja','admitted_date':'2022-01-25'}

]

# create the dataframe

df = spark_app.createDataFrame( students)

# add 12 months to the admitted_date

df.select(col("admitted_date"),

add_months(col("admitted_date"),12).alias("After 12 Months")).show()

# add 4 months to the admitted_date

df.select(col("admitted_date"),

add_months(col("admitted_date"),4).alias("After 4 Months")).show()

# add 6 months to the admitted_date

df.select(col("admitted_date"),

add_months(col("admitted_date"),6).alias("After 6 Months")).show()

Output:

date_add()

This method is used to add days to the given date.

Syntax:

Where:

- column is the date column

- number_of_days is the total number of days to be added to the column date.

- alias is used to specify the label for the result column.

Example:

In this example, we will add days to the admitted_date and display the DataFrame using show() method.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

# import all sql functions

from pyspark.sql.functions import *

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 3 attributes

students =[{'rollno':'001','name':'sravan','admitted_date':'2022-01-25'},

{'rollno':'002','name':'deepika','admitted_date':'2022-02-11'},

{'rollno':'003','name':'chandrika','admitted_date':'2022-02-02'},

{'rollno':'004','name':'akash','admitted_date':'2021-04-12'},

{'rollno':'005','name':'thanuja','admitted_date':'2022-01-25'}

]

# create the dataframe

df = spark_app.createDataFrame( students)

# add 12 Days to the admitted_date

df.select(col("admitted_date"),

date_add(col("admitted_date"),12).alias("After 12 Days")).show()

# add 4 Days to the admitted_date

df.select(col("admitted_date"),

date_add(col("admitted_date"),4).alias("After 4 Days")).show()

# add 6 Days to the admitted_date

df.select(col("admitted_date"),

date_add(col("admitted_date"),6).alias("After 6 Days")).show()

Output:

date_sub()

This method is used to subtract days to the given date.

Syntax:

Where:

- column is the date column

- number_of_days is the total number of days to be subtracted from the column date.

- alias is used to specify the label for the result column.

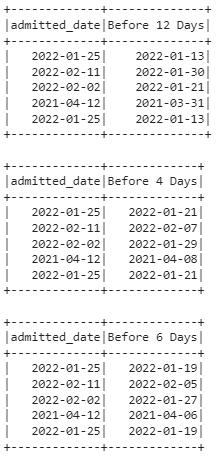

Example:

In this example, we will subtract days from the admitted_date and display the DataFrame using the show() method.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

# import all sql functions

from pyspark.sql.functions import *

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 3 attributes

students =[{'rollno':'001','name':'sravan','admitted_date':'2022-01-25'},

{'rollno':'002','name':'deepika','admitted_date':'2022-02-11'},

{'rollno':'003','name':'chandrika','admitted_date':'2022-02-02'},

{'rollno':'004','name':'akash','admitted_date':'2021-04-12'},

{'rollno':'005','name':'thanuja','admitted_date':'2022-01-25'}

]

# create the dataframe

df = spark_app.createDataFrame( students)

# subtract 12 Days from the admitted_date

df.select(col("admitted_date"),

date_sub(col("admitted_date"),12).alias("Before 12 Days")).show()

# subtract 4 Days from the admitted_date

df.select(col("admitted_date"),

date_sub(col("admitted_date"),4).alias("Before 4 Days")).show()

# subtract 6 Days from the admitted_date

df.select(col("admitted_date"),

date_sub(col("admitted_date"),6).alias("Before 6 Days")).show()

Output:

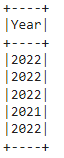

year()

year() is used to return the year from the given date.

Syntax:

Where:

- column is the date column

- alias is used to specify the label for the result column.

Example:

In this example, we will extract year from the admitted_date and display the Year column using the show() method.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

# import all sql functions

from pyspark.sql.functions import *

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 3 attributes

students =[{'rollno':'001','name':'sravan','admitted_date':'2022-01-25'},

{'rollno':'002','name':'deepika','admitted_date':'2022-02-11'},

{'rollno':'003','name':'chandrika','admitted_date':'2022-02-02'},

{'rollno':'004','name':'akash','admitted_date':'2021-04-12'},

{'rollno':'005','name':'thanuja','admitted_date':'2022-01-25'}

]

# create the dataframe

df = spark_app.createDataFrame( students)

# select year from admitted_date column

df.select(year(col("admitted_date")).alias("Year")).show()

Output:

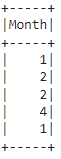

month()

month() is used to return the month from the given date.

Syntax:

Where:

- column is the date column

- alias is used to specify the label for the result column.

Example:

In this example, we will extract month from the admitted_date and display the Month column using the show() method.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

# import all sql functions

from pyspark.sql.functions import *

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 3 attributes

students =[{'rollno':'001','name':'sravan','admitted_date':'2022-01-25'},

{'rollno':'002','name':'deepika','admitted_date':'2022-02-11'},

{'rollno':'003','name':'chandrika','admitted_date':'2022-02-02'},

{'rollno':'004','name':'akash','admitted_date':'2021-04-12'},

{'rollno':'005','name':'thanuja','admitted_date':'2022-01-25'}

]

# create the dataframe

df = spark_app.createDataFrame( students)

# select month from admitted_date column

df.select(month(col("admitted_date")).alias("Month")).show()

Output:

dayofmonth(), dayofweek(), dayofyear()

dayofmonth() is used to return the day of the month from the given date.

dayofweek() is used to return the day of the week from the given date.

dayofyear() is used to return the day of the year from the given date.

Syntax:

dataframe.select(dayofweek(col("column")).alias("result_column"))

dataframe.select(dayofyear(col("column")).alias("result_column"))

where:

- column is the date column

- alias is used to specify the label for the result column.

Example:

In this example, we will extract day of week, month, and year from the admitted_date and display using show() method.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

# import all sql functions

from pyspark.sql.functions import *

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 3 attributes

students =[{'rollno':'001','name':'sravan','admitted_date':'2022-01-25'},

{'rollno':'002','name':'deepika','admitted_date':'2022-02-11'},

{'rollno':'003','name':'chandrika','admitted_date':'2022-02-02'},

{'rollno':'004','name':'akash','admitted_date':'2021-04-12'},

{'rollno':'005','name':'thanuja','admitted_date':'2022-01-25'}

]

# create the dataframe

df = spark_app.createDataFrame( students)

# select day of week from admitted_date column

df.select(dayofweek(col("admitted_date")).alias("Day of week")).show()

# select day of month from admitted_date column

df.select(dayofmonth(col("admitted_date")).alias("Day of Month")).show()

# select day of year from admitted_date column

df.select(dayofyear(col("admitted_date")).alias("Day of Year")).show()

Output:

Conclusion

From this article, we learned how to use date functions on PySpark DataFrame. These are very useful in DateAPI Applications. It is also on how to add days and columns to the existing date.